Gian Antonio Susto

Function Based Isolation Forest (FuBIF): A Unifying Framework for Interpretable Isolation-Based Anomaly Detection

Nov 08, 2025

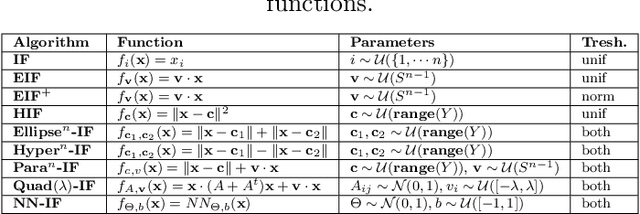

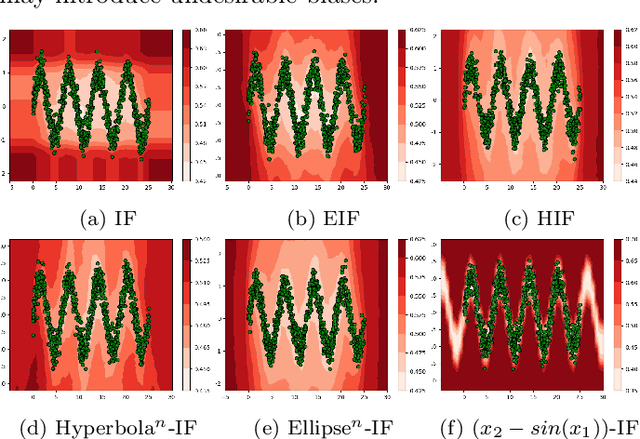

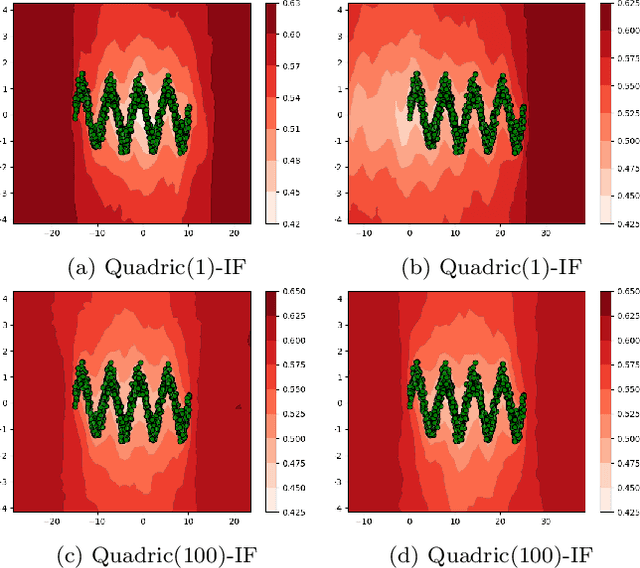

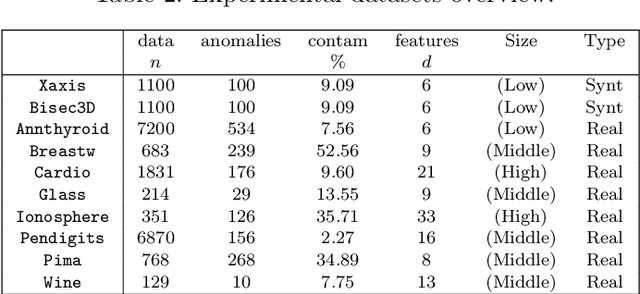

Abstract: Anomaly Detection (AD) is evolving through algorithms capable of identifying outliers in complex datasets. The Isolation Forest (IF), a pivotal AD technique, exhibits limited adaptability and certain biases. This paper introduces the Function-based Isolation Forest (FuBIF), a generalization of IF that enables the use of real-valued functions for dataset branching, significantly enhancing the flexibility of evaluation tree construction. Complementing this, the FuBIF Feature Importance (FuBIFFI) algorithm extends interpretability in IF-based approaches by providing feature importance scores across possible FuBIF models. This paper details the operational framework of FuBIF, evaluates its performance against established methods, and explores its theoretical contributions. An open-source implementation is provided to encourage further research and ensure reproducibility.
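The abstract's central idea, splitting on an arbitrary real-valued function of the data instead of a single coordinate, can be illustrated with a minimal sketch. It assumes NumPy and the usual Isolation Forest ingredients: random subsamples of size psi, a depth limit of ceil(log2 psi), the normalizer c(n), and the score 2^(-E[h(x)]/c(psi)). The three function families and every name in the code (`sample_axis`, `sample_oblique`, `sample_radial`, `FunctionIsolationForest`) are hypothetical illustrations, not the branching functions or the API of the FuBIF paper's open-source implementation, and FuBIFFI is not covered.

```python
import numpy as np


def c(n):
    """Expected path length of an unsuccessful BST search over n points (IF normalizer)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (np.log(n - 1) + np.euler_gamma) - 2.0 * (n - 1) / n


# Hypothetical branching-function families: each returns f mapping (m, d) -> (m,).
def sample_axis(X, rng):
    j = rng.integers(X.shape[1])  # standard IF: project onto one coordinate
    return lambda Z: Z[:, j]


def sample_oblique(X, rng):
    w = rng.normal(size=X.shape[1])  # random hyperplane direction
    return lambda Z: Z @ w


def sample_radial(X, rng):
    center = X[rng.integers(len(X))]  # distance to a random training point
    return lambda Z: np.linalg.norm(Z - center, axis=1)


def build_tree(X, depth, max_depth, sample_fn, rng):
    n = len(X)
    if depth >= max_depth or n <= 1:
        return {"size": n}
    f = sample_fn(X, rng)
    values = f(X)
    lo, hi = values.min(), values.max()
    if lo == hi:  # f cannot separate these points
        return {"size": n}
    t = rng.uniform(lo, hi)  # t in [lo, hi), so both children are non-empty
    left = values <= t
    return {
        "f": f,
        "t": t,
        "left": build_tree(X[left], depth + 1, max_depth, sample_fn, rng),
        "right": build_tree(X[~left], depth + 1, max_depth, sample_fn, rng),
    }


def path_length(x, node, depth=0):
    if "f" not in node:  # leaf: add the expected depth of the unbuilt subtree
        return depth + c(node["size"])
    side = "left" if node["f"](x[None, :])[0] <= node["t"] else "right"
    return path_length(x, node[side], depth + 1)


class FunctionIsolationForest:
    def __init__(self, sample_fn, n_trees=100, sample_size=256, seed=0):
        self.sample_fn = sample_fn
        self.n_trees = n_trees
        self.sample_size = sample_size
        self.seed = seed

    def fit(self, X):
        rng = np.random.default_rng(self.seed)
        self.psi = min(self.sample_size, len(X))
        max_depth = int(np.ceil(np.log2(self.psi)))
        self.trees = []
        for _ in range(self.n_trees):
            idx = rng.choice(len(X), size=self.psi, replace=False)
            self.trees.append(build_tree(X[idx], 0, max_depth, self.sample_fn, rng))
        return self

    def score(self, X):
        """Anomaly score 2^(-E[h(x)] / c(psi)); values near 1 indicate anomalies."""
        depths = np.array([[path_length(x, tree) for tree in self.trees] for x in X])
        return 2.0 ** (-depths.mean(axis=1) / c(self.psi))


# Usage: 300 Gaussian inliers plus one far-away point at index 300.
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(size=(300, 2)), [[6.0, 6.0]]])
for name, fn in [("axis", sample_axis), ("oblique", sample_oblique), ("radial", sample_radial)]:
    scores = FunctionIsolationForest(fn, n_trees=50).fit(X).score(X)
    print(name, int(scores.argmax()), round(float(scores[300]), 3))
```

With `sample_axis` the sketch behaves like a standard Isolation Forest; swapping the function family is the only change needed to alter how the trees partition the data, which mirrors the flexibility the abstract describes.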

ProDER: A Continual Learning Approach for Fault Prediction in Evolving Smart Grids

Nov 07, 2025

Abstract: As smart grids evolve to meet growing energy demands and modern operational challenges, the ability to accurately predict faults becomes increasingly critical. However, existing AI-based fault prediction models struggle to ensure reliability in evolving environments where they are required to adapt to new fault types and operational zones. In this paper, we propose a continual learning (CL) framework in the smart grid context to evolve the model together with the environment. We design four realistic evaluation scenarios grounded in class-incremental and domain-incremental learning to emulate evolving grid conditions. We further introduce Prototype-based Dark Experience Replay (ProDER), a unified replay-based approach that integrates prototype-based feature regularization, logit distillation, and a prototype-guided replay memory. ProDER achieves the best performance among tested CL techniques, with only a 0.045 accuracy drop for fault type prediction and 0.015 for fault zone prediction. These results demonstrate the practicality of CL for scalable, real-world fault prediction in smart grids.
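As its name suggests, ProDER extends Dark Experience Replay (DER), whose core idea is to store past inputs together with the logits the model produced for them and to penalize later drift from those logits. The sketch below covers only that DER component, assuming a reservoir-sampled buffer and a mean-squared-error logit-distillation term as in the original DER formulation. The prototype-based regularization and prototype-guided memory that distinguish ProDER are not reproduced, and all names (`der_loss`, `ReservoirBuffer`, `alpha`) are hypothetical.

```python
import numpy as np


def log_softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def der_loss(cur_logits, cur_labels, replay_logits_now, replay_logits_stored, alpha=0.5):
    """Cross-entropy on the current batch plus alpha * MSE between the model's
    current logits on replayed inputs and the logits stored when they were buffered."""
    ce = -log_softmax(cur_logits)[np.arange(len(cur_labels)), cur_labels].mean()
    distill = np.mean((replay_logits_now - replay_logits_stored) ** 2)
    return float(ce + alpha * distill)


class ReservoirBuffer:
    """Fixed-size memory filled by reservoir sampling: every item seen so far
    is retained with equal probability capacity / seen."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []  # (input, logits) pairs
        self.seen = 0
        self.rng = np.random.default_rng(seed)

    def add(self, x, logits):
        if len(self.items) < self.capacity:
            self.items.append((x, logits))
        else:
            j = self.rng.integers(self.seen + 1)  # uniform over 0..seen
            if j < self.capacity:
                self.items[j] = (x, logits)
        self.seen += 1

    def sample(self, k):
        idx = self.rng.choice(len(self.items), size=min(k, len(self.items)), replace=False)
        xs, zs = zip(*(self.items[i] for i in idx))
        return np.stack(xs), np.stack(zs)


# Usage: stream 100 items into a 10-slot buffer, then draw a replay batch.
buf = ReservoirBuffer(capacity=10)
for step in range(100):
    buf.add(np.full(3, step), np.zeros(4))
xs, zs = buf.sample(5)
print(len(buf.items), xs.shape, zs.shape)  # 10 (5, 3) (5, 4)
```

In a real training loop the stored logits would come from the network's forward pass when each sample was buffered, and `der_loss` would be computed in an autodiff framework so that it can be minimized.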

Reinforcement Learning for Durable Algorithmic Recourse

Sep 26, 2025

Abstract: Algorithmic recourse seeks to provide individuals with actionable recommendations that increase their chances of receiving favorable outcomes from automated decision systems (e.g., loan approvals). While prior research has emphasized robustness to model updates, considerably less attention has been given to the temporal dynamics of recourse, particularly in competitive, resource-constrained settings where recommendations shape future applicant pools. In this work, we present a novel time-aware framework for algorithmic recourse that explicitly models how candidate populations adapt in response to recommendations. We also introduce a novel reinforcement learning (RL)-based recourse algorithm that captures the evolving dynamics of the environment to generate recommendations that are both feasible and valid. We design our recommendations to be durable, remaining valid over a predefined time horizon T. This durability allows individuals to reapply with confidence after taking time to implement the suggested changes. Through extensive experiments in complex simulation environments, we show that our approach substantially outperforms existing baselines, offering a better balance between feasibility and long-term validity. Together, these results underscore the importance of incorporating temporal and behavioral dynamics into the design of practical recourse systems.

Multi-layer Abstraction for Nested Generation of Options (MANGO) in Hierarchical Reinforcement Learning

Aug 25, 2025

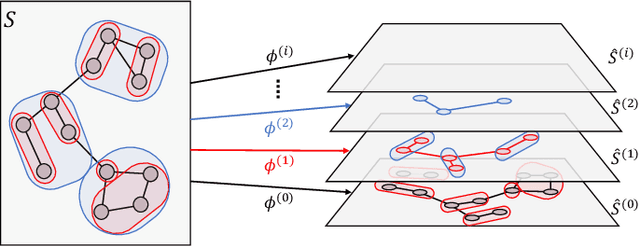

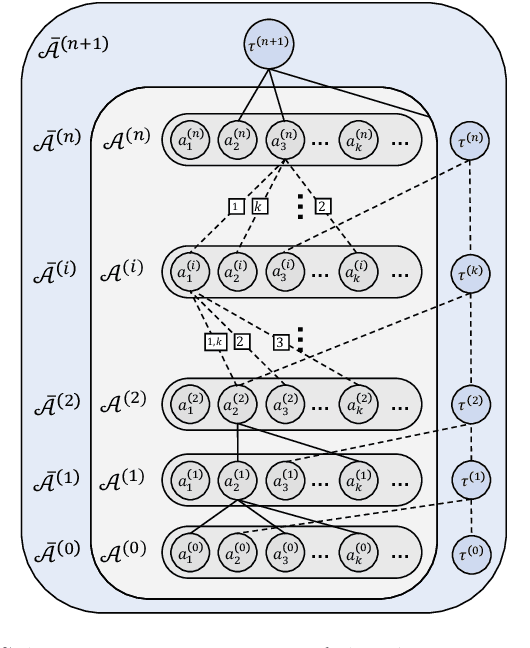

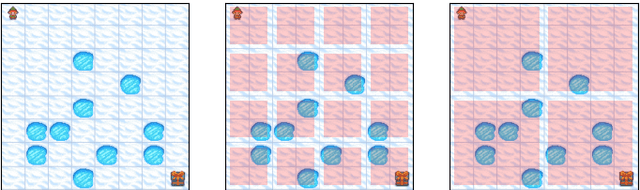

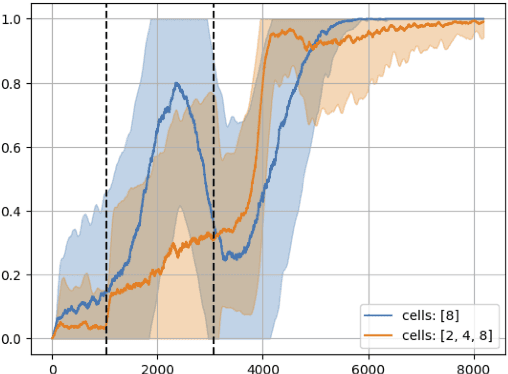

Abstract: This paper introduces MANGO (Multilayer Abstraction for Nested Generation of Options), a novel hierarchical reinforcement learning framework designed to address the challenges of long-horizon, sparse-reward environments. MANGO decomposes complex tasks into multiple layers of abstraction, where each layer defines an abstract state space and employs options to modularize trajectories into macro-actions. These options are nested across layers, allowing efficient reuse of learned movements and improving sample efficiency. The framework introduces intra-layer policies that guide the agent's transitions within the abstract state space, and task actions that integrate task-specific components such as reward functions. Experiments in procedurally generated grid environments demonstrate substantial improvements in both sample efficiency and generalization compared to standard RL methods. MANGO also enhances interpretability by making the agent's decision-making process transparent across layers, which is particularly valuable in safety-critical and industrial applications. Future work will explore automated discovery of abstractions and abstract actions, adaptation to continuous or fuzzy environments, and more robust multi-layer training strategies.

Towards Continual Visual Anomaly Detection in the Medical Domain

Aug 25, 2025

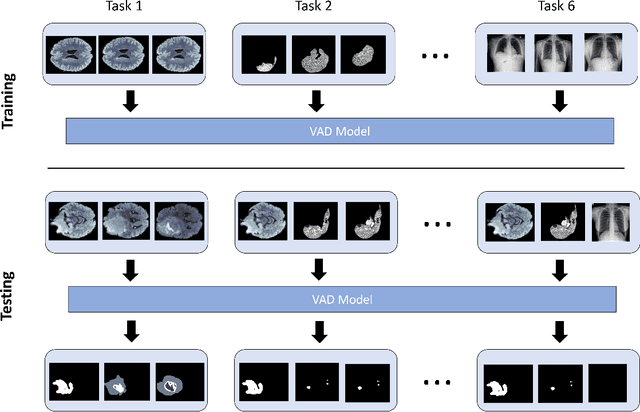

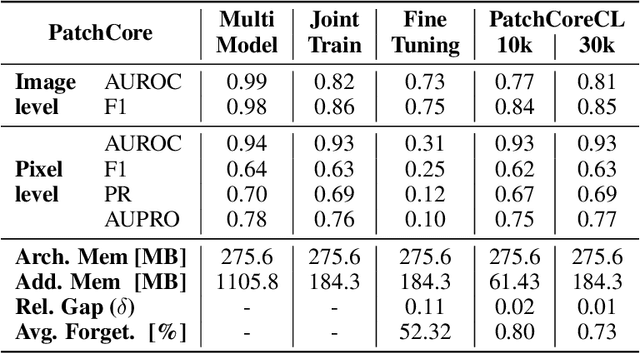

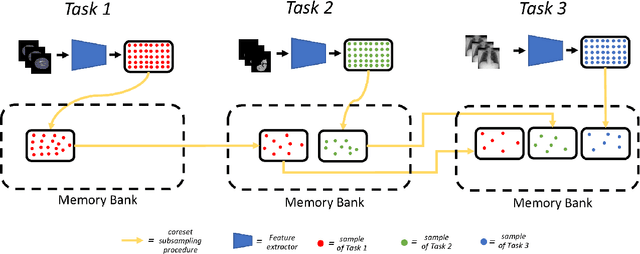

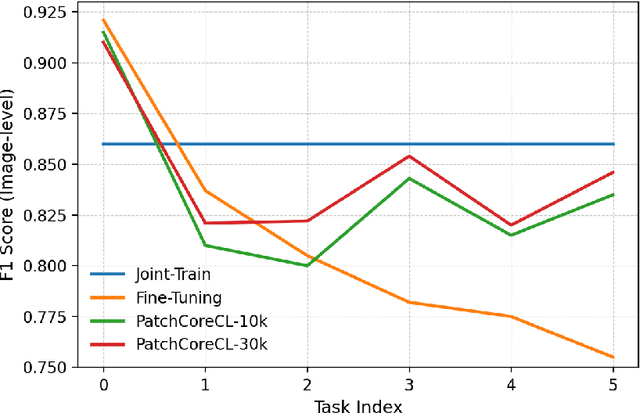

Abstract: Visual Anomaly Detection (VAD) seeks to identify abnormal images and precisely localize the corresponding anomalous regions, relying solely on normal data during training. This approach has proven essential in domains such as manufacturing and, more recently, in the medical field, where accurate and explainable detection is critical. Despite its importance, the impact of input data distributions that evolve over time has received limited attention, even though such shifts can significantly degrade model performance. Given the dynamic nature of medical imaging data, Continual Learning (CL) provides a natural and effective framework for incrementally adapting models while preserving previously acquired knowledge. This study is the first to explore the application of VAD models in a CL scenario for the medical field. We use a CL version of the well-established PatchCore model, called PatchCoreCL, and evaluate its performance on BMAD, a real-world medical imaging dataset with both image-level and pixel-level annotations. Our results show that PatchCoreCL is an effective solution, achieving performance comparable to task-specific models with a forgetting value below 1%, highlighting the feasibility and potential of CL for adaptive VAD in medical imaging.
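Forgetting figures like the one quoted above are typically computed from a matrix of per-task scores recorded after each training stage. The sketch below assumes one common definition (the average drop from a task's best earlier score to its final score, over all tasks but the last); the paper's exact metric and protocol may differ, and the function name `average_forgetting` and the score values are made up for illustration.

```python
import numpy as np


def average_forgetting(acc):
    """acc[i, j] = metric on task j after training on task i (NaN where i < j).

    Forgetting of task j is its best score before the final stage minus its
    final score; the result averages this over all tasks except the last.
    """
    acc = np.asarray(acc, dtype=float)
    T = acc.shape[0]
    if T < 2:
        return 0.0
    drops = [acc[j:T - 1, j].max() - acc[T - 1, j] for j in range(T - 1)]
    return float(np.mean(drops))


# Hypothetical per-task scores for three sequentially learned tasks.
nan = np.nan
acc = [
    [0.90, nan, nan],
    [0.89, 0.85, nan],
    [0.88, 0.84, 0.80],
]
print(round(average_forgetting(acc), 3))  # 0.015
```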

MoViAD: Modular Visual Anomaly Detection

Jul 16, 2025

Abstract: Visual Anomaly Detection (VAD) is a critical field in machine learning focused on identifying deviations from normal patterns in images, often challenged by the scarcity of anomalous data and the need for unsupervised training. To accelerate research and deployment in this domain, we introduce MoViAD, a comprehensive and highly modular library designed to provide fast and easy access to state-of-the-art VAD models, trainers, datasets, and VAD utilities. MoViAD supports a wide array of scenarios, including continual, semi-supervised, few-shot, and noisy settings, among others. It also addresses practical deployment challenges through dedicated Edge and IoT settings, offering optimized models and backbones along with quantization and compression utilities for efficient on-device execution and distributed inference. MoViAD integrates a selection of backbones, robust VAD evaluation metrics (pixel-level and image-level), and profiling tools for efficiency analysis. The library is designed for fast, effortless deployment, enabling machine learning engineers to adapt it to their specific setup with custom models, datasets, and backbones. At the same time, it offers the flexibility and extensibility researchers need to develop and experiment with new methods.

Domain Adaptation for Image Classification of Defects in Semiconductor Manufacturing

Jun 18, 2025

Abstract: In the semiconductor sector, high demand combined with strong and increasing competition makes time to market and quality key factors in securing significant market share across application areas. Driven by the recent success of deep learning in computer vision, Industry 4.0 and 5.0 applications such as defect classification have achieved remarkable results. In particular, Domain Adaptation (DA) has proven highly effective, since it uses the knowledge learned on a (source) domain to perform well on a different but related (target) domain. By improving robustness and scalability, DA minimizes the need for extensive manual re-labeling or re-training of models. This not only reduces computational and resource costs but also allows human experts to focus on high-value tasks. We therefore test the efficacy of DA techniques in semi-supervised and unsupervised settings within the semiconductor field. Moreover, we propose the DBACS approach, a CycleGAN-inspired model enhanced with additional loss terms to improve performance. All approaches are studied and validated on real-world electron microscope images in both the unsupervised and semi-supervised settings, demonstrating the usefulness of our method in advancing DA techniques for the semiconductor field.

Simple and Effective Specialized Representations for Fair Classifiers

May 16, 2025

Abstract: Fair classification is a critical challenge that has gained importance due to international regulations and the growing use of classifiers in high-stakes decision-making. Existing methods often rely on adversarial learning or distribution matching across sensitive groups; however, adversarial learning can be unstable, and distribution matching can be computationally intensive. To address these limitations, we propose a novel approach based on the characteristic function distance. Our method ensures that the learned representation contains minimal sensitive information while remaining highly effective for downstream tasks. By using characteristic functions, we achieve a more stable and efficient solution than traditional methods. Additionally, we introduce a simple relaxation of the objective function that guarantees fairness in common classification models with no performance degradation. Experimental results on benchmark datasets show that our approach consistently matches or exceeds existing methods in both fairness and predictive accuracy. Moreover, our method is robust and computationally efficient, making it a practical solution for real-world applications.
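The abstract does not spell out the exact objective. A common way to compare two distributions through characteristic functions is to evaluate their empirical characteristic functions (ECFs) at random frequencies and average the squared modulus of the difference. The sketch below assumes that form, with Gaussian-sampled frequencies; the names `empirical_cf`, `cf_distance`, `n_freqs`, and `scale` are hypothetical, and the paper's formulation and relaxation may differ.

```python
import numpy as np


def empirical_cf(Z, freqs):
    """Empirical characteristic function of samples Z (n, d) at frequencies freqs (m, d)."""
    return np.exp(1j * (Z @ freqs.T)).mean(axis=0)  # shape (m,)


def cf_distance(Z_a, Z_b, n_freqs=64, scale=1.0, seed=0):
    """Mean squared modulus of the ECF difference over random Gaussian frequencies."""
    rng = np.random.default_rng(seed)
    freqs = rng.normal(scale=scale, size=(n_freqs, Z_a.shape[1]))
    diff = empirical_cf(Z_a, freqs) - empirical_cf(Z_b, freqs)
    return float(np.mean(np.abs(diff) ** 2))


# Toy representations for two sensitive groups.
rng = np.random.default_rng(1)
Z_a = rng.normal(size=(500, 4))
Z_b = rng.normal(size=(500, 4))        # same distribution as Z_a
Z_c = rng.normal(size=(500, 4)) + 2.0  # shifted distribution
print(cf_distance(Z_a, Z_b) < cf_distance(Z_a, Z_c))  # True
```

During training, a term such as `lam * cf_distance(reps[s == 0], reps[s == 1])` would be added to the task loss and minimized with an autodiff framework; `lam`, `reps`, and `s` are hypothetical names for the penalty weight, the learned representations, and the sensitive attribute.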

Towards Scalable IoT Deployment for Visual Anomaly Detection via Efficient Compression

May 15, 2025

Abstract: Visual Anomaly Detection (VAD) is a key task in industrial settings, where minimizing operational costs is essential. Deploying deep learning models in Internet of Things (IoT) environments introduces specific challenges due to the limited computational power and bandwidth of edge devices. This study investigates how to perform VAD effectively under such constraints by leveraging compact, efficient processing strategies. We evaluate several data compression techniques, examining the tradeoff between system latency and detection accuracy. Experiments on the MVTec AD benchmark show that significant compression can be achieved with minimal loss in anomaly detection performance compared to uncompressed data. Results so far show up to an 80% reduction in end-to-end inference time, covering edge processing, transmission, and server computation.

Evaluating Modern Visual Anomaly Detection Approaches in Semiconductor Manufacturing: A Comparative Study

May 12, 2025

Abstract: Semiconductor manufacturing is a complex, multistage process. Automated visual inspection of Scanning Electron Microscope (SEM) images is indispensable for minimizing equipment downtime and containing costs. Most previous research considers supervised approaches, assuming a sufficient number of labeled anomalous samples. In contrast, Visual Anomaly Detection (VAD), an emerging research domain, focuses on unsupervised learning, avoiding the costly defect collection phase while providing explanations of its predictions. We introduce a benchmark for VAD in the semiconductor domain by leveraging the MIIC dataset. Our results demonstrate the efficacy of modern VAD approaches in this field.