Bumkyu Choi

Reinforcement Learning for Robust Athletic Intelligence: Lessons from the 2nd 'AI Olympics with RealAIGym' Competition

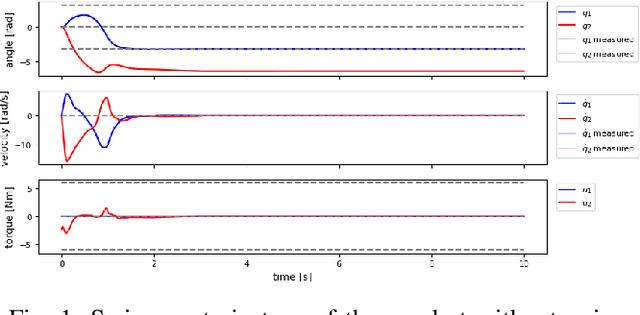

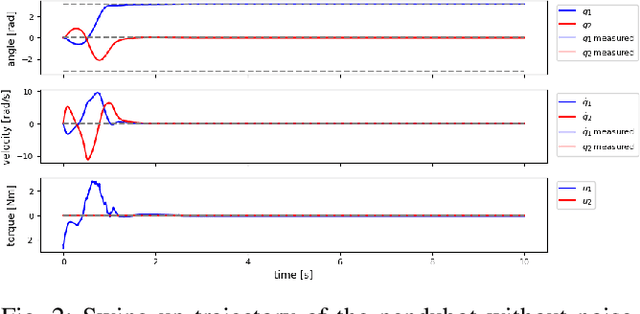

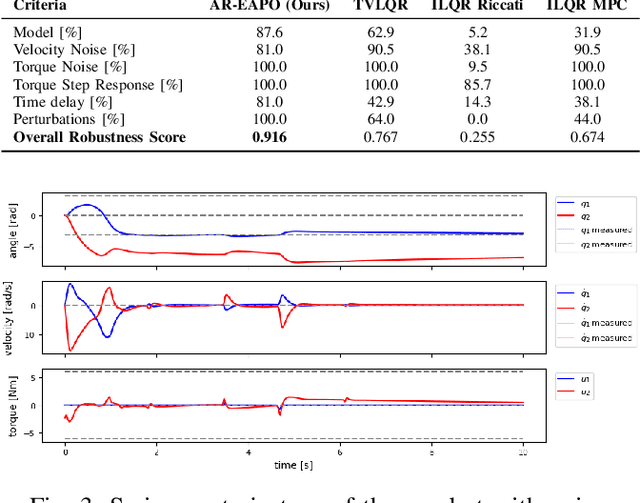

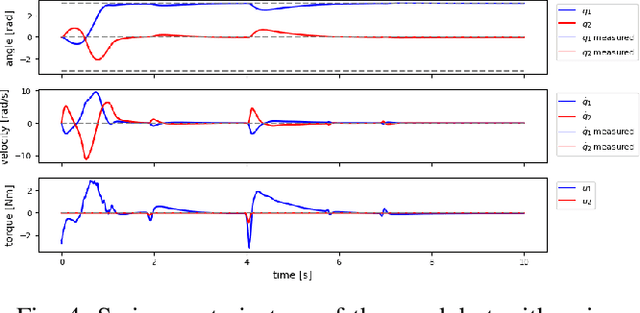

Mar 19, 2025Abstract:In the field of robotics many different approaches ranging from classical planning over optimal control to reinforcement learning (RL) are developed and borrowed from other fields to achieve reliable control in diverse tasks. In order to get a clear understanding of their individual strengths and weaknesses and their applicability in real world robotic scenarios is it important to benchmark and compare their performances not only in a simulation but also on real hardware. The '2nd AI Olympics with RealAIGym' competition was held at the IROS 2024 conference to contribute to this cause and evaluate different controllers according to their ability to solve a dynamic control problem on an underactuated double pendulum system with chaotic dynamics. This paper describes the four different RL methods submitted by the participating teams, presents their performance in the swing-up task on a real double pendulum, measured against various criteria, and discusses their transferability from simulation to real hardware and their robustness to external disturbances.

Average-Reward Maximum Entropy Reinforcement Learning for Underactuated Double Pendulum Tasks

Sep 13, 2024

Abstract:This report presents a solution for the swing-up and stabilisation tasks of the acrobot and the pendubot, developed for the AI Olympics competition at IROS 2024. Our approach employs the Average-Reward Entropy Advantage Policy Optimization (AR-EAPO), a model-free reinforcement learning (RL) algorithm that combines average-reward RL and maximum entropy RL. Results demonstrate that our controller achieves improved performance and robustness scores compared to established baseline methods in both the acrobot and pendubot scenarios, without the need for a heavily engineered reward function or system model. The current results are applicable exclusively to the simulation stage setup.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge