Gabriel Waibel

Scientific Exploration of Challenging Planetary Analog Environments with a Team of Legged Robots

Jul 19, 2023

Abstract:The interest in exploring planetary bodies for scientific investigation and in-situ resource utilization is ever-rising. Yet, many sites of interest are inaccessible to state-of-the-art planetary exploration robots because of the robots' inability to traverse steep slopes, unstructured terrain, and loose soil. Additionally, current single-robot approaches only allow a limited exploration speed and a single set of skills. Here, we present a team of legged robots with complementary skills for exploration missions in challenging planetary analog environments. We equipped the robots with an efficient locomotion controller, a mapping pipeline for online and post-mission visualization, instance segmentation to highlight scientific targets, and scientific instruments for remote and in-situ investigation. Furthermore, we integrated a robotic arm on one of the robots to enable high-precision measurements. Legged robots can swiftly navigate representative terrains, such as granular slopes beyond 25 degrees, loose soil, and unstructured terrain, highlighting their advantages compared to wheeled rover systems. We successfully verified the approach in analog deployments at the BeyondGravity ExoMars rover testbed, in a quarry in Switzerland, and at the Space Resources Challenge in Luxembourg. Our results show that a team of legged robots with advanced locomotion, perception, and measurement skills, as well as task-level autonomy, can conduct successful, effective missions in a short time. Our approach enables the scientific exploration of planetary target sites that are currently out of human and robotic reach.

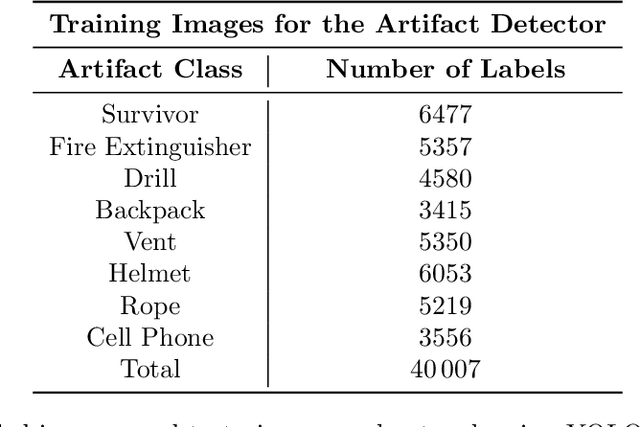

LiDAR-guided object search and detection in Subterranean Environments

Oct 26, 2022

Abstract:Detecting objects of interest, such as human survivors, safety equipment, and structure access points, is critical to any search-and-rescue operation. Robots deployed for such time-sensitive efforts rely on their onboard sensors to perform their designated tasks. However, as disaster response operations are predominantly conducted under perceptually degraded conditions, commonly utilized sensors such as visual cameras and LiDARs suffer in terms of performance degradation. In response, this work presents a method that utilizes the complementary nature of vision and depth sensors to leverage multi-modal information to aid object detection at longer distances. In particular, depth and intensity values from sparse LiDAR returns are used to generate proposals for objects present in the environment. These proposals are then utilized by a Pan-Tilt-Zoom (PTZ) camera system to perform a directed search by adjusting its pose and zoom level for performing object detection and classification in difficult environments. The proposed work has been thoroughly verified using an ANYmal quadruped robot in underground settings and on datasets collected during the DARPA Subterranean Challenge finals.

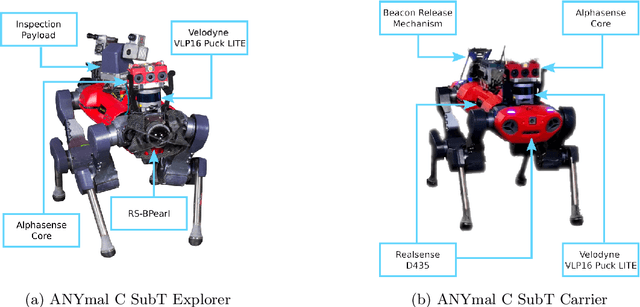

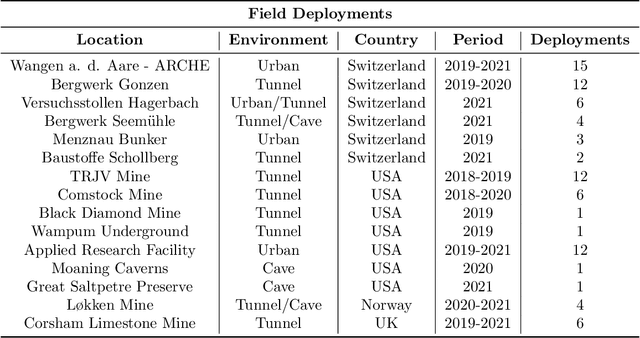

Team CERBERUS Wins the DARPA Subterranean Challenge: Technical Overview and Lessons Learned

Jul 11, 2022

Abstract:This article presents the CERBERUS robotic system-of-systems, which won the DARPA Subterranean Challenge Final Event in 2021. The Subterranean Challenge was organized by DARPA with the vision to facilitate the novel technologies necessary to reliably explore diverse underground environments despite the grueling challenges they present for robotic autonomy. Due to their geometric complexity, degraded perceptual conditions combined with lack of GPS support, austere navigation conditions, and denied communications, subterranean settings render autonomous operations particularly demanding. In response to this challenge, we developed the CERBERUS system which exploits the synergy of legged and flying robots, coupled with robust control especially for overcoming perilous terrain, multi-modal and multi-robot perception for localization and mapping in conditions of sensor degradation, and resilient autonomy through unified exploration path planning and local motion planning that reflects robot-specific limitations. Based on its ability to explore diverse underground environments and its high-level command and control by a single human supervisor, CERBERUS demonstrated efficient exploration, reliable detection of objects of interest, and accurate mapping. In this article, we report results from both the preliminary runs and the final Prize Round of the DARPA Subterranean Challenge, and discuss highlights and challenges faced, alongside lessons learned for the benefit of the community.

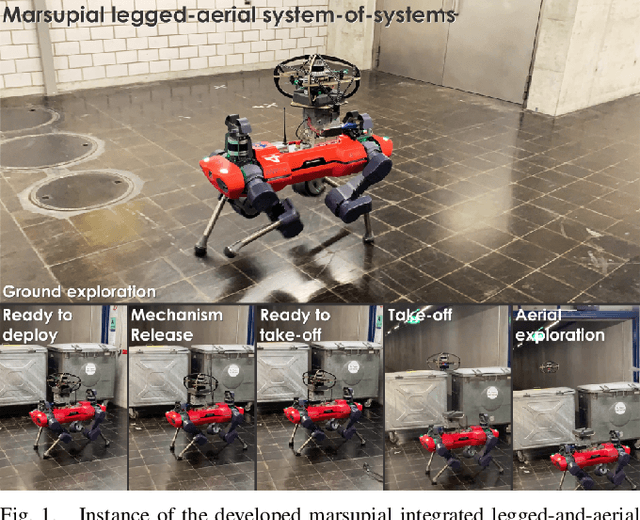

Marsupial Walking-and-Flying Robotic Deployment for Collaborative Exploration of Unknown Environments

May 11, 2022

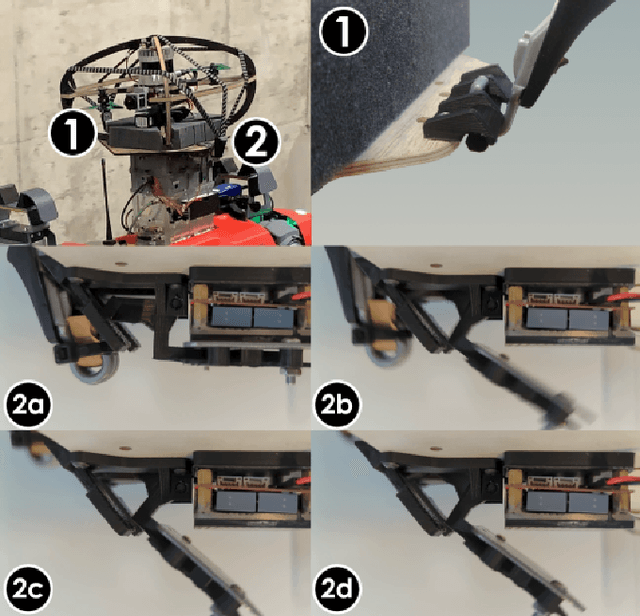

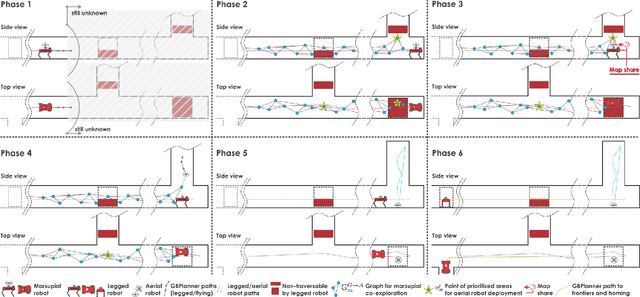

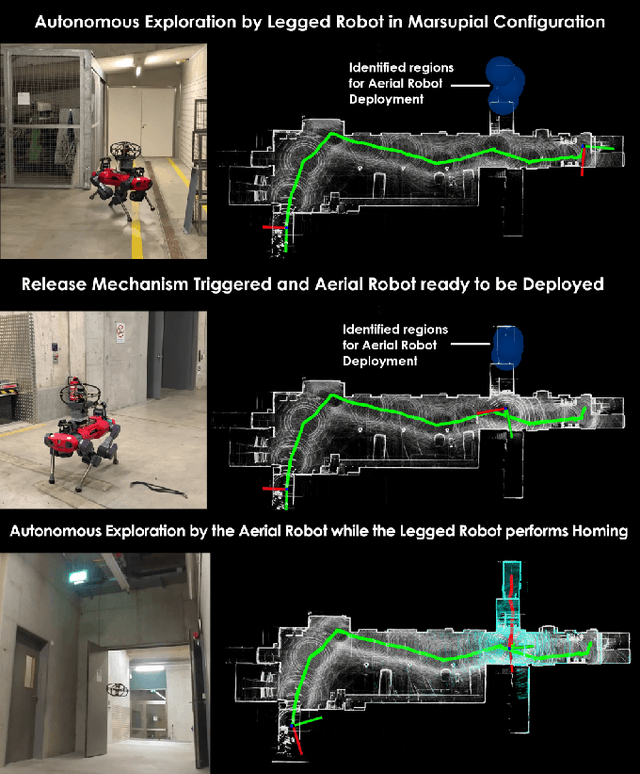

Abstract:This work contributes a marsupial robotic system-of-systems involving a legged and an aerial robot capable of collaborative mapping and exploration path planning that exploits the heterogeneous properties of the two systems and the ability to selectively deploy the aerial system from the ground robot. Exploiting the dexterous locomotion capabilities and long endurance of quadruped robots, the marsupial combination can explore within large-scale and confined environments involving rough terrain. However, as certain types of terrain or vertical geometries can render any ground system unable to continue its exploration, the marsupial system can - when needed - deploy the flying robot which, by exploiting its 3D navigation capabilities, can undertake a focused exploration task within its endurance limitations. Focusing on autonomy, the two systems can co-localize and map together by sharing LiDAR-based maps and plan exploration paths individually, while a tailored graph search onboard the legged robot allows it to identify where and when the ferried aerial platform should be deployed. The system is verified within multiple experimental studies demonstrating the expanded exploration capabilities of the marsupial system-of-systems and facilitating the exploration of otherwise individually unreachable areas.
