Ruyi Zhou

PegasusFlow: Parallel Rolling-Denoising Score Sampling for Robot Diffusion Planner Flow Matching

Sep 10, 2025

Abstract: Diffusion models offer powerful generative capabilities for robot trajectory planning, yet their practical deployment on robots is hindered by a critical bottleneck: a reliance on imitation learning from expert demonstrations. This paradigm is often impractical for specialized robots, for which data is scarce, and it creates an inefficient, theoretically suboptimal training pipeline. To overcome this, we introduce PegasusFlow, a hierarchical rolling-denoising framework that enables direct and parallel sampling of trajectory score gradients from environmental interaction, completely bypassing the need for expert data. Our core innovation is a novel sampling algorithm, Weighted Basis Function Optimization (WBFO), which leverages spline basis representations to achieve superior sample efficiency and faster convergence than traditional methods such as MPPI. The framework is embedded within a scalable, asynchronous parallel simulation architecture that supports massively parallel rollouts for efficient data collection. Extensive experiments on trajectory optimization and robotic navigation tasks demonstrate that our approach, particularly Action-Value WBFO (AVWBFO) combined with a reinforcement learning warm-start, significantly outperforms baselines. In a challenging barrier-crossing task, our method achieved a 100% success rate and was 18% faster than the next-best method, validating its effectiveness for complex-terrain locomotion planning.

https://masteryip.github.io/pegasusflow.github.io/
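
The abstract contrasts WBFO with MPPI but does not spell out its update rule. The sketch below assumes only the general idea it names: sample perturbations in a low-dimensional spline-basis space (here, degree-1 "hat" B-splines over a few control points) and average them with MPPI-style exponentiated-cost weights. It is not the authors' WBFO or AVWBFO; `hat_basis`, `weighted_basis_step`, the sine reference path, and all hyperparameters are hypothetical choices for illustration.

```python
import numpy as np

def hat_basis(num_steps: int, num_ctrl: int) -> np.ndarray:
    """Degree-1 B-spline (hat) basis: row t holds the weight of each control point at step t."""
    s = np.linspace(0.0, num_ctrl - 1, num_steps)[:, None]  # knot coordinate of each step
    k = np.arange(num_ctrl)[None, :]
    return np.clip(1.0 - np.abs(s - k), 0.0, None)          # shape (num_steps, num_ctrl)

def weighted_basis_step(ctrl, basis, cost_fn, rng, num_samples=256, sigma=0.3, temperature=1.0):
    """One MPPI-style update performed on spline control points instead of per-step actions (hypothetical)."""
    eps = rng.normal(0.0, sigma, size=(num_samples, ctrl.size))  # perturb the control points
    trajs = (ctrl + eps) @ basis.T                               # decode every sample to a full trajectory
    costs = np.array([cost_fn(tr) for tr in trajs])
    weights = np.exp(-(costs - costs.min()) / temperature)       # shift by the minimum for numerical stability
    weights /= weights.sum()
    return ctrl + weights @ eps                                  # cost-weighted average perturbation

# Hypothetical task: fit a 1-D reference path with 8 control points instead of 50 free waypoints.
T, K = 50, 8
basis = hat_basis(T, K)
reference = np.sin(np.linspace(0.0, 2.0 * np.pi, T))

def cost(traj):
    return float(np.sum((traj - reference) ** 2))

rng = np.random.default_rng(0)
ctrl = np.zeros(K)
initial_cost = cost(ctrl @ basis.T)
for _ in range(40):
    ctrl = weighted_basis_step(ctrl, basis, cost, rng)
final_cost = cost(ctrl @ basis.T)
print(f"cost: {initial_cost:.2f} -> {final_cost:.2f}")
```

Because each sample perturbs 8 control points rather than 50 waypoints, the search space is much smaller and every sampled trajectory is smooth by construction, which is one plausible reason a basis representation can improve sample efficiency.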

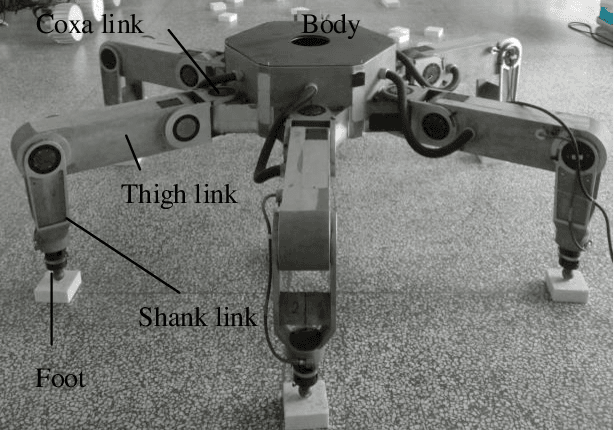

Whole-Body Constrained Learning for Legged Locomotion via Hierarchical Optimization

Jun 05, 2025

Abstract: Reinforcement learning (RL) has demonstrated impressive performance in legged locomotion across various challenging environments. However, because of the sim-to-real gap and a lack of explainability, unconstrained RL policies deployed in the real world still suffer from unavoidable safety issues, such as joint collisions, excessive torque, or foot slippage in low-friction environments. These problems limit their use in missions with strict safety requirements, such as planetary exploration, nuclear facility inspection, and deep-sea operations. In this paper, we design a hierarchical optimization-based whole-body follower that integrates both hard and soft constraints into the RL framework so that the robot moves with better safety guarantees. Leveraging the advantages of model-based control, our approach allows various types of hard and soft constraints to be defined during training or deployment, which enables policy fine-tuning and mitigates the challenges of sim-to-real transfer. At the same time, it preserves the robustness of RL for locomotion in complex, unstructured environments. The policy trained with the introduced constraints was deployed on a hexapod robot and tested in various outdoor environments, including snow-covered slopes and stairs, demonstrating the strong traversability and safety of our approach.
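
The abstract does not give the follower's formulation. As an illustration of how a strict priority between constraint levels can be enforced, the sketch below uses classic null-space projection for two equality-constrained levels: the hard task is solved first, and the soft task (staying close to an RL proposal) is optimized only within the null space of the hard task. A real whole-body follower would likely also need inequality constraints such as torque limits, which typically call for a hierarchical QP instead; `prioritized_solve` and the 3-D example are hypothetical.

```python
import numpy as np

def prioritized_solve(A_hard, b_hard, A_soft, b_soft):
    """Two-level lexicographic least squares: satisfy the hard task, then do the best possible on the soft task."""
    A_hard_pinv = np.linalg.pinv(A_hard)
    x_hard = A_hard_pinv @ b_hard                                # minimum-norm solution of the hard task
    N = np.eye(A_hard.shape[1]) - A_hard_pinv @ A_hard           # projector onto the null space of A_hard
    z = np.linalg.pinv(A_soft @ N) @ (b_soft - A_soft @ x_hard)  # best soft-task correction
    return x_hard + N @ z                                        # correction cannot disturb the hard task

# Hypothetical 3-D command: hard task x0 + x1 = 1, soft task "stay close to the RL proposal".
A_hard = np.array([[1.0, 1.0, 0.0]])
b_hard = np.array([1.0])
rl_proposal = np.array([2.0, 0.0, 3.0])
x = prioritized_solve(A_hard, b_hard, np.eye(3), rl_proposal)
print(x)  # ≈ [1.5, -0.5, 3.0]
```

The result is the point on the plane x0 + x1 = 1 closest to the RL proposal: the hard constraint holds exactly, and the soft objective absorbs all of the compromise.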

Identifying Terrain Physical Parameters from Vision -- Towards Physical-Parameter-Aware Locomotion and Navigation

Aug 29, 2024

Abstract: Identifying the physical properties of the surrounding environment is essential for robotic locomotion and navigation to deal with non-geometric hazards, such as slippery and deformable terrain. It would be of great benefit for robots to anticipate these extreme physical properties before contact; however, estimating environmental physical parameters from vision is still an open challenge. Animals achieve this by drawing on prior experience of what they have seen and how it felt. In this work, we propose a cross-modal self-supervised learning framework for vision-based estimation of environmental physical parameters, which paves the way for future physical-property-aware locomotion and navigation. We bridge the gap between existing policies trained in simulation and the identification of physical terrain parameters from vision. We propose to train a physical decoder in simulation to predict friction and stiffness from multi-modal input. The trained network allows real-world images to be labeled with physical parameters in a self-supervised manner, which in turn trains a visual network during deployment that densely predicts friction and stiffness from image data. We validate our physical decoder in simulation and in the real world using an ANYmal quadruped robot, outperforming an existing baseline method. We show that our visual network can predict physical properties in indoor and outdoor experiments while allowing fast adaptation to new environments.
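
One plausible way to turn decoder predictions into image labels, assumed here rather than taken from the paper, is to project the footholds where the physical decoder produced an estimate into the camera image with a pinhole model and store each estimate at the corresponding pixel, leaving other pixels unlabeled. The function names, the identity camera pose, the intrinsics `K`, and the friction values below are hypothetical.

```python
import numpy as np

def project_points(points_world, T_cam_world, K):
    """Pinhole projection; returns pixel coords (u, v) and a mask of points in front of the camera."""
    pts_h = np.hstack([points_world, np.ones((len(points_world), 1))])  # homogeneous coordinates
    pts_cam = (T_cam_world @ pts_h.T).T[:, :3]                          # world frame -> camera frame
    in_front = pts_cam[:, 2] > 1e-6
    depth = np.where(in_front, pts_cam[:, 2], 1.0)                      # avoid dividing by z <= 0
    uv = (K @ pts_cam.T).T[:, :2] / depth[:, None]
    return uv, in_front

def sparse_friction_labels(footholds_world, friction_pred, T_cam_world, K, height, width):
    """Write each decoder friction estimate into the pixel its foothold projects to; NaN means unlabeled."""
    labels = np.full((height, width), np.nan)
    uv, in_front = project_points(footholds_world, T_cam_world, K)
    for (u, v), valid, mu in zip(uv, in_front, friction_pred):
        col, row = int(round(u)), int(round(v))
        if valid and 0 <= row < height and 0 <= col < width:
            labels[row, col] = mu
    return labels

# Hypothetical setup: camera frame equals world frame (z forward), 64x48 image.
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 24.0], [0.0, 0.0, 1.0]])
footholds = np.array([[0.0, 0.0, 2.0], [0.5, 0.2, 2.0], [0.0, 0.0, -1.0]])  # last one is behind the camera
friction = np.array([0.8, 0.3, 0.5])                                         # hypothetical decoder outputs
labels = sparse_friction_labels(footholds, friction, np.eye(4), K, height=48, width=64)
```

The resulting sparse label image could then supervise a dense visual network with a loss masked to the labeled pixels; how the paper weights, aggregates, or propagates these labels is not stated in the abstract.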

Scientific Exploration of Challenging Planetary Analog Environments with a Team of Legged Robots

Jul 19, 2023

Abstract: Interest in exploring planetary bodies for scientific investigation and in-situ resource utilization is ever rising. Yet many sites of interest are inaccessible to state-of-the-art planetary exploration robots because these robots cannot traverse steep slopes, unstructured terrain, and loose soil. Additionally, current single-robot approaches offer only a limited exploration speed and a single set of skills. Here, we present a team of legged robots with complementary skills for exploration missions in challenging planetary analog environments. We equipped the robots with an efficient locomotion controller, a mapping pipeline for online and post-mission visualization, instance segmentation to highlight scientific targets, and scientific instruments for remote and in-situ investigation. Furthermore, we integrated a robotic arm on one of the robots to enable high-precision measurements. Legged robots can swiftly navigate representative terrains, such as granular slopes beyond 25 degrees, loose soil, and unstructured terrain, highlighting their advantages over wheeled rover systems. We successfully verified the approach in analog deployments at the BeyondGravity ExoMars rover testbed, in a quarry in Switzerland, and at the Space Resources Challenge in Luxembourg. Our results show that a team of legged robots with advanced locomotion, perception, and measurement skills, as well as task-level autonomy, can conduct successful, effective missions in a short time. Our approach enables the scientific exploration of planetary target sites that are currently beyond human and robotic reach.

Predicting Terrain Mechanical Properties in Sight for Planetary Rovers with Semantic Clues

Nov 03, 2020

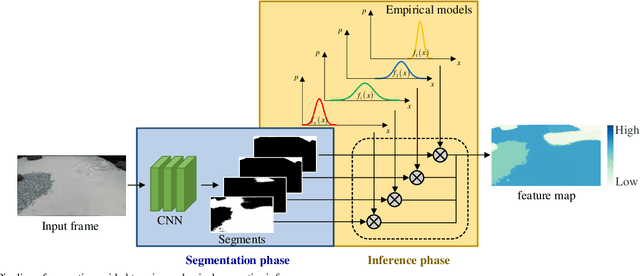

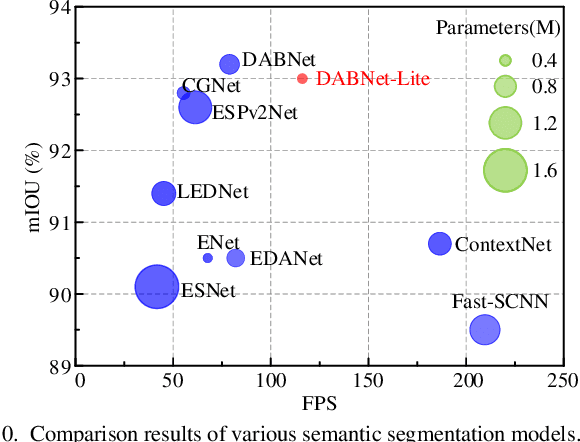

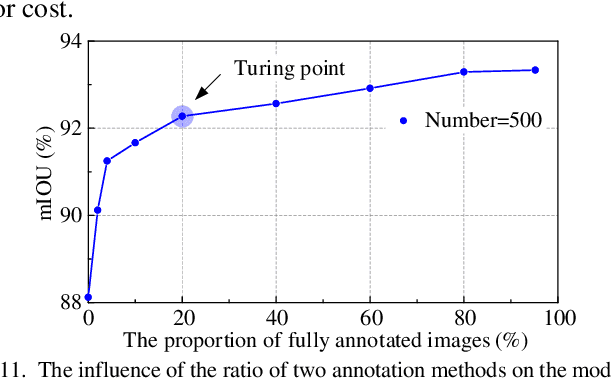

Abstract: Non-geometric mobility hazards such as rover slippage and sinkage, which pose great challenges to costly planetary missions, are closely related to the mechanical properties of terrain. In-situ proprioceptive methods for estimating terrain mechanical properties require the rover to experience varying degrees of slip and sinkage, and they cannot be applied to untraversed regions. This paper proposes predicting the mechanical properties of distant terrain from vision, which expands the sensing range to the whole field of view and can partly avert potential slippage and sinkage hazards at the planning stage. A semantic-based method is designed to predict the bearing and shearing properties of terrain in two stages connected by semantic clues. The first, segmentation stage segments terrain with a lightweight network suitable for onboard use, achieving a competitive 93% accuracy and a recall rate above 96%. The second, inference stage predicts terrain properties quantitatively based on human-like inference principles. On several test routes, the predictions have full-scale errors of 12.5% and 10.8%, and they help plan appropriate strategies to avoid non-geometric hazards.
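
The abstract reports full-scale errors without defining them. A common instrumentation convention, assumed here, divides the prediction error by the full-scale range of the quantity: FSE = mean(|y_pred - y_true|) / (y_max - y_min) x 100%. The paper may aggregate differently (for example, using the maximum rather than the mean error), and the values below are hypothetical.

```python
import numpy as np

def full_scale_error(pred, true, range_min, range_max):
    """Mean absolute prediction error as a percentage of the full-scale range (assumed definition)."""
    pred = np.asarray(pred, dtype=float)
    true = np.asarray(true, dtype=float)
    return 100.0 * np.mean(np.abs(pred - true)) / (range_max - range_min)

# Hypothetical predictions and ground truth for a property whose full scale spans 0 to 50 units.
fse = full_scale_error(pred=[12.0, 30.0, 45.0], true=[10.0, 33.0, 44.0], range_min=0.0, range_max=50.0)
print(f"{fse:.1f}%")  # 4.0%
```

Normalizing by the full-scale range rather than by each true value keeps the metric finite for properties near zero and makes errors comparable across quantities with different units.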

Fault Tolerant Free Gait and Footstep Planning for Hexapod Robot Based on Monte-Carlo Tree

Jun 16, 2020

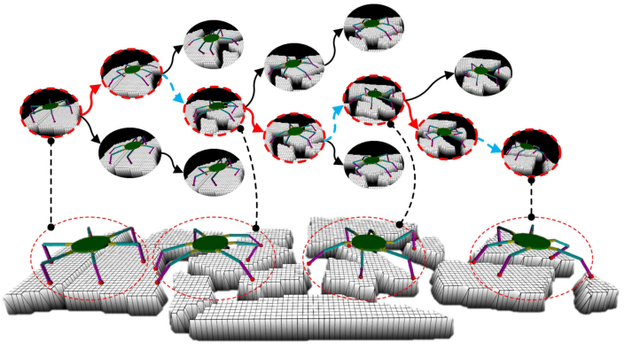

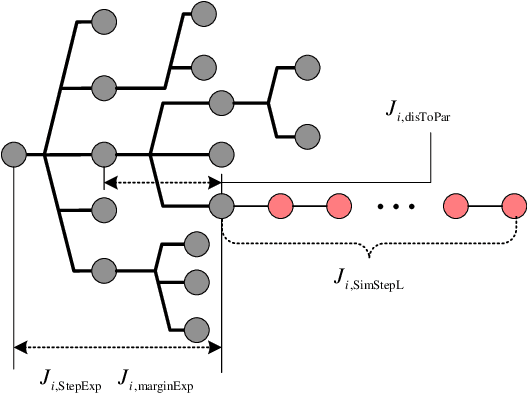

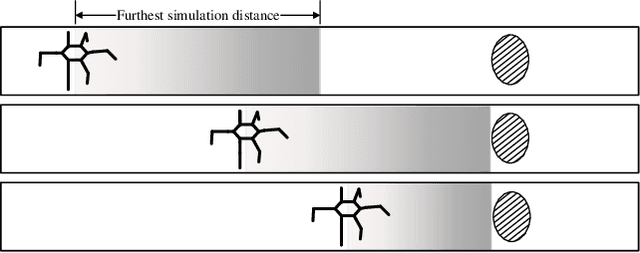

Abstract: Legged robots can pass through complex field environments by carefully selecting gaits and discrete footholds. Traditional methods plan gait and footholds separately and treat each step as an independent single-step optimization. However, this treatment leads to poor passability in environments with sparse footholds. This paper proposes a novel coordinated planning method for hexapod robots that treats gait and foothold planning as a sequence optimization problem and handles the harshness of the environment by modeling it as a leg fault. The Monte Carlo tree search (MCTS) algorithm is used to optimize the entire sequence. Two methods, FastMCTS and SlidingMCTS, are proposed to address shortcomings of standard MCTS when applied to legged robot planning. The proposed planning algorithm incorporates a fault-tolerant gait method to improve passability. Finally, experiments comparing the approach with other planning methods are carried out on terrains with different foothold densities and on artificially designed challenging terrain to verify our methods. All results show that the proposed method dramatically improves the hexapod robot's ability to pass through environments with sparse footholds.
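
The abstract names MCTS as the sequence optimizer but gives no details. The toy below is a generic UCT (UCB1-based MCTS) over a foothold sequence in a hypothetical 1-D stepping-stone world, showing the four standard phases: selection, expansion, random rollout, and backpropagation. It is not FastMCTS or SlidingMCTS, and it models neither gait selection nor leg faults; `FOOTHOLDS`, `GOAL`, `STRIDES`, the reward, and the exploration constant `c=1.4` are assumptions.

```python
import math
import random

# Hypothetical 1-D stepping-stone world: cells with footholds, a goal cell, and allowed stride lengths.
FOOTHOLDS = {0, 1, 3, 4, 7, 8, 10, 12}
GOAL = 12
STRIDES = (1, 2, 3)
MAX_DEPTH = 10

def legal_strides(pos):
    """Strides that land on a foothold without overshooting the goal."""
    return [s for s in STRIDES if pos + s in FOOTHOLDS and pos + s <= GOAL]

class Node:
    def __init__(self, pos, depth, parent=None):
        self.pos, self.depth, self.parent = pos, depth, parent
        self.children = {}                                   # stride -> child Node
        self.untried = [] if pos == GOAL else legal_strides(pos)
        self.visits, self.value = 0, 0.0

def rollout(pos, depth, rng):
    """Random default policy; reaching the goal in fewer steps earns more reward, dead ends earn 0."""
    while pos != GOAL and depth < MAX_DEPTH:
        options = legal_strides(pos)
        if not options:
            return 0.0
        pos += rng.choice(options)
        depth += 1
    return 1.0 - depth / (MAX_DEPTH + 1) if pos == GOAL else 0.0

def uct_child(node, c=1.4):
    """UCB1: mean reward plus an exploration bonus that shrinks as a child is visited more."""
    return max(node.children.values(),
               key=lambda ch: ch.value / ch.visits + c * math.sqrt(math.log(node.visits) / ch.visits))

def mcts(start, iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(start, 0)
    for _ in range(iterations):
        node = root
        while not node.untried and node.children:            # 1. selection
            node = uct_child(node)
        if node.untried and node.depth < MAX_DEPTH:          # 2. expansion
            stride = node.untried.pop()
            node.children[stride] = Node(node.pos + stride, node.depth + 1, node)
            node = node.children[stride]
        reward = rollout(node.pos, node.depth, rng)          # 3. simulation
        while node is not None:                              # 4. backpropagation
            node.visits += 1
            node.value += reward
            node = node.parent
    return root

def best_plan(root):
    """Follow the most-visited child at each level to extract the foothold sequence."""
    plan, node = [], root
    while node.children:
        node = max(node.children.values(), key=lambda ch: ch.visits)
        plan.append(node.pos)
    return plan

plan = best_plan(mcts(start=0))
print(plan)
```

Because the whole sequence is scored only when a rollout ends, a stride that looks locally attractive but leads into a longer route is penalized, which is the advantage of sequence optimization over single-step planning that the abstract describes. In this toy world every shortest plan visits five footholds after the start.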