Zongquan Deng

PegasusFlow: Parallel Rolling-Denoising Score Sampling for Robot Diffusion Planner Flow Matching

Sep 10, 2025

Abstract:Diffusion models offer powerful generative capabilities for robot trajectory planning, yet their practical deployment on robots is hindered by a critical bottleneck: a reliance on imitation learning from expert demonstrations. This paradigm is often impractical for specialized robots where data is scarce and creates an inefficient, theoretically suboptimal training pipeline. To overcome this, we introduce PegasusFlow, a hierarchical rolling-denoising framework that enables direct and parallel sampling of trajectory score gradients from environmental interaction, completely bypassing the need for expert data. Our core innovation is a novel sampling algorithm, Weighted Basis Function Optimization (WBFO), which leverages spline basis representations to achieve superior sample efficiency and faster convergence compared to traditional methods like MPPI. The framework is embedded within a scalable, asynchronous parallel simulation architecture that supports massively parallel rollouts for efficient data collection. Extensive experiments on trajectory optimization and robotic navigation tasks demonstrate that our approach, particularly Action-Value WBFO (AVWBFO) combined with a reinforcement learning warm-start, significantly outperforms baselines. In a challenging barrier-crossing task, our method achieved a 100% success rate and was 18% faster than the next-best method, validating its effectiveness for complex terrain locomotion planning. https://masteryip.github.io/pegasusflow.github.io/

Whole-Body Constrained Learning for Legged Locomotion via Hierarchical Optimization

Jun 05, 2025

Abstract:Reinforcement learning (RL) has demonstrated impressive performance in legged locomotion over various challenging environments. However, due to the sim-to-real gap and lack of explainability, unconstrained RL policies deployed in the real world still suffer from safety issues such as joint collisions, excessive torque, or foot slippage in low-friction environments. These problems limit the use of RL in missions with strict safety requirements, such as planetary exploration, nuclear facility inspection, and deep-sea operations. In this paper, we design a hierarchical optimization-based whole-body follower, which integrates both hard and soft constraints into the RL framework so that the robot moves with better safety guarantees. Leveraging the advantages of model-based control, our approach allows various types of hard and soft constraints to be defined during training or deployment, which enables policy fine-tuning and mitigates the challenges of sim-to-real transfer. Meanwhile, it preserves the robustness of RL when dealing with locomotion in complex unstructured environments. The policy trained with the introduced constraints was deployed on a hexapod robot and tested in various outdoor environments, including snow-covered slopes and stairs, demonstrating the traversability and safety of our approach.

Predicting Terrain Mechanical Properties in Sight for Planetary Rovers with Semantic Clues

Nov 03, 2020

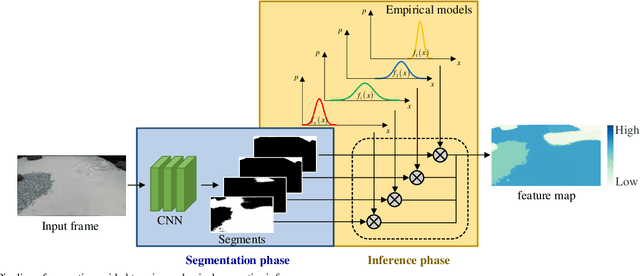

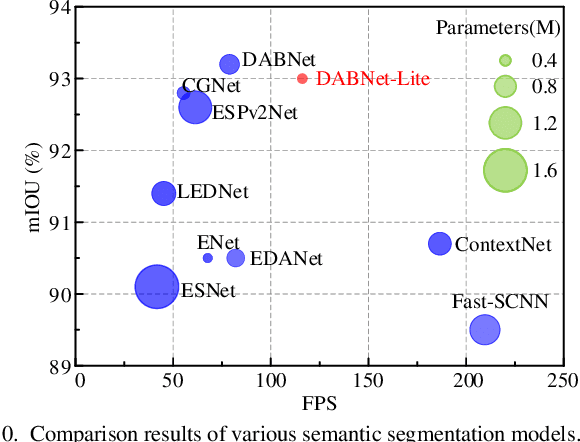

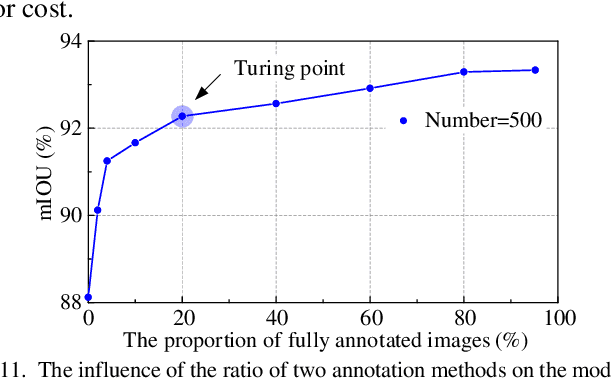

Abstract:Non-geometric mobility hazards such as rover slippage and sinkage, which pose great challenges to costly planetary missions, are closely related to the mechanical properties of terrain. In-situ proprioceptive methods for estimating terrain mechanical properties require the rover to experience different degrees of slip and sinkage, and they cannot cover untraversed regions. This paper proposes to predict terrain mechanical properties at a distance with vision, which expands the sensing range to the whole field of view and can partly avert potential slippage and sinkage hazards at the planning stage. A semantic-based method is designed to predict the bearing and shearing properties of terrain in two stages connected by semantic clues. The first, segmentation stage segments terrain with a lightweight network that is promising for onboard use, with a competitive 93% accuracy and a recall rate above 96%, while the second, inference stage predicts terrain properties quantitatively based on human-like inference principles. Predictions on several test routes have full-scale errors of 12.5% and 10.8% and help to plan appropriate strategies for avoiding non-geometric hazards.

RGBD-based Parameter Extraction for Door Opening Tasks with Human Assists in Nuclear Rescue

Oct 16, 2016

Abstract:The ability to open a door is essential for robots to perform home-service and rescue tasks. A substantial problem is obtaining the necessary parameters, such as the width of the door and the length of the handle. Many researchers use computer vision techniques to extract the parameters automatically, which leads to fine but not very stable results because of the complexity of the environment. We propose a method that uses an RGBD sensor and a GUI in which users 'point' at the target region with a mouse to acquire 3D information. Algorithms that extract the important parameters from the selected points are designed. To avoid the large internal force induced by misalignment between the robot orientation and the normal of the door plane, we design a module that computes the normal of the plane from three non-collinear points selected by pointing and then drives the robot to the desired orientation. We carried out experiments on a real robot. The results show that the designed GUI and algorithms help find the necessary parameters stably and prepare the robot for further operations.
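The plane-normal step mentioned in this abstract can be illustrated with a short sketch. It is not the authors' implementation: the function names `plane_normal` and `misalignment_angle`, the tolerance, and the choice of coordinate frame are assumptions made for illustration. It takes three 3D points (as might be picked through the GUI), forms two edge vectors, and uses their cross product as the door-plane normal; the angle to a hypothetical robot forward axis then indicates how far the robot would need to rotate.

```python
import numpy as np


def plane_normal(p1, p2, p3, tol=1e-9):
    """Unit normal of the plane through three 3D points.

    Raises ValueError if the points are collinear or coincident,
    because the normal is then undefined.
    """
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    n = np.cross(p2 - p1, p3 - p1)  # perpendicular to both edge vectors
    length = np.linalg.norm(n)
    if length < tol:
        raise ValueError("points are collinear or coincident")
    return n / length


def misalignment_angle(normal, forward):
    """Angle in radians between a forward axis and the line of the normal.

    The sign of the normal is ignored, since three points alone do not
    say which side of the door the robot is on (an assumption of this sketch).
    """
    normal = np.asarray(normal, dtype=float)
    forward = np.asarray(forward, dtype=float)
    cos_angle = abs(normal @ forward) / (
        np.linalg.norm(normal) * np.linalg.norm(forward)
    )
    return float(np.arccos(np.clip(cos_angle, 0.0, 1.0)))


if __name__ == "__main__":
    # Three hypothetical points on a door lying in the plane z = 2
    n = plane_normal([0.0, 0.0, 2.0], [1.0, 0.0, 2.0], [0.0, 1.0, 2.0])
    print("normal:", n)
    print("misalignment (rad):", misalignment_angle(n, [0.0, 0.0, 1.0]))
```

With noisy depth data, a least-squares plane fit over more than three points would likely be more robust; the three-point version is shown because it is what the abstract describes.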
