Florian Sauerbeck

FlexMap Fusion: Georeferencing and Automated Conflation of HD Maps with OpenStreetMap

Apr 18, 2024

Abstract:Today's software stacks for autonomous vehicles rely on HD maps to enable sufficient localization, accurate path planning, and reliable motion prediction. Recent developments have resulted in pipelines for the automated generation of HD maps to reduce manual efforts for creating and updating these HD maps. We present FlexMap Fusion, a methodology to automatically update and enhance existing HD vector maps using OpenStreetMap. Our approach is designed to enable the use of HD maps created from LiDAR and camera data within Autoware. The pipeline provides different functionalities: It provides the possibility to georeference both the point cloud map and the vector map using an RTK-corrected GNSS signal. Moreover, missing semantic attributes can be conflated from OpenStreetMap into the vector map. Differences between the HD map and OpenStreetMap are visualized for manual refinement by the user. In general, our findings indicate that our approach leads to reduced human labor during HD map generation, increases the scalability of the mapping pipeline, and improves the completeness and usability of the maps. The methodological choices may have resulted in limitations that arise especially at complex street structures, e.g., traffic islands. Therefore, more research is necessary to create efficient preprocessing algorithms and advancements in the dynamic adjustment of matching parameters. In order to build upon our work, our source code is available at https://github.com/TUMFTM/FlexMap_Fusion.
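The abstract does not say how the georeferencing step is computed. As a rough illustration of the general idea only, the sketch below fits a 2D rigid transform (rotation plus translation) that maps local map coordinates onto matching GNSS positions already projected into a metric frame. The function names `fit_rigid_2d` and `apply_rigid_2d` and the sample points are hypothetical and are not taken from FlexMap Fusion.

```python
import math

def fit_rigid_2d(local_pts, global_pts):
    """Least-squares 2D rotation + translation mapping local_pts onto global_pts.

    Illustrative assumption: both lists hold matching (x, y) pairs in metres.
    """
    n = len(local_pts)
    cxl = sum(p[0] for p in local_pts) / n
    cyl = sum(p[1] for p in local_pts) / n
    cxg = sum(p[0] for p in global_pts) / n
    cyg = sum(p[1] for p in global_pts) / n

    # Accumulate dot and cross products of the centred point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (xl, yl), (xg, yg) in zip(local_pts, global_pts):
        xl, yl, xg, yg = xl - cxl, yl - cyl, xg - cxg, yg - cyg
        s_dot += xl * xg + yl * yg
        s_cross += xl * yg - yl * xg

    theta = math.atan2(s_cross, s_dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    tx = cxg - (c * cxl - s * cyl)      # translation aligning the centroids
    ty = cyg - (s * cxl + c * cyl)
    return theta, tx, ty

def apply_rigid_2d(theta, tx, ty, pt):
    """Transform one local (x, y) point into the global frame."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * pt[0] - s * pt[1] + tx, s * pt[0] + c * pt[1] + ty)

# Hypothetical sample: local trajectory and the same poses in a global frame.
local_traj = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
global_traj = [(10.0, 20.0), (10.0, 21.0), (9.0, 20.0)]
theta, tx, ty = fit_rigid_2d(local_traj, global_traj)
```

Once fitted, the same transform could be applied to every vertex of a vector map or every point of a point cloud map. A real pipeline would also need to handle map projection and local distortions, which this sketch ignores.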

Multi-LiDAR Localization and Mapping Pipeline for Urban Autonomous Driving

Nov 03, 2023

Abstract:Autonomous vehicles require accurate and robust localization and mapping algorithms to navigate safely and reliably in urban environments. We present a novel sensor fusion-based pipeline for offline mapping and online localization based on LiDAR sensors. The proposed approach leverages four LiDAR sensors. Mapping and localization algorithms are based on the KISS-ICP, enabling real-time performance and high accuracy. We introduce an approach to generate semantic maps for driving tasks such as path planning. The presented pipeline is integrated into the ROS 2 based Autoware software stack, providing a robust and flexible environment for autonomous driving applications. We show that our pipeline outperforms state-of-the-art approaches for a given research vehicle and real-world autonomous driving application.

* Accepted and presented at IEEE Sensors Conference 2023

Multi-Modal Sensor Fusion and Object Tracking for Autonomous Racing

Oct 12, 2023

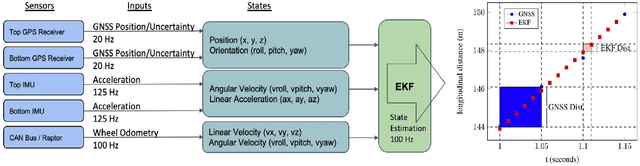

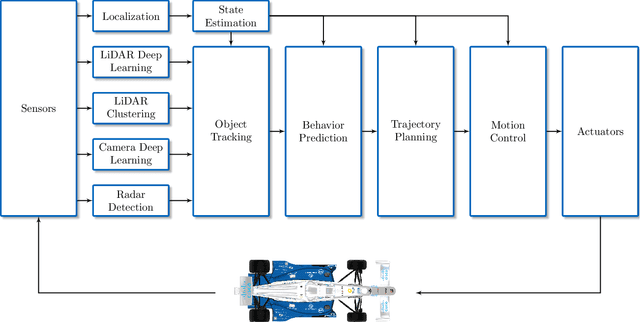

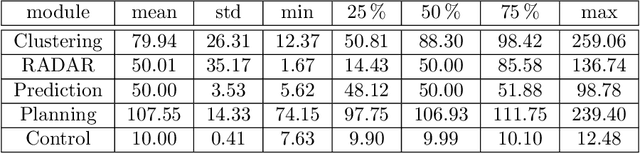

Abstract:Reliable detection and tracking of surrounding objects are indispensable for comprehensive motion prediction and planning of autonomous vehicles. Due to the limitations of individual sensors, the fusion of multiple sensor modalities is required to improve the overall detection capabilities. Additionally, robust motion tracking is essential for reducing the effect of sensor noise and improving state estimation accuracy. The reliability of the autonomous vehicle software becomes even more relevant in complex, adversarial high-speed scenarios at the vehicle handling limits in autonomous racing. In this paper, we present a modular multi-modal sensor fusion and tracking method for high-speed applications. The method is based on the Extended Kalman Filter (EKF) and is capable of fusing heterogeneous detection inputs to track surrounding objects consistently. A novel delay compensation approach reduces the influence of the perception software latency and outputs an updated object list. It is the first fusion and tracking method validated in high-speed real-world scenarios at the Indy Autonomous Challenge 2021 and the Autonomous Challenge at CES (AC@CES) 2022, proving its robustness and computational efficiency on embedded systems. It does not require any labeled data and achieves position tracking residuals below 0.1 m. The related code is available as open-source software at https://github.com/TUMFTM/FusionTracking.
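To make the idea of delay compensation concrete, the following minimal sketch predicts a tracked object forward over the perception latency with a one-dimensional constant-velocity Kalman prediction step. It is a simplified stand-in, not the method from the paper: the `Track` class, the `predict` and `compensate_delay` functions, the noise intensity `q`, and the sample numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Track:
    pos: float   # position along one axis in m
    vel: float   # velocity in m/s
    p_pp: float  # position variance
    p_pv: float  # position-velocity covariance
    p_vv: float  # velocity variance

def predict(track: Track, dt: float, q: float = 0.5) -> Track:
    """Constant-velocity prediction over dt seconds.

    q is an assumed white-noise acceleration intensity (hypothetical value).
    """
    pos = track.pos + track.vel * dt
    # P' = F P F^T + Q with F = [[1, dt], [0, 1]]
    p_pp = track.p_pp + 2.0 * dt * track.p_pv + dt * dt * track.p_vv + q * dt**3 / 3.0
    p_pv = track.p_pv + dt * track.p_vv + q * dt**2 / 2.0
    p_vv = track.p_vv + q * dt
    return Track(pos, track.vel, p_pp, p_pv, p_vv)

def compensate_delay(track: Track, t_measurement: float, t_now: float) -> Track:
    """Propagate a track from the sensor timestamp to the current time."""
    return predict(track, t_now - t_measurement)

# Hypothetical example: an object at 100 m moving at 80 m/s, seen 0.1 s ago.
stale = Track(pos=100.0, vel=80.0, p_pp=0.04, p_pv=0.0, p_vv=1.0)
fresh = compensate_delay(stale, t_measurement=12.3, t_now=12.4)
```

At 80 m/s, a latency of 0.1 s corresponds to roughly 8 m of travel, which is why propagating the estimate to the output time matters at racing speeds. The grown covariance reflects the extra uncertainty introduced by the prediction.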

EDGAR: An Autonomous Driving Research Platform -- From Feature Development to Real-World Application

Sep 27, 2023

Abstract:While current research and development of autonomous driving primarily focuses on developing new features and algorithms, the transfer from isolated software components into an entire software stack has been covered sparsely. Besides that, due to the complexity of autonomous software stacks and public road traffic, the optimal validation of entire stacks is an open research problem. Our paper targets these two aspects. We present our autonomous research vehicle EDGAR and its digital twin, a detailed virtual duplication of the vehicle. While the vehicle's setup is closely related to the state of the art, its virtual duplication is a valuable contribution as it is crucial for a consistent validation process from simulation to real-world tests. In addition, different development teams can work with the same model, making integration and testing of the software stacks much easier, significantly accelerating the development process. The real and virtual vehicles are embedded in a comprehensive development environment, which is also introduced. All parameters of the digital twin are provided open-source at https://github.com/TUMFTM/edgar_digital_twin.

RACECAR -- The Dataset for High-Speed Autonomous Racing

Jun 05, 2023

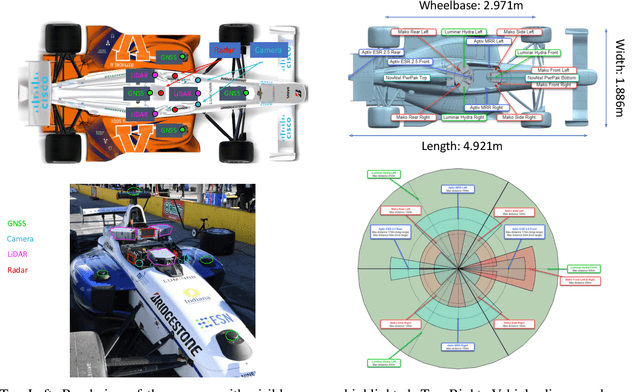

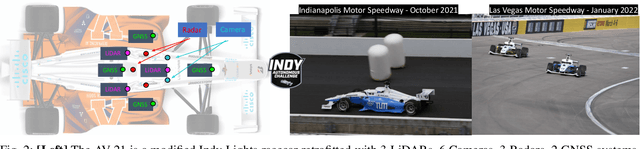

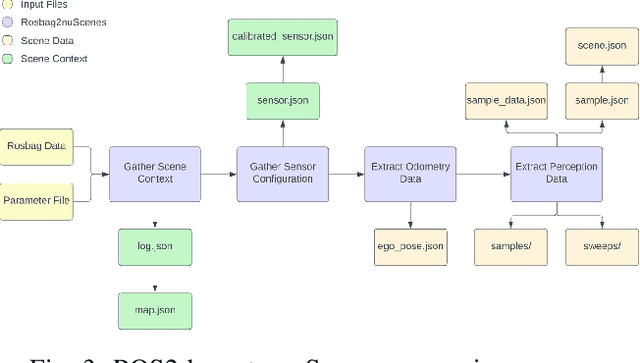

Abstract:This paper describes the first open dataset for full-scale and high-speed autonomous racing. Multi-modal sensor data has been collected from fully autonomous Indy race cars operating at speeds of up to 170 mph (273 kph). Six teams who raced in the Indy Autonomous Challenge have contributed to this dataset. The dataset spans 11 interesting racing scenarios across two race tracks, which include solo laps, multi-agent laps, overtaking situations, high accelerations, banked tracks, obstacle avoidance, and pit entry and exit at different speeds. The dataset contains data from 27 racing sessions across the 11 scenarios with over 6.5 hours of sensor data recorded from the track. The data is organized and released in both ROS2 and nuScenes format. We have also developed the ROS2-to-nuScenes conversion library to achieve this. The RACECAR data is unique because of the high-speed environment of autonomous racing. We present several benchmark problems on localization, object detection and tracking (LiDAR, Radar, and Camera), and mapping using the RACECAR data to explore issues that arise at the limits of operation of the vehicle.

DeepSTEP -- Deep Learning-Based Spatio-Temporal End-To-End Perception for Autonomous Vehicles

May 11, 2023

Abstract:Autonomous vehicles demand high accuracy and robustness of perception algorithms. To develop efficient and scalable perception algorithms, the maximum information should be extracted from the available sensor data. In this work, we present our concept for an end-to-end perception architecture, named DeepSTEP. The deep learning-based architecture processes raw sensor data from the camera, LiDAR, and RaDAR, and combines the extracted data in a deep fusion network. The output of this deep fusion network is a shared feature space, which is used by perception head networks to fulfill several perception tasks, such as object detection or local mapping. DeepSTEP incorporates multiple ideas to advance state of the art: First, combining detection and localization into a single pipeline allows for efficient processing to reduce computational overhead and further improves overall performance. Second, the architecture leverages the temporal domain by using a self-attention mechanism that focuses on the most important features. We believe that our concept of DeepSTEP will advance the development of end-to-end perception systems. The network will be deployed on our research vehicle, which will be used as a platform for data collection, real-world testing, and validation. In conclusion, DeepSTEP represents a significant advancement in the field of perception for autonomous vehicles. The architecture's end-to-end design, time-aware attention mechanism, and integration of multiple perception tasks make it a promising solution for real-world deployment. This research is a work in progress and presents the first concept of establishing a novel perception pipeline.

RGB-L: Enhancing Indirect Visual SLAM using LiDAR-based Dense Depth Maps

Dec 06, 2022

Abstract:In this paper, we present a novel method for integrating 3D LiDAR depth measurements into the existing ORB-SLAM3 by building upon the RGB-D mode. We propose and compare two methods of depth map generation: conventional computer vision methods, namely an inverse dilation operation, and a supervised deep learning-based approach. We integrate the former directly into the ORB-SLAM3 framework by adding a so-called RGB-L (LiDAR) mode that directly reads LiDAR point clouds. The proposed methods are evaluated on the KITTI Odometry dataset and compared to each other and the standard ORB-SLAM3 stereo method. We demonstrate that, depending on the environment, advantages in trajectory accuracy and robustness can be achieved. Furthermore, we demonstrate that the runtime of the ORB-SLAM3 algorithm can be reduced by more than 40 % compared to the stereo mode. The related code for the ORB-SLAM3 RGB-L mode will be available as open-source software under https://github.com/TUMFTM/ORB_SLAM3_RGBL.

Teaching Autonomous Systems Hands-On: Leveraging Modular Small-Scale Hardware in the Robotics Classroom

Sep 21, 2022

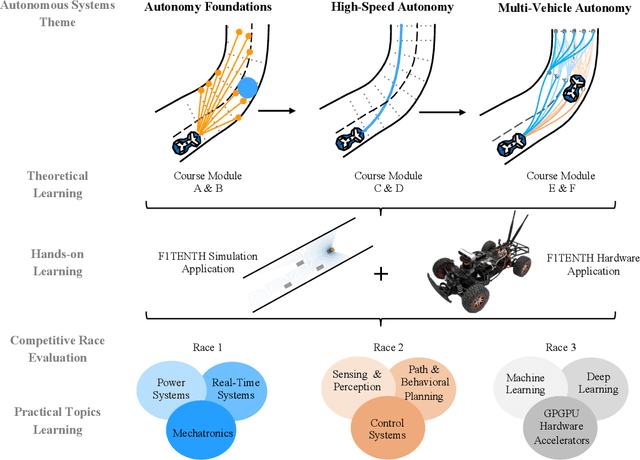

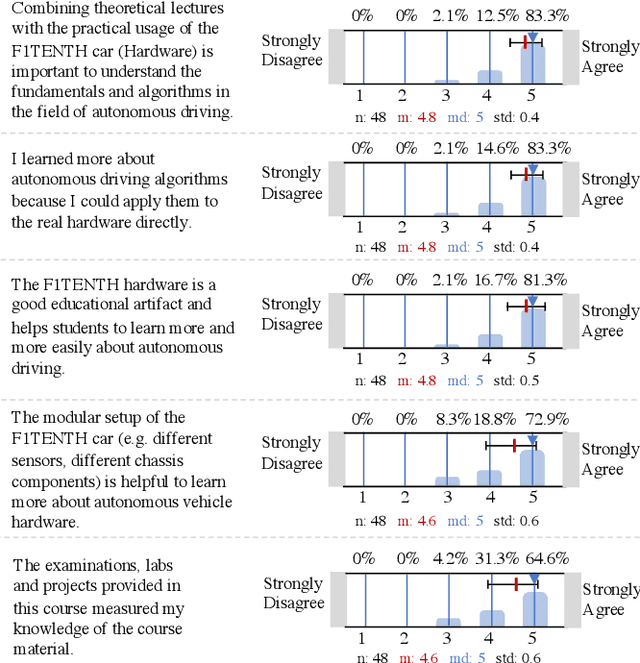

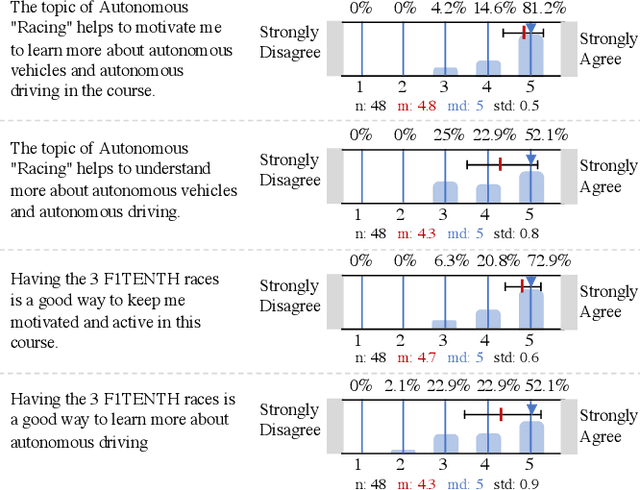

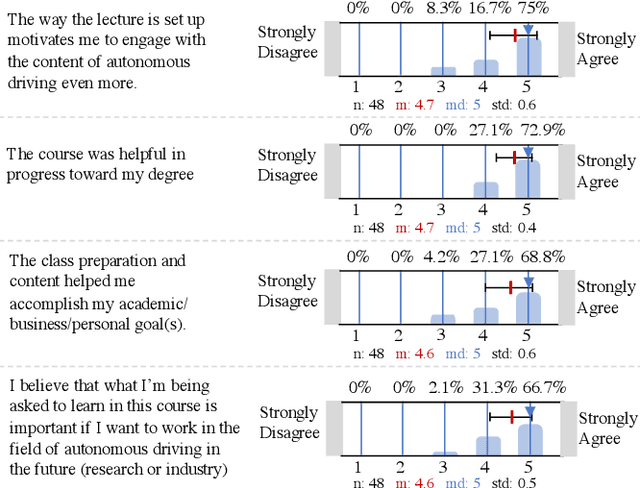

Abstract:Although robotics courses are well established in higher education, the courses often focus on theory and sometimes lack the systematic coverage of the techniques involved in developing, deploying, and applying software to real hardware. Additionally, most hardware platforms for robotics teaching are low-level toys aimed at younger students at middle-school levels. To address this gap, an autonomous vehicle hardware platform, called F1TENTH, is developed for teaching autonomous systems hands-on. This article describes the teaching modules and software stack for teaching at various educational levels with the theme of "racing" and competitions that replace exams. The F1TENTH vehicles offer a modular hardware platform and its related software for teaching the fundamentals of autonomous driving algorithms. From basic reactive methods to advanced planning algorithms, the teaching modules enhance students' computational thinking through autonomous driving with the F1TENTH vehicle. The F1TENTH car fills the gap between research platforms and low-end toy cars and offers hands-on experience in learning the topics in autonomous systems. Four universities have adopted the teaching modules for their semester-long undergraduate and graduate courses for multiple years. Student feedback is used to analyze the effectiveness of the F1TENTH platform. More than 80% of the students strongly agree that the hardware platform and modules greatly motivate their learning, and more than 70% of the students strongly agree that the hardware enhanced their understanding of the subjects. The survey results show that more than 80% of the students strongly agree that the competitions motivate them for the course.

TUM Autonomous Motorsport: An Autonomous Racing Software for the Indy Autonomous Challenge

May 31, 2022

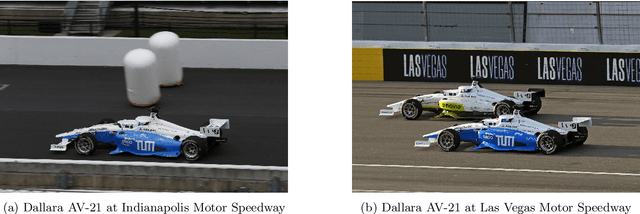

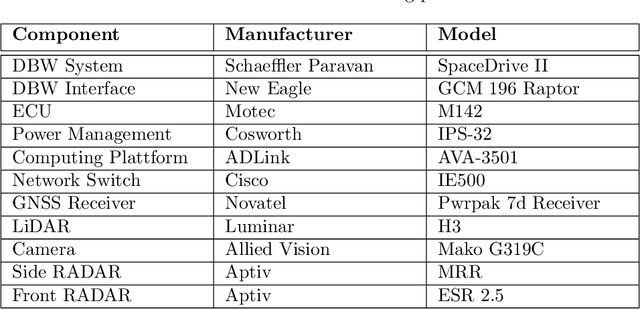

Abstract:For decades, motorsport has been an incubator for innovations in the automotive sector and brought forth systems like disk brakes or rearview mirrors. Autonomous racing series such as Roborace, F1Tenth, or the Indy Autonomous Challenge (IAC) are envisioned as playing a similar role within the autonomous vehicle sector, serving as a proving ground for new technology at the limits of the autonomous systems' capabilities. This paper outlines the software stack and approach of the TUM Autonomous Motorsport team for their participation in the Indy Autonomous Challenge, which holds two competitions: A single-vehicle competition on the Indianapolis Motor Speedway and a passing competition at the Las Vegas Motor Speedway. Nine university teams used an identical vehicle platform: A modified Indy Lights chassis equipped with sensors, a computing platform, and actuators. All the teams developed different algorithms for object detection, localization, planning, prediction, and control of the race cars. The team from TUM placed first in Indianapolis and secured second place in Las Vegas. During the final of the passing competition, the TUM team reached speeds and accelerations close to the limit of the vehicle, peaking at around 270 km/h and 28 m/s². This paper will present details of the vehicle hardware platform, the developed algorithms, and the workflow to test and enhance the software applied during the two-year project. We derive deep insights into the autonomous vehicle's behavior at high speed and high acceleration by providing a detailed competition analysis. Based on this, we deduce a list of lessons learned and provide insights on promising areas of future work based on the real-world evaluation of the displayed concepts.

Indy Autonomous Challenge -- Autonomous Race Cars at the Handling Limits

Feb 08, 2022

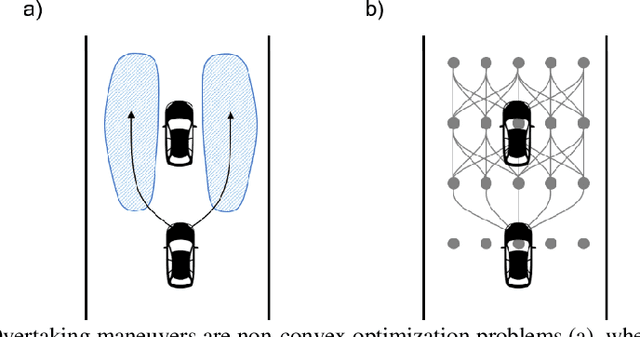

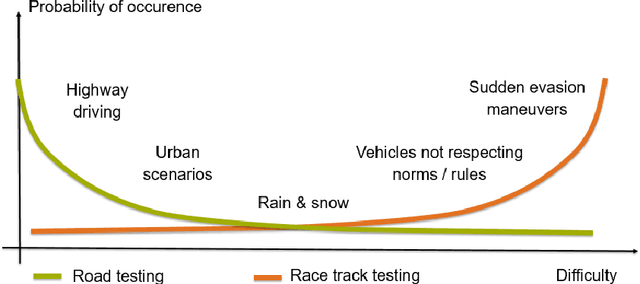

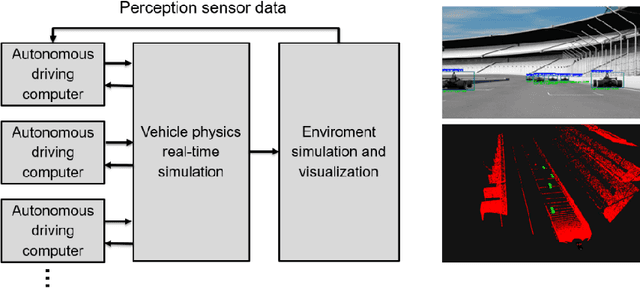

Abstract:Motorsport has always been an enabler for technological advancement, and the same applies to the autonomous driving industry. The team TUM Autonomous Motorsports will participate in the Indy Autonomous Challenge in October 2021 to benchmark its self-driving software stack by racing one out of ten autonomous Dallara AV-21 racecars at the Indianapolis Motor Speedway. The first part of this paper explains the reasons for entering an autonomous vehicle race from an academic perspective: It allows focusing on several edge cases encountered by autonomous vehicles, such as challenging evasion maneuvers and unstructured scenarios. At the same time, it is inherently safe due to the motorsport-related track safety precautions. It is therefore an ideal testing ground for the development of autonomous driving algorithms capable of mastering the most challenging and rare situations. In addition, we provide insight into our software development workflow and present our Hardware-in-the-Loop simulation setup. It is capable of running simulations of up to eight autonomous vehicles in real time. The second part of the paper gives a high-level overview of the software architecture and covers our development priorities in building a high-performance autonomous racing software: maximum sensor detection range, reliable handling of multi-vehicle situations, as well as reliable motion control under uncertainty.
