Sebastian Huch

Multi-Modal Sensor Fusion and Object Tracking for Autonomous Racing

Oct 12, 2023

Abstract:Reliable detection and tracking of surrounding objects are indispensable for comprehensive motion prediction and planning of autonomous vehicles. Due to the limitations of individual sensors, the fusion of multiple sensor modalities is required to improve the overall detection capabilities. Additionally, robust motion tracking is essential for reducing the effect of sensor noise and improving state estimation accuracy. The reliability of the autonomous vehicle software becomes even more relevant in complex, adversarial high-speed scenarios at the vehicle handling limits in autonomous racing. In this paper, we present a modular multi-modal sensor fusion and tracking method for high-speed applications. The method is based on the Extended Kalman Filter (EKF) and is capable of fusing heterogeneous detection inputs to track surrounding objects consistently. A novel delay compensation approach reduces the influence of the perception software latency and outputs an updated object list. It is the first fusion and tracking method validated in high-speed real-world scenarios at the Indy Autonomous Challenge 2021 and the Autonomous Challenge at CES (AC@CES) 2022, proving its robustness and computational efficiency on embedded systems. It does not require any labeled data and achieves position tracking residuals below 0.1 m. The related code is available as open-source software at https://github.com/TUMFTM/FusionTracking.
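To illustrate the idea of filter-based tracking with delay compensation described in this abstract, the following is a minimal sketch and not the method from the paper or the linked repository. It assumes a one-dimensional constant-velocity model, which is linear, so it is a plain Kalman filter rather than an EKF. The names `Track`, `predict`, `update`, and `compensate_delay`, as well as the noise parameters `q` and `r`, are hypothetical. Delay compensation is approximated here by updating the track at the (older) measurement time and then predicting forward by the known perception latency.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """State [position, velocity] with a 2x2 covariance (hypothetical layout)."""
    pos: float
    vel: float
    p00: float = 1.0
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 1.0


def predict(t: Track, dt: float, q: float = 0.1) -> Track:
    """Constant-velocity prediction: x' = F x, P' = F P F^T + Q."""
    a = t.p00 + dt * t.p10  # (F P)[0][0]
    b = t.p01 + dt * t.p11  # (F P)[0][1]
    return Track(
        pos=t.pos + t.vel * dt,
        vel=t.vel,
        p00=a + b * dt + q * dt,  # assumed simple process noise q*dt on the diagonal
        p01=b,
        p10=t.p10 + t.p11 * dt,
        p11=t.p11 + q * dt,
    )


def update(t: Track, z: float, r: float = 0.05) -> Track:
    """Position-only measurement update with H = [1, 0] and variance r."""
    s = t.p00 + r                      # innovation covariance
    k0, k1 = t.p00 / s, t.p10 / s      # Kalman gain
    y = z - t.pos                      # innovation (residual)
    return Track(
        pos=t.pos + k0 * y,
        vel=t.vel + k1 * y,
        p00=(1.0 - k0) * t.p00,
        p01=(1.0 - k0) * t.p01,
        p10=t.p10 - k1 * t.p00,
        p11=t.p11 - k1 * t.p01,
    )


def compensate_delay(t: Track, latency_s: float) -> Track:
    """Predict a track forward by the perception latency (assumed to be known)."""
    return predict(t, latency_s)
```

At racing speeds the effect of latency is large: under these assumptions, an object moving at 50 m/s relative to the sensor shifts by 5 m during a 0.1 s processing delay, which `compensate_delay(Track(pos=0.0, vel=50.0), 0.1)` accounts for in the output position.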

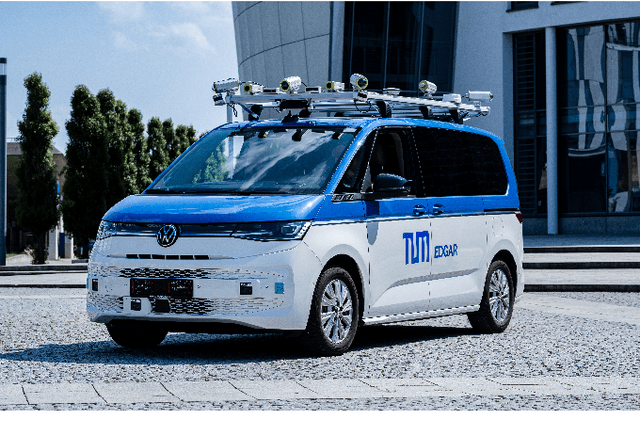

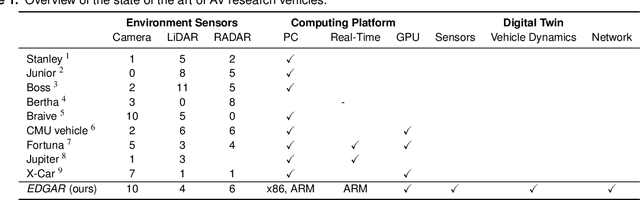

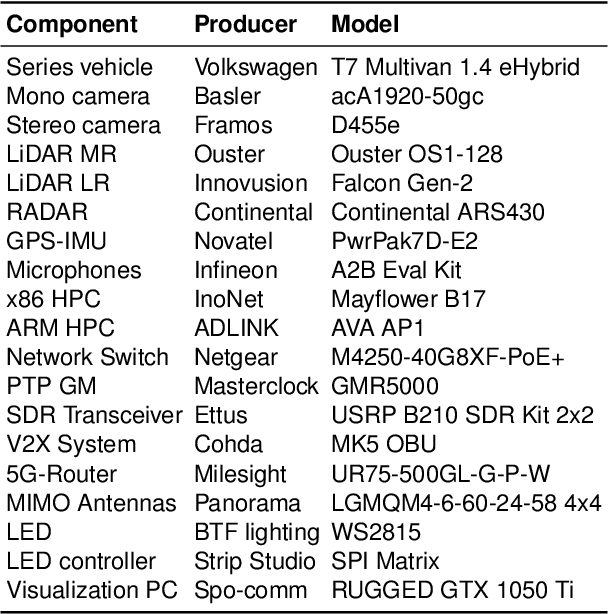

EDGAR: An Autonomous Driving Research Platform -- From Feature Development to Real-World Application

Sep 27, 2023

Abstract:While current research and development of autonomous driving primarily focuses on developing new features and algorithms, the transfer from isolated software components into an entire software stack has been covered sparsely. Besides that, due to the complexity of autonomous software stacks and public road traffic, the optimal validation of entire stacks is an open research problem. Our paper targets these two aspects. We present our autonomous research vehicle EDGAR and its digital twin, a detailed virtual duplication of the vehicle. While the vehicle's setup is closely related to the state of the art, its virtual duplication is a valuable contribution as it is crucial for a consistent validation process from simulation to real-world tests. In addition, different development teams can work with the same model, making integration and testing of the software stacks much easier, significantly accelerating the development process. The real and virtual vehicles are embedded in a comprehensive development environment, which is also introduced. All parameters of the digital twin are provided open-source at https://github.com/TUMFTM/edgar_digital_twin.

DeepSTEP -- Deep Learning-Based Spatio-Temporal End-To-End Perception for Autonomous Vehicles

May 11, 2023

Abstract:Autonomous vehicles demand high accuracy and robustness of perception algorithms. To develop efficient and scalable perception algorithms, the maximum information should be extracted from the available sensor data. In this work, we present our concept for an end-to-end perception architecture, named DeepSTEP. The deep learning-based architecture processes raw sensor data from the camera, LiDAR, and RaDAR, and combines the extracted data in a deep fusion network. The output of this deep fusion network is a shared feature space, which is used by perception head networks to fulfill several perception tasks, such as object detection or local mapping. DeepSTEP incorporates multiple ideas to advance the state of the art: First, combining detection and localization into a single pipeline allows for efficient processing to reduce computational overhead and further improves overall performance. Second, the architecture leverages the temporal domain by using a self-attention mechanism that focuses on the most important features. We believe that our concept of DeepSTEP will advance the development of end-to-end perception systems. The network will be deployed on our research vehicle, which will be used as a platform for data collection, real-world testing, and validation. In conclusion, DeepSTEP represents a significant advancement in the field of perception for autonomous vehicles. The architecture's end-to-end design, time-aware attention mechanism, and integration of multiple perception tasks make it a promising solution for real-world deployment. This research is a work in progress and presents the first concept of establishing a novel perception pipeline.

Quantifying the LiDAR Sim-to-Real Domain Shift: A Detailed Investigation Using Object Detectors and Analyzing Point Clouds at Target-Level

Mar 03, 2023

Abstract:LiDAR object detection algorithms based on neural networks for autonomous driving require large amounts of data for training, validation, and testing. As real-world data collection and labeling are time-consuming and expensive, simulation-based synthetic data generation is a viable alternative. However, using simulated data for the training of neural networks leads to a domain shift of training and testing data due to differences in scenes, scenarios, and distributions. In this work, we quantify the sim-to-real domain shift by means of LiDAR object detectors trained with a new scenario-identical real-world and simulated dataset. In addition, we answer the questions of how well the simulated data resembles the real-world data and how well object detectors trained on simulated data perform on real-world data. Further, we analyze point clouds at the target-level by comparing real-world and simulated point clouds within the 3D bounding boxes of the targets. Our experiments show that a significant sim-to-real domain shift exists even for our scenario-identical datasets. This domain shift amounts to an average precision reduction of around 14 % for object detectors trained with simulated data. Additional experiments reveal that this domain shift can be lowered by introducing a simple noise model in simulation. We further show that a simple downsampling method to model real-world physics does not influence the performance of the object detectors.
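The abstract does not specify which noise model was used. As one possible illustration of what a simple noise model for simulated LiDAR data can look like, the sketch below perturbs each point along its ray from the sensor origin with zero-mean Gaussian range noise. The function name `add_range_noise` and the default standard deviation `sigma_m` are assumptions made for this example and are not taken from the paper.

```python
import math
import random


def add_range_noise(points, sigma_m=0.02, seed=None):
    """Perturb each (x, y, z) point along its ray with Gaussian range noise.

    sigma_m is an assumed standard deviation in meters. The sensor is
    assumed to sit at the origin of the point coordinates.
    """
    rng = random.Random(seed)
    noisy = []
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            # A point at the sensor origin has no ray direction; keep it unchanged.
            noisy.append((x, y, z))
            continue
        # Scale the point so that its range becomes r + noise, direction unchanged.
        scale = (r + rng.gauss(0.0, sigma_m)) / r
        noisy.append((x * scale, y * scale, z * scale))
    return noisy
```

Perturbing only the range keeps the angular pattern of the simulated scan intact, which is one reasonable choice when the aim is to mimic distance measurement noise rather than beam misalignment.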

TUM Autonomous Motorsport: An Autonomous Racing Software for the Indy Autonomous Challenge

May 31, 2022

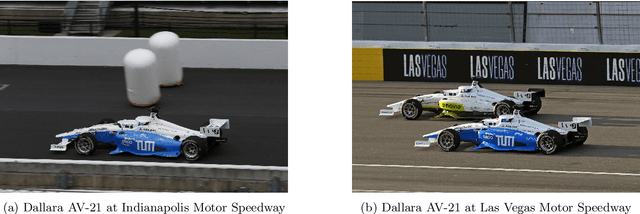

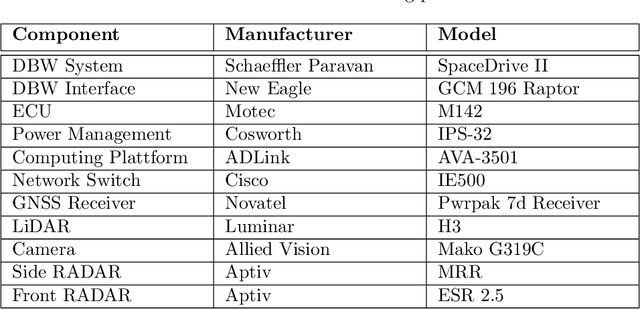

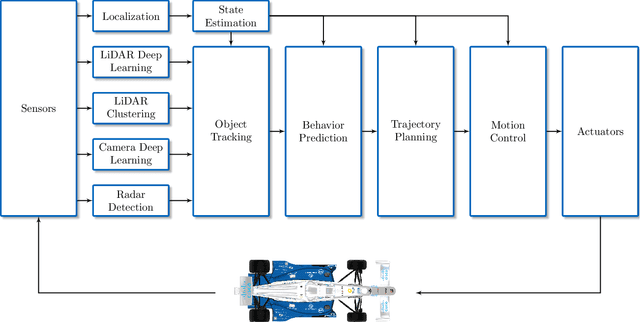

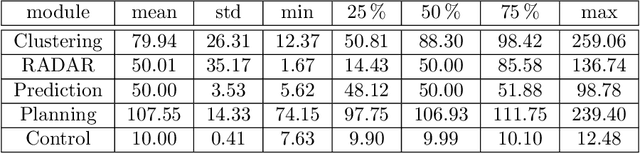

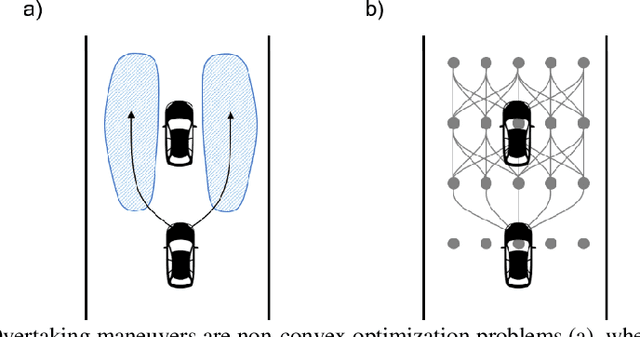

Abstract:For decades, motorsport has been an incubator for innovations in the automotive sector and brought forth systems like disk brakes or rearview mirrors. Autonomous racing series such as Roborace, F1Tenth, or the Indy Autonomous Challenge (IAC) are envisioned as playing a similar role within the autonomous vehicle sector, serving as a proving ground for new technology at the limits of the autonomous systems' capabilities. This paper outlines the software stack and approach of the TUM Autonomous Motorsport team for their participation in the Indy Autonomous Challenge, which holds two competitions: A single-vehicle competition on the Indianapolis Motor Speedway and a passing competition at the Las Vegas Motor Speedway. Nine university teams used an identical vehicle platform: A modified Indy Lights chassis equipped with sensors, a computing platform, and actuators. All the teams developed different algorithms for object detection, localization, planning, prediction, and control of the race cars. The team from TUM placed first in Indianapolis and secured second place in Las Vegas. During the final of the passing competition, the TUM team reached speeds and accelerations close to the limit of the vehicle, peaking at around 270 km/h and 28 m/s². This paper will present details of the vehicle hardware platform, the developed algorithms, and the workflow to test and enhance the software applied during the two-year project. We derive deep insights into the autonomous vehicle's behavior at high speed and high acceleration by providing a detailed competition analysis. Based on this, we deduce a list of lessons learned and provide insights on promising areas of future work based on the real-world evaluation of the displayed concepts.

Indy Autonomous Challenge -- Autonomous Race Cars at the Handling Limits

Feb 08, 2022

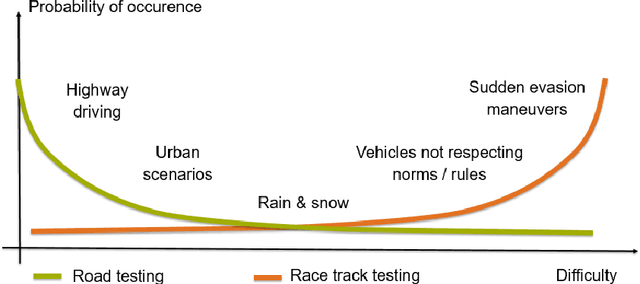

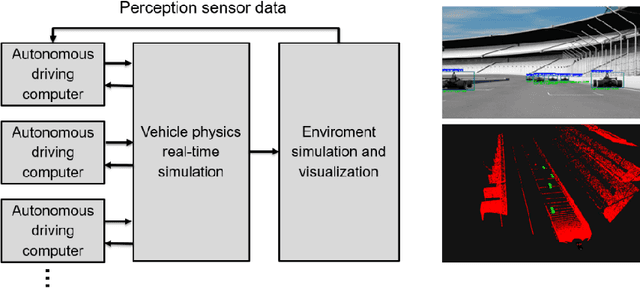

Abstract:Motorsport has always been an enabler for technological advancement, and the same applies to the autonomous driving industry. The team TUM Autonomous Motorsports will participate in the Indy Autonomous Challenge in October 2021 to benchmark its self-driving software-stack by racing one out of ten autonomous Dallara AV-21 racecars at the Indianapolis Motor Speedway. The first part of this paper explains the reasons for entering an autonomous vehicle race from an academic perspective: It allows focusing on several edge cases encountered by autonomous vehicles, such as challenging evasion maneuvers and unstructured scenarios. At the same time, it is inherently safe due to the motorsport-related track safety precautions. It is therefore an ideal testing ground for the development of autonomous driving algorithms capable of mastering the most challenging and rare situations. In addition, we provide insight into our software development workflow and present our Hardware-in-the-Loop simulation setup. It is capable of running simulations of up to eight autonomous vehicles in real time. The second part of the paper gives a high-level overview of the software architecture and covers our development priorities in building a high-performance autonomous racing software: maximum sensor detection range, reliable handling of multi-vehicle situations, as well as reliable motion control under uncertainty.
