Dominik Kulmer

vEDGAR -- Can CARLA Do HiL?

Dec 09, 2025

Abstract:Simulation offers advantages throughout the development process of automated driving functions, both in research and product development. Common open-source simulators like CARLA are extensively used in training, evaluation, and software-in-the-loop testing of new automated driving algorithms. However, the CARLA simulator lacks an evaluation where research and automated driving vehicles are simulated with their entire sensor and actuation stack in real time. The goal of this work is therefore to create a simulation framework for testing the automation software on its dedicated hardware and identifying its limits. Achieving this goal would greatly benefit the open-source development workflow of automated driving functions, designating CARLA as a consistent evaluation tool along the entire development process. To achieve this goal, in a first step, requirements are derived, and a simulation architecture is specified and implemented. Based on the formulated requirements, the proposed vEDGAR software is evaluated, resulting in a final conclusion on the applicability of CARLA for HiL testing of automated vehicles. The tool is available open source: Modified CARLA fork: https://github.com/TUMFTM/carla, vEDGAR Framework: https://github.com/TUMFTM/vEDGAR

CaLiV: LiDAR-to-Vehicle Calibration of Arbitrary Sensor Setups via Object Reconstruction

Mar 31, 2025

Abstract:In autonomous systems, sensor calibration is essential for safe and efficient navigation in dynamic environments. Accurate calibration is a prerequisite for reliable perception and planning tasks such as object detection and obstacle avoidance. Many existing LiDAR calibration methods require overlapping fields of view, while others use external sensing devices or postulate a feature-rich environment. In addition, Sensor-to-Vehicle calibration is not supported by the vast majority of calibration algorithms. In this work, we propose a novel target-based technique for extrinsic Sensor-to-Sensor and Sensor-to-Vehicle calibration of multi-LiDAR systems called CaLiV. This algorithm works for non-overlapping FoVs, as well as arbitrary calibration targets, and does not require any external sensing devices. First, we apply motion to produce FoV overlaps and utilize a simple unscented Kalman filter to obtain vehicle poses. Then, we use the Gaussian mixture model-based registration framework GMMCalib to align the point clouds in a common calibration frame. Finally, we reduce the task of recovering the sensor extrinsics to a minimization problem. We show that both translational and rotational Sensor-to-Sensor errors can be solved accurately by our method. In addition, all Sensor-to-Vehicle rotation angles can also be calibrated with high accuracy. We validate the simulation results in real-world experiments. The code is open source and available at https://github.com/TUMFTM/CaLiV.

OpenLiDARMap: Zero-Drift Point Cloud Mapping using Map Priors

Jan 19, 2025

Abstract:Accurate localization is a critical component of mobile autonomous systems, especially in Global Navigation Satellite Systems (GNSS)-denied environments where traditional methods fail. In such scenarios, environmental sensing is essential for reliable operation. However, approaches such as LiDAR odometry and Simultaneous Localization and Mapping (SLAM) suffer from drift over long distances, especially in the absence of loop closures. Map-based localization offers a robust alternative, but the challenge lies in creating and georeferencing maps without GNSS support. To address this issue, we propose a method for creating georeferenced maps without GNSS by using publicly available data, such as building footprints and surface models derived from sparse aerial scans. Our approach integrates these data with onboard LiDAR scans to produce dense, accurate, georeferenced 3D point cloud maps. By combining an Iterative Closest Point (ICP) scan-to-scan and scan-to-map matching strategy, we achieve high local consistency without suffering from long-term drift. Thus, we eliminate the reliance on GNSS for the creation of georeferenced maps. The results demonstrate that LiDAR-only mapping can produce accurate georeferenced point cloud maps when augmented with existing map priors.

Multi-LiCa: A Motion and Targetless Multi LiDAR-to-LiDAR Calibration Framework

Jan 19, 2025

Abstract:Today's autonomous vehicles rely on a multitude of sensors to perceive their environment. To improve the perception or create redundancy, the sensors' alignment relative to each other must be known. With Multi-LiCa, we present a novel approach for the alignment, i.e., calibration. We present an automatic motion- and targetless approach for the extrinsic multi LiDAR-to-LiDAR calibration without the need for additional sensor modalities or an initial transformation input. We propose a two-step process with feature-based matching for the coarse alignment and a GICP-based fine registration in combination with a cost-based matching strategy. Our approach can be applied to any number of sensors and positions if there is a partial overlap between the fields of view of the individual sensors. We show that our pipeline generalizes better to different sensor setups and scenarios and is on par or better in calibration accuracy than existing approaches. The presented framework is integrated in ROS 2 but can also be used as a standalone application. To build upon our work, our source code is available at: https://github.com/TUMFTM/Multi_LiCa.

FlexMap Fusion: Georeferencing and Automated Conflation of HD Maps with OpenStreetMap

Apr 18, 2024

Abstract:Today's software stacks for autonomous vehicles rely on HD maps to enable sufficient localization, accurate path planning, and reliable motion prediction. Recent developments have resulted in pipelines for the automated generation of HD maps to reduce manual efforts for creating and updating these HD maps. We present FlexMap Fusion, a methodology to automatically update and enhance existing HD vector maps using OpenStreetMap. Our approach is designed to enable the use of HD maps created from LiDAR and camera data within Autoware. The pipeline provides different functionalities: It provides the possibility to georeference both the point cloud map and the vector map using an RTK-corrected GNSS signal. Moreover, missing semantic attributes can be conflated from OpenStreetMap into the vector map. Differences between the HD map and OpenStreetMap are visualized for manual refinement by the user. In general, our findings indicate that our approach leads to reduced human labor during HD map generation, increases the scalability of the mapping pipeline, and improves the completeness and usability of the maps. The methodological choices may have resulted in limitations that arise especially at complex street structures, e.g., traffic islands. Therefore, more research is necessary to create efficient preprocessing algorithms and advancements in the dynamic adjustment of matching parameters. In order to build upon our work, our source code is available at https://github.com/TUMFTM/FlexMap_Fusion.

Multi-LiDAR Localization and Mapping Pipeline for Urban Autonomous Driving

Nov 03, 2023

Abstract:Autonomous vehicles require accurate and robust localization and mapping algorithms to navigate safely and reliably in urban environments. We present a novel sensor fusion-based pipeline for offline mapping and online localization based on LiDAR sensors. The proposed approach leverages four LiDAR sensors. Mapping and localization algorithms are based on KISS-ICP, enabling real-time performance and high accuracy. We introduce an approach to generate semantic maps for driving tasks such as path planning. The presented pipeline is integrated into the ROS 2-based Autoware software stack, providing a robust and flexible environment for autonomous driving applications. We show that our pipeline outperforms state-of-the-art approaches for a given research vehicle and real-world autonomous driving application.

* Accepted and presented at IEEE Sensors Conference 2023

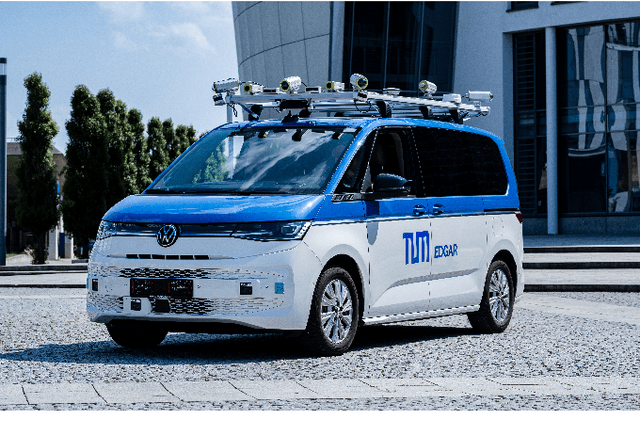

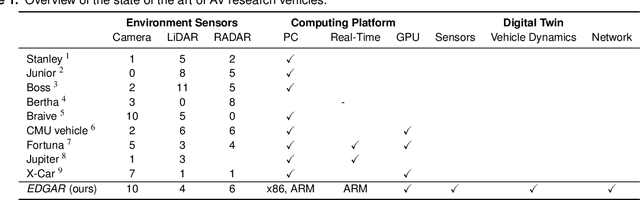

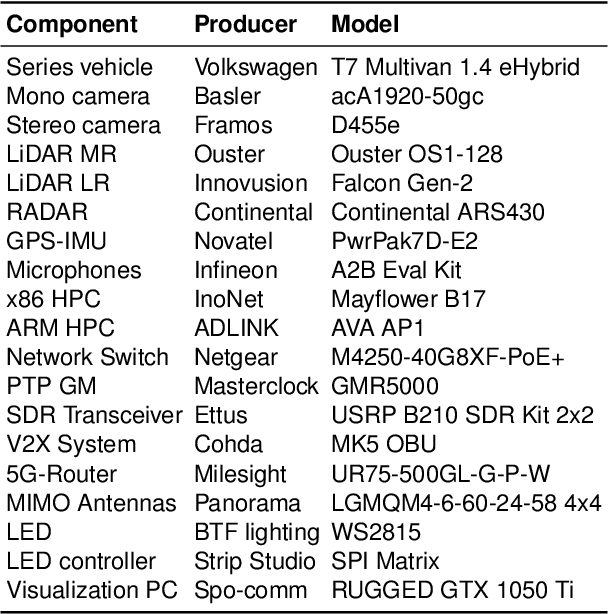

EDGAR: An Autonomous Driving Research Platform -- From Feature Development to Real-World Application

Sep 27, 2023

Abstract:While current research and development of autonomous driving primarily focuses on developing new features and algorithms, the transfer from isolated software components into an entire software stack has been covered sparsely. Besides that, due to the complexity of autonomous software stacks and public road traffic, the optimal validation of entire stacks is an open research problem. Our paper targets these two aspects. We present our autonomous research vehicle EDGAR and its digital twin, a detailed virtual duplication of the vehicle. While the vehicle's setup is closely related to the state of the art, its virtual duplication is a valuable contribution as it is crucial for a consistent validation process from simulation to real-world tests. In addition, different development teams can work with the same model, making integration and testing of the software stacks much easier, significantly accelerating the development process. The real and virtual vehicles are embedded in a comprehensive development environment, which is also introduced. All parameters of the digital twin are provided open-source at https://github.com/TUMFTM/edgar_digital_twin.
