Davide Brunelli

AI and Vision based Autonomous Navigation of Nano-Drones in Partially-Known Environments

May 08, 2025

Abstract:The miniaturisation of sensors and processors, the advancements in connected edge intelligence, and the rapidly growing interest in Artificial Intelligence are driving the adoption of autonomous nano-size drones in the Internet of Robotic Things ecosystem. However, achieving safe autonomous navigation and high-level tasks such as exploration and surveillance with these tiny platforms is extremely challenging due to their limited resources. This work focuses on enabling the safe and autonomous flight of a pocket-size, 30-gram platform called Crazyflie 2.1 in a partially known environment. We propose a novel AI-aided, vision-based reactive planning method for obstacle avoidance within the Integrated Sensing, Computing and Communication paradigm. We deal with the constraints of the nano-drone by splitting the navigation task into two parts: a deep learning-based object detector runs on the edge (external hardware) while the planning algorithm is executed onboard. The results show the ability to command the drone at $\sim8$ frames per second and a model performance reaching a COCO mean Average Precision of $60.8$. Field experiments demonstrate the feasibility of the solution with the drone flying at a top speed of $1$ m/s while steering away from an obstacle placed in an unknown position and reaching the target destination. The outcome highlights the compatibility of the communication delay and the model performance with the requirements of the real-time navigation task. We provide a feasible alternative to a fully onboard implementation that can be extended to autonomous exploration with nano-drones.
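To put the reported command rate and top speed in relation, the following minimal sketch computes how far the drone travels between two consecutive commands. It assumes constant speed and ignores any additional communication delay; the function name `distance_per_command` is hypothetical and not taken from the paper.

```python
def distance_per_command(speed_m_s: float, command_rate_hz: float) -> float:
    """Distance flown between two consecutive commands, in metres.

    Assumes constant speed and no extra latency (an illustrative simplification).
    """
    return speed_m_s / command_rate_hz


# Figures from the abstract: top speed of 1 m/s, commands at roughly 8 per second.
gap_m = distance_per_command(1.0, 8.0)
print(f"{gap_m:.3f} m between commands")
```

Under these assumptions the drone covers about 12.5 cm between commands at top speed, which gives a rough sense of the reaction granularity available to the onboard planner.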

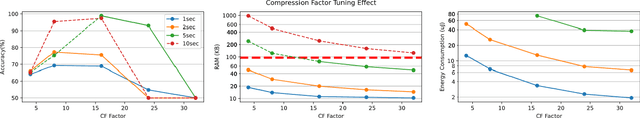

Parallelization is All System Identification Needs: End-to-end Vibration Diagnostics on a multi-core RISC-V edge device

Apr 07, 2025

Abstract:The early detection of structural malfunctions requires the installation of real-time monitoring systems ensuring continuous access to the damage-sensitive information; nevertheless, it can generate bottlenecks in terms of bandwidth and storage. Deploying data reduction techniques at the edge is recognized as an effective solution to reduce the system's network traffic. However, the most effective solutions currently employed for the purpose are based on memory- and power-hungry algorithms, making their embedding on resource-constrained devices very challenging; this is the case of vibration data reduction based on System Identification models. This paper presents PARSY-VDD, a fully optimized PArallel end-to-end software framework based on SYstem identification for Vibration-based Damage Detection, as a suitable solution to perform damage detection at the edge in a time- and energy-efficient manner, avoiding streaming raw data to the cloud. We evaluate the damage detection capabilities of PARSY-VDD with two benchmarks: a bridge and a wind turbine blade, showcasing the robustness of the end-to-end approach. Then, we deploy PARSY-VDD on both commercial single-core devices and a specific multi-core edge device. We introduce an architecture-agnostic algorithmic optimization for SysId, improving the execution by 90x and reducing the consumption by 85x compared with the state-of-the-art SysId implementation on GAP9. Results show that by utilizing the unique parallel computing capabilities of GAP9, the execution time is 751 $\mu$s with the high-performance multi-core solution operating at 370 MHz and 0.8 V, while the energy consumption is 37 $\mu$J with the low-power solution operating at 240 MHz and 0.65 V. Compared with other single-core implementations based on STM32 microcontrollers, the GAP9 high-performance configuration is 76x faster, while the low-power configuration is 360x more energy efficient.

Accelerating Image-based Pest Detection on a Heterogeneous Multi-core Microcontroller

Aug 29, 2024

Abstract:The codling moth pest poses a significant threat to global crop production, with potential losses of up to 80% in apple orchards. Special camera-based sensor nodes are deployed in the field to record and transmit images of trapped insects to monitor the presence of the pest. This paper investigates the embedding of computer vision algorithms in the sensor node using a novel State-of-the-Art Microcontroller Unit (MCU), the GreenWaves Technologies' GAP9 System-on-Chip, which combines 10 RISC-V general-purpose cores with a convolution hardware accelerator. We compare the performance of a lightweight Viola-Jones detector algorithm with a Convolutional Neural Network (CNN), MobileNetV3-SSDLite, trained for the pest detection task. On two datasets that differ in the distance between the camera sensor and the pest targets, the CNN generalizes better than the other method and achieves a detection accuracy between 83% and 72%. Thanks to the GAP9's CNN accelerator, the CNN inference task takes only 147 ms to process a 320$\times$240 image. Compared to the GAP8 MCU, which only relies on general-purpose cores for processing, we achieved 9.5$\times$ faster inference speed. When running on a 1000 mAh battery at 3.7 V, the estimated lifetime is approximately 199 days, processing an image every 30 seconds. Our study demonstrates that the novel heterogeneous MCU can perform end-to-end CNN inference with an energy consumption of just 4.85 mJ, matching the efficiency of the simpler Viola-Jones algorithm and offering power consumption up to 15$\times$ lower than previous methods. Code at: https://github.com/Bomps4/TAFE_Pest_Detection
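The battery figures quoted above can be related to one another with a short back-of-the-envelope calculation. The sketch below is illustrative only: it assumes the full nominal capacity is usable at a constant 3.7 V and ignores self-discharge and regulator losses, none of which the abstract specifies. All variable names are hypothetical.

```python
# Figures taken from the abstract.
BATTERY_MAH = 1000.0      # nominal capacity
BATTERY_V = 3.7           # nominal voltage
LIFETIME_DAYS = 199.0     # estimated lifetime
PERIOD_S = 30.0           # one image processed every 30 s
INFERENCE_MJ = 4.85       # energy of one end-to-end CNN inference

# Total stored energy in joules: Ah * V * 3600 s/h (assumes full usable capacity).
battery_j = BATTERY_MAH / 1000.0 * BATTERY_V * 3600.0

# Number of 30-second cycles over the estimated lifetime.
cycles = LIFETIME_DAYS * 86400.0 / PERIOD_S

# Implied average energy budget per cycle, in millijoules.
budget_mj = battery_j / cycles * 1000.0

# Fraction of that budget taken by the CNN inference itself.
inference_share = INFERENCE_MJ / budget_mj

print(f"battery energy: {battery_j:.0f} J")
print(f"budget per cycle: {budget_mj:.2f} mJ")
print(f"inference share: {inference_share:.1%}")
```

Under these simplifying assumptions, the stated lifetime implies a budget of roughly 23 mJ per 30-second cycle, of which the 4.85 mJ inference is about a fifth; the remainder would have to cover everything else the node does in that cycle.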

Modular Meshed Ultra-Wideband Aided Inertial Navigation with Robust Anchor Calibration

Aug 26, 2024

Abstract:This paper introduces a generic filter-based state estimation framework that supports two state-decoupling strategies based on cross-covariance factorization. These strategies reduce the computational complexity and inherently support true modularity -- a prerequisite for handling and processing meshed range measurements among a time-varying set of devices. In order to utilize these measurements in the estimation framework, positions of newly detected stationary devices (anchors) and the pairwise biases between the ranging devices are required. In this work, an autonomous calibration procedure for new anchors is presented that utilizes range measurements from multiple tags as well as already known anchors. To improve the robustness, an outlier rejection method is introduced. After the calibration is performed, the sensor fusion framework obtains initial beliefs of the anchor positions and dictionaries of pairwise biases, in order to fuse range measurements obtained from new anchors in a tightly coupled manner. The effectiveness of the filter and calibration framework has been validated through evaluations on a recorded dataset and real-world experiments.

Is That Rain? Understanding Effects on Visual Odometry Performance for Autonomous UAVs and Efficient DNN-based Rain Classification at the Edge

Jul 17, 2024

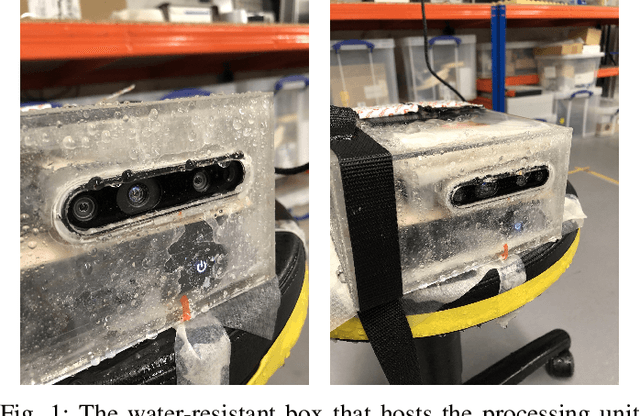

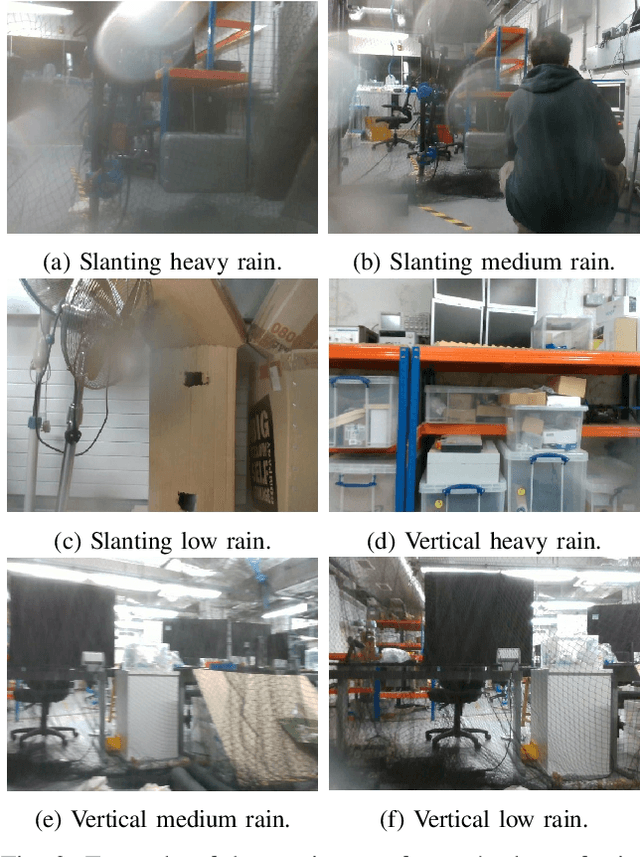

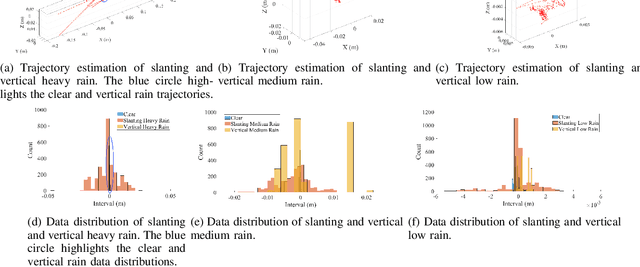

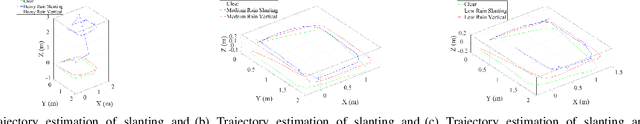

Abstract:The development of safe and reliable autonomous unmanned aerial vehicles relies on the ability of the system to recognise and adapt to changes in the local environment based on sensor inputs. State-of-the-art local tracking and trajectory planning are typically performed using camera sensor input to the flight control algorithm, but the extent to which environmental disturbances like rain affect the performance of these systems is largely unknown. In this paper, we first describe the development of an open dataset comprising ~335k images to examine these effects for seven different classes of precipitation conditions and show that a worst-case average tracking error of 1.5 m is possible for a state-of-the-art visual odometry system (VINS-Fusion). We then use the dataset to train a set of deep neural network models suited to mobile and constrained deployment scenarios to determine the extent to which it may be possible to efficiently and accurately classify these 'rainy' conditions. The most lightweight of these models (MobileNetV3 small) can achieve an accuracy of 90% with a memory footprint of just 1.28 MB and a frame rate of 93 FPS, which is suitable for deployment in resource-constrained and latency-sensitive systems. We demonstrate a classification latency in the order of milliseconds using typical flight computer hardware. Accordingly, such a model can feed into the disturbance estimation component of an autonomous flight controller. In addition, data from unmanned aerial vehicles with the ability to accurately determine environmental conditions in real time may contribute to developing more granular, timely, localised weather forecasting.

Instance-based Learning with Prototype Reduction for Real-Time Proportional Myocontrol: A Randomized User Study Demonstrating Accuracy-preserving Data Reduction for Prosthetic Embedded Systems

Aug 21, 2023

Abstract:This work presents the design, implementation and validation of learning techniques based on the kNN scheme for gesture detection in prosthetic control. To cope with high computational demands in instance-based prediction, methods of dataset reduction are evaluated considering real-time determinism to allow for the reliable integration into battery-powered portable devices. The influence of parameterization and varying proportionality schemes is analyzed, utilizing an eight-channel sEMG armband. Besides offline cross-validation accuracy, success rates in real-time pilot experiments (online target achievement tests) are determined. Based on the assessment of specific dataset reduction techniques' adequacy for embedded control applications regarding accuracy and timing behaviour, Decision Surface Mapping (DSM) proves promising when applying kNN on the reduced set. A randomized, double-blind user study was conducted to evaluate the respective methods (kNN and kNN with DSM-reduction) against Ridge Regression (RR) and RR with Random Fourier Features (RR-RFF). The kNN-based methods performed significantly better (p<0.0005) than the regression techniques. Between DSM-kNN and kNN, there was no statistically significant difference (significance level 0.05). This is remarkable considering that the reduced set contains only one sample per class, thus yielding a reduction rate of over 99% while preserving the success rate. The same behaviour could be confirmed in an extended user study. With k=1, which turned out to be an excellent choice, the runtime complexity of both kNN (in every prediction step) and DSM-kNN (in the training phase) becomes linear in the number of original samples, favouring dependable wearable prosthesis applications.
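The idea of running 1-nearest-neighbour prediction on a reduced set with one sample per class can be illustrated with a small sketch. Note that this does not implement Decision Surface Mapping: as a stand-in, each class is reduced to its mean, which is a simpler and different reduction. The function names, the two-channel toy data and the labels are all made up for illustration.

```python
import math
from collections import defaultdict


def class_mean_prototypes(samples, labels):
    """Reduce a labelled dataset to one prototype per class (the class mean).

    This is a simple stand-in for prototype reduction, not DSM.
    """
    sums = {}
    counts = defaultdict(int)
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_1nn(prototypes, x):
    """Return the label of the nearest prototype (k = 1, Euclidean distance)."""
    return min(prototypes, key=lambda y: math.dist(prototypes[y], x))


# Hypothetical two-channel feature vectors for two gestures.
samples = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 1.0]]
labels = ["rest", "rest", "grip", "grip"]

prototypes = class_mean_prototypes(samples, labels)
print(predict_1nn(prototypes, [0.85, 0.9]))
```

With one prototype per class, each prediction compares the input against as many vectors as there are classes rather than against the whole training set, which is what makes this kind of reduction attractive on embedded hardware.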

Incremental Online Learning Algorithms Comparison for Gesture and Visual Smart Sensors

Sep 01, 2022

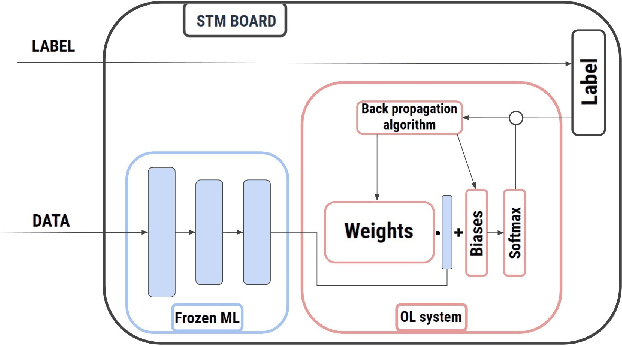

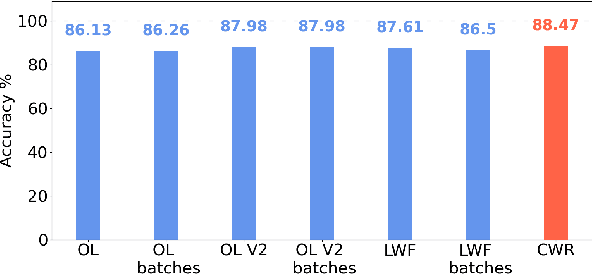

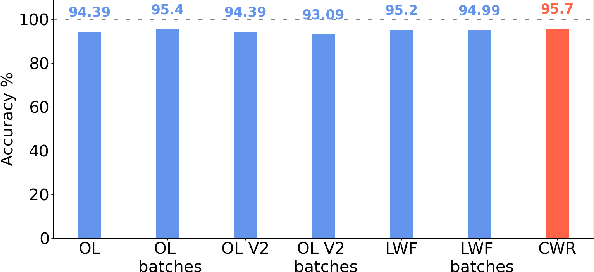

Abstract:Tiny machine learning (TinyML) in IoT systems exploits MCUs as edge devices for data processing. However, traditional TinyML methods can only perform inference, limited to static environments or classes. Real-world scenarios usually involve dynamic environments, in which the context drifts until the original neural model is no longer suitable. For this reason, pre-trained models lose accuracy and reliability during their lifetime because the recorded data slowly becomes obsolete or new patterns appear. Continual learning strategies keep the model up to date through runtime fine-tuning of the parameters. This paper compares four state-of-the-art algorithms in two real applications: i) gesture recognition based on accelerometer data and ii) image classification. Our results confirm these systems' reliability and the feasibility of deploying them in tiny-memory MCUs, with a drop in accuracy of a few percentage points with respect to the original models for unconstrained computing platforms.

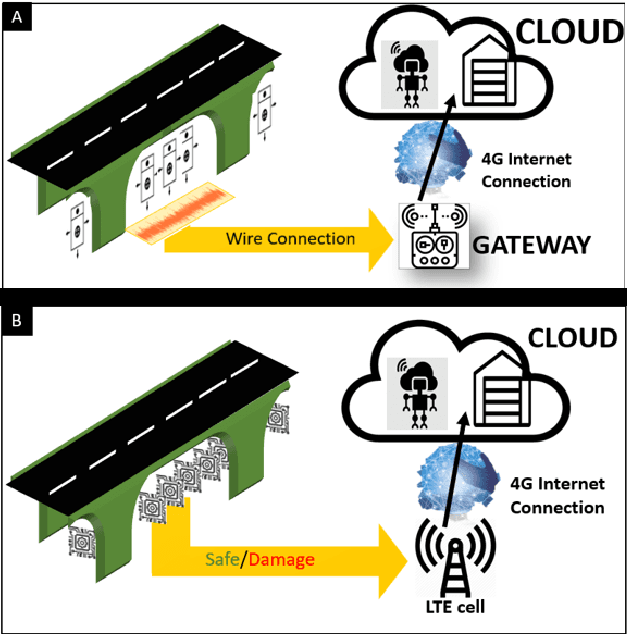

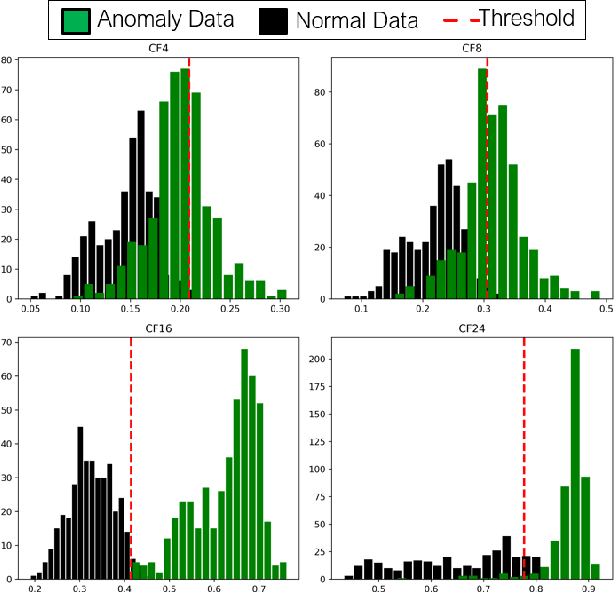

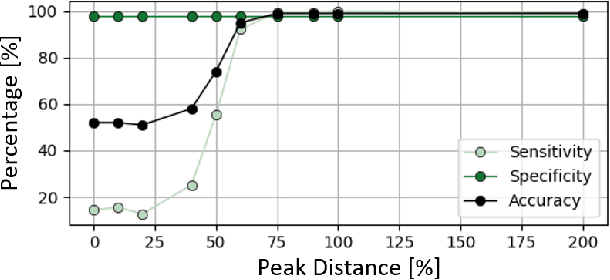

Exploring Scalable, Distributed Real-Time Anomaly Detection for Bridge Health Monitoring

Mar 10, 2022

Abstract:Modern real-time Structural Health Monitoring systems can generate a considerable amount of information that must be processed and evaluated for detecting early anomalies and generating prompt warnings and alarms about the civil infrastructure conditions. The current cloud-based solutions cannot scale if the raw data has to be collected from thousands of buildings. This paper presents a full-stack deployment of an efficient and scalable anomaly detection pipeline for SHM systems which does not require sending raw data to the cloud but relies on edge computation. First, we benchmark three algorithmic approaches to anomaly detection, i.e., Principal Component Analysis (PCA), Fully-Connected AutoEncoder (FC-AE), and Convolutional AutoEncoder (C-AE). Then, we deploy them on an edge sensor, the STM32L4, with limited computing capabilities. Our approach decreases network traffic by $\approx8\cdot10^5\times$, from 780 KB/hour to less than 10 Bytes/hour for a single installation, and minimizes network and cloud resource utilization, enabling the scaling of the monitoring infrastructure. A real-life case study, a highway bridge in Italy, demonstrates that, by combining near-sensor computation of anomaly detection algorithms, smart pre-processing, and low-power wide-area network (LPWAN) protocols, we can greatly reduce data communication and cloud computing costs, while anomaly detection accuracy is not adversely affected.

Scale up to infinity: the UWB Indoor Global Positioning System

Dec 03, 2021

Abstract:Determining asset position with high accuracy and scalability is one of the most investigated technologies on the market. The accuracy provided by satellite-based positioning systems (e.g., GLONASS or Galileo) is not always sufficient when decimeter-level accuracy is required or when there is a need to localise entities that operate in indoor environments. Scalability is also a recurrent problem when dealing with indoor positioning systems. This paper presents an innovative UWB Indoor GPS-like local positioning system able to track any number of assets without decreasing the measurement update rate. To increase the system's accuracy, the mathematical model and the sources of uncertainty are investigated. Results highlight how the proposed implementation provides positioning information with an absolute maximum error below 20 cm. Scalability is also resolved thanks to DTDoA transmission mechanisms that do not require an active role from the asset to be tracked.

TinyML Platforms Benchmarking

Nov 30, 2021

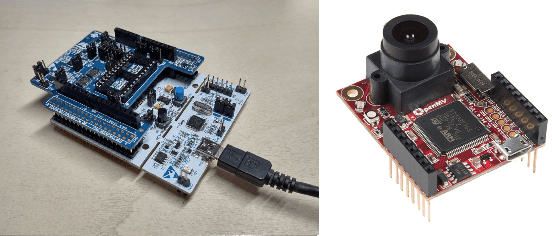

Abstract:Recent advances in state-of-the-art ultra-low power embedded devices for machine learning (ML) have permitted a new class of products whose key features enable ML capabilities on microcontrollers with less than 1 mW power consumption (TinyML). TinyML provides a unique solution by aggregating and analyzing data at the edge on low-power embedded devices. However, we have only recently been able to run ML on microcontrollers, and the field is still in its infancy, which means that hardware, software, and research are changing extremely rapidly. Consequently, many TinyML frameworks have been developed for different platforms to facilitate the deployment of ML models and standardize the process. Therefore, in this paper, we focus on benchmarking two popular frameworks, TensorFlow Lite Micro (TFLM) on the Arduino Nano BLE and CUBE AI on the STM32-NucleoF401RE, to provide a standardized framework selection criterion for specific applications.
