Andrea Albanese

Is That Rain? Understanding Effects on Visual Odometry Performance for Autonomous UAVs and Efficient DNN-based Rain Classification at the Edge

Jul 17, 2024

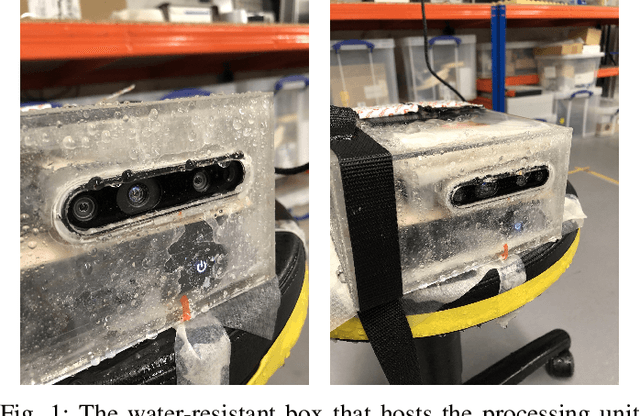

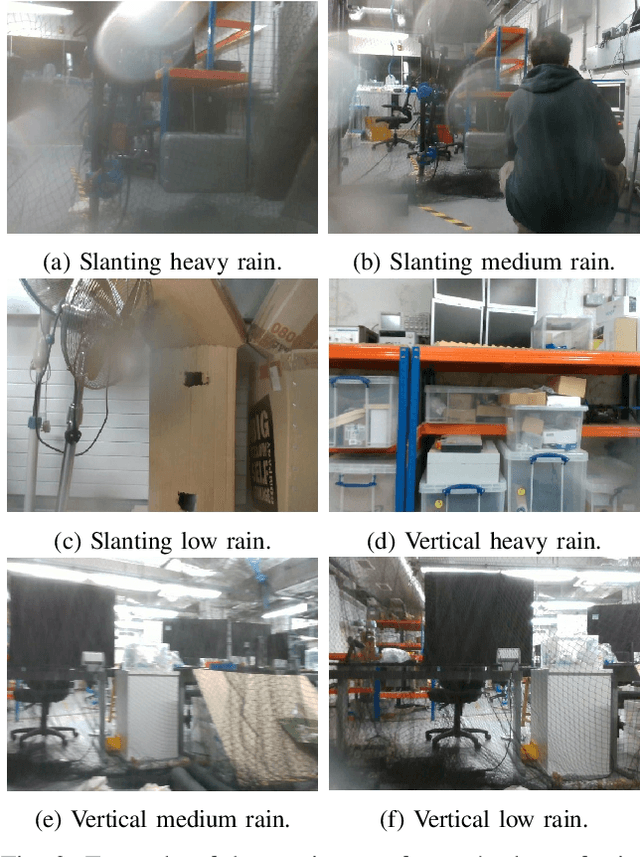

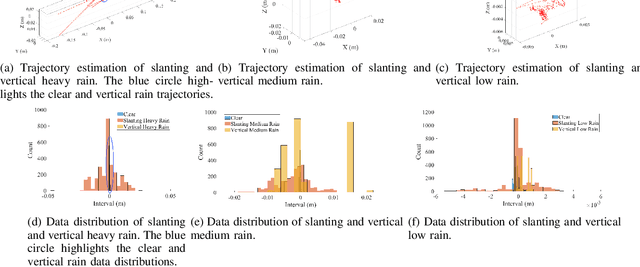

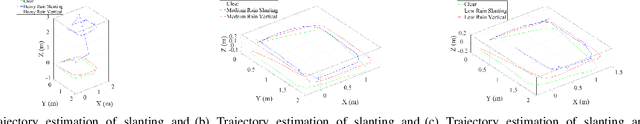

Abstract: The development of safe and reliable autonomous unmanned aerial vehicles relies on the ability of the system to recognise and adapt to changes in the local environment based on sensor inputs. State-of-the-art local tracking and trajectory planning are typically performed using camera sensor input to the flight control algorithm, but the extent to which environmental disturbances like rain affect the performance of these systems is largely unknown. In this paper, we first describe the development of an open dataset comprising ~335k images to examine these effects for seven different classes of precipitation conditions and show that a worst-case average tracking error of 1.5 m is possible for a state-of-the-art visual odometry system (VINS-Fusion). We then use the dataset to train a set of deep neural network models suited to mobile and constrained deployment scenarios to determine the extent to which it may be possible to efficiently and accurately classify these 'rainy' conditions. The most lightweight of these models (MobileNetV3 small) can achieve an accuracy of 90% with a memory footprint of just 1.28 MB and a frame rate of 93 FPS, which is suitable for deployment in resource-constrained and latency-sensitive systems. We demonstrate a classification latency on the order of milliseconds using typical flight computer hardware. Accordingly, such a model can feed into the disturbance estimation component of an autonomous flight controller. In addition, data from unmanned aerial vehicles with the ability to accurately determine environmental conditions in real time may contribute to developing more granular, timely, and localised weather forecasting.
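As a rough illustration of how the reported frame rate relates to a per-frame time budget, the sketch below converts frames per second into the average period per frame. The function name `frame_period_ms` is hypothetical, and the result is only a throughput-derived figure: it assumes frames are processed one at a time, so it may differ from the end-to-end latency measured in the paper.

```python
def frame_period_ms(fps: float) -> float:
    """Return the average time available per frame, in milliseconds.

    Assumption: frames are processed sequentially, so the period is 1 / fps.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# 93 FPS is the frame rate quoted in the abstract for MobileNetV3 small.
period = frame_period_ms(93.0)
print(f"{period:.2f} ms per frame")
```

Under that assumption, 93 FPS corresponds to roughly 10.75 ms per frame, which is consistent with a latency on the order of milliseconds.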

Incremental Online Learning Algorithms Comparison for Gesture and Visual Smart Sensors

Sep 01, 2022

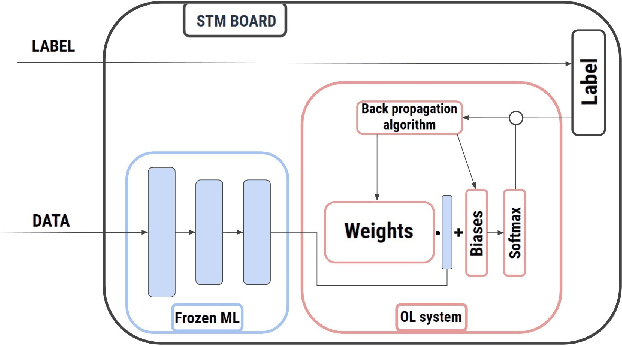

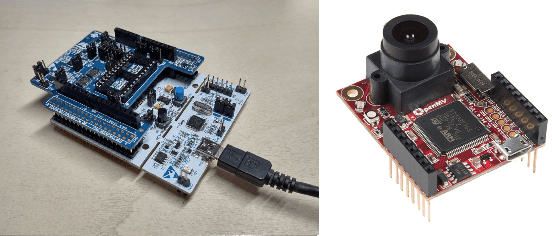

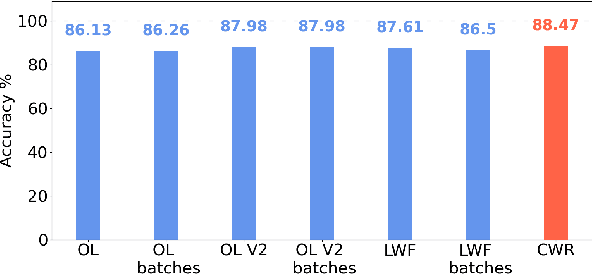

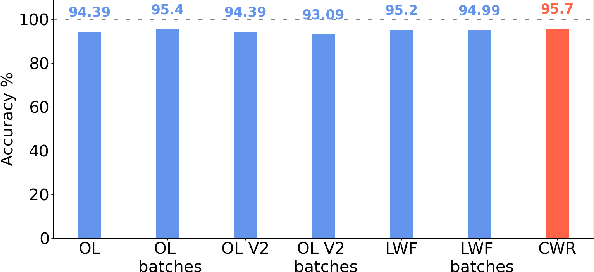

Abstract: Tiny machine learning (TinyML) in IoT systems exploits MCUs as edge devices for data processing. However, traditional TinyML methods can only perform inference, which limits them to static environments or fixed sets of classes. Real-world deployments usually operate in dynamic environments, where the context drifts until the original neural model is no longer suitable. For this reason, pre-trained models lose accuracy and reliability during their lifetime, because the recorded data slowly becomes obsolete or new patterns appear. Continual learning strategies keep the model up to date through runtime fine-tuning of its parameters. This paper compares four state-of-the-art algorithms in two real applications: i) gesture recognition based on accelerometer data and ii) image classification. Our results confirm these systems' reliability and the feasibility of deploying them in tiny-memory MCUs, with a drop in accuracy of a few percentage points with respect to the original models for unconstrained computing platforms.
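To make the idea of runtime fine-tuning concrete, here is a minimal sketch of one common pattern: a frozen feature extractor followed by a small softmax output layer that is updated one sample at a time and can grow when a new class appears. This is an illustrative assumption, not necessarily one of the four algorithms compared in the paper; the class `OnlineLastLayer` and its methods are hypothetical names.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]


class OnlineLastLayer:
    """Softmax output layer trained by per-sample SGD (hypothetical sketch).

    Assumes the input x is a feature vector from a frozen backbone.
    """

    def __init__(self, n_features, n_classes, lr=0.1):
        self.w = [[0.0] * n_features for _ in range(n_classes)]
        self.b = [0.0] * n_classes
        self.lr = lr

    def predict_proba(self, x):
        logits = [sum(wi * xi for wi, xi in zip(row, x)) + bias
                  for row, bias in zip(self.w, self.b)]
        return softmax(logits)

    def predict(self, x):
        probs = self.predict_proba(x)
        return max(range(len(probs)), key=probs.__getitem__)

    def update(self, x, label):
        """One SGD step on the cross-entropy loss for a single sample."""
        probs = self.predict_proba(x)
        for c, row in enumerate(self.w):
            grad = probs[c] - (1.0 if c == label else 0.0)
            for j, xj in enumerate(x):
                row[j] -= self.lr * grad * xj
            self.b[c] -= self.lr * grad

    def add_class(self):
        """Append a zero-initialised output unit for a newly observed class."""
        self.w.append([0.0] * len(self.w[0]))
        self.b.append(0.0)


# Usage: learn two classes online, then extend the layer with a third.
layer = OnlineLastLayer(n_features=2, n_classes=2)
for _ in range(50):
    layer.update([1.0, 0.0], 0)
    layer.update([0.0, 1.0], 1)
layer.add_class()
print(layer.predict([1.0, 0.0]), len(layer.predict_proba([1.0, 0.0])))
```

Updating only the last layer keeps memory and compute small, which is one reason this style of update is attractive on MCUs; a real deployment would also need a strategy against forgetting earlier classes.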

Automated Pest Detection with DNN on the Edge for Precision Agriculture

Aug 01, 2021

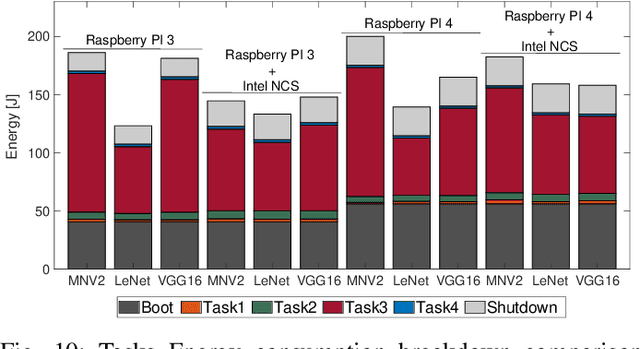

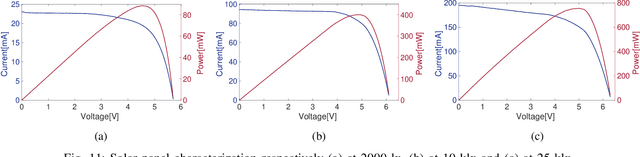

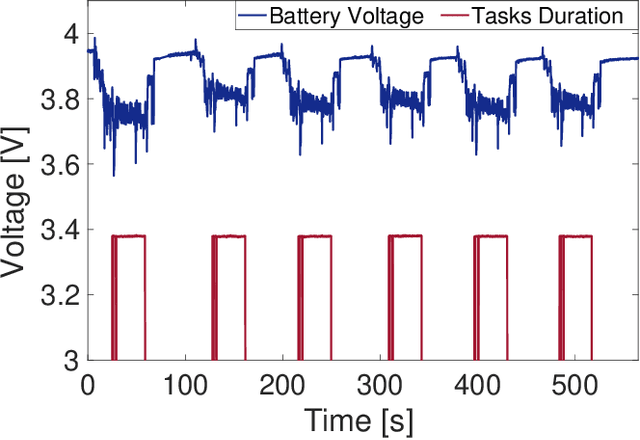

Abstract: Artificial intelligence has smoothly penetrated several economic activities, especially monitoring and control applications, including the agriculture sector. However, research efforts toward low-power sensing devices with fully functional on-board machine learning (ML) are still fragmented and limited in smart farming. Biotic stress is one of the primary causes of crop yield reduction. With the development of deep learning in computer vision technology, autonomous detection of pest infestation through images has become an important research direction for timely crop disease diagnosis. This paper presents an embedded system enhanced with ML functionalities, ensuring continuous detection of pest infestation inside fruit orchards. The embedded solution is based on a low-power embedded sensing system along with a neural accelerator able to capture and process images inside common pheromone-based traps. Three different ML algorithms have been trained and deployed, highlighting the capabilities of the platform. Moreover, the proposed approach guarantees an extended battery life thanks to the integration of energy harvesting functionalities. Results show that it is possible to automate the task of pest infestation detection for an unlimited time without the farmer's intervention.
