Daniel Schneider

NFDI4DS Shared Tasks for Scholarly Document Processing

Sep 26, 2025

Abstract: Shared tasks are powerful tools for advancing research through community-based standardised evaluation. As such, they play a key role in promoting findable, accessible, interoperable, and reusable (FAIR), as well as transparent and reproducible research practices. This paper presents an updated overview of twelve shared tasks developed and hosted under the German National Research Data Infrastructure for Data Science and Artificial Intelligence (NFDI4DS) consortium, covering a diverse set of challenges in scholarly document processing. Hosted at leading venues, the tasks foster methodological innovations and contribute open-access datasets, models, and tools for the broader research community, which are integrated into the consortium's research data infrastructure.

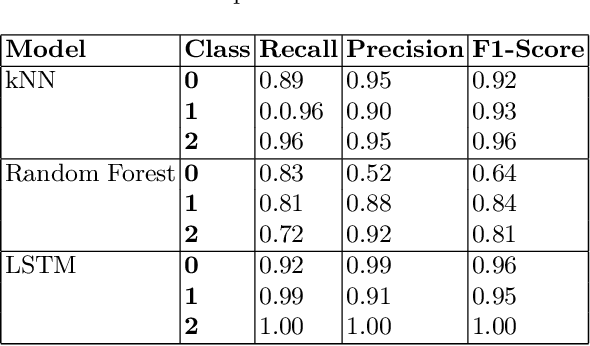

Inadequacy of common stochastic neural networks for reliable clinical decision support

Jan 25, 2024

Abstract: Widespread adoption of AI for medical decision making is still hindered by ethical and safety-related concerns. For AI-based decision support systems in healthcare settings, it is paramount to be reliable and trustworthy. Common deep learning approaches, however, tend towards overconfidence under data shift. Such inappropriate extrapolation beyond evidence-based scenarios may have dire consequences. This highlights the importance of reliable estimation of local uncertainty and its communication to the end user. While stochastic neural networks have been heralded as a potential solution to these issues, this study investigates their actual reliability in clinical applications. We centered our analysis on the exemplary use case of mortality prediction for ICU hospitalizations using EHR data from the MIMIC3 study. For predictions on the EHR time series, Encoder-Only Transformer models were employed. Stochasticity of model functions was achieved by incorporating common methods such as Bayesian neural network layers and model ensembles. Our models achieve state-of-the-art discrimination performance (AUC ROC: 0.868 ± 0.011, AUC PR: 0.554 ± 0.034) and calibration on the mortality prediction benchmark. However, epistemic uncertainty is critically underestimated by the selected stochastic deep learning methods. A heuristic proof for the responsible collapse of the posterior distribution is provided. Our findings reveal the inadequacy of commonly used stochastic deep learning approaches to reliably recognize out-of-distribution (OoD) samples. In both methods, unsubstantiated model confidence is not prevented due to strongly biased functional posteriors, rendering them inappropriate for reliable clinical decision support. This highlights the need for approaches with more strictly enforced or inherent distance-awareness to known data points, e.g., using kernel-based techniques.

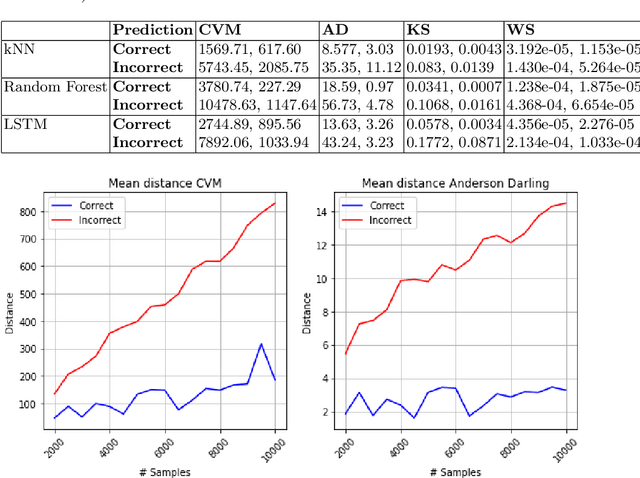

Scope Compliance Uncertainty Estimate

Dec 17, 2023

Abstract: The zeitgeist of the digital era has been dominated by an expanding integration of Artificial Intelligence (AI) in a plethora of applications across various domains. With this expansion, however, questions of the safety and reliability of these methods have become more relevant than ever. Consequently, a run-time ML model safety system has been developed to ensure the model's operation within the intended context, especially in applications whose environments are greatly variable, such as Autonomous Vehicles (AVs). SafeML is a model-agnostic approach for performing such monitoring, using distance measures based on statistical testing of the training and operational datasets, comparing them to a predetermined threshold, and returning a binary value indicating whether the model should be trusted in the context of the observed data or be deemed unreliable. Although a systematic framework exists for this approach, its performance is hindered by: (1) a dependency on a number of design parameters that directly affect the selection of a safety threshold and therefore likely affect its robustness, (2) an inherent assumption of certain distributions for the training and operational sets, as well as (3) a high computational complexity for relatively large sets. This work addresses these limitations by changing the binary decision to a continuous metric. Furthermore, all data distribution assumptions are made obsolete by implementing non-parametric approaches, and the computational speed is increased by introducing a new distance measure based on the Empirical Characteristic Function (ECF).
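The idea of a continuous, non-parametric distance built on the empirical characteristic function can be illustrated with a minimal sketch. It is not the paper's measure: the mean squared gap, the frequency grid `freqs`, and the function names `ecf` and `ecf_distance` are all assumptions made for illustration.

```python
import cmath

def ecf(sample, t):
    """Empirical characteristic function of a 1-D sample at frequency t."""
    return sum(cmath.exp(1j * t * x) for x in sample) / len(sample)

def ecf_distance(train, operational, freqs):
    """Mean squared gap between the two ECFs over a frequency grid.

    Illustrative choice only; the grid and the aggregation are assumptions.
    """
    gaps = [abs(ecf(train, t) - ecf(operational, t)) ** 2 for t in freqs]
    return sum(gaps) / len(gaps)

# Hypothetical frequency grid and toy data.
freqs = [0.1 * k for k in range(1, 21)]
train = [0.0, 0.5, 1.0, 1.5, 2.0]
same = ecf_distance(train, train, freqs)
shifted = ecf_distance(train, [x + 3.0 for x in train], freqs)
print(same, shifted)
```

Because each ECF value has modulus at most 1, this score stays between 0 and 4, so it can be reported as a graded value rather than a trusted/untrusted flag.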

On Explainability in AI-Solutions: A Cross-Domain Survey

Oct 11, 2022

Abstract: Artificial Intelligence (AI) increasingly shows its potential to outperform predicate logic algorithms and human control alike. In automatically deriving a system model, AI algorithms learn relations in data that are not detectable by humans. This great strength, however, also makes the use of AI methods dubious. The more complex a model, the more difficult it is for a human to understand the reasoning behind its decisions. As fully automated AI algorithms are currently rare, every algorithm has to provide a reasoning for human operators. For data engineers, metrics such as accuracy and sensitivity are sufficient. However, if models are interacting with non-experts, explanations have to be understandable. This work provides an extensive survey of literature on this topic, which, to a large part, consists of other surveys. The findings are mapped to ways of explaining decisions and reasons for explaining decisions. It shows that the heterogeneity of reasons for and methods of explainability leads to individual explanatory frameworks.

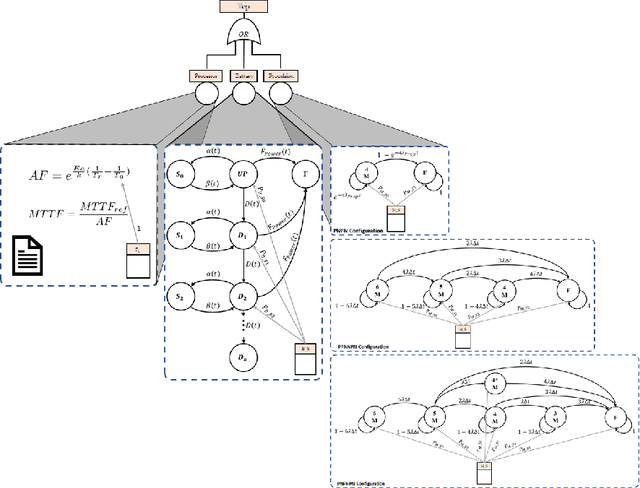

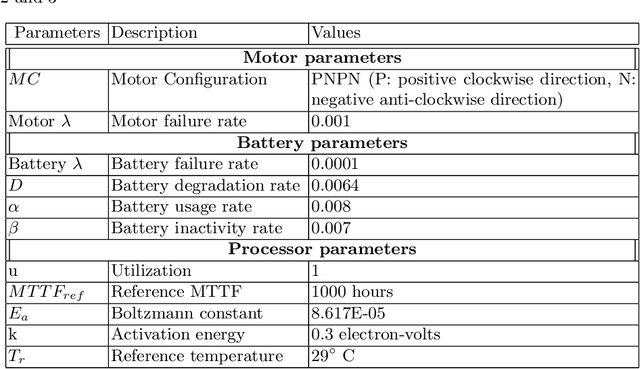

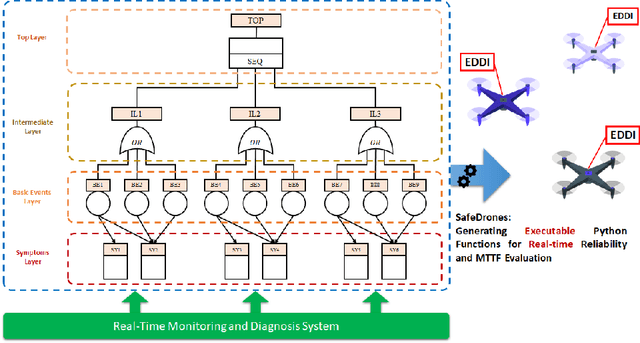

SafeDrones: Real-Time Reliability Evaluation of UAVs using Executable Digital Dependable Identities

Jul 12, 2022

Abstract: The use of Unmanned Aerial Vehicles (UAVs) offers many advantages across a variety of applications. However, safety assurance is a key barrier to widespread usage, especially given the unpredictable operational and environmental factors experienced by UAVs, which are hard to capture solely at design-time. This paper proposes a new reliability modeling approach called SafeDrones to help address this issue by enabling runtime reliability and risk assessment of UAVs. It is a prototype instantiation of the Executable Digital Dependable Identity (EDDI) concept, which aims to create a model-based solution for real-time, data-driven dependability assurance for multi-robot systems. By providing real-time reliability estimates, SafeDrones allows UAVs to update their missions accordingly in an adaptive manner.

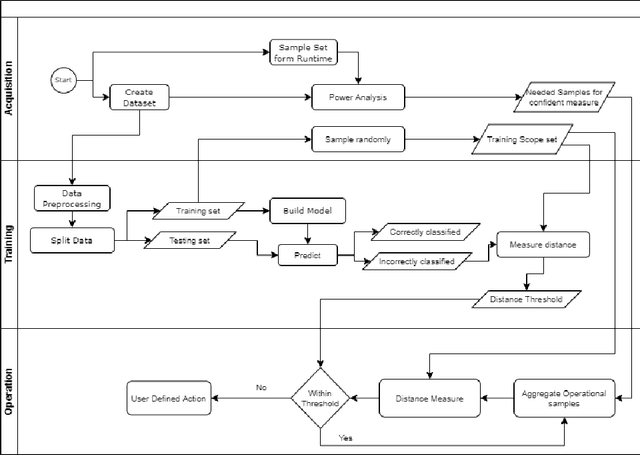

Keep your Distance: Determining Sampling and Distance Thresholds in Machine Learning Monitoring

Jul 11, 2022

Abstract: Machine Learning (ML) has provided promising results in recent years across different applications and domains. However, in many cases, qualities such as reliability or even safety need to be ensured. To this end, one important aspect is to determine whether or not ML components are deployed in situations that are appropriate for their application scope. For components whose environments are open and variable, for instance those found in autonomous vehicles, it is therefore important to monitor their operational situation to determine its distance from the ML components' trained scope. If that distance is deemed too great, the application may choose to consider the ML component outcome unreliable and switch to alternatives, e.g. using human operator input instead. SafeML is a model-agnostic approach for performing such monitoring, using distance measures based on statistical testing of the training and operational datasets. Limitations in setting SafeML up properly include the lack of a systematic approach for determining, for a given application, how many operational samples are needed to yield reliable distance information as well as to determine an appropriate distance threshold. In this work, we address these limitations by providing a practical approach and demonstrate its use in a well-known traffic sign recognition problem, and on an example using the CARLA open-source automotive simulator.
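The monitoring loop described above, a statistical distance between training and operational samples compared against a threshold, might be sketched as follows. The Kolmogorov-Smirnov statistic stands in here as one familiar ECDF-based distance; the abstract does not name the measures used, and the function names, the threshold value, and the toy data are assumptions.

```python
from bisect import bisect_right

def ecdf(sample):
    """Return the empirical CDF of a 1-D sample as a callable."""
    data = sorted(sample)
    n = len(data)
    return lambda x: bisect_right(data, x) / n

def ks_distance(train, operational):
    """Kolmogorov-Smirnov statistic: largest gap between the two ECDFs.

    Both ECDFs are right-continuous step functions, so checking the
    pooled sample points is enough to find the maximum gap.
    """
    f, g = ecdf(train), ecdf(operational)
    points = sorted(set(train) | set(operational))
    return max(abs(f(x) - g(x)) for x in points)

def is_in_scope(train, operational, threshold):
    """Hypothetical decision rule: trust the model if the distance is small."""
    return ks_distance(train, operational) <= threshold

train = [1, 2, 3, 4]
print(ks_distance(train, [1, 2, 3, 4]))        # identical samples
print(ks_distance(train, [10, 11, 12, 13]))    # disjoint samples
print(is_in_scope(train, [10, 11, 12, 13], threshold=0.3))
```

How many operational samples to collect and where to place `threshold` are exactly the open choices the abstract refers to; the values above are placeholders.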

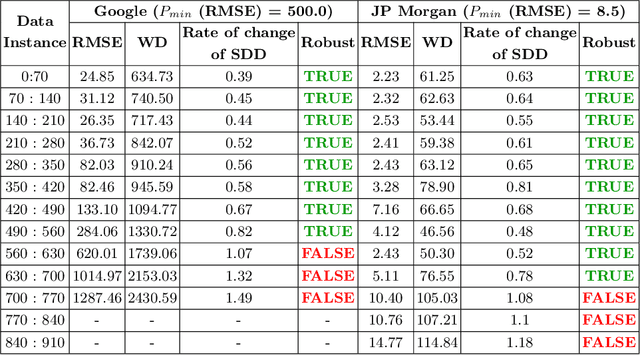

StaDRe and StaDRo: Reliability and Robustness Estimation of ML-based Forecasting using Statistical Distance Measures

Jun 17, 2022

Abstract: Reliability estimation of Machine Learning (ML) models is becoming a crucial subject. This is particularly the case when such models are deployed in safety-critical applications, as the decisions based on model predictions can result in hazardous situations. In this regard, recent research has proposed methods to achieve safe, dependable, and reliable ML systems. One such method consists of detecting and analyzing distributional shift, and then measuring how such systems respond to these shifts. This was proposed in earlier work in SafeML. This work focuses on the use of SafeML for time series data, and on reliability and robustness estimation of ML-forecasting methods using statistical distance measures. To this end, distance measures based on the Empirical Cumulative Distribution Function (ECDF) proposed in SafeML are explored to measure Statistical-Distance Dissimilarity (SDD) across time series. We then propose SDD-based Reliability Estimate (StaDRe) and SDD-based Robustness (StaDRo) measures. With the help of a clustering technique, the similarity between the statistical properties of data seen during training and the forecasts is identified. The proposed method is capable of providing a link between dataset SDD and Key Performance Indicators (KPIs) of the ML models.

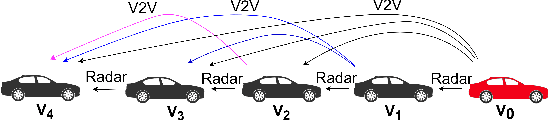

Finite State Markov Modeling of C-V2X Erasure Links For Performance and Stability Analysis of Platooning Applications

Nov 13, 2021

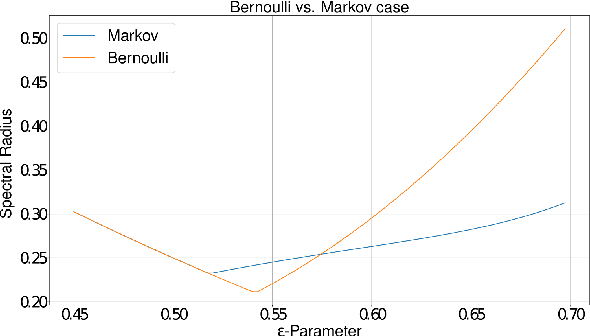

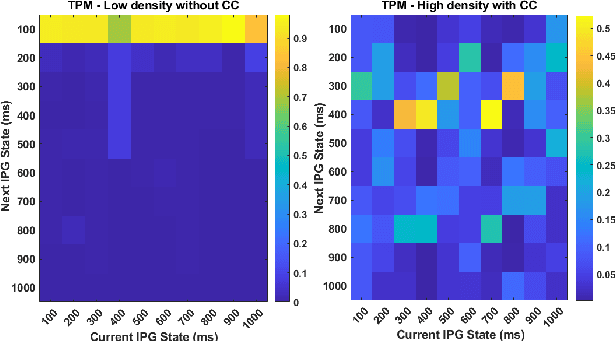

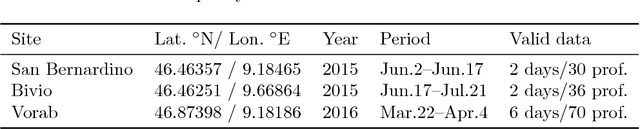

Abstract: Cooperative driving systems, such as platooning, rely on communication and information exchange to create situational awareness for each agent. Design and performance of control components are therefore tightly coupled with communication component performance. The information flow between vehicles can significantly affect the dynamics of a platoon. Therefore, both the performance and the stability of a platoon depend not only on the vehicle's controller but also on the Information Flow Topology (IFT). The IFT can cause limitations for certain platoon properties, i.e., stability and scalability. Cellular Vehicle-To-Everything (C-V2X) has emerged as one of the main communication technologies to support connected and automated vehicle applications. As a result of packet loss, wireless channels create random link interruptions and changes in network topologies. In this paper, we model the communication links between vehicles with a first-order Markov model to capture the prevalent time correlations for each link. These models enable performance evaluation through better approximation of communication links during system design stages. Our approach is to use data from experiments to model the Inter-Packet Gap (IPG) using Markov chains and derive transition probability matrices for consecutive IPG states. Training data is collected from high-fidelity simulations using models derived from empirical data for a variety of different vehicle densities and communication rates. Utilizing the IPG models, we analyze the mean-square stability of a platoon of vehicles with the standard consensus protocol tuned for ideal communication and compare the degradation in performance for different scenarios.
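Deriving a transition probability matrix for consecutive IPG states can be sketched as a simple counting estimate over an observed state sequence. The state encoding, the example sequence, and the uniform fallback for unvisited states are assumptions for illustration, not details taken from the paper.

```python
def transition_matrix(states, n_states):
    """Estimate a first-order Markov transition matrix from a state sequence.

    Entry [i][j] is the observed fraction of transitions from state i
    that went to state j.
    """
    counts = [[0] * n_states for _ in range(n_states)]
    for cur, nxt in zip(states, states[1:]):
        counts[cur][nxt] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        if total:
            matrix.append([c / total for c in row])
        else:
            # Assumption: a state never left in the data gets a uniform row.
            matrix.append([1 / n_states] * n_states)
    return matrix

# Hypothetical sequence of discretised IPG states (0 = shortest gap).
ipg_states = [0, 0, 1, 0, 2, 2, 0, 0, 1, 1, 0]
P = transition_matrix(ipg_states, 3)
for row in P:
    print(row)
```

Each row of `P` is a probability distribution over the next IPG state, which is the form needed to simulate link interruptions during a stability analysis.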

Towards Fully Environment-Aware UAVs: Real-Time Path Planning with Online 3D Wind Field Prediction in Complex Terrain

Dec 10, 2017

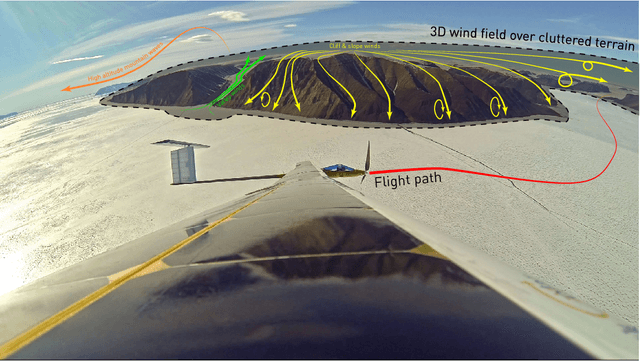

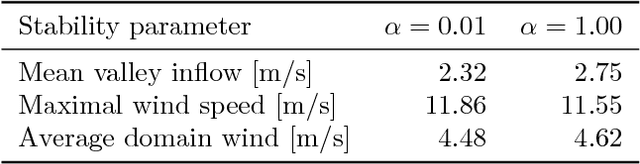

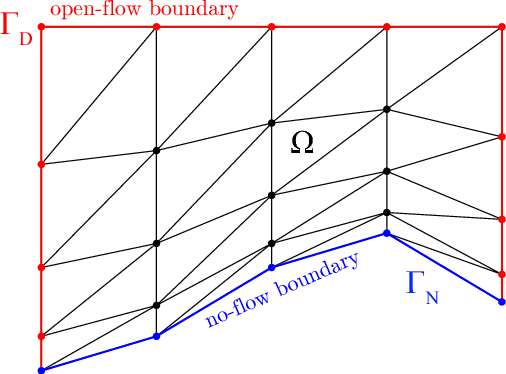

Abstract: Today, low-altitude fixed-wing Unmanned Aerial Vehicles (UAVs) are largely limited to primitively following user-defined waypoints. To allow fully-autonomous remote missions in complex environments, real-time environment-aware navigation is required both with respect to terrain and strong wind drafts. This paper presents two relevant initial contributions: First, the literature's first-ever 3D wind field prediction method which can run in real time onboard a UAV is presented. The approach retrieves low-resolution global weather data, and uses potential flow theory to adjust the wind field such that terrain boundaries, mass conservation, and the atmospheric stratification are observed. A comparison with 1D LIDAR data shows an overall wind error reduction of 23% with respect to the zero-wind assumption that is mostly used for UAV path planning today. However, given that the vertical winds are not resolved accurately enough, further research is required and identified. Second, a sampling-based path planner that considers the aircraft dynamics in non-uniform wind iteratively via Dubins airplane paths is presented. Performance optimizations, e.g. obstacle-aware sampling and fast 2.5D-map collision checks, render the planner 50% faster than the Open Motion Planning Library (OMPL) implementation. Test cases in Alpine terrain show that the wind-aware planning performs up to 50x fewer iterations than shortest-path planning and is thus slower in low winds, but that it tends to deliver lower-cost paths in stronger winds. More importantly, in contrast to the shortest-path planner, it always delivers collision-free paths. Overall, our initial research demonstrates the feasibility of 3D wind field prediction from a UAV and the advantages of wind-aware planning. This paves the way for follow-up research on fully-autonomous environment-aware navigation of UAVs in real-life missions and complex terrain.

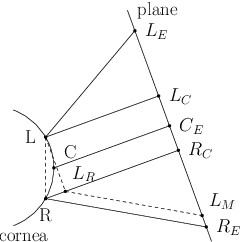

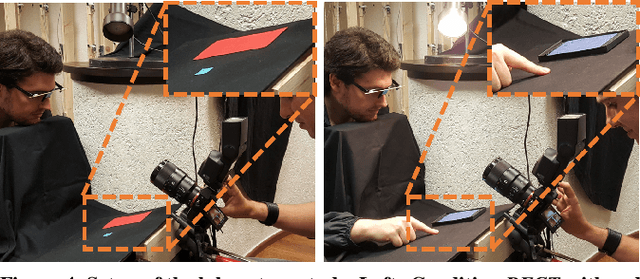

Towards Around-Device Interaction using Corneal Imaging

Sep 04, 2017

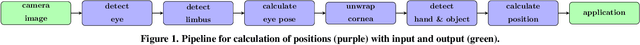

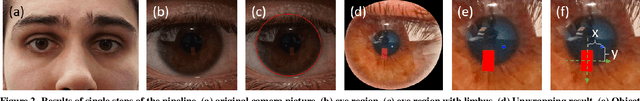

Abstract: Around-device interaction techniques aim at extending the input space using various sensing modalities on mobile and wearable devices. In this paper, we present our work towards extending the input area of mobile devices using front-facing device-centered cameras that capture reflections in the human eye. As current-generation mobile devices lack high-resolution front-facing cameras, we study the feasibility of around-device interaction using corneal reflective imaging based on a high-resolution camera. We present a workflow, a technical prototype and an evaluation, including a migration path from high-resolution to low-resolution imagers. Our study indicates that under optimal conditions a spatial sensing resolution of 5 cm in the vicinity of a mobile phone is possible.
