Jan Reich

Causal Bayesian Networks for Data-driven Safety Analysis of Complex Systems

May 26, 2025

Abstract: Ensuring safe operation of safety-critical complex systems interacting with their environment poses significant challenges, particularly when the system's world model relies on machine learning algorithms to process the perception input. A comprehensive safety argumentation requires knowledge of how faults or functional insufficiencies propagate through the system and interact with external factors, to manage their safety impact. While statistical analysis approaches can support the safety assessment, associative reasoning alone is sufficient neither for the safety argumentation nor for the identification and investigation of safety measures. A causal understanding of the system and its interaction with the environment is crucial for safeguarding safety-critical complex systems. It allows knowledge, such as insights gained from testing, to be transferred and generalized, and facilitates the identification of potential improvements. This work explores using causal Bayesian networks to model the system's causalities for safety analysis, and proposes measures to assess causal influences based on Pearl's framework of causal inference. We compare the approach of causal Bayesian networks to the well-established fault tree analysis, outlining advantages and limitations. In particular, we examine importance metrics typically employed in fault tree analysis as a foundation to discuss suitable causal metrics. An evaluation is performed on the example of a perception system for automated driving. Overall, this work presents an approach for causal reasoning in safety analysis that enables the integration of data-driven and expert-based knowledge to account for uncertainties arising from complex systems operating in open environments.
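The gap between associative and causal reasoning described in this abstract can be illustrated with a minimal sketch. It is not the paper's model or one of its proposed measures: the three-node network, the variable roles (Z as an environmental condition, X as a perception insufficiency, Y as a hazardous outcome), and all probabilities are assumptions made up for illustration. The interventional quantity uses the standard backdoor adjustment from Pearl's framework.

```python
# Toy causal Bayesian network: Z -> X, Z -> Y, X -> Y.
# All names and numbers are hypothetical, chosen only for illustration.
P_Z = {0: 0.7, 1: 0.3}                      # P(Z = z)
P_X1_GIVEN_Z = {0: 0.1, 1: 0.6}             # P(X = 1 | Z = z)
P_Y1_GIVEN_XZ = {                           # P(Y = 1 | X = x, Z = z)
    (0, 0): 0.01, (1, 0): 0.10,
    (0, 1): 0.05, (1, 1): 0.30,
}


def p_x_given_z(x, z):
    """P(X = x | Z = z) for binary X."""
    p1 = P_X1_GIVEN_Z[z]
    return p1 if x == 1 else 1.0 - p1


def observational(x):
    """Associative quantity P(Y = 1 | X = x), obtained by conditioning."""
    joint = {z: P_Z[z] * p_x_given_z(x, z) for z in P_Z}  # P(Z = z, X = x)
    p_x = sum(joint.values())
    return sum(P_Y1_GIVEN_XZ[(x, z)] * joint[z] / p_x for z in P_Z)


def interventional(x):
    """Causal quantity P(Y = 1 | do(X = x)) via backdoor adjustment over Z."""
    return sum(P_Y1_GIVEN_XZ[(x, z)] * P_Z[z] for z in P_Z)


def average_causal_effect():
    """P(Y = 1 | do(X = 1)) - P(Y = 1 | do(X = 0))."""
    return interventional(1) - interventional(0)


if __name__ == "__main__":
    print("P(Y=1 | X=1)     =", round(observational(1), 3))
    print("P(Y=1 | do(X=1)) =", round(interventional(1), 3))
    print("ACE              =", round(average_causal_effect(), 3))
```

With these made-up numbers, conditioning on X overstates its effect on Y because Z influences both, which is the kind of discrepancy that purely associative analysis cannot expose.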

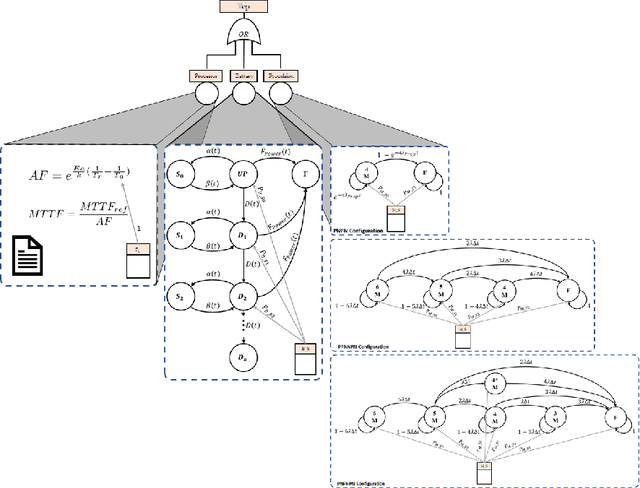

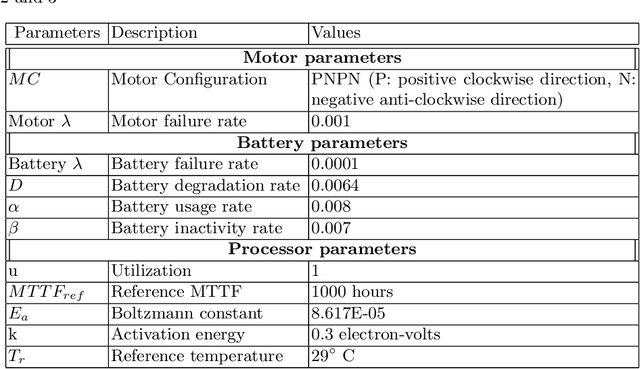

SafeDrones: Real-Time Reliability Evaluation of UAVs using Executable Digital Dependable Identities

Jul 12, 2022

Abstract: The use of Unmanned Aerial Vehicles (UAVs) offers many advantages across a variety of applications. However, safety assurance is a key barrier to widespread usage, especially given the unpredictable operational and environmental factors experienced by UAVs, which are hard to capture solely at design-time. This paper proposes a new reliability modeling approach called SafeDrones to help address this issue by enabling runtime reliability and risk assessment of UAVs. It is a prototype instantiation of the Executable Digital Dependable Identity (EDDI) concept, which aims to create a model-based solution for real-time, data-driven dependability assurance for multi-robot systems. By providing real-time reliability estimates, SafeDrones allows UAVs to update their missions accordingly in an adaptive manner.

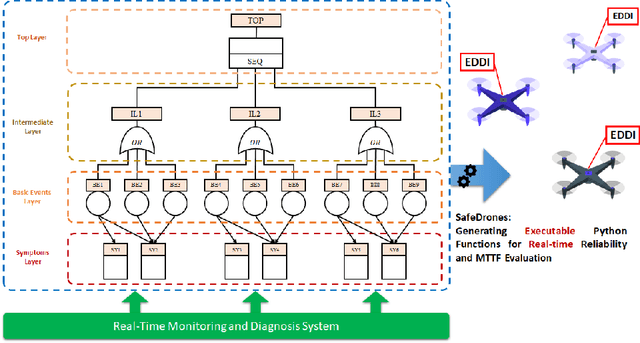

Architectural patterns for handling runtime uncertainty of data-driven models in safety-critical perception

Jun 14, 2022

Abstract: Data-driven models (DDMs) based on machine learning and other AI techniques play an important role in the perception of increasingly autonomous systems. Because their behavior is defined only implicitly, mainly by the data used for training, DDM outputs are subject to uncertainty. This poses a challenge with respect to the realization of safety-critical perception tasks by means of DDMs. A promising approach to tackling this challenge is to estimate the uncertainty in the current situation during operation and adapt the system behavior accordingly. In previous work, we focused on runtime estimation of uncertainty and discussed approaches for handling uncertainty estimations. In this paper, we present additional architectural patterns for handling uncertainty. Furthermore, we evaluate the four patterns qualitatively and quantitatively with respect to safety and performance gains. For the quantitative evaluation, we consider a distance controller for vehicle platooning where performance gains are measured by considering how much the distance can be reduced in different operational situations. We conclude that the consideration of context information of the driving situation makes it possible to accept more or less uncertainty depending on the inherent risk of the situation, which results in performance gains.

Integrating Testing and Operation-related Quantitative Evidences in Assurance Cases to Argue Safety of Data-Driven AI/ML Components

Feb 10, 2022

Abstract: In the future, AI will increasingly find its way into systems that can potentially cause physical harm to humans. For such safety-critical systems, it must be demonstrated that their residual risk does not exceed what is acceptable. This includes, in particular, the AI components that are part of such systems' safety-related functions. Assurance cases are an intensively discussed option today for specifying a sound and comprehensive safety argument to demonstrate a system's safety. In previous work, it has been suggested to argue safety for AI components by structuring assurance cases based on two complementary risk acceptance criteria. One of these criteria is used to derive quantitative targets regarding the AI. The argumentation structures commonly proposed to show the achievement of such quantitative targets, however, focus on failure rates from statistical testing. Further important aspects are only considered in a qualitative manner, if at all. In contrast, this paper proposes a more holistic argumentation structure for having achieved the target, namely a structure that integrates test results with runtime aspects and the impact of scope compliance and test data quality in a quantitative manner. We elaborate on different argumentation options, present the underlying mathematical considerations, and discuss resulting implications for their practical application. Using the proposed argumentation structure might not only increase the integrity of assurance cases but may also allow claims on quantitative targets that would not be justifiable otherwise.
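As background for the "failure rates from statistical testing" that this abstract takes as its starting point, the following is a minimal sketch of the textbook zero-failure bound. It is not the argumentation structure the paper proposes. It assumes independent test cases drawn from the operational distribution and no observed failures; the function names and the example target are hypothetical.

```python
import math


def zero_failure_upper_bound(n_tests, confidence):
    """Upper confidence bound on the per-demand failure probability
    after n_tests independent, failure-free test cases.

    Solves (1 - p) ** n_tests = 1 - confidence for p.
    """
    if n_tests <= 0:
        raise ValueError("n_tests must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / n_tests)


def required_failure_free_tests(target_probability, confidence):
    """Smallest number of failure-free tests whose upper bound
    does not exceed target_probability at the given confidence."""
    if not 0.0 < target_probability < 1.0:
        raise ValueError("target_probability must lie strictly between 0 and 1")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - target_probability))


if __name__ == "__main__":
    # Hypothetical target: failure probability of at most 1e-3 at 95% confidence.
    n = required_failure_free_tests(1e-3, 0.95)
    print(n, zero_failure_upper_bound(n, 0.95))
```

A bound of this kind covers only the test evidence itself; it says nothing about runtime aspects, scope compliance, or test data quality, which are the factors the abstract argues should also enter the argument quantitatively.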

Phänomen-Signal-Modell: Formalismus, Graph und Anwendung

Jul 31, 2021

Abstract: If we consider information as the basis of action, it may be of interest to examine the flow and acquisition of information between the actors in traffic. The central question is which signals an automaton has to receive, decode, or send in road traffic in order to act safely and in conformance with applicable standards. The phenomenon-signal-model is a method to structure the problem and to analyze and describe this very signal flow. Explaining the basics, structure, and application of this method is the aim of this paper.

German original: Betrachtet man Information als Grundlage des Handelns, so wird es interessant sein, Fluss und Erfassung von Information zwischen den Akteuren des Verkehrsgeschehens zu untersuchen. Die zentrale Frage ist, welche Signale ein Automat im Straßenverkehr empfangen, decodieren oder senden muss, um konform zu geltenden Maßstäben und sicher zu agieren. Das Phänomen-Signal-Modell ist eine Methode, das Problemfeld zu strukturieren, eben diesen Signalfluss zu analysieren und zu beschreiben. Der vorliegende Aufsatz erklärt Grundlagen, Aufbau und Anwendung dieser Methode.
