Koorosh Aslansefat

HybridVFL: Disentangled Feature Learning for Edge-Enabled Vertical Federated Multimodal Classification

Dec 11, 2025

Abstract: Vertical Federated Learning (VFL) offers a privacy-preserving paradigm for Edge AI scenarios like mobile health diagnostics, where sensitive multimodal data reside on distributed, resource-constrained devices. Yet, standard VFL systems often suffer performance limitations due to simplistic feature fusion. This paper introduces HybridVFL, a novel framework designed to overcome this bottleneck by employing client-side feature disentanglement paired with a server-side cross-modal transformer for context-aware fusion. Through systematic evaluation on the multimodal HAM10000 skin lesion dataset, we demonstrate that HybridVFL significantly outperforms standard federated baselines, validating the criticality of advanced fusion mechanisms in robust, privacy-preserving systems.

Skewness-Guided Pruning of Multimodal Swin Transformers for Federated Skin Lesion Classification on Edge Devices

Dec 09, 2025

Abstract: In recent years, high-performance computer vision models have achieved remarkable success in medical imaging, with some skin lesion classification systems even surpassing dermatology specialists in diagnostic accuracy. However, such models are computationally intensive and large in size, making them unsuitable for deployment on edge devices. In addition, strict privacy constraints hinder centralized data management, motivating the adoption of Federated Learning (FL). To address these challenges, this study proposes a skewness-guided pruning method that selectively prunes the Multi-Head Self-Attention and Multi-Layer Perceptron layers of a multimodal Swin Transformer based on the statistical skewness of their output distributions. The proposed method was validated in a horizontal FL environment and shown to maintain performance while substantially reducing model complexity. Experiments on the compact Swin Transformer demonstrate approximately 36% model size reduction with no loss in accuracy. These findings highlight the feasibility of achieving efficient model compression and privacy-preserving distributed learning for multimodal medical AI on edge devices.
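As a rough illustration of the idea of ranking layers by the skewness of their outputs, the following is a minimal sketch only. The abstract does not state the pruning criterion, so the rule used here (flag layers whose absolute skewness falls below a threshold) and the names `select_layers_to_prune`, `mlp_1`, and `msa_1` are assumptions made for illustration, not the paper's method.

```python
from statistics import fmean


def skewness(values):
    """Population skewness: third central moment divided by std cubed."""
    n = len(values)
    mean = fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    if m2 == 0:
        return 0.0  # constant output: treat as unskewed
    return m3 / m2 ** 1.5


def select_layers_to_prune(layer_outputs, threshold):
    """Hypothetical criterion: flag layers whose |skewness| is below threshold.

    layer_outputs maps a layer name to a flat list of sampled output values.
    """
    return [
        name
        for name, outputs in layer_outputs.items()
        if abs(skewness(outputs)) < threshold
    ]


# Toy activations standing in for sampled MLP / MSA layer outputs.
sampled = {
    "mlp_1": [1.0, 2.0, 3.0],        # symmetric, skewness 0
    "msa_1": [0.0, 0.0, 0.0, 10.0],  # strongly right-skewed
}
candidates = select_layers_to_prune(sampled, threshold=0.5)
```

In a real model the sampled values would come from forward passes over calibration data, and the direction of the criterion would need to follow the paper.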

Mitigating Individual Skin Tone Bias in Skin Lesion Classification through Distribution-Aware Reweighting

Dec 09, 2025

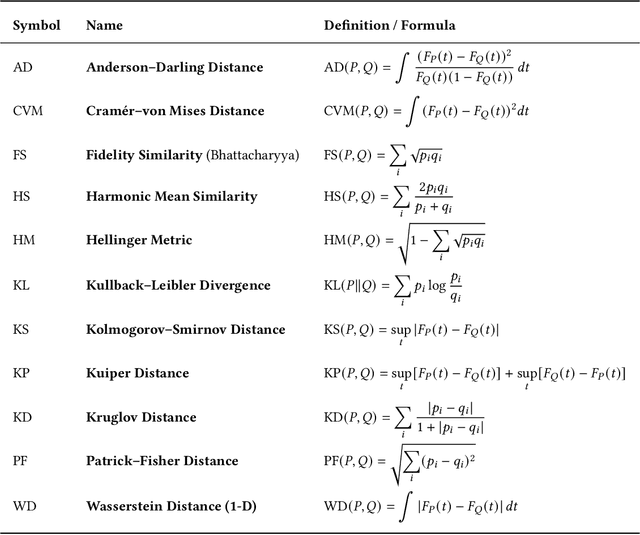

Abstract: Skin color has historically been a focal point of discrimination, yet fairness research in machine learning for medical imaging often relies on coarse subgroup categories, overlooking individual-level variations. Such group-based approaches risk obscuring biases faced by outliers within subgroups. This study introduces a distribution-based framework for evaluating and mitigating individual fairness in skin lesion classification. We treat skin tone as a continuous attribute rather than a categorical label, and employ kernel density estimation (KDE) to model its distribution. We further compare twelve statistical distance metrics to quantify disparities between skin tone distributions and propose a distance-based reweighting (DRW) loss function to correct underrepresentation in minority tones. Experiments across CNN and Transformer models demonstrate: (i) the limitations of categorical reweighting in capturing individual-level disparities, and (ii) the superior performance of distribution-based reweighting, particularly with Fidelity Similarity (FS), Wasserstein Distance (WD), Hellinger Metric (HM), and Harmonic Mean Similarity (HS). These findings establish a robust methodology for advancing fairness at the individual level in dermatological AI systems, and highlight broader implications for sensitive continuous attributes in medical image analysis.
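To make the KDE and distance steps concrete, here is a small sketch that estimates two skin tone distributions with a Gaussian KDE on a grid and compares them with the Hellinger distance, one of the metrics named above. The tone values, the [0, 1] scale, the bandwidth, and the function names are all assumptions for illustration. The DRW loss itself is not specified in the abstract and is not reproduced here.

```python
import math


def gaussian_kde(samples, grid, bandwidth):
    """Evaluate a Gaussian KDE on grid points and normalise to sum to 1."""
    density = [
        sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)
        for x in grid
    ]
    total = sum(density)
    return [d / total for d in density]


def hellinger(p, q):
    """Hellinger distance between two discrete distributions on the same grid."""
    # The inner sum is the Bhattacharyya coefficient (fidelity similarity).
    fidelity = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return math.sqrt(max(0.0, 1.0 - fidelity))


# Hypothetical tone values on a normalised [0, 1] scale.
grid = [i / 100 for i in range(101)]
group_a = gaussian_kde([0.20, 0.25, 0.30], grid, bandwidth=0.05)
group_b = gaussian_kde([0.70, 0.75, 0.80], grid, bandwidth=0.05)

same = hellinger(group_a, group_a)      # close to 0
different = hellinger(group_a, group_b)  # close to 1 for separated groups
```

A distance of this kind could then feed a per-sample weight, but how the paper maps distances to loss weights would have to be taken from the paper itself.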

Explainable Knowledge Graph Retrieval-Augmented Generation (KG-RAG) with KG-SMILE

Sep 03, 2025

Abstract: Generative AI, such as Large Language Models (LLMs), has achieved impressive progress but still produces hallucinations and unverifiable claims, limiting reliability in sensitive domains. Retrieval-Augmented Generation (RAG) improves accuracy by grounding outputs in external knowledge, especially in domains like healthcare, where precision is vital. However, RAG remains opaque and essentially a black box, heavily dependent on data quality. We developed a method-agnostic, perturbation-based framework that provides token and component-level interpretability for Graph RAG using SMILE and named it Knowledge-Graph (KG)-SMILE. By applying controlled perturbations, computing similarities, and training weighted linear surrogates, KG-SMILE identifies the graph entities and relations most influential to generated outputs, thereby making RAG more transparent. We evaluate KG-SMILE using comprehensive attribution metrics, including fidelity, faithfulness, consistency, stability, and accuracy. Our findings show that KG-SMILE produces stable, human-aligned explanations, demonstrating its capacity to balance model effectiveness with interpretability and thereby fostering greater transparency and trust in machine learning technologies.

RAGuard: A Novel Approach for in-context Safe Retrieval Augmented Generation for LLMs

Sep 03, 2025

Abstract: Accuracy and safety are paramount in Offshore Wind (OSW) maintenance, yet conventional Large Language Models (LLMs) often fail when confronted with highly specialised or unexpected scenarios. We introduce RAGuard, an enhanced Retrieval-Augmented Generation (RAG) framework that explicitly integrates safety-critical documents alongside technical manuals. By issuing parallel queries to two indices and allocating separate retrieval budgets for knowledge and safety, RAGuard guarantees both technical depth and safety coverage. We further develop a SafetyClamp extension that fetches a larger candidate pool, "hard-clamping" exact slot guarantees to safety. We evaluate across sparse (BM25), dense (Dense Passage Retrieval) and hybrid retrieval paradigms, measuring Technical Recall@K and Safety Recall@K. Both proposed extensions of RAG show an increase in Safety Recall@K from almost 0% in RAG to more than 50% in RAGuard, while maintaining Technical Recall above 60%. These results demonstrate that RAGuard and SafetyClamp have the potential to establish a new standard for integrating safety assurance into LLM-powered decision support in critical maintenance contexts.
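The dual-index retrieval with separate budgets described above can be sketched roughly as follows. This is an illustrative toy, not the RAGuard implementation: the word-overlap scorer stands in for BM25 or dense retrieval, and the function names, documents, and budget values are hypothetical.

```python
def tokenize(text):
    """Lowercase whitespace tokenisation into a set of words."""
    return set(text.lower().split())


def top_k(query, docs, k):
    """Rank docs by word overlap with the query (toy stand-in for BM25/DPR)."""
    query_words = tokenize(query)
    ranked = sorted(
        docs,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,  # sorted() is stable, so ties keep their input order
    )
    return ranked[:k]


def dual_index_retrieve(query, knowledge_docs, safety_docs, k_knowledge, k_safety):
    """Query both indices and reserve a fixed number of slots for each."""
    knowledge_hits = top_k(query, knowledge_docs, k_knowledge)
    safety_hits = top_k(query, safety_docs, k_safety)
    return knowledge_hits + safety_hits


knowledge = [
    "gearbox oil change procedure",
    "blade inspection checklist",
    "yaw motor torque settings",
]
safety = [
    "lock out tag out before gearbox work",
    "working at height harness rules",
]
context = dual_index_retrieve("gearbox oil change", knowledge, safety, 2, 1)
```

Because the safety budget is allocated separately, a safety passage reaches the context even when technical passages would outscore it in a single shared ranking.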

Safer Skin Lesion Classification with Global Class Activation Probability Map Evaluation and SafeML

Aug 28, 2025

Abstract: Recent advancements in skin lesion classification models have significantly improved accuracy, with some models even surpassing dermatologists' diagnostic performance. However, in medical practice, distrust in AI models remains a challenge. Beyond high accuracy, trustworthy, explainable diagnoses are essential. Existing explainability methods have reliability issues: LIME-based methods suffer from inconsistency, while CAM-based methods fail to consider all classes. To address these limitations, we propose Global Class Activation Probabilistic Map Evaluation, a method that analyses all classes' activation probability maps probabilistically and at a pixel level. By visualizing the diagnostic process in a unified manner, it helps reduce the risk of misdiagnosis. Furthermore, the application of SafeML enhances the detection of false diagnoses and issues warnings to doctors and patients as needed, improving diagnostic reliability and ultimately patient safety. We evaluated our method using the ISIC datasets with MobileNetV2 and Vision Transformers.

Evaluating Fairness and Mitigating Bias in Machine Learning: A Novel Technique using Tensor Data and Bayesian Regression

Jun 13, 2025

Abstract: Fairness is a critical component of Trustworthy AI. In this paper, we focus on Machine Learning (ML) and the performance of model predictions when dealing with skin color. Unlike other sensitive attributes, the nature of skin color differs significantly. In computer vision, skin color is represented as tensor data rather than categorical values or single numerical points. However, much of the research on fairness across sensitive groups has focused on categorical features such as gender and race. This paper introduces a new technique for evaluating fairness in ML for image classification tasks, specifically without the use of annotation. To address the limitations of prior work, we handle tensor data, like skin color, without classifying it rigidly. Instead, we convert it into probability distributions and apply statistical distance measures. This novel approach allows us to capture fine-grained nuances in fairness both within and across what would traditionally be considered distinct groups. Additionally, we propose an innovative training method to mitigate the latent biases present in conventional skin tone categorization. This method leverages color distance estimates calculated through Bayesian regression with polynomial functions, ensuring a more nuanced and equitable treatment of skin color in ML models.

Incorporating Failure of Machine Learning in Dynamic Probabilistic Safety Assurance

Jun 07, 2025

Abstract: Machine Learning (ML) models are increasingly integrated into safety-critical systems, such as autonomous vehicle platooning, to enable real-time decision-making. However, their inherent imperfection introduces a new class of failure: reasoning failures often triggered by distributional shifts between operational and training data. Traditional safety assessment methods, which rely on design artefacts or code, are ill-suited for ML components that learn behaviour from data. SafeML was recently proposed to dynamically detect such shifts and assign confidence levels to the reasoning of ML-based components. Building on this, we introduce a probabilistic safety assurance framework that integrates SafeML with Bayesian Networks (BNs) to model ML failures as part of a broader causal safety analysis. This allows for dynamic safety evaluation and system adaptation under uncertainty. We demonstrate the approach on a simulated automotive platooning system with traffic sign recognition. The findings highlight the potential broader benefits of explicitly modelling ML failures in safety assessment.

Explainability of Large Language Models using SMILE: Statistical Model-agnostic Interpretability with Local Explanations

May 27, 2025

Abstract: Large language models like GPT, LLAMA, and Claude have become incredibly powerful at generating text, but they are still black boxes, so it is hard to understand how they decide what to say. That lack of transparency can be problematic, especially in fields where trust and accountability matter. To help with this, we introduce SMILE, a new method that explains how these models respond to different parts of a prompt. SMILE is model-agnostic and works by slightly changing the input, measuring how the output changes, and then highlighting which words had the most impact. It then creates simple visual heat maps showing which parts of a prompt matter the most. We tested SMILE on several leading LLMs and used metrics such as accuracy, consistency, stability, and fidelity to show that it gives clear and reliable explanations. By making these models easier to understand, SMILE brings us one step closer to making AI more transparent and trustworthy.
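The perturb-and-measure loop described above can be illustrated with a deliberately simplified sketch. It uses leave-one-word-out perturbations and a Jaccard distance between outputs, which is a simpler substitute for SMILE's statistical distances and surrogate model, so it should not be read as the SMILE algorithm. The `toy_model` function is a hypothetical stand-in for an LLM call.

```python
def jaccard_distance(text_a, text_b):
    """1 - |A & B| / |A | B| over the word sets of two texts."""
    a, b = set(text_a.split()), set(text_b.split())
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def word_importance(prompt, model, distance):
    """Drop each word in turn and score it by how much the output changes."""
    words = prompt.split()
    baseline = model(prompt)
    scores = []
    for i, word in enumerate(words):
        perturbed = " ".join(words[:i] + words[i + 1:])
        scores.append((word, distance(baseline, model(perturbed))))
    return scores


def toy_model(prompt):
    """Hypothetical stand-in for an LLM: answers only if 'weather' is present."""
    return "sunny" if "weather" in prompt.split() else "unknown"


scores = word_importance("what is the weather today", toy_model, jaccard_distance)
```

The resulting per-word scores are the kind of values a heat map over the prompt would visualise.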

Mapping the Mind of an Instruction-based Image Editing using SMILE

Dec 20, 2024

Abstract: Despite recent advancements in Instruction-based Image Editing models for generating high-quality images, these models remain black boxes, which is a significant barrier to transparency and user trust. To solve this issue, we introduce SMILE (Statistical Model-agnostic Interpretability with Local Explanations), a novel model-agnostic method for localized interpretability that provides a visual heatmap to clarify the textual elements' influence on image-generating models. We applied our method to various Instruction-based Image Editing models like Pix2Pix, Image2Image-turbo and Diffusers-Inpaint and showed how our model can improve interpretability and reliability. We also evaluate our method using stability, accuracy, fidelity, and consistency metrics. These findings indicate the exciting potential of model-agnostic interpretability for reliability and trustworthiness in critical applications such as healthcare and autonomous driving while encouraging additional investigation into the significance of interpretability in enhancing dependable image editing models.
