Roman Gansch

Causal Bayesian Networks for Data-driven Safety Analysis of Complex Systems

May 26, 2025

Abstract:Ensuring safe operation of safety-critical complex systems interacting with their environment poses significant challenges, particularly when the system's world model relies on machine learning algorithms to process the perception input. A comprehensive safety argumentation requires knowledge of how faults or functional insufficiencies propagate through the system and interact with external factors, in order to manage their safety impact. While statistical analysis approaches can support the safety assessment, associative reasoning alone is sufficient neither for the safety argumentation nor for the identification and investigation of safety measures. A causal understanding of the system and its interaction with the environment is crucial for safeguarding safety-critical complex systems. It allows knowledge, such as insights gained from testing, to be transferred and generalized, and facilitates the identification of potential improvements. This work explores using causal Bayesian networks to model the system's causalities for safety analysis, and proposes measures to assess causal influences based on Pearl's framework of causal inference. We compare the approach of causal Bayesian networks to the well-established fault tree analysis, outlining advantages and limitations. In particular, we examine importance metrics typically employed in fault tree analysis as a foundation to discuss suitable causal metrics. An evaluation is performed on the example of a perception system for automated driving. Overall, this work presents an approach for causal reasoning in safety analysis that enables the integration of data-driven and expert-based knowledge to account for uncertainties arising from complex systems operating in open environments.
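To make the contrast between associative and causal reasoning concrete, here is a minimal Python sketch of a hypothetical causal Bayesian network. It is not the model from the paper. It assumes a fault-tree top event T = A OR (B AND C) whose basic events A and B share an environmental cause E (for example heavy rain); all node names and probabilities are invented for illustration. The sketch compares the conditional difference P(T | B=1) - P(T | B=0), which is what a Birnbaum-style importance measure yields when read off observational data, with the average causal effect P(T | do(B=1)) - P(T | do(B=0)) obtained from Pearl's truncated factorization.

```python
from itertools import product

# Hypothetical causal Bayesian network (all names and numbers are illustrative):
#   E (environment, e.g. heavy rain) -> A,  E -> B;  C is independent.
#   Top event (fault-tree logic): T = A OR (B AND C).
P_E = 0.3                          # P(E=1)
P_A_GIVEN_E = {0: 0.01, 1: 0.05}   # P(A=1 | E=e)
P_B_GIVEN_E = {0: 0.10, 1: 0.60}   # P(B=1 | E=e)
P_C = 0.2                          # P(C=1)
NODES = ("E", "A", "B", "C")


def top_event(v):
    """Structure function of the fault tree: T = A OR (B AND C)."""
    return bool(v["A"] or (v["B"] and v["C"]))


def bernoulli(p, x):
    return p if x else 1.0 - p


def joint(v, do):
    """Joint probability of assignment v. Nodes in `do` have their factor
    replaced by an indicator (truncated factorization, Pearl's do-operator)."""
    if any(v[node] != value for node, value in do.items()):
        return 0.0
    factors = {
        "E": bernoulli(P_E, v["E"]),
        "A": bernoulli(P_A_GIVEN_E[v["E"]], v["A"]),
        "B": bernoulli(P_B_GIVEN_E[v["E"]], v["B"]),
        "C": bernoulli(P_C, v["C"]),
    }
    p = 1.0
    for node, factor in factors.items():
        p *= 1.0 if node in do else factor
    return p


def prob_top(evidence=None, do=None):
    """P(T=1 | evidence) in the model mutilated by `do`, by exact enumeration."""
    evidence, do = evidence or {}, do or {}
    num = den = 0.0
    for values in product((0, 1), repeat=len(NODES)):
        v = dict(zip(NODES, values))
        if any(v[k] != x for k, x in evidence.items()):
            continue
        p = joint(v, do)
        den += p
        num += p if top_event(v) else 0.0
    return num / den


# Associational: difference of conditional probabilities, as observed in data.
assoc_b = prob_top(evidence={"B": 1}) - prob_top(evidence={"B": 0})
# Causal: average causal effect of forcing B on the top event.
causal_b = prob_top(do={"B": 1}) - prob_top(do={"B": 0})
print(f"associational: {assoc_b:.4f}, causal: {causal_b:.4f}")
```

Running it prints `associational: 0.2146, causal: 0.1956`. The causal value equals the Birnbaum importance that a fault tree with independent basic events would assign to B, P(C) · (1 - P(A)) = 0.2 · 0.978. The conditional difference is inflated because observing B=1 also makes E=1, and hence A, more likely. Exact enumeration only works for tiny networks; larger models would need a dedicated inference library.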

System Theoretic View on Uncertainties

Mar 07, 2023

Abstract:The complexity of the operating environment and of the technologies required for highly automated driving is unprecedented. Besides the threats covered by the fault-error-failure model of Laprie et al., a different type of threat to safe operation arises in the form of performance limitations. We propose a system theoretic approach to handle these and derive a taxonomy based on uncertainty, i.e. lack of knowledge, as a root cause. Uncertainty is a threat to the dependability of a system, as it limits our ability to assess its dependability properties. We distinguish aleatory (inherent to probabilistic models), epistemic (lack of knowledge about model parameters) and ontological (incompleteness of models) uncertainty in order to determine strategies and methods to cope with them. Analogous to the taxonomy of Laprie et al., we cluster methods into uncertainty prevention (use of elements with well-known behavior, avoiding architectures prone to emergent behavior, restriction of the operational design domain, etc.), uncertainty removal (at design time through design of experiments, etc., and after release through field observation, continuous updates, etc.), uncertainty tolerance (use of redundant architectures with diverse uncertainties, uncertainty-aware deep learning, etc.) and uncertainty forecasting (estimation of residual uncertainty, etc.).
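One common way to separate the aleatory and epistemic parts of a learned model's uncertainty at runtime, in the spirit of the uncertainty-aware deep learning listed under uncertainty tolerance, is to use an ensemble. The entropy of the averaged prediction splits into the mean entropy of the members (aleatory) and the mutual information between prediction and member (epistemic, i.e. disagreement about the parameters). The Python sketch below assumes hypothetical ensemble outputs for a two-class perception decision. It is not a method from the paper, and it cannot capture ontological uncertainty, since all members share the same model structure.

```python
import math


def entropy(probs):
    """Shannon entropy (nats) of a discrete distribution."""
    return sum(-p * math.log(p) for p in probs if p > 0.0)


def decompose(member_probs):
    """Split ensemble predictive entropy into aleatory and epistemic parts.

    total     = H[mean_m p_m]      (entropy of the averaged prediction)
    aleatory  = mean_m H[p_m]      (ambiguity every member agrees on)
    epistemic = total - aleatory   (mutual information: member disagreement)
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean = [sum(m[k] for m in member_probs) / n_members for k in range(n_classes)]
    total = entropy(mean)
    aleatory = sum(entropy(m) for m in member_probs) / n_members
    epistemic = max(0.0, total - aleatory)  # clamp tiny negative rounding error
    return total, aleatory, epistemic


# Hypothetical softmax outputs of three ensemble members for one detection.
ambiguous = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]    # all members unsure
disagreeing = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # members contradict each other

for name, probs in (("ambiguous", ambiguous), ("disagreeing", disagreeing)):
    total, aleatory, epistemic = decompose(probs)
    print(f"{name}: total={total:.3f} aleatory={aleatory:.3f} epistemic={epistemic:.3f}")
```

Both inputs yield the same total entropy (ln 2), but the first is attributed entirely to aleatory uncertainty and the second mostly to epistemic uncertainty. A runtime monitor can therefore react differently, for example by requesting more data for epistemic cases.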

Grasping Causality for the Explanation of Criticality for Automated Driving

Oct 27, 2022

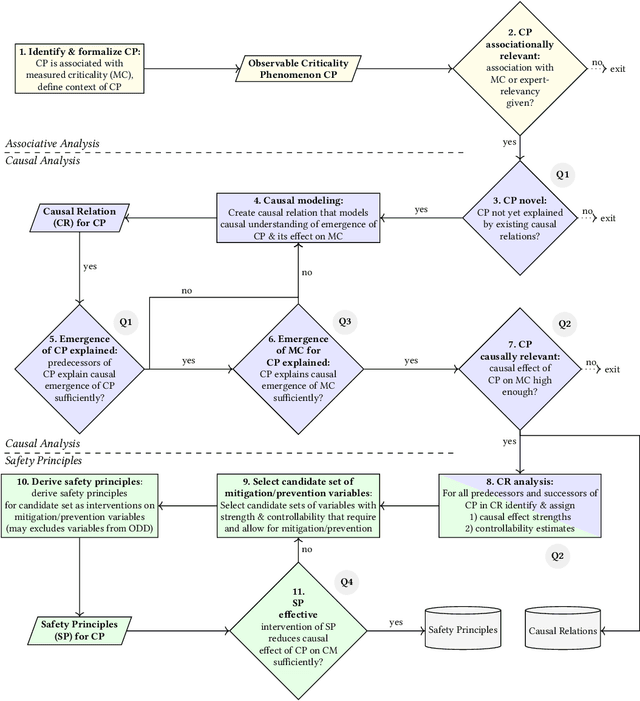

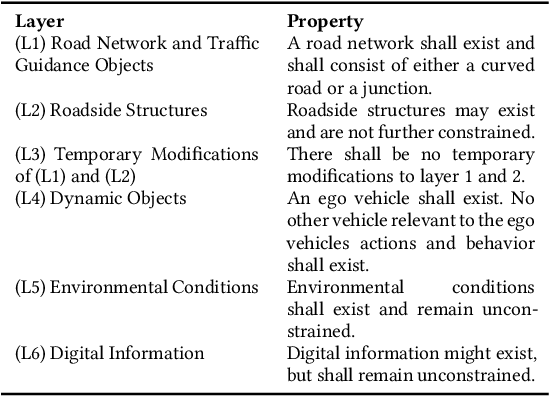

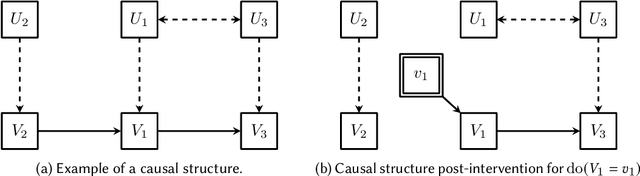

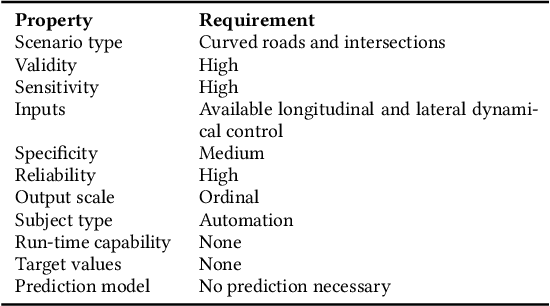

Abstract:The verification and validation of automated driving systems at SAE levels 4 and 5 is a multi-faceted challenge for which classical statistical considerations become infeasible. To address this, contemporary approaches suggest a decomposition into scenario classes combined with statistical analysis thereof regarding the emergence of criticality. Unfortunately, these associational approaches may yield spurious inferences, or worse, fail to recognize the causalities leading to critical scenarios, which are, in turn, a prerequisite for the development and safeguarding of automated driving systems. To incorporate causal knowledge within these processes, this work introduces a formalization of causal queries whose answers facilitate a causal understanding of safety-relevant influencing factors for automated driving. This formalized causal knowledge can be used to specify and implement abstract safety principles that provably reduce the criticality associated with these influencing factors. Based on Judea Pearl's causal theory, we define a causal relation as a causal structure together with a context, both related to a domain ontology, where the focus lies on modeling the effect of such influencing factors on criticality as measured by a suitable metric. To assess modeling quality, we suggest various quantities and evaluate them on a small example. As the availability and quality of data are imperative for validly estimating answers to the causal queries, we also discuss requirements on real-world and synthetic data acquisition. We thereby contribute to establishing causal considerations at the heart of the safety processes that are urgently needed to ensure the safe operation of automated driving systems.
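The abstract warns that associational statistics over scenario classes can yield spurious inferences. Below is a minimal Python sketch of how a causal query can correct this, assuming hypothetical scenario counts with an influencing factor x (e.g. occlusion of a pedestrian), a context variable z (e.g. urban vs. rural road) that affects both x and criticality, and a binary outcome "critical" (a criticality metric exceeding some threshold). If z is the only common cause of x and criticality, Pearl's backdoor adjustment, P(critical | do(x)) = sum over z of P(critical | x, z) · P(z), gives the causal effect. The counts are invented and not taken from the paper.

```python
# Hypothetical scenario statistics:
# (context z, influencing factor x) -> (number of scenarios, number of critical ones)
# z: 0 = rural, 1 = urban;  x: 0 = no occlusion, 1 = occlusion
COUNTS = {
    (0, 0): (800, 40),
    (0, 1): (200, 20),
    (1, 0): (200, 40),
    (1, 1): (800, 240),
}


def naive_rate(x):
    """Associational estimate P(critical | x), ignoring the context z."""
    n = k = 0
    for (_, xx), (n_s, k_c) in COUNTS.items():
        if xx == x:
            n += n_s
            k += k_c
    return k / n


def adjusted_rate(x):
    """Backdoor adjustment: sum over z of P(critical | x, z) * P(z)."""
    total = sum(n_s for n_s, _ in COUNTS.values())
    rate = 0.0
    for z in sorted({z for z, _ in COUNTS}):
        n_z = sum(COUNTS[(z, xx)][0] for xx in (0, 1))  # scenarios in context z
        n_xz, k_xz = COUNTS[(z, x)]
        rate += (k_xz / n_xz) * (n_z / total)
    return rate


naive_effect = naive_rate(1) - naive_rate(0)
adjusted_effect = adjusted_rate(1) - adjusted_rate(0)
print(f"naive effect: {naive_effect:.3f}, adjusted effect: {adjusted_effect:.3f}")
```

With these counts the naive difference is 0.26 - 0.08 = 0.18, while the adjusted effect is 0.20 - 0.125 = 0.075: most of the apparent influence of occlusion stems from occlusions and critical situations both being more frequent in urban scenarios. The adjustment is only valid if z really blocks all backdoor paths, a modeling assumption that must be argued from domain knowledge.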

Architectural patterns for handling runtime uncertainty of data-driven models in safety-critical perception

Jun 14, 2022

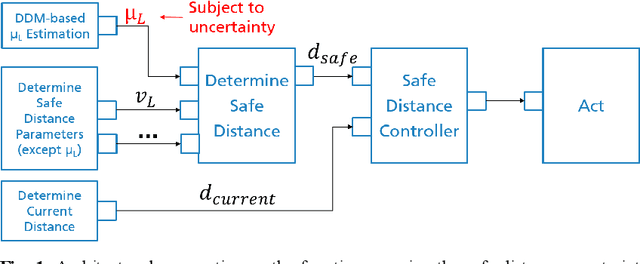

Abstract:Data-driven models (DDMs) based on machine learning and other AI techniques play an important role in the perception of increasingly autonomous systems. Because their behavior is defined only implicitly, mainly by the data used for training, DDM outputs are subject to uncertainty. This poses a challenge for realizing safety-critical perception tasks by means of DDMs. A promising approach to tackling this challenge is to estimate the uncertainty in the current situation during operation and adapt the system behavior accordingly. In previous work, we focused on runtime estimation of uncertainty and discussed approaches for handling uncertainty estimates. In this paper, we present additional architectural patterns for handling uncertainty. Furthermore, we evaluate the four patterns qualitatively and quantitatively with respect to safety and performance gains. For the quantitative evaluation, we consider a distance controller for vehicle platooning, where performance gains are measured by how much the distance can be reduced in different operational situations. We conclude that considering context information about the driving situation makes it possible to accept more or less uncertainty depending on the inherent risk of the situation, which results in performance gains.
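The abstract does not spell out the four patterns, so the following Python sketch is only a hypothetical illustration of its concluding idea. A runtime uncertainty estimate of the measured distance is accepted or rejected against a context-specific tolerance, and the platooning gap is widened in proportion to uncertainty and situational risk. All names (`Context`, `target_gap`), thresholds and gap values are invented for illustration and are not taken from the paper or from any vehicle specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical description of the current driving situation."""
    name: str
    risk_weight: float        # >= 0; higher means a riskier situation
    max_uncertainty_m: float  # largest tolerated distance uncertainty (std. dev., m)


MIN_GAP_M = 5.0        # hypothetical gap used when perception is fully trusted
FALLBACK_GAP_M = 30.0  # hypothetical conservative gap


def target_gap(measured_std_m: float, ctx: Context) -> float:
    """Choose the platooning gap from the runtime uncertainty estimate and context."""
    if measured_std_m > ctx.max_uncertainty_m:
        # Uncertainty is not acceptable in this situation: degrade to the fallback gap.
        return FALLBACK_GAP_M
    # Otherwise widen the gap in proportion to uncertainty, weighted by situational risk.
    return min(FALLBACK_GAP_M, MIN_GAP_M + ctx.risk_weight * measured_std_m)


HIGHWAY_DRY = Context("highway, dry", risk_weight=3.0, max_uncertainty_m=2.0)
WET_ROAD = Context("wet road", risk_weight=6.0, max_uncertainty_m=1.0)

for std in (0.5, 1.5):
    for ctx in (HIGHWAY_DRY, WET_ROAD):
        print(f"{ctx.name}, std={std} m -> gap {target_gap(std, ctx):.1f} m")
```

In this toy setting an uncertainty of 0.5 m leads to a 6.5 m gap on a dry highway but an 8.0 m gap on a wet road, and 1.5 m triggers the 30.0 m fallback only on the wet road. This mirrors, qualitatively, the abstract's point that accepting more or less uncertainty depending on the risk of the situation yields performance gains.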
