Benjamin Müller

Comparative Validation of Machine Learning Algorithms for Surgical Workflow and Skill Analysis with the HeiChole Benchmark

Sep 30, 2021

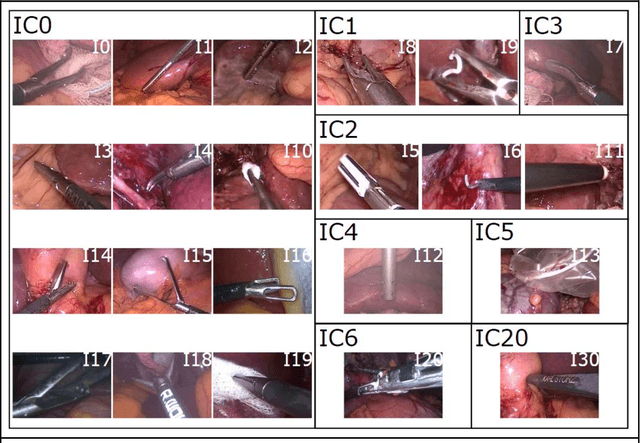

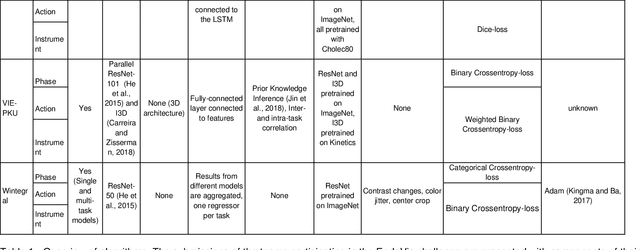

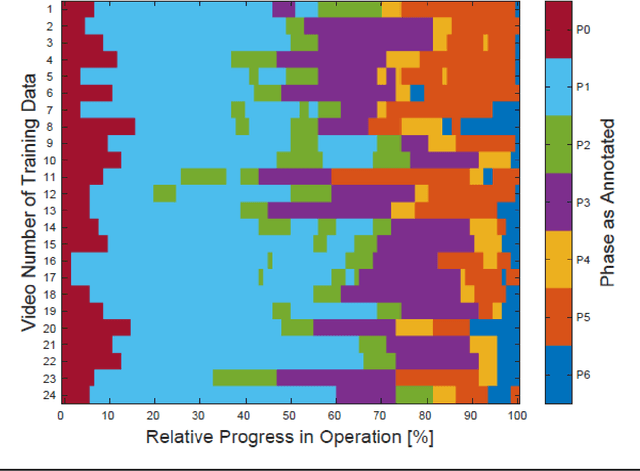

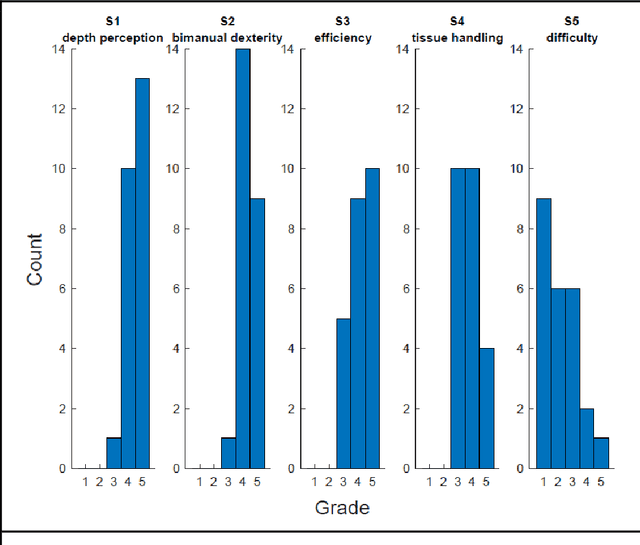

Abstract: PURPOSE: Surgical workflow and skill analysis are key technologies for the next generation of cognitive surgical assistance systems. These systems could increase the safety of the operation through context-sensitive warnings and semi-autonomous robotic assistance, or improve the training of surgeons via data-driven feedback. In surgical workflow analysis, up to 91% average precision has been reported for phase recognition on an open-data single-center dataset. In this work, we investigated the generalizability of phase recognition algorithms in a multi-center setting, including more difficult recognition tasks such as surgical action and surgical skill. METHODS: To achieve this goal, a dataset of 33 laparoscopic cholecystectomy videos from three surgical centers, with a total operation time of 22 hours, was created. Labels included annotations of seven surgical phases with 250 phase transitions, 5514 occurrences of four surgical actions, 6980 occurrences of 21 surgical instruments from seven instrument categories, and 495 skill classifications in five skill dimensions. The dataset was used in the 2019 Endoscopic Vision challenge, sub-challenge for surgical workflow and skill analysis, in which 12 teams submitted machine learning algorithms for recognition of phase, action, instrument and/or skill assessment. RESULTS: F1-scores between 23.9% and 67.7% were achieved for phase recognition (n=9 teams) and between 38.5% and 63.8% for instrument presence detection (n=8 teams), but only between 21.8% and 23.3% for action recognition (n=5 teams). The average absolute error for skill assessment was 0.78 (n=1 team). CONCLUSION: Surgical workflow and skill analysis are promising technologies to support the surgical team, but, as our comparison of algorithms shows, they are not yet solved. This novel benchmark can be used for comparable evaluation and validation of future work.
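The results above are reported as F1-scores for recognition tasks and as an average absolute error for skill assessment. As a rough illustration only, the following sketch computes a frame-wise macro-averaged F1 score and a mean absolute error in plain Python. It assumes integer class labels per frame and numeric skill ratings; the challenge's exact averaging scheme (for example per video, per class, or pooled) is not stated here, and the function names are hypothetical.

```python
from typing import Sequence


def per_class_f1(y_true: Sequence[int], y_pred: Sequence[int], label: int) -> float:
    """Frame-wise F1 score for one class, treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    if tp == 0:
        # No correct hits: precision or recall is 0 (or undefined), so F1 is taken as 0.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1 over every class seen in labels or predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label sequences of equal length")
    classes = sorted(set(y_true) | set(y_pred))
    return sum(per_class_f1(y_true, y_pred, c) for c in classes) / len(classes)


def mean_absolute_error(true_scores: Sequence[float], pred_scores: Sequence[float]) -> float:
    """Average absolute difference between reference and predicted skill ratings."""
    if len(true_scores) != len(pred_scores) or not true_scores:
        raise ValueError("need two non-empty score sequences of equal length")
    return sum(abs(t - p) for t, p in zip(true_scores, pred_scores)) / len(true_scores)


# Toy example: three phases (0, 1, 2) over five frames, and three skill ratings.
print(round(macro_f1([0, 0, 1, 1, 2], [0, 1, 1, 1, 2]), 4))  # prints 0.8222
print(mean_absolute_error([3, 4, 2], [2.5, 4, 3]))           # prints 0.5
```

Macro averaging weights every class equally, so rare phases or actions count as much as frequent ones; a pooled (micro) average would instead be dominated by the most common classes.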

Heidelberg Colorectal Data Set for Surgical Data Science in the Sensor Operating Room

May 28, 2020

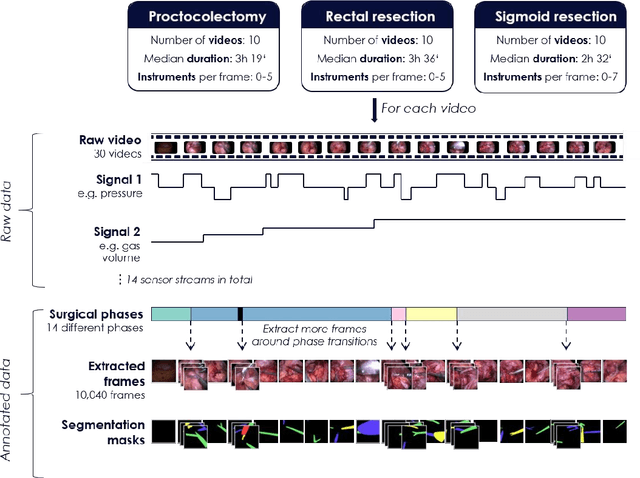

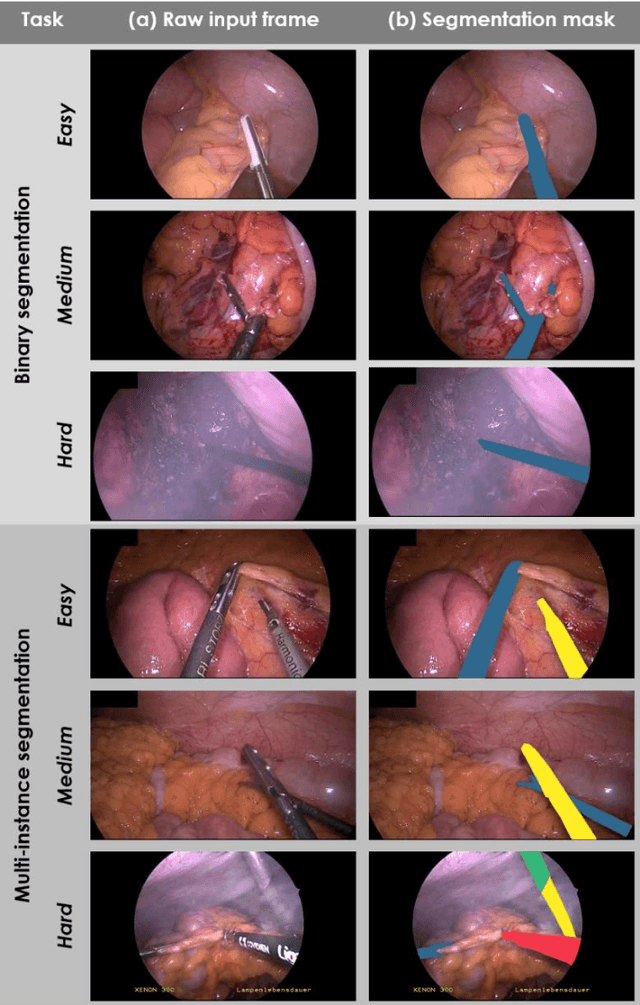

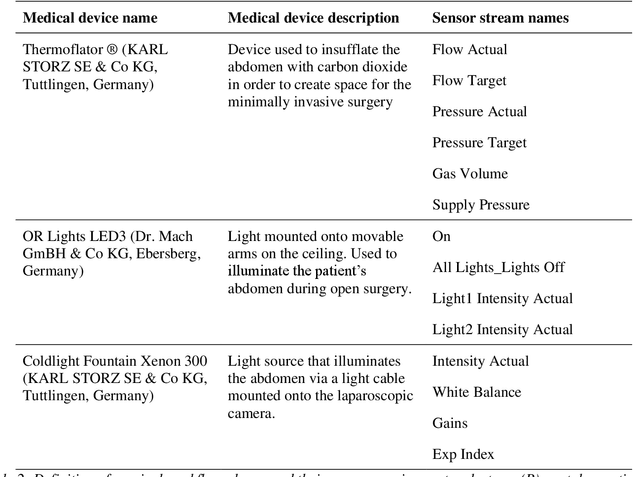

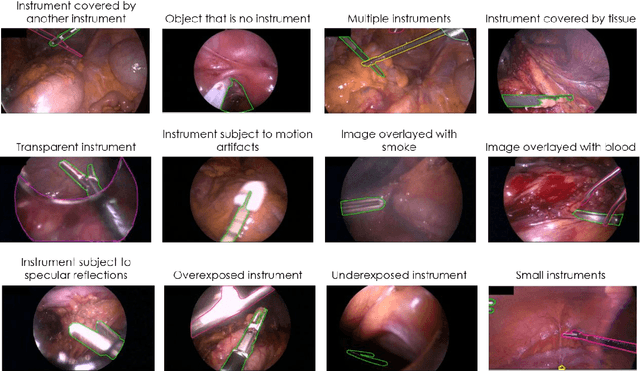

Abstract: Image-based tracking of medical instruments is an integral part of many surgical data science applications. Previous research has addressed the tasks of detecting, segmenting and tracking medical instruments based on laparoscopic video data. However, the proposed methods still tend to fail when applied to challenging images and do not generalize well to data they have not been trained on. This paper introduces the Heidelberg Colorectal (HeiCo) data set, the first publicly available data set enabling comprehensive benchmarking of medical instrument detection and segmentation algorithms with a specific emphasis on the robustness and generalization capabilities of the methods. Our data set comprises 30 laparoscopic videos and corresponding sensor data from medical devices in the operating room for three different types of laparoscopic surgery. Annotations include surgical phase labels for all frames in the videos as well as instance-wise segmentation masks for surgical instruments in more than 10,000 individual frames. The data have been used successfully to organize international competitions within the scope of the Endoscopic Vision Challenges (EndoVis) 2017 and 2019.

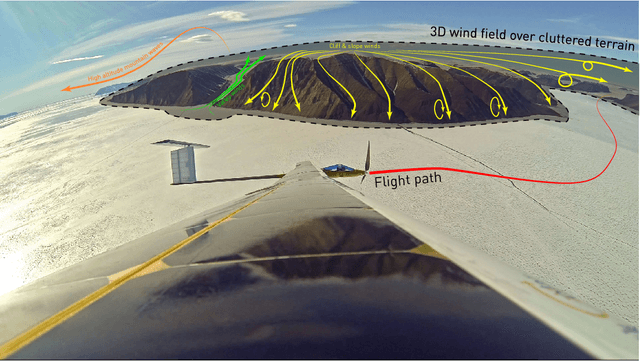

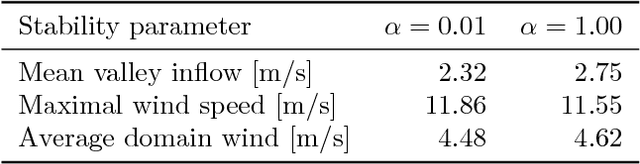

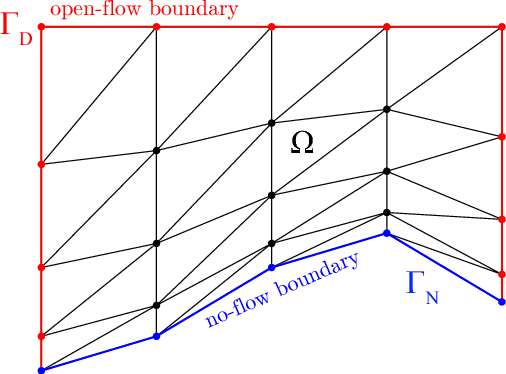

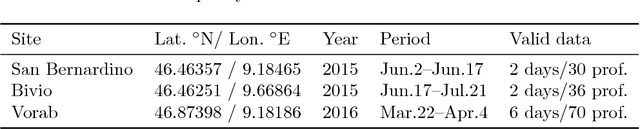

Towards Fully Environment-Aware UAVs: Real-Time Path Planning with Online 3D Wind Field Prediction in Complex Terrain

Dec 10, 2017

Abstract: Today, low-altitude fixed-wing Unmanned Aerial Vehicles (UAVs) are largely limited to primitively following user-defined waypoints. To allow fully autonomous remote missions in complex environments, real-time environment-aware navigation is required with respect to both terrain and strong wind drafts. This paper presents two relevant initial contributions. First, it presents the first 3D wind field prediction method in the literature that can run in real time onboard a UAV. The approach retrieves low-resolution global weather data and uses potential flow theory to adjust the wind field such that terrain boundaries, mass conservation, and atmospheric stratification are observed. A comparison with 1D LIDAR data shows an overall wind error reduction of 23% with respect to the zero-wind assumption that is mostly used for UAV path planning today. However, because vertical winds are not yet resolved with sufficient accuracy, further research is required, and the corresponding research needs are identified. Second, it presents a sampling-based path planner that iteratively considers the aircraft dynamics in non-uniform wind via Dubins airplane paths. Performance optimizations, e.g., obstacle-aware sampling and fast 2.5D-map collision checks, make the planner 50% faster than the Open Motion Planning Library (OMPL) implementation. Test cases in Alpine terrain show that the wind-aware planner performs up to 50x fewer iterations than shortest-path planning and is thus slower in low winds, but that it tends to deliver lower-cost paths in stronger winds. More importantly, in contrast to the shortest-path planner, it always delivers collision-free paths. Overall, our initial research demonstrates the feasibility of 3D wind field prediction from a UAV and the advantages of wind-aware planning. This paves the way for follow-up research on fully autonomous, environment-aware navigation of UAVs in real-life missions and complex terrain.
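The abstract names two ingredients of the planner without detailing them: collision checks against a 2.5D map and path costs that depend on the wind. As a rough illustration only, the following sketch assumes that the 2.5D map is a regular grid storing one terrain elevation per cell, and that paths are split into straight segments flown at constant airspeed in a wind that is constant along each segment. This is a deliberate simplification of the Dubins airplane paths used in the paper, not the authors' implementation; `HeightMap`, `segment_is_collision_free` and `segment_travel_time` are hypothetical names. The travel time follows from the wind triangle: the ground velocity equals the air velocity plus the wind, and the aircraft must crab so that the ground velocity points along the segment.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence

Vec3 = Sequence[float]  # (x, y, z) in metres, or (u, v, w) in m/s for wind


@dataclass
class HeightMap:
    """Hypothetical 2.5D map: one terrain elevation (m) per square grid cell."""
    heights: List[List[float]]  # heights[row][col]; row indexes y, col indexes x
    cell_size: float            # cell edge length in metres

    def height_at(self, x: float, y: float) -> float:
        """Terrain elevation of the cell containing (x, y); off-map is treated as blocked."""
        col = math.floor(x / self.cell_size)
        row = math.floor(y / self.cell_size)
        if 0 <= row < len(self.heights) and 0 <= col < len(self.heights[row]):
            return self.heights[row][col]
        return math.inf


def segment_is_collision_free(hmap: HeightMap, a: Vec3, b: Vec3, clearance: float = 0.0) -> bool:
    """Sample a straight segment every half cell and compare altitude with the terrain below.

    Each sample needs only a single grid lookup. The check is not exact: a segment
    that clips a cell corner very briefly can be missed between two samples.
    """
    length = math.dist(a, b)
    n = max(1, math.ceil(length / (0.5 * hmap.cell_size)))
    for i in range(n + 1):
        t = i / n
        x, y, z = (a[k] + t * (b[k] - a[k]) for k in range(3))
        if z < hmap.height_at(x, y) + clearance:
            return False
    return True


def segment_travel_time(a: Vec3, b: Vec3, airspeed: float, wind: Vec3) -> float:
    """Time to fly from a to b at constant airspeed in a uniform wind (wind triangle).

    Solves |s*d - wind| = airspeed for the ground speed s along the unit direction d.
    Returns math.inf if the wind makes the segment unflyable.
    """
    length = math.dist(a, b)
    if length == 0.0:
        return 0.0
    d = [(b[k] - a[k]) / length for k in range(3)]
    w_along = sum(d[k] * wind[k] for k in range(3))  # tailwind (+) / headwind (-) component
    w_sq = sum(c * c for c in wind)
    disc = w_along ** 2 - w_sq + airspeed ** 2       # airspeed^2 minus crosswind^2
    if disc < 0.0:
        return math.inf  # crosswind exceeds airspeed: the track cannot be held
    ground_speed = w_along + math.sqrt(disc)
    if ground_speed <= 0.0:
        return math.inf  # headwind at least as strong as the airspeed
    return length / ground_speed


terrain = HeightMap(heights=[[0.0, 0.0, 120.0]], cell_size=50.0)  # ridge in the third cell
print(segment_is_collision_free(terrain, (10, 10, 100), (90, 10, 100)))         # True
print(segment_is_collision_free(terrain, (10, 10, 100), (140, 10, 100)))        # False
print(segment_travel_time((0, 0, 100), (100, 0, 100), 15.0, (5.0, 0.0, 0.0)))   # 5.0
print(segment_travel_time((0, 0, 100), (100, 0, 100), 15.0, (-5.0, 0.0, 0.0)))  # 10.0
```

Using travel time rather than distance as the segment cost is what lets a planner of this kind prefer a longer route with a tailwind over a shorter one into a headwind; returning `math.inf` for unflyable segments lets a sampling-based planner simply reject them.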
