Lars Mündermann

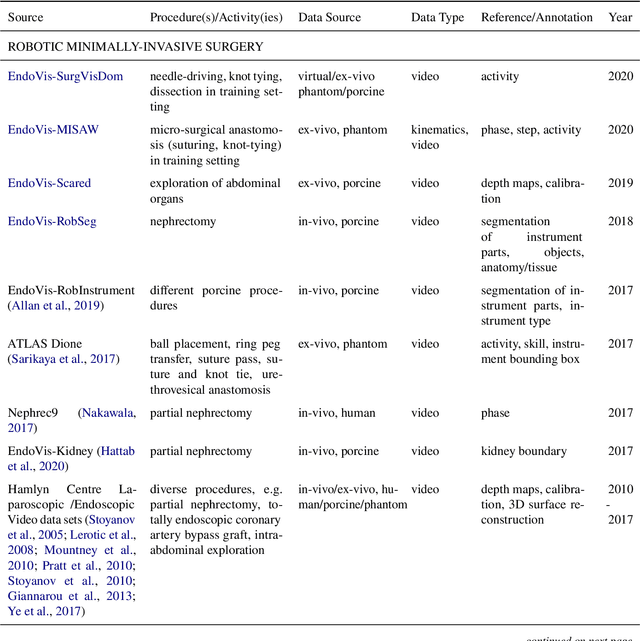

Comparative Validation of Machine Learning Algorithms for Surgical Workflow and Skill Analysis with the HeiChole Benchmark

Sep 30, 2021

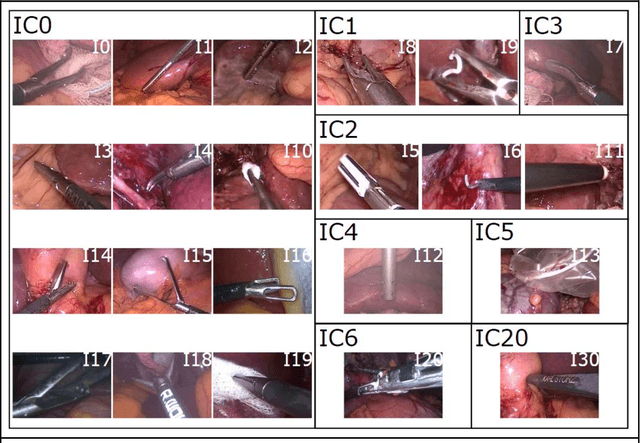

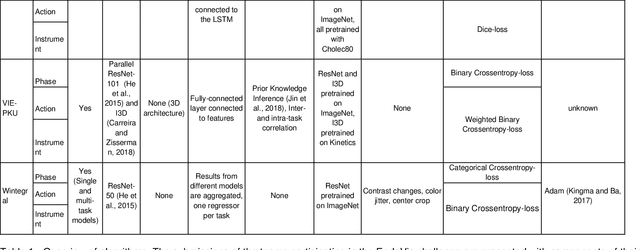

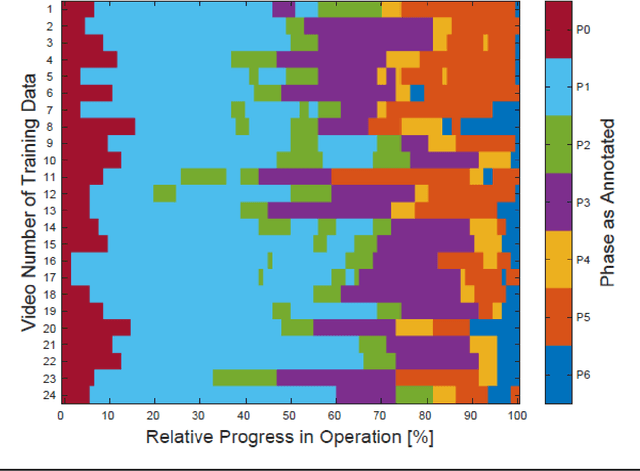

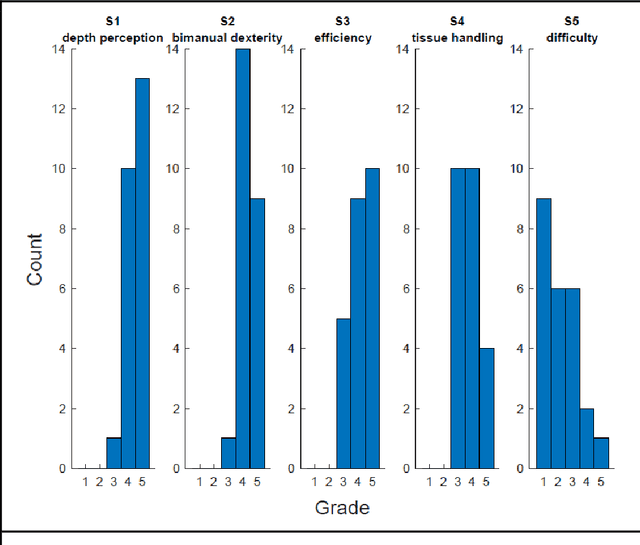

Abstract: PURPOSE: Surgical workflow and skill analysis are key technologies for the next generation of cognitive surgical assistance systems. These systems could increase the safety of the operation through context-sensitive warnings and semi-autonomous robotic assistance or improve training of surgeons via data-driven feedback. In surgical workflow analysis, up to 91% average precision has been reported for phase recognition on an open-data, single-center dataset. In this work, we investigated the generalizability of phase recognition algorithms in a multi-center setting, including more difficult recognition tasks such as surgical action and surgical skill. METHODS: To achieve this goal, a dataset with 33 laparoscopic cholecystectomy videos from three surgical centers with a total operation time of 22 hours was created. Labels included annotation of seven surgical phases with 250 phase transitions, 5514 occurrences of four surgical actions, 6980 occurrences of 21 surgical instruments from seven instrument categories and 495 skill classifications in five skill dimensions. The dataset was used in the 2019 Endoscopic Vision challenge, sub-challenge for surgical workflow and skill analysis. Here, 12 teams submitted their machine learning algorithms for recognition of phase, action, instrument and/or skill assessment. RESULTS: F1-scores ranged from 23.9% to 67.7% for phase recognition (n=9 teams) and from 38.5% to 63.8% for instrument presence detection (n=8 teams), but only from 21.8% to 23.3% for action recognition (n=5 teams). The average absolute error for skill assessment was 0.78 (n=1 team). CONCLUSION: Surgical workflow and skill analysis are promising technologies to support the surgical team, but are not solved yet, as shown by our comparison of algorithms. This novel benchmark can be used for comparable evaluation and validation of future work.

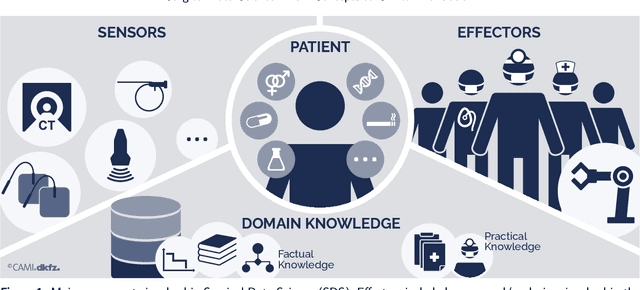

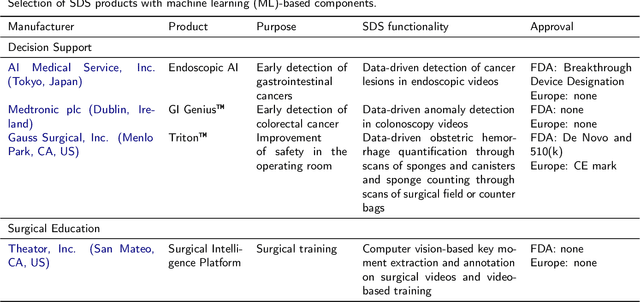

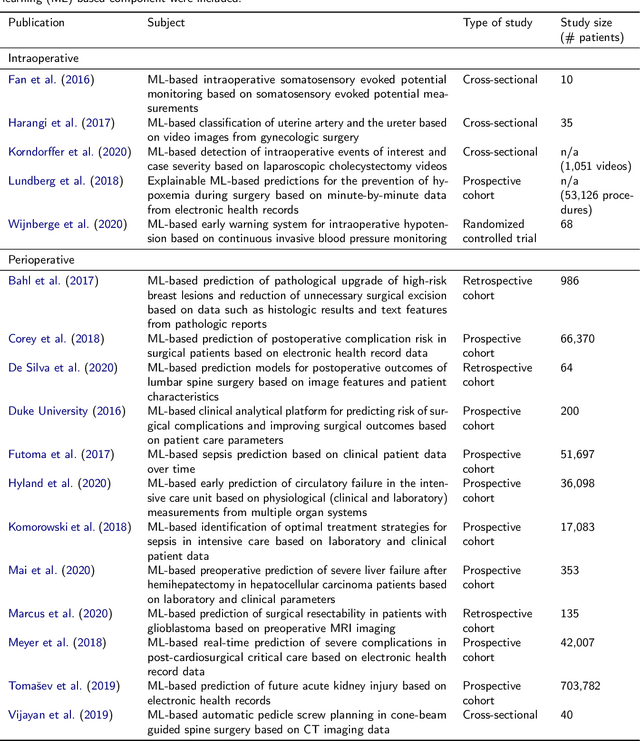

Surgical Data Science -- from Concepts to Clinical Translation

Oct 30, 2020

Abstract: Recent developments in data science in general and machine learning in particular have transformed the way experts envision the future of surgery. Surgical data science is a new research field that aims to improve the quality of interventional healthcare through the capture, organization, analysis and modeling of data. While an increasing number of data-driven approaches and clinical applications have been studied in the fields of radiological and clinical data science, translational success stories are still lacking in surgery. In this publication, we shed light on the underlying reasons and provide a roadmap for future advances in the field. Based on an international workshop involving leading researchers in the field of surgical data science, we review current practice, key achievements and initiatives as well as available standards and tools for a number of topics relevant to the field, namely (1) technical infrastructure for data acquisition, storage and access in the presence of regulatory constraints, (2) data annotation and sharing and (3) data analytics. Drawing from this extensive review, we present current challenges for technology development and (4) describe a roadmap for faster clinical translation and exploitation of the full potential of surgical data science.

Prediction of laparoscopic procedure duration using unlabeled, multimodal sensor data

Nov 08, 2018

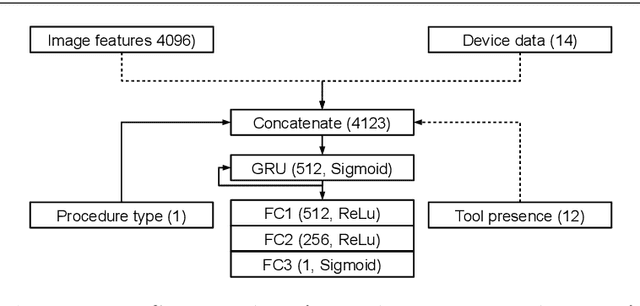

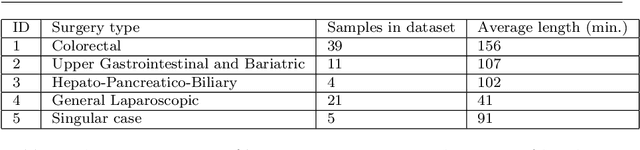

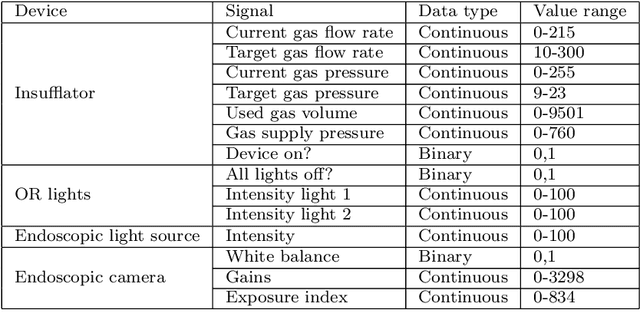

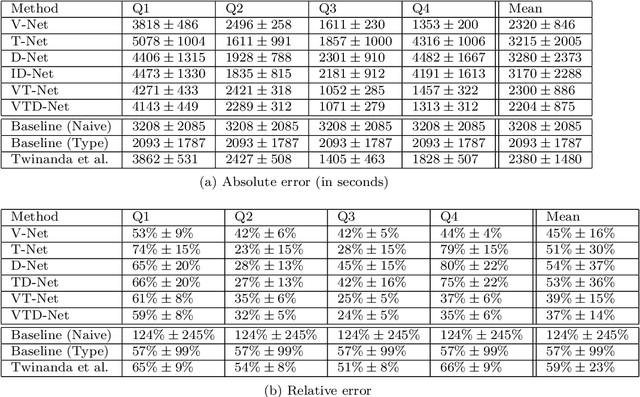

Abstract: The course of surgical procedures is often unpredictable, making it difficult to estimate the duration of procedures beforehand. This uncertainty makes scheduling surgical procedures a difficult task. A context-aware method that analyses the workflow of an intervention online and automatically predicts the remaining duration would alleviate these problems. As a basis for such an estimate, information regarding the current state of the intervention is required. Today, the operating room contains a diverse range of sensors. During laparoscopic interventions, the endoscopic video stream is an ideal source of such information. Extracting quantitative information from the video is challenging, though, due to its high dimensionality. Other surgical devices (e.g. insufflator, lights) provide data streams which are, in contrast to the video stream, more compact and easier to quantify. Whether such streams offer sufficient information for estimating the duration of surgery is uncertain, however. In this paper, we propose and compare methods, based on convolutional neural networks, for continuously predicting the duration of laparoscopic interventions based on unlabeled data, such as from endoscopic image and surgical device streams. The methods are evaluated on 80 recorded laparoscopic interventions of various types, for which surgical device data and the endoscopic video streams are available. Here, the combined method performs best, with an overall average error of 37% and an average halftime error of approximately 28%.
