Yaser P. Fallah

Context-Aware Target Classification with Hybrid Gaussian Process prediction for Cooperative Vehicle Safety systems

Dec 24, 2022

Abstract: Vehicle-to-Everything (V2X) communication has been proposed as a potential solution to improve the robustness and safety of autonomous vehicles by improving coordination and removing the barrier of non-line-of-sight sensing. Cooperative Vehicle Safety (CVS) applications are tightly dependent on the reliability of the underlying data system. That system can lose information because of inherent issues in its components, such as sensor failures or the poor performance of V2X technologies under dense communication channel load. In particular, information loss affects the target classification module and, subsequently, the performance of the safety application. To enable reliable and robust CVS systems that mitigate the effect of information loss, we propose a Context-Aware Target Classification (CA-TC) module coupled with a hybrid learning-based predictive modeling technique for CVS systems. The CA-TC consists of two modules: a Context-Aware Map (CAM) and a Hybrid Gaussian Process (HGP) prediction system. The vehicle safety applications then use the information from the CA-TC, which makes them more robust and reliable. The CAM leverages vehicles' path history, road geometry, tracking, and prediction. The HGP provides accurate trajectory predictions of vehicles to compensate for data loss (due to communication congestion) or for inaccurate sensor measurements. Based on offline real-world data, we learn a finite bank of driver models that represent the joint dynamics of the vehicle and the driver's behavior. We combine offline training and online model updates with on-the-fly forecasting to account for new possible driver behaviors. Finally, our framework is validated using simulation and realistic driving scenarios to confirm its potential in enhancing the robustness and reliability of CVS systems.

Learning-based social coordination to improve safety and robustness of cooperative autonomous vehicles in mixed traffic

Nov 22, 2022

Abstract: It is expected that autonomous vehicles (AVs) and heterogeneous human-driven vehicles (HVs) will coexist on the same road. The safety and reliability of AVs will depend on their social awareness and their ability to engage in complex social interactions in a socially accepted manner. However, AVs are still inefficient at cooperating with HVs and struggle to understand and adapt to human behavior, which is particularly challenging in mixed autonomy. On a road shared by AVs and HVs, the AVs do not know the social preferences or individual traits of the HVs. Unlike AVs, which are expected to follow a policy, HVs are particularly difficult to forecast because they do not necessarily follow a stationary policy. To address these challenges, we frame the mixed-autonomy problem as a multi-agent reinforcement learning (MARL) problem and propose an approach that allows AVs to learn the decision-making of HVs implicitly from experience, account for all vehicles' interests, and safely adapt to other traffic situations. In contrast with existing works, we quantify AVs' social preferences and propose a distributed reward structure that introduces altruism into their decision-making process, allowing the altruistic AVs to learn to establish coalitions and influence the behavior of HVs.

Prediction-aware and Reinforcement Learning based Altruistic Cooperative Driving

Nov 19, 2022

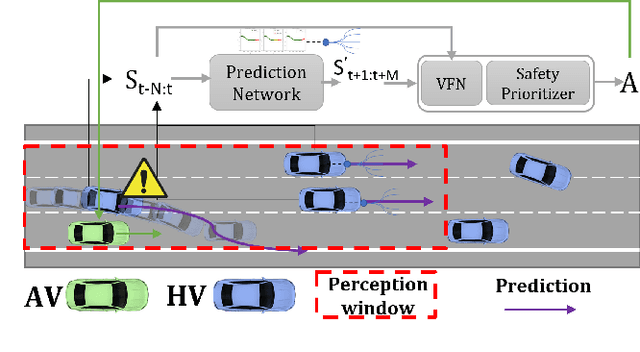

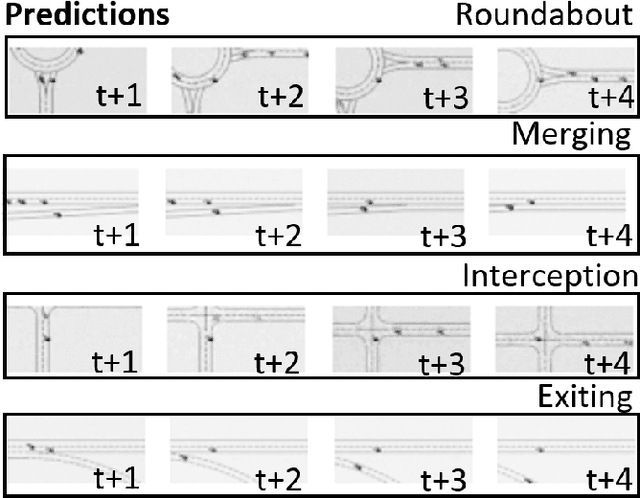

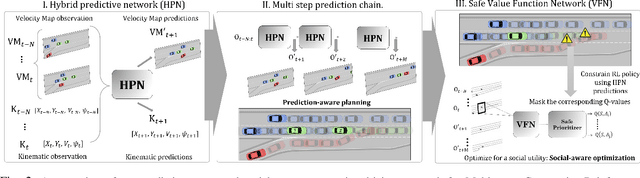

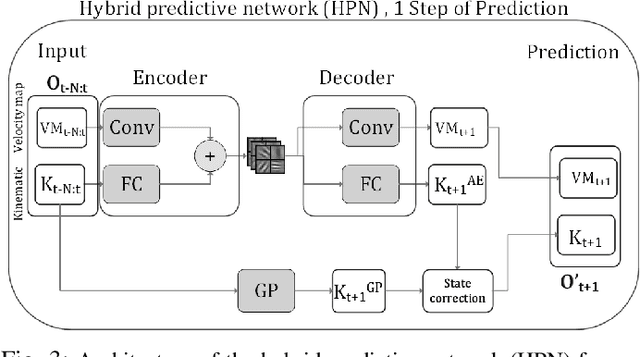

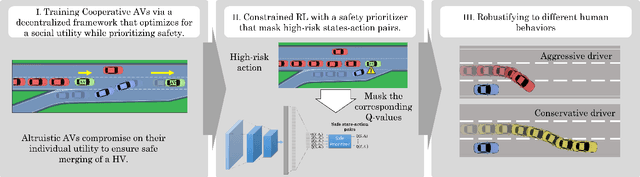

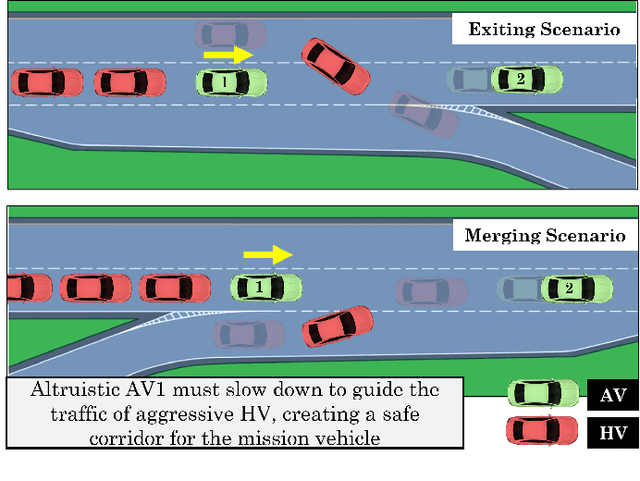

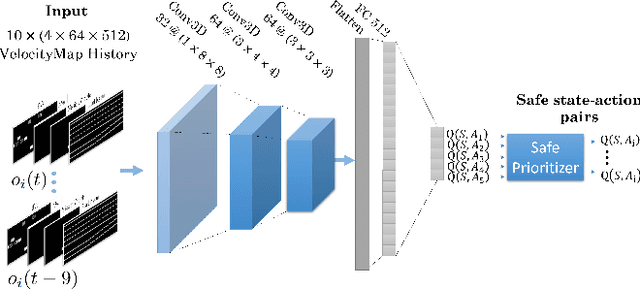

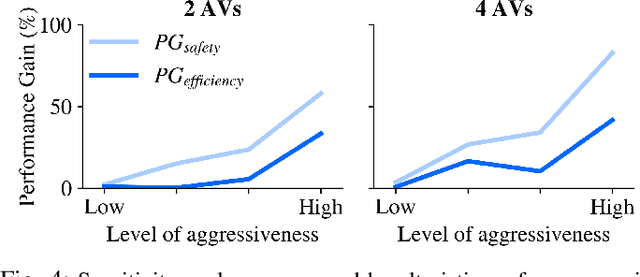

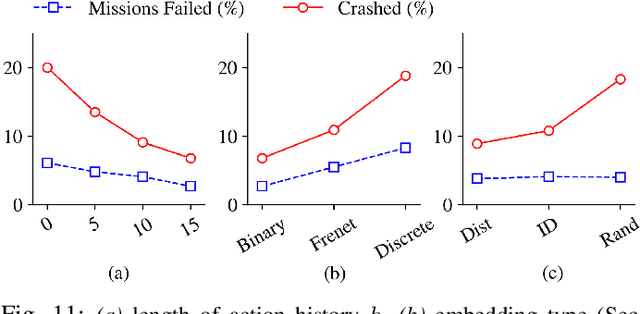

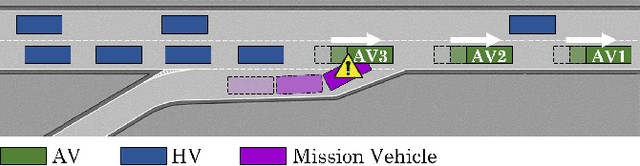

Abstract: Autonomous vehicle (AV) navigation in the presence of human-driven vehicles (HVs) is challenging, as HVs continuously update their policies in response to AVs. To navigate safely amid complex AV-HV social interactions, AVs must learn to predict these changes. Humans can navigate such challenging social interaction settings because they have intrinsic knowledge about other agents' behaviors, which they use to forecast what might happen in the future. Inspired by humans, we give our AVs the capability of anticipating future states and leveraging prediction in a cooperative reinforcement learning (RL) decision-making framework to improve safety and robustness. In this paper, we propose an integration of two essential and previously presented components of AVs: social navigation and prediction. We formulate the AV decision-making process as an RL problem and seek optimal policies that produce socially beneficial results using a prediction-aware planning and social-aware optimization RL framework. We also propose a Hybrid Predictive Network (HPN) that anticipates future observations. The HPN is used in a multi-step prediction chain to compute a window of predicted future observations to be used by the value function network (VFN). Finally, a safe VFN is trained to optimize a social utility using a sequence of previous and predicted observations. A safety prioritizer then leverages the interpretable kinematic predictions to mask unsafe actions, constraining the RL policy. We compare our prediction-aware AV to state-of-the-art solutions and demonstrate performance improvements in terms of efficiency and safety in multiple simulated scenarios.
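
The action-masking step performed by the safety prioritizer can be sketched in a few lines. This is a minimal sketch, not the authors' implementation. It assumes a discrete action set, per-action values from a value function network, and a boolean per-action safety flag derived from kinematic predictions. The function name, the action labels, and the fallback rule are all hypothetical.

```python
import math
from typing import Sequence

def masked_greedy_action(q_values: Sequence[float], is_safe: Sequence[bool]) -> int:
    """Return the index of the highest-valued action among those flagged safe.

    q_values: hypothetical per-action values, e.g. from a value function network.
    is_safe: hypothetical per-action flags, e.g. from a kinematic collision check.
    """
    if len(q_values) != len(is_safe):
        raise ValueError("q_values and is_safe must have the same length")
    if not any(is_safe):
        # Assumption: with no action flagged safe, fall back to the unmasked argmax.
        # A real system might instead force a predefined fallback such as braking.
        masked = list(q_values)
    else:
        # Unsafe actions get -inf so argmax can never select them.
        masked = [q if safe else -math.inf for q, safe in zip(q_values, is_safe)]
    return max(range(len(masked)), key=masked.__getitem__)

# Hypothetical discrete actions: 0 = keep lane, 1 = change left, 2 = change right, 3 = brake.
action = masked_greedy_action([1.2, 2.5, 0.3, 0.9], [True, False, True, True])
print(action)  # 0: "change left" has the top value but is masked as unsafe
```

Masking with negative infinity constrains only which action is selected. It leaves the learned values untouched, which matches the abstract's description of the prioritizer as a constraint on the policy rather than a change to the value function.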

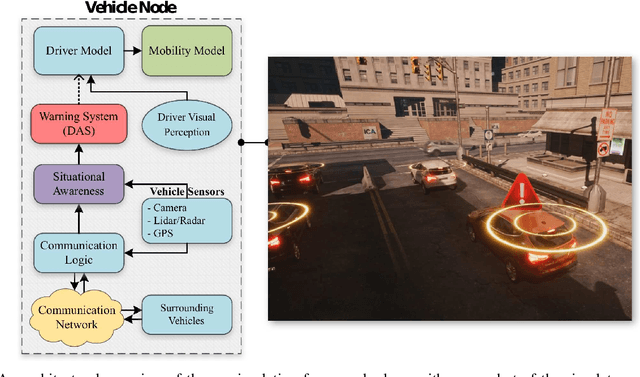

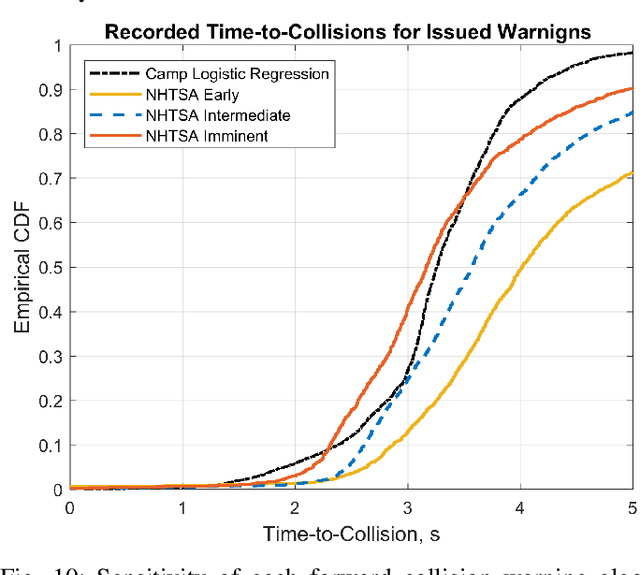

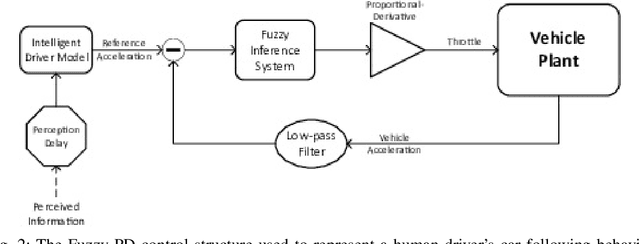

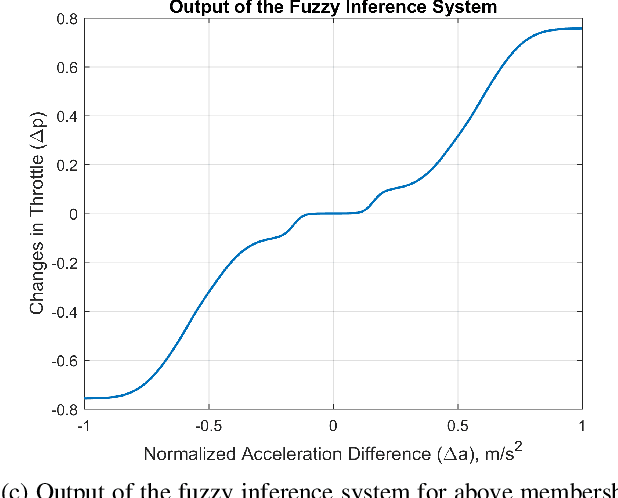

Augmented Driver Behavior Models for High-Fidelity Simulation Study of Crash Detection Algorithms

Aug 10, 2022

Abstract: Developing safety and efficiency applications for Connected and Automated Vehicles (CAVs) requires a great deal of testing and evaluation. Because these systems must operate in critical and dangerous situations, evaluating them is very costly, time-consuming, and possibly dangerous. As an alternative, researchers study and evaluate their algorithms and designs using simulation platforms. A main challenge of such simulations is modeling the behavior of drivers or human operators, both in CAVs and in other vehicles that interact with them. Developing a perfect model of human behavior is a challenging task and an open problem. Nevertheless, we present a significant augmentation of the driver behavior models currently used in simulators. In this paper, we present a simulation platform for a hybrid transportation system that includes both human-driven and automated vehicles. In addition, we decompose the human driving task and offer a modular approach to simulating a large-scale traffic scenario, allowing for a thorough investigation of automated and active safety systems. Such a representation through interconnected modules offers a human-interpretable system that can be tuned to represent different classes of drivers. Additionally, we analyze a large driving dataset to extract expressive parameters that best describe different driving characteristics. Finally, we recreate a similarly dense traffic scenario within our simulator and conduct a thorough analysis of various human-specific and system-specific factors, studying their effect on traffic network performance and safety.

Impact of Information Flow Topology on Safety of Tightly-coupled Connected and Automated Vehicle Platoons Utilizing Stochastic Control

Mar 29, 2022

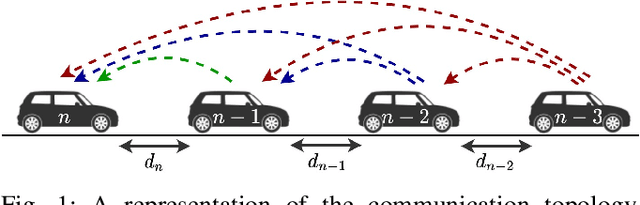

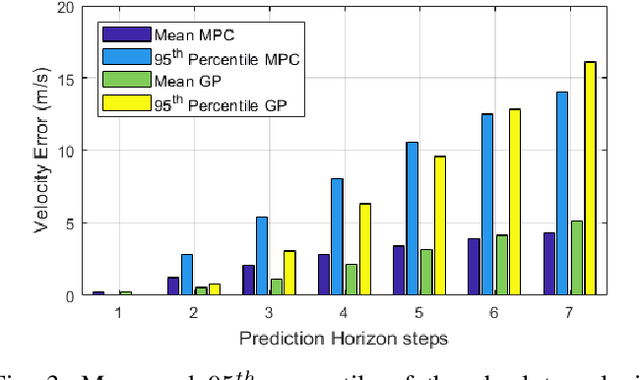

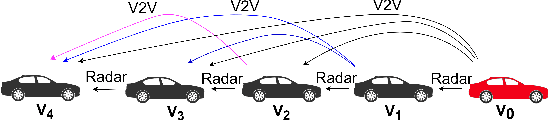

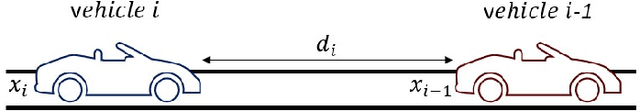

Abstract: Cooperative driving, enabled by Vehicle-to-Everything (V2X) communication, is expected to contribute significantly to the transportation system's safety and efficiency. Cooperative Adaptive Cruise Control (CACC), a major cooperative driving application, has been the subject of many studies in recent years. The primary motivation behind CACC is to reduce traffic congestion and improve traffic flow, traffic throughput, and highway capacity. The information flow between cooperative vehicles can significantly affect the dynamics of a platoon. As a result, the design and performance of control components depend tightly on the performance of the communication component. In addition, the choice of Information Flow Topology (IFT) can affect certain platoon properties, such as stability and scalability. V2X communication can expand a cooperative vehicle's perception to include information from multiple predecessors, but communication technologies still suffer from scalability issues. Therefore, cooperative vehicles are required to predict each other's behavior to compensate for the effects of non-ideal communication. The notion of Model-Based Communication (MBC) was proposed to enhance cooperative vehicles' perception under non-ideal communication by introducing a new flexible content structure for broadcasting joint vehicle dynamics/driver behavior models. By utilizing a non-parametric (Bayesian) modeling scheme, i.e., Gaussian Process Regression (GPR), and the MBC concept, this paper develops a discrete hybrid stochastic model predictive control approach and examines the impact of communication losses and different information flow topologies on the performance and safety of the platoon. The results demonstrate an improvement in response time and safety when more vehicles' information is used, validating the potential of cooperation to attenuate disturbances and improve traffic flow and safety.
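
One common way to make "using more predecessors' information" concrete is an adjacency matrix that records which vehicles each follower hears from. The sketch below is a minimal illustration and assumes a family of h-predecessor-following topologies, where h = 1 is plain predecessor following. The abstract does not state which topologies the paper compares, and the function name is hypothetical.

```python
from typing import List

def h_predecessor_topology(n_vehicles: int, h: int) -> List[List[int]]:
    """Adjacency matrix A with A[i][j] = 1 if vehicle i receives V2X data from vehicle j.

    Vehicle 0 is the platoon leader. Each follower listens to up to h vehicles
    directly ahead of it, so h = 1 gives predecessor following.
    """
    if h < 1:
        raise ValueError("h must be at least 1")
    A = [[0] * n_vehicles for _ in range(n_vehicles)]
    for i in range(1, n_vehicles):
        # Link follower i to each of its (up to) h immediate predecessors.
        for j in range(max(0, i - h), i):
            A[i][j] = 1
    return A

print(h_predecessor_topology(4, 2))
# [[0, 0, 0, 0], [1, 0, 0, 0], [1, 1, 0, 0], [0, 1, 1, 0]]
```

Communication losses could then be modeled by zeroing entries of this matrix at each time step, so that each lost packet removes one link from the topology.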

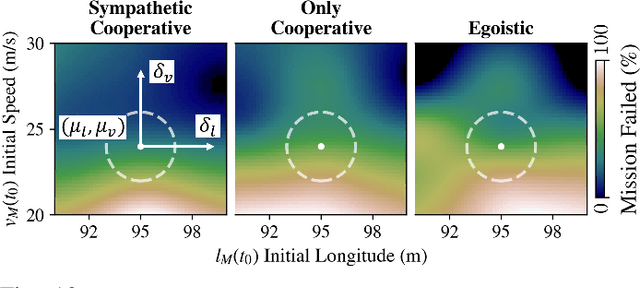

Robustness and Adaptability of Reinforcement Learning based Cooperative Autonomous Driving in Mixed-autonomy Traffic

Feb 02, 2022

Abstract: Building autonomous vehicles (AVs) is a complex problem, but enabling them to operate in the real world, where they will be surrounded by human-driven vehicles (HVs), is extremely challenging. Prior works have shown that inter-agent cooperation can be created among a group of AVs that follow a social utility. Such altruistic AVs can form alliances and affect the behavior of HVs to achieve socially desirable outcomes. We identify two major challenges in the coexistence of AVs and HVs. First, an AV does not know the social preferences and individual traits of a given human driver, e.g., selflessness and aggressiveness, and it is almost impossible to infer them in real time during a short AV-HV interaction. Second, unlike AVs, which are expected to follow a policy, HVs do not necessarily follow a stationary policy and are therefore extremely hard to predict. To alleviate these challenges, we formulate the mixed-autonomy problem as a multi-agent reinforcement learning (MARL) problem and propose a decentralized framework and reward function for training cooperative AVs. With our approach, AVs learn the decision-making of HVs implicitly from experience and optimize for a social utility while prioritizing safety and allowing adaptability. This makes altruistic AVs robust to different human behaviors and constrains them to a safe action space. Finally, we investigate the robustness, safety, and sensitivity of AVs to various HV behavioral traits and present the settings in which the AVs can learn cooperative policies that are adaptable to different situations.

Finite State Markov Modeling of C-V2X Erasure Links For Performance and Stability Analysis of Platooning Applications

Nov 13, 2021

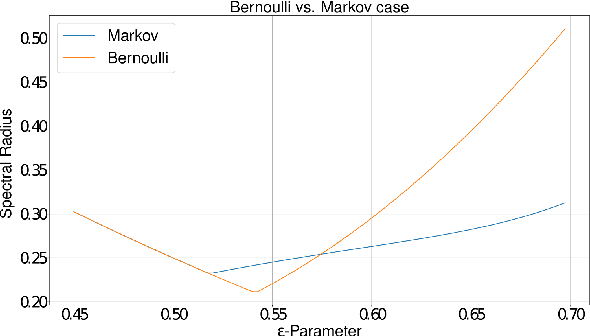

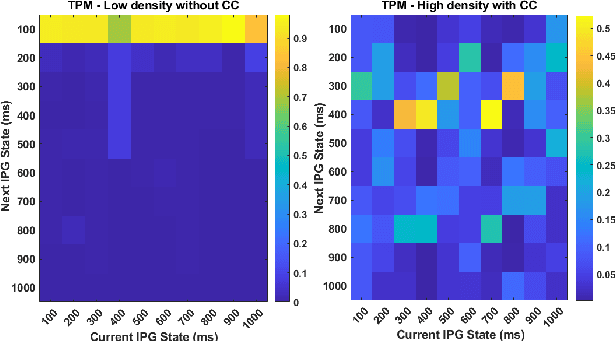

Abstract: Cooperative driving systems, such as platooning, rely on communication and information exchange to create situational awareness for each agent. The design and performance of control components are therefore tightly coupled with the performance of the communication component. The information flow between vehicles can significantly affect the dynamics of a platoon. Therefore, both the performance and the stability of a platoon depend not only on the vehicle's controller but also on the information flow topology (IFT). The IFT can limit certain platoon properties, such as stability and scalability. Cellular Vehicle-to-Everything (C-V2X) has emerged as one of the main communication technologies to support connected and automated vehicle applications. Because of packet loss, wireless channels cause random link interruptions and changes in network topology. In this paper, we model the communication links between vehicles with a first-order Markov model to capture the prevalent time correlations of each link. These models enable performance evaluation during system design stages by approximating communication links more accurately. Our approach uses data from experiments to model the Inter-Packet Gap (IPG) with Markov chains and to derive transition probability matrices for consecutive IPG states. Training data is collected from high-fidelity simulations that use models derived from empirical data, for a variety of vehicle densities and communication rates. Using the IPG models, we analyze the mean-square stability of a platoon of vehicles with the standard consensus protocol tuned for ideal communication and compare the performance degradation across scenarios.
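
The step of deriving a transition probability matrix for consecutive IPG states can be illustrated with maximum-likelihood counting. This is a minimal sketch. The bin edges, the 10 Hz nominal rate, and the helper names are hypothetical, and the abstract does not say how the authors discretize IPGs into states.

```python
from collections import Counter
from typing import List, Sequence

def ipg_states(ipgs_ms: Sequence[float], edges_ms: Sequence[float]) -> List[int]:
    """Map each inter-packet gap (ms) to a discrete state index using sorted bin edges.

    State k means edges[k-1] <= gap < edges[k]; gaps >= the last edge map to the last state.
    """
    states = []
    for gap in ipgs_ms:
        k = 0
        while k < len(edges_ms) and gap >= edges_ms[k]:
            k += 1
        states.append(k)
    return states

def transition_matrix(states: Sequence[int], n_states: int) -> List[List[float]]:
    """First-order Markov estimate: P[i][j] = count(i -> j) / count(i -> any)."""
    counts = Counter(zip(states[:-1], states[1:]))
    P = []
    for i in range(n_states):
        row_total = sum(counts[(i, j)] for j in range(n_states))
        if row_total == 0:
            # Assumption: a state never observed as a source is treated as absorbing.
            P.append([1.0 if j == i else 0.0 for j in range(n_states)])
        else:
            P.append([counts[(i, j)] / row_total for j in range(n_states)])
    return P

# Hypothetical 10 Hz link (nominal IPG 100 ms). States: 0 = on time,
# 1 = one packet missed, 2 = two or more packets missed.
states = ipg_states([100, 100, 200, 100, 300, 100], edges_ms=[150, 250])
print(states)  # [0, 0, 1, 0, 2, 0]
P = transition_matrix(states, n_states=3)
```

Each row of `P` is a conditional distribution over the next IPG state given only the current one. This is the first-order property that lets such a model capture the time correlation of losses on a link with a single matrix.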

Gaussian Process based Stochastic Model Predictive Control for Cooperative Adaptive Cruise Control

Nov 13, 2021

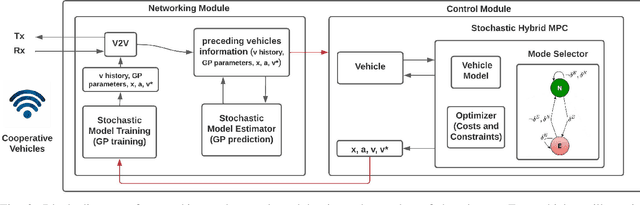

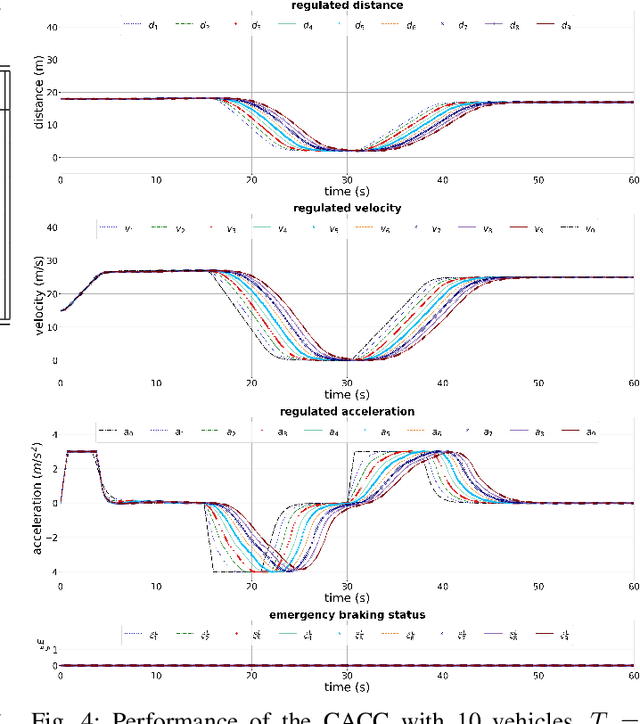

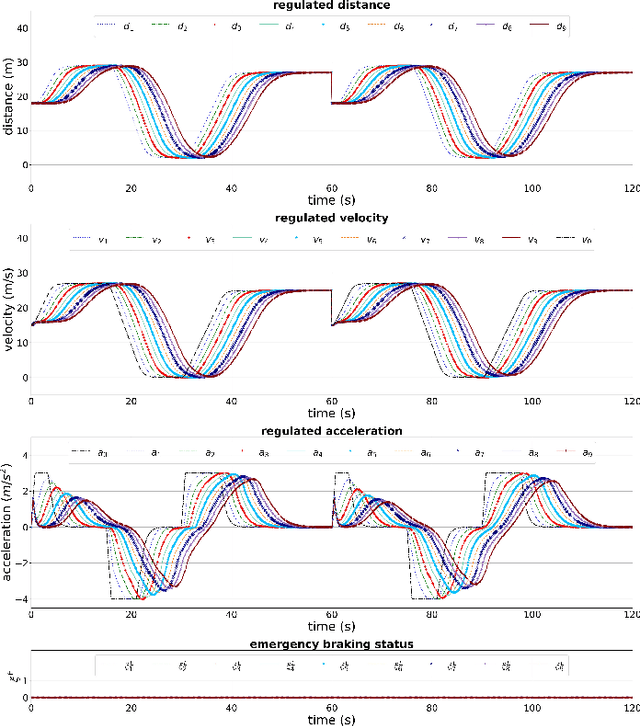

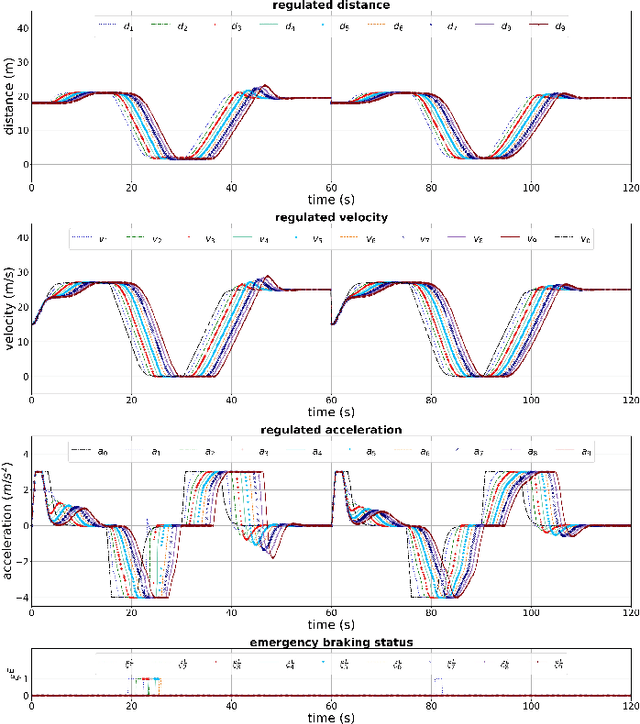

Abstract: Cooperative driving relies on communication among vehicles to create situational awareness. One application of cooperative driving is Cooperative Adaptive Cruise Control (CACC), which aims to enhance highway transportation safety and capacity. Model-based communication (MBC) is a new paradigm with a flexible content structure for broadcasting joint vehicle-driver predictive behavioral models. The vehicle's complex dynamics and diverse driving behaviors add complexity to the modeling process. A Gaussian process (GP) is a fully data-driven, non-parametric Bayesian modeling approach that can be used as a modeling component of MBC. Local GPs are generated for vehicles, and their hyper-parameters are broadcast to neighboring vehicles as a model, so that knowledge about the uncertainty is propagated through the predictions. In this study, a GP is used to model each vehicle's speed trajectory, which allows vehicles to access the future behavior of their preceding vehicle during communication loss and/or low-rate communication. In addition, to address safety issues in a vehicle platoon, two operating modes are considered for each vehicle: free following and emergency braking. This paper presents a discrete hybrid stochastic model predictive control approach that incorporates system modes as well as the uncertainties captured by GP models. The proposed control design finds the optimal vehicle speed trajectory. Its goal is a safe and efficient platoon with small inter-vehicle gaps that relies less on frequent communication. Simulation studies demonstrate the efficacy of the proposed controller under the aforementioned communication paradigm with low-rate intermittent communication.
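
The idea of modeling a preceding vehicle's speed trajectory with a GP, so that a follower can keep forecasting when packets stop arriving, can be illustrated with plain GP regression. This is a minimal sketch under stated assumptions. It uses time as the only input, a squared-exponential kernel with fixed hyper-parameters, and the mean of the received speeds as a constant prior mean. The abstract does not specify the kernel, inputs, or hyper-parameter values, and all names are hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, length_scale, signal_var):
    """Squared-exponential kernel k(a, b) = signal_var * exp(-(a - b)^2 / (2 * length_scale^2))."""
    d = a[:, None] - b[None, :]
    return signal_var * np.exp(-0.5 * (d / length_scale) ** 2)

def gp_speed_forecast(t_train, v_train, t_query, length_scale=1.0, signal_var=1.0, noise_var=1e-4):
    """GP regression of speed over time; returns posterior mean and variance at t_query.

    The mean of the received speeds serves as a constant prior mean (assumption), so far
    from the data the forecast reverts to it and the variance grows toward signal_var.
    """
    t_train = np.asarray(t_train, dtype=float)
    v_train = np.asarray(v_train, dtype=float)
    t_query = np.asarray(t_query, dtype=float)
    prior_mean = v_train.mean()
    K = rbf_kernel(t_train, t_train, length_scale, signal_var) + noise_var * np.eye(t_train.size)
    K_s = rbf_kernel(t_train, t_query, length_scale, signal_var)  # shape (n_train, n_query)
    L = np.linalg.cholesky(K)  # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, v_train - prior_mean))
    mean = prior_mean + K_s.T @ alpha
    w = np.linalg.solve(L, K_s)
    var = signal_var - np.sum(w ** 2, axis=0)
    return mean, np.maximum(var, 0.0)  # clip tiny negative values caused by round-off

# Hypothetical example: 10 Hz speed samples (m/s) of a preceding vehicle over 2 s,
# then forecasts 0.5 s and 2 s after the last received sample (t = 1.9 s).
t = np.arange(0.0, 2.0, 0.1)
v = 25.0 + 0.5 * np.sin(t)
mean, var = gp_speed_forecast(t, v, [2.4, 3.9])
```

The predictive variance grows with the forecast horizon. This growth is the uncertainty that a stochastic controller can account for when communication is intermittent. In an MBC-style setting, the three kernel hyper-parameters are the kind of compact model description the abstract says is broadcast.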

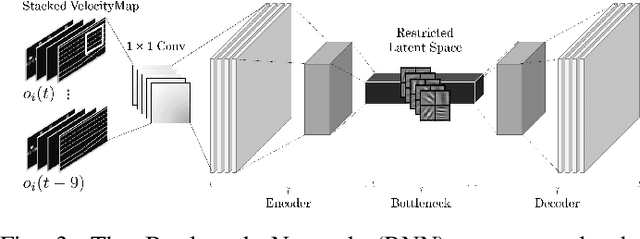

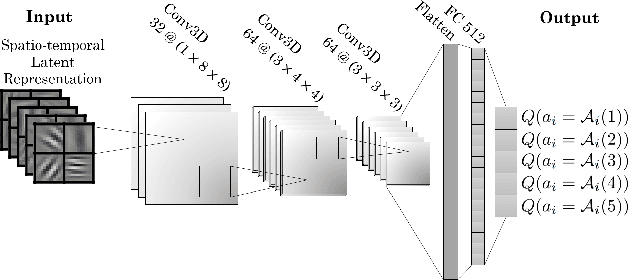

Towards Learning Generalizable Driving Policies from Restricted Latent Representations

Nov 05, 2021

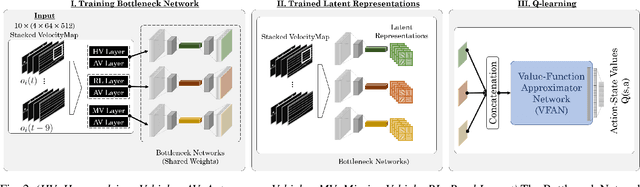

Abstract: Training intelligent agents that can drive autonomously in various urban and highway scenarios has been a hot topic in the robotics community over the last few decades. However, the diversity of driving environments in terms of road topology and the positioning of neighboring vehicles makes this problem very challenging. Scenario-specific driving policies for autonomous driving are promising and can improve transportation safety and efficiency, but they are clearly not a universal, scalable solution. Instead, we seek decision-making schemes and driving policies that can generalize to novel and unseen environments. In this work, we capitalize on the key idea that human drivers learn abstract representations of their surroundings that are fairly similar across driving scenarios and environments. Through these representations, human drivers can quickly adapt to novel environments and drive in unseen conditions. Formally, by imposing an information bottleneck, we extract a latent representation that minimizes the *distance* between driving scenarios, a quantity we introduce to gauge the similarity among different driving configurations. This latent space is then used as the input to a Q-learning module to learn generalizable driving policies. Our experiments revealed that using this latent representation can reduce the number of crashes by about half.

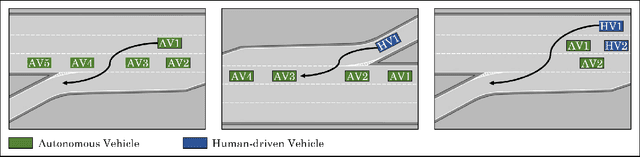

Social Coordination and Altruism in Autonomous Driving

Jul 20, 2021

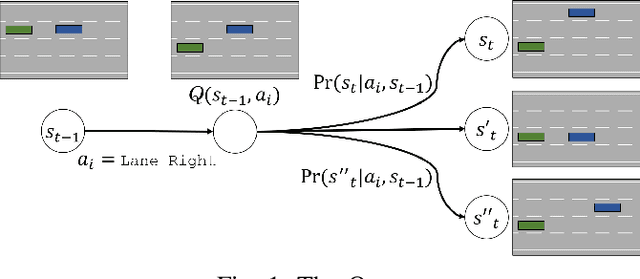

Abstract: Despite the advances in the autonomous driving domain, autonomous vehicles (AVs) are still inefficient and limited in terms of cooperating with each other or coordinating with vehicles operated by humans. A group of autonomous and human-driven vehicles (HVs) that work together to optimize an altruistic social utility (as opposed to the egoistic individual utility) can coexist seamlessly and assure safety and efficiency on the road. Achieving this mission without explicit coordination among agents is challenging, mainly because it is difficult to predict the behavior of humans with heterogeneous preferences in mixed-autonomy environments. Formally, we model an AV's maneuver planning in mixed-autonomy traffic as a partially observable stochastic game and attempt to derive optimal policies that lead to socially desirable outcomes using a multi-agent reinforcement learning framework. We introduce a quantitative representation of the AVs' social preferences and design a distributed reward structure that induces altruism in their decision-making process. Our altruistic AVs are able to form alliances, guide the traffic, and affect the behavior of the HVs to handle competitive driving scenarios. As a case study, we compare egoistic AVs to our altruistic autonomous agents in a highway merging setting. We demonstrate emerging behaviors that noticeably increase the number of successful merges and improve overall traffic flow and safety.
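
The abstract does not give the form of the distributed reward. One formulation from the social-value-orientation literature expresses a social preference as an angle φ. The agent's own reward is then weighted by cos φ and the others' reward by sin φ. The sketch below assumes that form, and it also assumes that the social term is the mean reward of nearby vehicles. It is an illustration, not the paper's reward, and the function name is hypothetical.

```python
import math
from typing import Sequence

def altruistic_reward(ego_reward: float, neighbor_rewards: Sequence[float], phi_rad: float) -> float:
    """Blend ego and social reward with a preference angle phi in [0, pi/2].

    phi = 0 is purely egoistic and phi = pi/2 is purely altruistic.
    The social term is the mean reward of nearby vehicles (assumption).
    """
    if not 0.0 <= phi_rad <= math.pi / 2:
        raise ValueError("phi_rad must lie in [0, pi/2]")
    social = sum(neighbor_rewards) / len(neighbor_rewards) if neighbor_rewards else 0.0
    return math.cos(phi_rad) * ego_reward + math.sin(phi_rad) * social

# Hypothetical step: the AV earns 3.0 while two neighbors earn 0.0 and 2.0.
r = altruistic_reward(3.0, [0.0, 2.0], math.pi / 4)  # about 2.83
```

A single angle keeps the degree of altruism to one tunable scalar per agent. This is one way a social preference can be "quantified" and compared across egoistic and altruistic agents.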
