Chen Tian

DART: Diffusion-Inspired Speculative Decoding for Fast LLM Inference

Jan 27, 2026

Abstract:Speculative decoding is an effective and lossless approach for accelerating LLM inference. However, existing widely adopted model-based draft designs, such as EAGLE3, improve accuracy at the cost of multi-step autoregressive inference, resulting in high drafting latency and ultimately rendering the drafting stage itself a performance bottleneck. Inspired by diffusion-based large language models (dLLMs), we propose DART, which leverages parallel generation to reduce drafting latency. DART predicts logits for multiple future masked positions in parallel within a single forward pass based on hidden states of the target model, thereby eliminating autoregressive rollouts in the draft model while preserving a lightweight design. Based on these parallel logit predictions, we further introduce an efficient tree pruning algorithm that constructs high-quality draft token trees with N-gram-enforced semantic continuity. DART substantially reduces draft-stage overhead while preserving high draft accuracy, leading to significantly improved end-to-end decoding speed. Experimental results demonstrate that DART achieves a 2.03x to 3.44x wall-clock time speedup across multiple datasets, surpassing EAGLE3 by 30% on average and offering a practical speculative decoding framework. Code is released at https://github.com/fvliang/DART.
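As a rough illustration of why speculative decoding can be lossless, the sketch below shows the generic verification step under greedy decoding for a single draft chain. It is not DART's tree-based algorithm; the function name `verify_greedy` and the list-based inputs are assumptions made for this example.

```python
from typing import List


def verify_greedy(draft: List[int], target_next: List[int]) -> List[int]:
    """Accept the longest draft prefix that the target model agrees with.

    Hypothetical inputs for illustration:
      draft        -- token ids proposed by the draft model
      target_next  -- target model's greedy token at each position, computed in
                      one forward pass over prefix + draft; has len(draft) + 1 entries
    """
    accepted: List[int] = []
    for i, tok in enumerate(draft):
        if tok != target_next[i]:
            break  # first disagreement: stop accepting draft tokens
        accepted.append(tok)
    # Always emit one token from the target itself: the correction at the
    # mismatch position, or a bonus token if every draft token was accepted.
    accepted.append(target_next[len(accepted)])
    return accepted
```

Because every emitted token equals what the target model would have produced greedily, the output matches plain autoregressive decoding; the speedup comes from accepting several tokens per target forward pass.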

OrchANN: A Unified I/O Orchestration Framework for Skewed Out-of-Core Vector Search

Dec 28, 2025

Abstract:Approximate nearest neighbor search (ANNS) at billion scale is fundamentally an out-of-core problem: vectors and indexes live on SSD, so performance is dominated by I/O rather than compute. Under skewed semantic embeddings, existing out-of-core systems break down: a uniform local index mismatches cluster scales, static routing misguides queries and inflates the number of probed partitions, and pruning is incomplete at the cluster level and lossy at the vector level, triggering "fetch-to-discard" reranking on raw vectors. We present OrchANN, an out-of-core ANNS engine that uses an I/O orchestration model for unified I/O governance along the route-access-verify pipeline. OrchANN selects a heterogeneous local index per cluster via offline auto-profiling, maintains a query-aware in-memory navigation graph that adapts to skewed workloads, and applies multi-level pruning with geometric bounds to filter both clusters and vectors before issuing SSD reads. Across five standard datasets under strict out-of-core constraints, OrchANN outperforms four baselines including DiskANN, Starling, SPANN, and PipeANN in both QPS and latency while reducing SSD accesses. Furthermore, OrchANN delivers up to 17.2x higher QPS and 25.0x lower latency than competing systems without sacrificing accuracy.
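One common form of geometric-bound pruning uses the triangle inequality: if a cluster has centroid c and radius r, every vector x in it satisfies d(q, x) >= d(q, c) - r, so the cluster can be skipped without any SSD read when that lower bound already exceeds the current k-th best distance. The sketch below illustrates only this generic idea; the abstract does not specify which bounds OrchANN uses, and `prune_clusters` and its tuple layout are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# (cluster id, centroid, radius) -- hypothetical in-memory cluster summary
Cluster = Tuple[str, Sequence[float], float]


def prune_clusters(query: Sequence[float],
                   clusters: List[Cluster],
                   kth_best: float) -> List[str]:
    """Return ids of clusters that may still contain a better neighbor."""
    keep: List[str] = []
    for cid, centroid, radius in clusters:
        # Triangle-inequality lower bound on the distance from the query
        # to any vector inside the cluster's bounding ball.
        lower = max(0.0, math.dist(query, centroid) - radius)
        if lower <= kth_best:
            keep.append(cid)
    return keep
```

Only the clusters returned by this filter would need to be fetched from disk; the rest are provably unable to improve the current top-k under the stated bound.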

Chordless Structure: A Pathway to Simple and Expressive GNNs

May 25, 2025

Abstract:Researchers have proposed various methods of incorporating more structured information into the design of Graph Neural Networks (GNNs) to enhance their expressiveness. However, these methods are either computationally expensive or lacking in provable expressiveness. In this paper, we observe that the chords increase the complexity of the graph structure while contributing little useful information in many cases. In contrast, chordless structures are more efficient and effective for representing the graph. Therefore, when leveraging the information of cycles, we choose to omit the chords. Accordingly, we propose a Chordless Structure-based Graph Neural Network (CSGNN) and prove that its expressiveness is strictly more powerful than the k-hop GNN (KPGNN) with polynomial complexity. Experimental results on real-world datasets demonstrate that CSGNN outperforms existing GNNs across various graph tasks while incurring lower computational costs and achieving better performance than the GNNs of 3-WL expressiveness.

FlashForge: Ultra-Efficient Prefix-Aware Attention for LLM Decoding

May 23, 2025

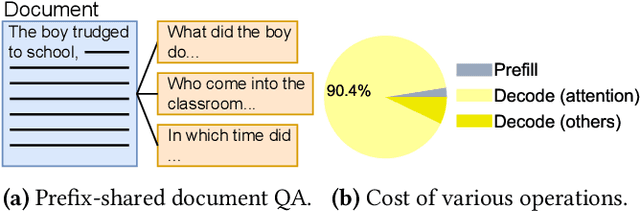

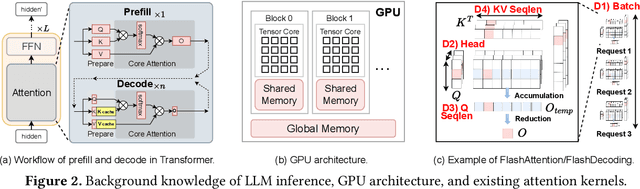

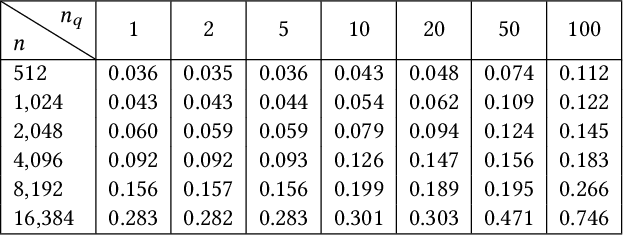

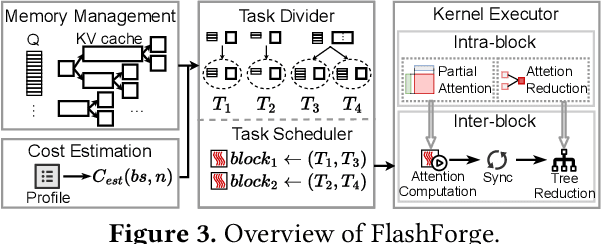

Abstract:Prefix-sharing among multiple prompts presents opportunities to combine the operations of the shared prefix, while attention computation in the decode stage, which becomes a critical bottleneck with increasing context lengths, is a memory-intensive process requiring heavy memory access on the key-value (KV) cache of the prefixes. Therefore, in this paper, we explore the potential of prefix-sharing in the attention computation of the decode stage. However, the tree structure of the prefix-sharing mechanism presents significant challenges for attention computation in efficiently processing shared KV cache access patterns while managing complex dependencies and balancing irregular workloads. To address these challenges, we propose FlashForge, a dedicated attention kernel that combines the memory access of shared prefixes in the decode stage. FlashForge delivers two key innovations: a novel shared-prefix attention kernel that optimizes memory hierarchy and exploits both intra-block and inter-block parallelism, and a comprehensive workload balancing mechanism that efficiently estimates cost, divides tasks, and schedules execution. Experimental results show that FlashForge achieves an average 1.9x speedup and 120.9x memory access reduction compared to the state-of-the-art FlashDecoding kernel for attention computation in the decode stage, and a 3.8x improvement in end-to-end time per output token compared to vLLM.

Revisiting SLO and Goodput Metrics in LLM Serving

Oct 18, 2024

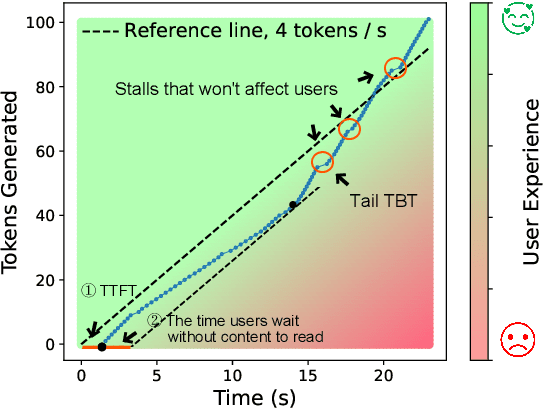

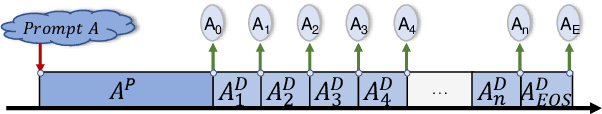

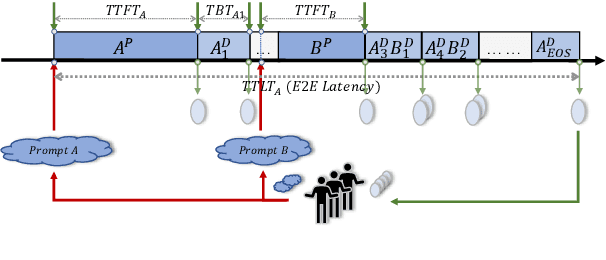

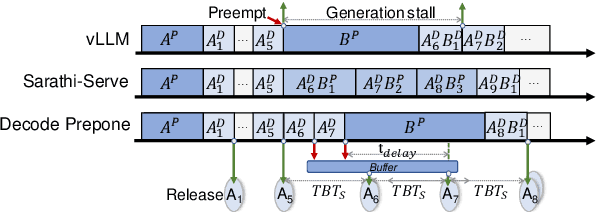

Abstract:Large language models (LLMs) have achieved remarkable performance and are widely deployed in various applications, while the serving of LLM inference has raised concerns about user experience and serving throughput. Accordingly, service level objectives (SLOs) and goodput (the number of requests that meet SLOs per second) are introduced to evaluate the performance of LLM serving. However, existing metrics fail to capture the nature of user experience. We observe two ridiculous phenomena in existing metrics: 1) delaying token delivery can smooth the tail time between tokens (tail TBT) of a request and 2) dropping a request that fails to meet the SLOs midway can improve goodput. In this paper, we revisit SLO and goodput metrics in LLM serving and propose a unified metric framework, smooth goodput, which incorporates SLOs and goodput to reflect the nature of user experience in LLM serving. The framework can adapt to the specific goals of different tasks by setting parameters. We re-evaluate the performance of different LLM serving systems under multiple workloads based on this unified framework and provide possible directions for future optimization of existing strategies. We hope that this framework can provide a unified standard for evaluating LLM serving and foster research in the field of LLM serving optimization to move in a cohesive direction.
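For readers unfamiliar with the conventional metric, the sketch below computes goodput as defined in the abstract: requests meeting their SLOs, per second. It illustrates the existing metric only, not the proposed smooth goodput framework. The choice of SLOs (a time-to-first-token limit and a per-gap time-between-tokens limit) and all names here are assumptions for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    ttft: float        # time to first token, in seconds (assumed SLO dimension)
    tbts: List[float]  # gaps between consecutive output tokens, in seconds


def meets_slo(req: Request, ttft_slo: float, tbt_slo: float) -> bool:
    """A request meets its SLOs if TTFT and every token gap are within limits."""
    return req.ttft <= ttft_slo and all(gap <= tbt_slo for gap in req.tbts)


def goodput(requests: List[Request], ttft_slo: float, tbt_slo: float,
            window_s: float) -> float:
    """Number of SLO-compliant requests per second over a measurement window."""
    ok = sum(meets_slo(r, ttft_slo, tbt_slo) for r in requests)
    return ok / window_s
```

Note that under this definition a request that violates an SLO contributes nothing regardless of how many tokens it delivered, which is the kind of all-or-nothing behavior the abstract argues fails to reflect user experience.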

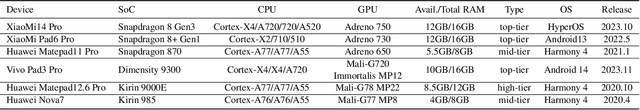

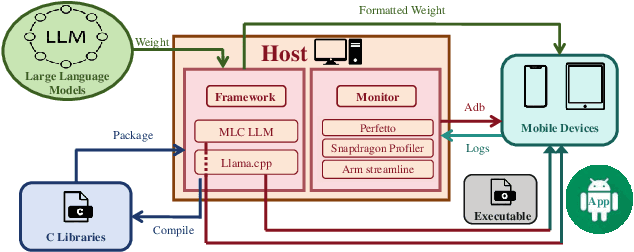

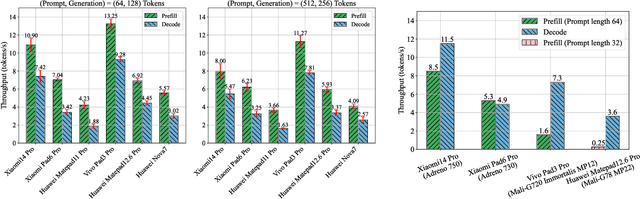

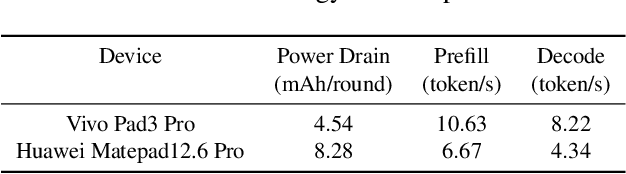

Large Language Model Performance Benchmarking on Mobile Platforms: A Thorough Evaluation

Oct 04, 2024

Abstract:As large language models (LLMs) increasingly integrate into every aspect of our work and daily lives, there are growing concerns about user privacy, which push the trend toward local deployment of these models. There are a number of lightweight LLMs (e.g., Gemini Nano, LLAMA2 7B) that can run locally on smartphones, providing users with greater control over their personal data. Because this is a rapidly emerging application, we are concerned about the performance of these models on commercial-off-the-shelf mobile devices. To fully understand the current landscape of LLM deployment on mobile platforms, we conduct a comprehensive measurement study on mobile devices. We evaluate both metrics that affect user experience (token throughput, latency, and battery consumption) and factors critical to developers, such as resource utilization, DVFS strategies, and inference engines. In addition, we provide a detailed analysis of how these hardware capabilities and system dynamics affect on-device LLM performance, which may help developers identify and address bottlenecks for mobile LLM applications. We also provide comprehensive comparisons across the mobile system-on-chips (SoCs) from major vendors, highlighting their performance differences in handling LLM workloads. We hope that this study can provide insights for both the development of on-device LLMs and the design of future mobile system architectures.

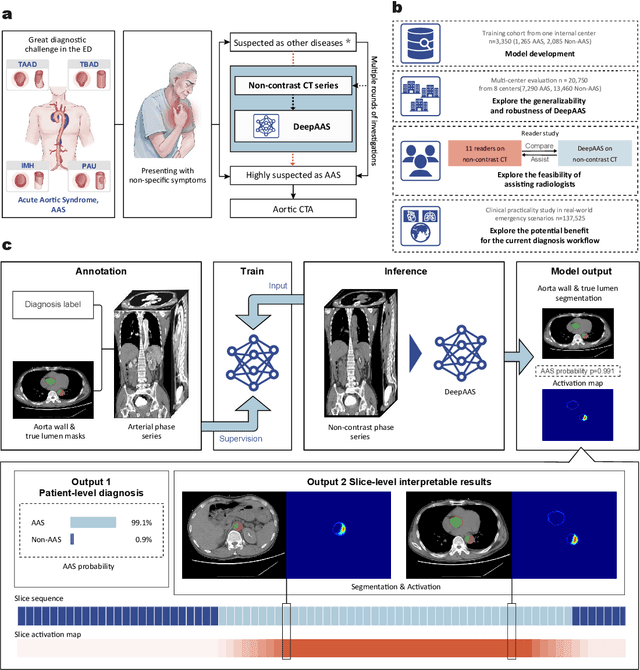

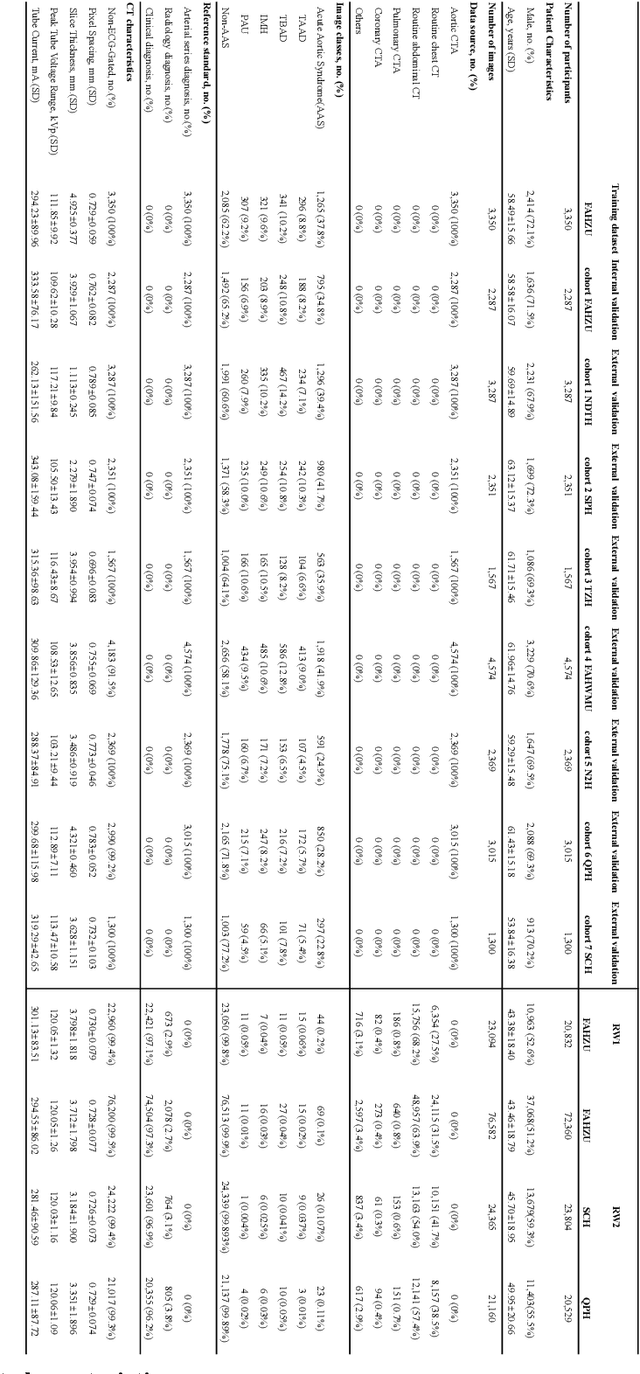

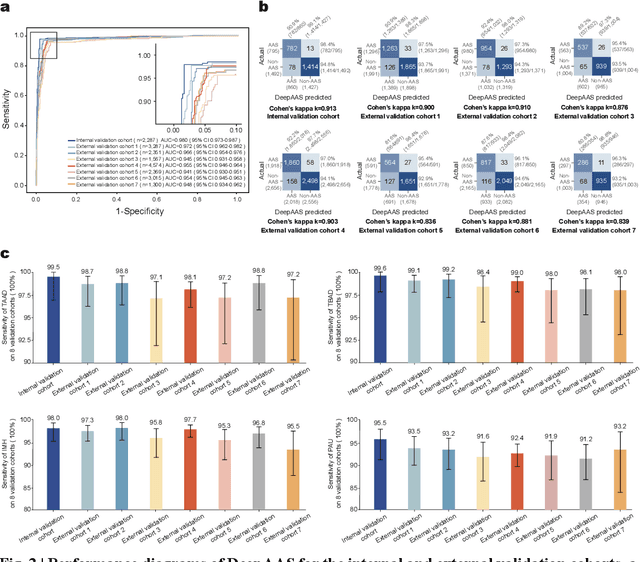

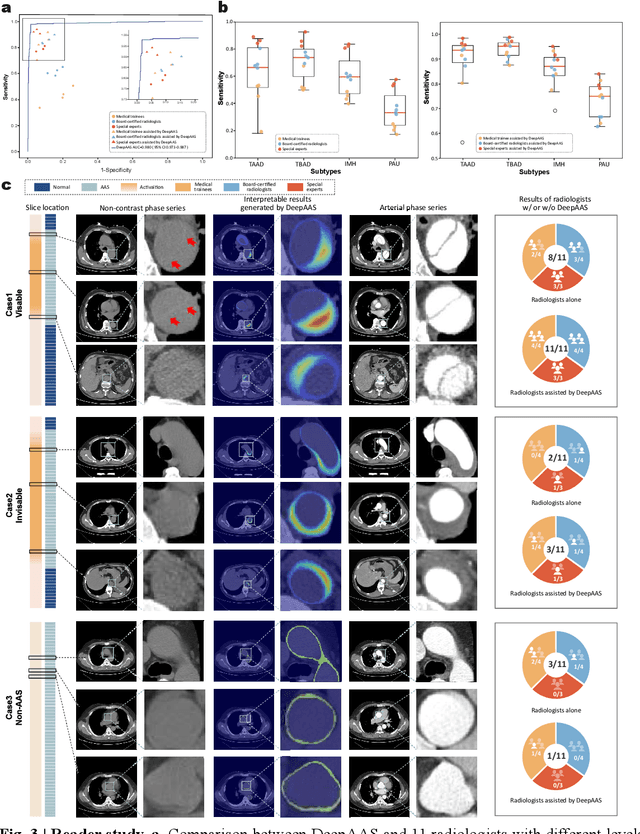

Rapid and Accurate Diagnosis of Acute Aortic Syndrome using Non-contrast CT: A Large-scale, Retrospective, Multi-center and AI-based Study

Jun 25, 2024

Abstract:Chest pain symptoms are highly prevalent in emergency departments (EDs), where acute aortic syndrome (AAS) is a catastrophic cardiovascular emergency with a high fatality rate, especially when timely and accurate treatment is not administered. However, current triage practices in the ED can cause up to approximately half of patients with AAS to have an initially missed diagnosis or be misdiagnosed as having other acute chest pain conditions. Subsequently, these AAS patients will undergo clinically inaccurate or suboptimal differential diagnosis. Fortunately, even under these suboptimal protocols, nearly all these patients underwent non-contrast CT covering the aorta anatomy at the early stage of differential diagnosis. In this study, we developed an artificial intelligence model (DeepAAS) using non-contrast CT, which is highly accurate for identifying AAS and provides interpretable results to assist in clinical decision-making. Performance was assessed in two major phases: a multi-center retrospective study (n = 20,750) and an exploration in real-world emergency scenarios (n = 137,525). In the multi-center cohort, DeepAAS achieved a mean area under the receiver operating characteristic curve of 0.958 (95% CI 0.950-0.967). In the real-world cohort, DeepAAS detected 109 AAS patients with misguided initial suspicion, achieving 92.6% (95% CI 76.2%-97.5%) in mean sensitivity and 99.2% (95% CI 99.1%-99.3%) in mean specificity. Our AI model performed well on non-contrast CT at all applicable early stages of differential diagnosis workflows, effectively reduced the overall missed diagnosis and misdiagnosis rate from 48.8% to 4.8% and shortened the diagnosis time for patients with misguided initial suspicion from an average of 681.8 (74-11,820) mins to 68.5 (23-195) mins. DeepAAS could effectively fill the gap in the current clinical workflow without requiring additional tests.

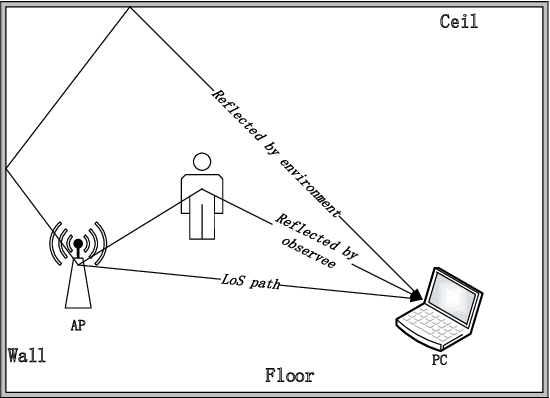

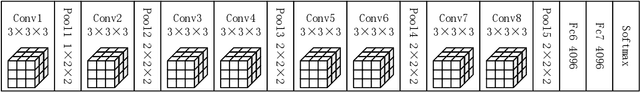

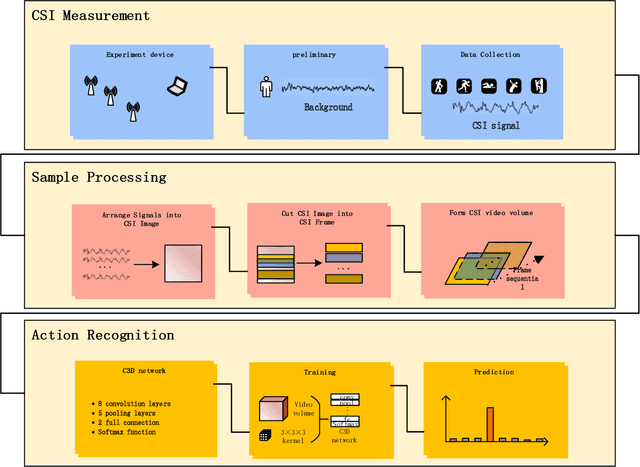

Simultaneous Implementation Features Extraction and Recognition Using C3D Network for WiFi-based Human Activity Recognition

Nov 21, 2019

Abstract:Human action recognition has attracted growing attention. Many technologies have been developed to capture the features of human actions, such as image-based, skeleton-based, and channel state information (CSI)-based approaches. Among them, CSI is easy to deploy and does not depend on lighting, so it has gained increasing attention in some special scenarios. However, the relationship between CSI signals and human actions is very complex, and some preliminary processing must be done to make CSI features easy for a computer to interpret. Currently, many works split CSI-based action recognition into two parts: one for feature extraction and dimensionality reduction, and the other for time-series modeling. Some even omit one of the two parts. Therefore, the accuracy of current recognition systems is far from satisfactory. In this paper, we propose a new deep learning based approach, i.e., a C3D network and a C3D network with an attention mechanism, for human action recognition using CSI signals. This kind of network performs feature extraction through spatial and temporal convolution simultaneously, so the two parts of CSI-based human action recognition mentioned above are realized at the same time and the overall algorithm structure is simplified. The experimental results show that our proposed C3D network achieves the best recognition performance for all activities when compared with some benchmark approaches.

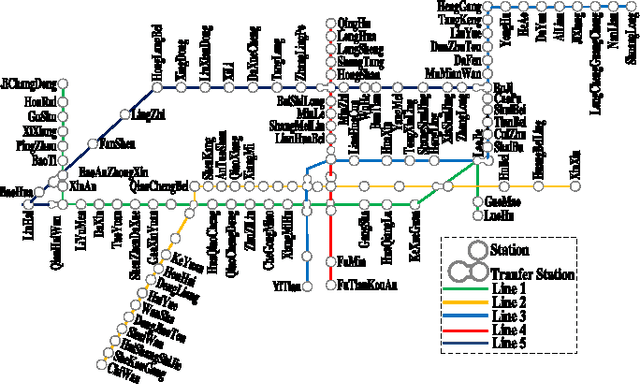

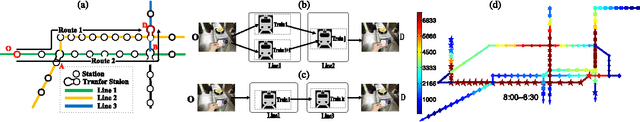

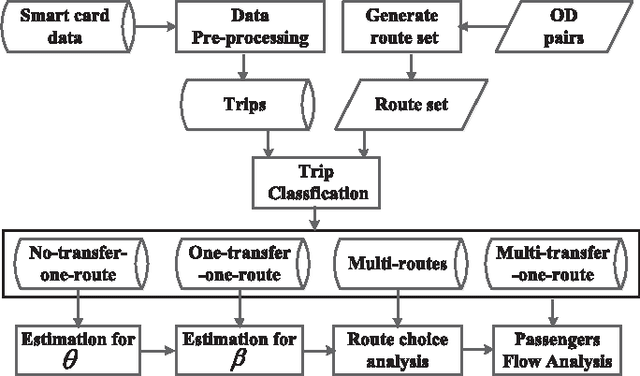

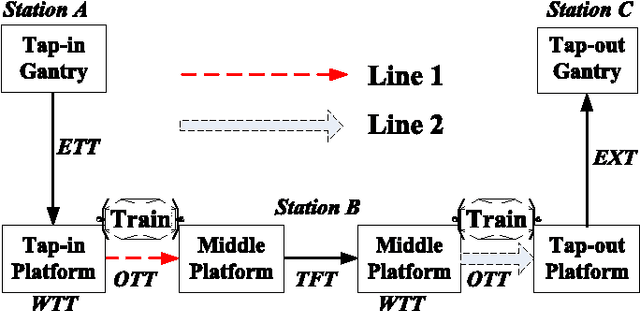

Estimation of Passenger Route Choice Pattern Using Smart Card Data for Complex Metro Systems

Apr 19, 2016

Abstract:Nowadays, metro systems play an important role in meeting the urban transportation demand in large cities. The understanding of passenger route choice is critical for public transit management. The wide deployment of Automated Fare Collection (AFC) systems opens up a new opportunity. However, only each trip's tap-in and tap-out timestamps and stations can be directly obtained from AFC system records; the train and route chosen by a passenger, which are necessary to solve our problem, are unknown. While existing methods work well in some specific situations, they do not work for complicated situations. In this paper, we propose a solution that needs no additional equipment or human involvement beyond the AFC systems. We develop a probabilistic model that can estimate from empirical analysis how the passenger flows are dispatched to different routes and trains. We validate our approach using a large-scale data set collected from the Shenzhen metro system. The measured results provide us with useful inputs when building the passenger path choice model.
