Changjian Wang

D-FaST: Cognitive Signal Decoding with Disentangled Frequency-Spatial-Temporal Attention

Jun 02, 2024

Abstract: Cognitive Language Processing (CLP), situated at the intersection of Natural Language Processing (NLP) and cognitive science, plays an increasingly pivotal role in artificial intelligence, cognitive intelligence, and brain science. Among the essential areas of investigation in CLP, Cognitive Signal Decoding (CSD) has made remarkable achievements, yet challenges remain: insufficient global dynamic representation capability and deficiencies in multi-domain feature integration. In this paper, we introduce a novel paradigm for CLP referred to as Disentangled Frequency-Spatial-Temporal Attention (D-FaST). Specifically, we present a novel cognitive signal decoder that operates on disentangled frequency-space-time domain attention. This decoder encompasses three key components: frequency domain feature extraction employing multi-view attention, spatial domain feature extraction utilizing dynamic brain connection graph attention, and temporal feature extraction relying on local time sliding window attention. These components are integrated within a novel disentangled framework. Additionally, to encourage advancements in this field, we have created a new CLP dataset, MNRED. We conducted an extensive series of experiments, evaluating D-FaST's performance on MNRED as well as on publicly available datasets including ZuCo, BCIC IV-2A, and BCIC IV-2B. Our experimental results demonstrate that D-FaST significantly outperforms existing methods on both our dataset and traditional CSD datasets, establishing a state-of-the-art accuracy of 78.72% on MNRED and pushing the accuracy to 78.35% on ZuCo, 74.85% on BCIC IV-2A, and 76.81% on BCIC IV-2B.

At Which Training Stage Does Code Data Help LLMs Reasoning?

Sep 30, 2023

Abstract: Large Language Models (LLMs) have exhibited remarkable reasoning capabilities and become the foundation of language technologies. Inspired by the great success of code data in training LLMs, we naturally wonder at which training stage introducing code data can really help LLMs' reasoning. To this end, this paper systematically explores the impact of code data on LLMs at different stages. Concretely, we introduce code data at the pre-training stage, at the instruction-tuning stage, and at both stages. Then, the reasoning capability of LLMs is comprehensively and fairly evaluated via six reasoning tasks in five domains. We critically analyze the experimental results and provide conclusions with insights. First, pre-training LLMs with a mixture of code and text can significantly enhance their general reasoning capability, with almost no negative transfer on other tasks. Second, at the instruction-tuning stage, code data endows LLMs with task-specific reasoning capability. Moreover, a dynamic mixing strategy of code and text data helps LLMs learn reasoning capability step by step during training. These insights deepen the understanding of LLMs' reasoning ability for applications such as scientific question answering and legal support. The source code and model parameters are released at https://github.com/yingweima2022/CodeLLM.

GeoTransformer: Fast and Robust Point Cloud Registration with Geometric Transformer

Jul 25, 2023

Abstract: We study the problem of extracting accurate correspondences for point cloud registration. Recent keypoint-free methods have shown great potential by bypassing the detection of repeatable keypoints, which is difficult especially in low-overlap scenarios. They seek correspondences over downsampled superpoints, which are then propagated to dense points. Superpoints are matched based on whether their neighboring patches overlap. Such sparse and loose matching requires contextual features capturing the geometric structure of the point clouds. We propose Geometric Transformer, or GeoTransformer for short, to learn geometric features for robust superpoint matching. It encodes pair-wise distances and triplet-wise angles, making it invariant to rigid transformation and robust in low-overlap cases. The simplistic design attains surprisingly high matching accuracy such that no RANSAC is required in the estimation of the alignment transformation, leading to 100 times acceleration. Extensive experiments on rich benchmarks encompassing indoor, outdoor, synthetic, multiway and non-rigid scenarios demonstrate the efficacy of GeoTransformer. Notably, our method improves the inlier ratio by 18 to 31 percentage points and the registration recall by over 7 points on the challenging 3DLoMatch benchmark. Our code and models are available at https://github.com/qinzheng93/GeoTransformer.
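
The invariance property mentioned in this abstract can be checked numerically. The following is a minimal sketch, not the authors' implementation: the helper names (`triplet_angle`, `rigid_transform`) and the sample points are hypothetical, and the angle is taken as the angle at one point between the directions to two other points, which is an assumed reading of "triplet-wise angles".

```python
import math

def triplet_angle(p, q, k):
    """Angle at p between the directions p->q and p->k (assumed definition)."""
    a = [qi - pi for qi, pi in zip(q, p)]
    b = [ki - pi for ki, pi in zip(k, p)]
    dot = sum(x * y for x, y in zip(a, b))
    cos = dot / (math.hypot(*a) * math.hypot(*b))
    # Clamp to [-1, 1] to guard against rounding before acos.
    return math.acos(max(-1.0, min(1.0, cos)))

def rigid_transform(p, angle, shift):
    """Rotate p about the z-axis by `angle` radians, then translate by `shift`."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y + shift[0], s * x + c * y + shift[1], z + shift[2])

# Hypothetical sample points and an arbitrary rigid motion.
p, q, k = (0.0, 0.0, 0.0), (1.0, 2.0, 0.5), (-1.0, 0.5, 2.0)
tp, tq, tk = (rigid_transform(v, 0.9, (3.0, -2.0, 1.0)) for v in (p, q, k))

dist_before, dist_after = math.dist(p, q), math.dist(tp, tq)
angle_before, angle_after = triplet_angle(p, q, k), triplet_angle(tp, tq, tk)

print(math.isclose(dist_before, dist_after, abs_tol=1e-9))
print(math.isclose(angle_before, angle_after, abs_tol=1e-9))
```

Because rotations and translations preserve lengths and angles, both quantities agree before and after the transformation up to floating-point error, which is why features built from them do not depend on the pose of either point cloud.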

Deep Graph-based Spatial Consistency for Robust Non-rigid Point Cloud Registration

Mar 17, 2023

Abstract: We study the problem of outlier correspondence pruning for non-rigid point cloud registration. In rigid registration, spatial consistency has been a commonly used criterion to discriminate outliers from inliers. It measures the compatibility of two correspondences by the discrepancy between the respective distances in two point clouds. However, spatial consistency no longer holds in non-rigid cases, and outlier rejection for non-rigid registration has not been well studied. In this work, we propose Graph-based Spatial Consistency Network (GraphSCNet) to filter outliers for non-rigid registration. Our method is based on the fact that non-rigid deformations are usually locally rigid, or locally shape-preserving. We first design a local spatial consistency measure over the deformation graph of the point cloud, which evaluates the spatial compatibility only between the correspondences in the vicinity of a graph node. An attention-based non-rigid correspondence embedding module is then devised to learn a robust representation of non-rigid correspondences from local spatial consistency. Despite its simplicity, GraphSCNet effectively improves the quality of the putative correspondences and attains state-of-the-art performance on three challenging benchmarks. Our code and models are available at https://github.com/qinzheng93/GraphSCNet.
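
The rigid spatial consistency criterion described in this abstract can be illustrated with a small sketch. It covers only the classical rigid measure (the difference between the pairwise distances of two correspondences in the two point clouds), not GraphSCNet's local, graph-based variant. The function names, the sample points, and the threshold of 0.1 are all hypothetical.

```python
import math

def spatial_consistency(src, tgt):
    """d[i][j] = | ||src_i - src_j|| - ||tgt_i - tgt_j|| | for correspondences (src_i, tgt_i)."""
    n = len(src)
    return [
        [abs(math.dist(src[i], src[j]) - math.dist(tgt[i], tgt[j])) for j in range(n)]
        for i in range(n)
    ]

def rotate_z_and_shift(p, angle, shift):
    """Rigid motion used to generate the target points: z-rotation plus translation."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y + shift[0], s * x + c * y + shift[1], z + shift[2])

# Four hypothetical correspondences; the last target point is corrupted (an outlier).
src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (1.0, 1.0, 3.0)]
tgt = [rotate_z_and_shift(p, 0.7, (0.5, -1.0, 2.0)) for p in src]
tgt[3] = (10.0, 10.0, 10.0)

d = spatial_consistency(src, tgt)
threshold = 0.1  # hypothetical compatibility threshold

# Count, for each correspondence, how many correspondences it is compatible with.
support = [sum(1 for j in range(len(src)) if d[i][j] < threshold) for i in range(len(src))]
print(support)
```

Inliers are mutually compatible under a rigid motion, so they collect high support, while the corrupted correspondence is compatible only with itself. Under non-rigid deformation the global distances change, which is why the abstract restricts the measure to correspondences near the same deformation-graph node.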

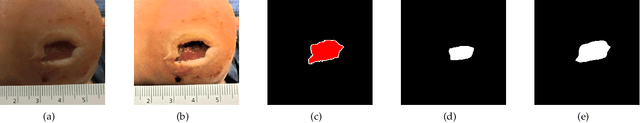

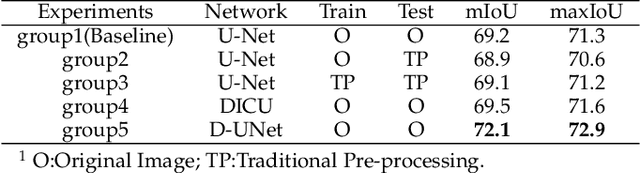

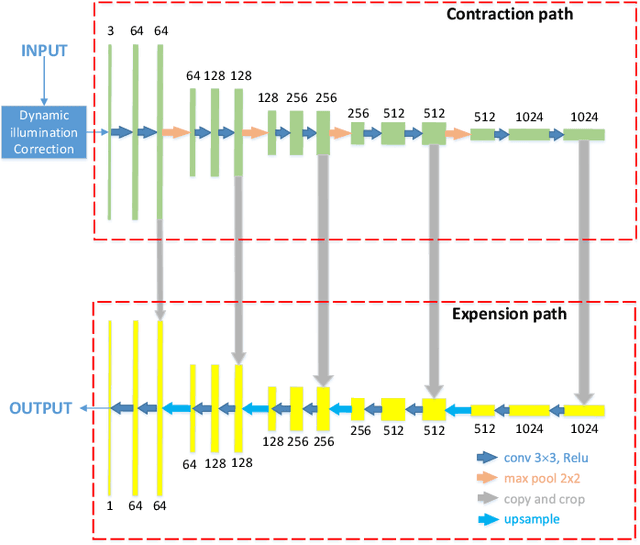

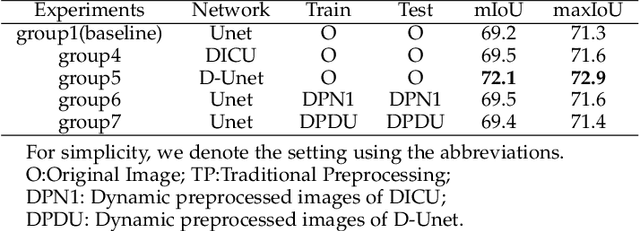

Wound Segmentation with Dynamic Illumination Correction and Dual-view Semantic Fusion

Jul 12, 2022

Abstract: Wound image segmentation is a critical component of the clinical diagnosis and timely treatment of wounds. Recently, deep learning has become the mainstream methodology for wound image segmentation. However, pre-processing of the wound image, such as illumination correction, is required before the training phase because it can greatly improve performance. The correction procedure and the training of deep models are independent of each other, which leads to sub-optimal segmentation performance, as a fixed illumination correction may not be suitable for all images. To address these issues, an end-to-end dual-view segmentation approach is proposed in this paper, which incorporates a learnable illumination correction module into deep segmentation models. The parameters of the module are learned and updated automatically during the training stage, while the dual-view fusion fully exploits the features from both the raw images and the enhanced ones. To demonstrate the effectiveness and robustness of the proposed framework, extensive experiments are conducted on benchmark datasets. The encouraging results suggest that our framework can significantly improve segmentation performance compared to state-of-the-art methods.

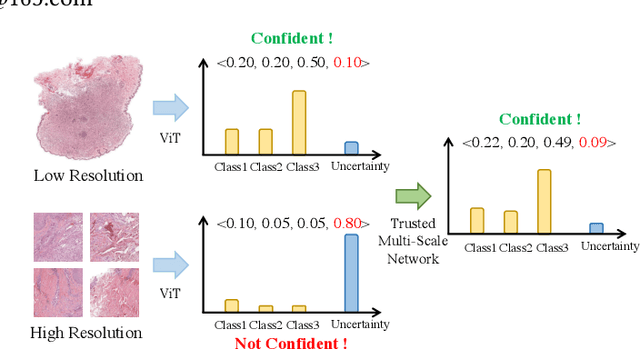

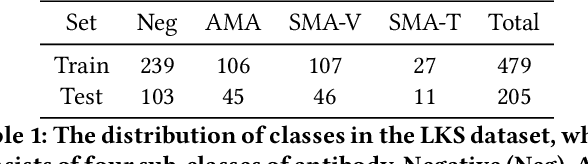

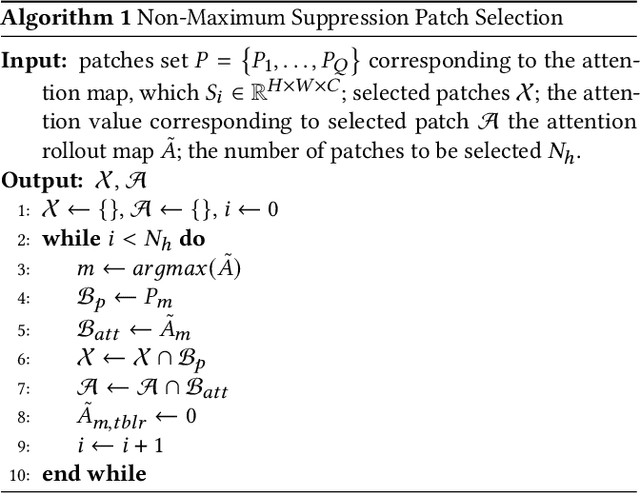

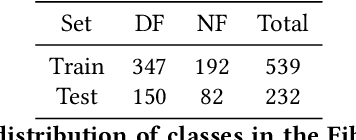

Trusted Multi-Scale Classification Framework for Whole Slide Image

Jul 12, 2022

Abstract: Despite remarkable efforts, the classification of gigapixel whole-slide images (WSIs) is severely constrained either by the limited computing resources available for whole slides or by limited use of the knowledge from different scales. Moreover, most previous attempts lacked the ability to estimate uncertainty. Pathologists often jointly analyze a WSI at different magnifications. If they are uncertain when using a single magnification, they change the magnification repeatedly to discover various features of the tissue. Motivated by this diagnostic process, in this paper we propose a trusted multi-scale classification framework for WSIs. Leveraging the Vision Transformer as the backbone for multiple branches, our framework can jointly model classification, estimate the uncertainty at each magnification of a microscope, and integrate the evidence from different magnifications. Moreover, to exploit discriminative patches from WSIs and reduce the requirement for computation resources, we propose a novel patch selection schema using attention rollout and non-maximum suppression. To empirically investigate the effectiveness of our approach, experiments are conducted on our WSI classification tasks, using two benchmark databases. The results suggest that the trusted framework can significantly improve WSI classification performance compared with state-of-the-art methods.

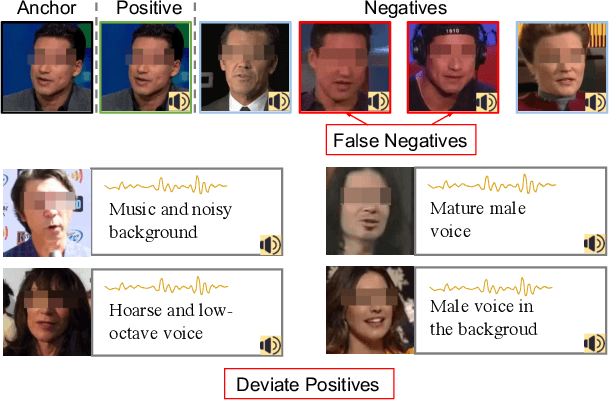

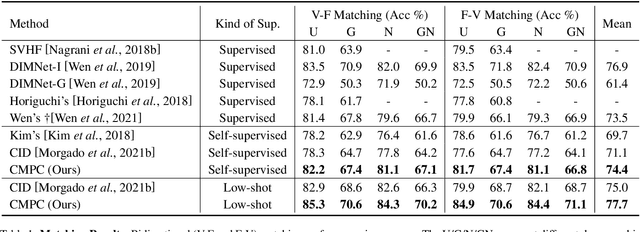

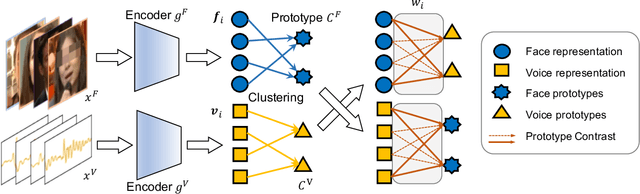

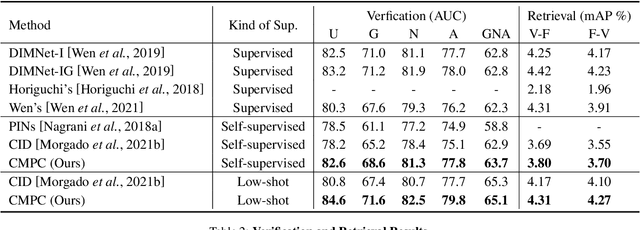

Unsupervised Voice-Face Representation Learning by Cross-Modal Prototype Contrast

May 02, 2022

Abstract: We present an approach to learn voice-face representations from talking-face videos, without any identity labels. Previous works employ cross-modal instance discrimination tasks to establish the correlation between voice and face. These methods neglect the semantic content of different videos, introducing false-negative pairs as training noise. Furthermore, the positive pairs are constructed based on the natural correlation between audio clips and visual frames. However, this correlation might be weak or inaccurate in a large amount of real-world data, which introduces deviating positives into the contrastive paradigm. To address these issues, we propose cross-modal prototype contrastive learning (CMPC), which takes advantage of contrastive methods and resists the adverse effects of false negatives and deviating positives. On the one hand, CMPC learns intra-class invariance by constructing semantic-wise positives via unsupervised clustering in different modalities. On the other hand, by comparing the similarities of cross-modal instances with those of cross-modal prototypes, we dynamically recalibrate the contribution of unlearnable instances to the overall loss. Experiments show that the proposed approach outperforms state-of-the-art unsupervised methods on various voice-face association evaluation protocols. Additionally, in the low-shot supervision setting, our method improves significantly over previous instance-wise contrastive learning.

Geometric Transformer for Fast and Robust Point Cloud Registration

Mar 12, 2022

Abstract: We study the problem of extracting accurate correspondences for point cloud registration. Recent keypoint-free methods bypass the detection of repeatable keypoints, which is difficult in low-overlap scenarios, and show great potential in registration. They seek correspondences over downsampled superpoints, which are then propagated to dense points. Superpoints are matched based on whether their neighboring patches overlap. Such sparse and loose matching requires contextual features capturing the geometric structure of the point clouds. We propose Geometric Transformer to learn geometric features for robust superpoint matching. It encodes pair-wise distances and triplet-wise angles, making it robust in low-overlap cases and invariant to rigid transformation. The simplistic design attains surprisingly high matching accuracy such that no RANSAC is required in the estimation of the alignment transformation, leading to 100 times acceleration. Our method improves the inlier ratio by 17 to 30 percentage points and the registration recall by over 7 points on the challenging 3DLoMatch benchmark. Our code and models are available at https://github.com/qinzheng93/GeoTransformer.

hi-RF: Incremental Learning Random Forest for large-scale multi-class Data Classification

Oct 31, 2016

Abstract: In recent years, dynamically growing data and an incrementally growing number of classes have posed new challenges to large-scale data classification research. Most traditional methods struggle to balance precision and computational burden as the data and the number of classes increase: some methods have weak precision, while others are time-consuming. In this paper, we propose an incremental learning method, namely heterogeneous incremental Nearest Class Mean Random Forest (hi-RF), to handle this issue. It is a heterogeneous method that adaptively either replaces trees or updates tree leaves in the random forest when data of new classes arrive, reducing computational time at comparable performance. Specifically, to maintain accuracy, a proportion of the trees is replaced by new NCM decision trees; to reduce the computational load, the remaining trees only have their leaf probabilities updated. Most importantly, out-of-bag estimation and out-of-bag boosting are proposed to balance accuracy and computational efficiency. Fair experiments were conducted and demonstrated comparable precision with much less computational time.
