Canjia Li

SERM: Self-Evolving Relevance Model with Agent-Driven Learning from Massive Query Streams

Jan 14, 2026

Abstract:Due to the dynamically evolving nature of real-world query streams, relevance models struggle to generalize to practical search scenarios. Self-evolution techniques are a promising solution. However, in large-scale industrial settings with massive query streams, these techniques face two challenges: (1) informative samples are often sparse and difficult to identify, and (2) pseudo-labels generated by the current model can be unreliable. To address these challenges, we propose a Self-Evolving Relevance Model approach (SERM), which comprises two complementary multi-agent modules: a multi-agent sample miner, designed to detect distributional shifts and identify informative training samples, and a multi-agent relevance annotator, which provides reliable labels through a two-level agreement framework. We evaluate SERM in a large-scale industrial setting that serves billions of user requests daily. Experimental results demonstrate that SERM achieves significant performance gains through iterative self-evolution, as validated by extensive offline multilingual evaluations and online testing.

Pretrained Language Model based Web Search Ranking: From Relevance to Satisfaction

Jun 02, 2023

Abstract:Search engines play a crucial role in satisfying users' diverse information needs. Recently, text ranking models based on Pretrained Language Models (PLMs) have achieved huge success in web search. However, many state-of-the-art text ranking approaches focus only on core relevance while ignoring other dimensions that contribute to user satisfaction, e.g., document quality, recency, and authority. In this work, we focus on ranking by user satisfaction rather than relevance in web search, and propose a PLM-based framework, namely SAT-Ranker, which comprehensively models different dimensions of user satisfaction in a unified manner. In particular, we leverage the capacities of PLMs on both textual and numerical inputs, and apply a multi-field input that modularizes each dimension of user satisfaction as an input field. Overall, SAT-Ranker is an effective, extensible, and data-centric framework that has huge potential for industrial applications. In rigorous offline and online experiments, SAT-Ranker obtains remarkable gains on various evaluation sets targeting different dimensions of user satisfaction. It is now fully deployed online to improve the usability of our search engine.

Query-aware Tip Generation for Vertical Search

Oct 19, 2020

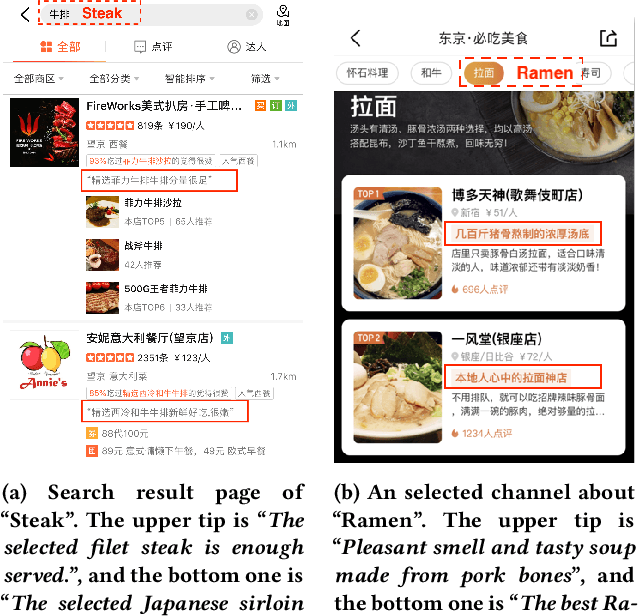

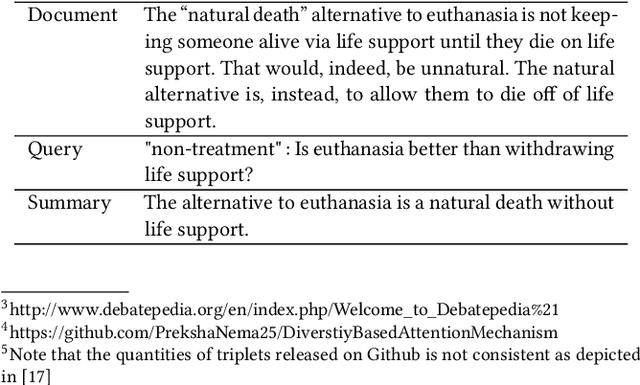

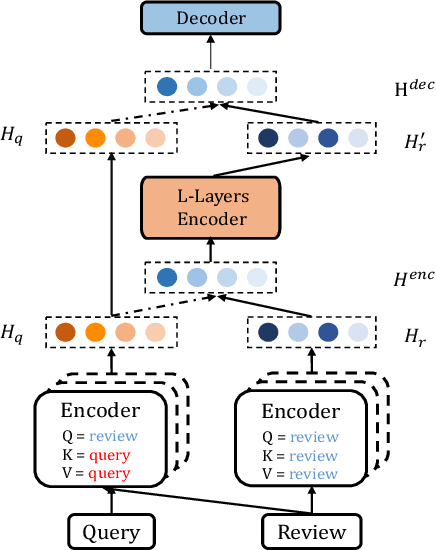

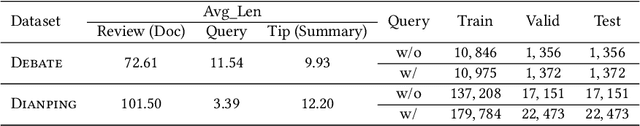

Abstract:As a concise form of user reviews, tips have unique advantages in explaining search results, assisting users' decision making, and further improving user experience in vertical search scenarios. Existing work on tip generation does not take the query into consideration, which limits the impact of tips in search scenarios. To address this issue, this paper proposes a query-aware tip generation framework that integrates query information into the encoding and subsequent decoding processes. Two specific adaptations, for Transformer and Recurrent Neural Network (RNN), are proposed. For Transformer, the query impact is incorporated into the self-attention computation of both the encoder and the decoder. As for RNN, the query-aware encoder adopts a selective network to distill query-relevant information from the review, while the query-aware decoder integrates the query information into the attention computation during decoding. The framework consistently outperforms the competing methods on both public and real-world industrial datasets. Finally, online deployment experiments on Dianping demonstrate the advantage of the proposed framework for tip generation as well as its online business value.

NPRF: A Neural Pseudo Relevance Feedback Framework for Ad-hoc Information Retrieval

Oct 30, 2018

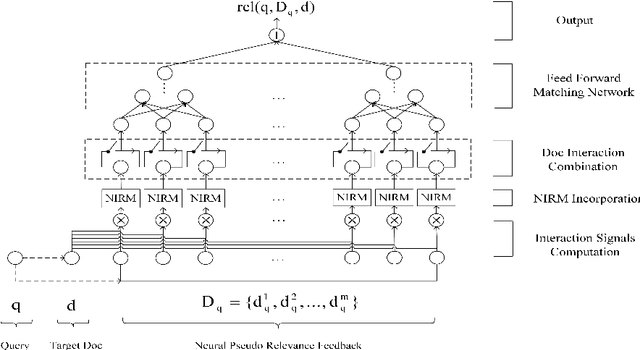

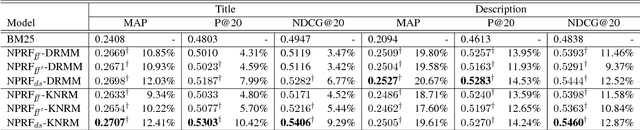

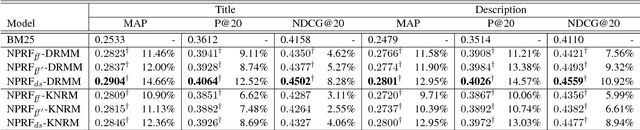

Abstract:Pseudo-relevance feedback (PRF) is commonly used to boost the performance of traditional information retrieval (IR) models by using top-ranked documents to identify and weight new query terms, thereby reducing the effect of query-document vocabulary mismatches. While neural retrieval models have recently demonstrated strong results for ad-hoc retrieval, combining them with PRF is not straightforward due to incompatibilities between existing PRF approaches and neural architectures. To bridge this gap, we propose an end-to-end neural PRF framework that can be used with existing neural IR models by embedding different neural models as building blocks. Extensive experiments on two standard test collections confirm the effectiveness of the proposed NPRF framework in improving the performance of two state-of-the-art neural IR models.
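As a rough illustration of the classical PRF idea described in the abstract above (not the NPRF framework itself), the following minimal sketch treats the top-ranked documents as relevant and adds their most common non-query terms to the query with a weight. The function name `expand_query`, its parameters, and the document-frequency weighting are assumptions made for illustration only; real PRF methods use more careful term scoring.

```python
from collections import Counter


def expand_query(query_terms, ranked_docs, top_k=2, num_new_terms=1):
    """Return {term: weight} for the original query plus feedback terms.

    Hypothetical helper: a toy version of classical PRF, not NPRF.
    """
    query = {t.lower() for t in query_terms}
    feedback_docs = ranked_docs[:top_k]  # assumed relevant ("pseudo" feedback)

    # Count in how many feedback documents each non-query term occurs.
    doc_freq = Counter()
    for doc in feedback_docs:
        doc_freq.update(set(doc.lower().split()) - query)

    # Original terms keep weight 1.0; new terms are weighted by the
    # fraction of feedback documents that contain them (an assumption).
    weights = {t: 1.0 for t in query}
    for term, df in doc_freq.most_common(num_new_terms):
        weights[term] = df / len(feedback_docs)
    return weights


docs = ["jaguar car speed", "jaguar car engine", "jaguar cat habitat"]
expanded = expand_query(["jaguar"], docs)
```

Here the query "jaguar" gains the term "car" because it appears in both of the top two documents, which is the kind of vocabulary-mismatch reduction the abstract refers to.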
