Camillo Jose Taylor

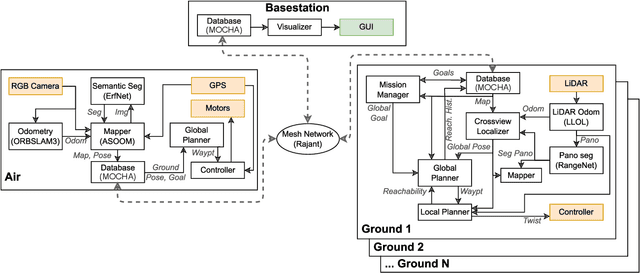

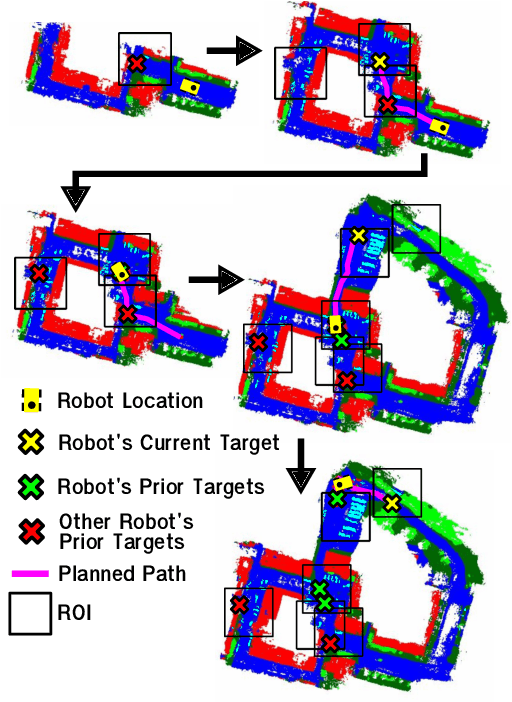

Air-Ground Collaboration with SPOMP: Semantic Panoramic Online Mapping and Planning

Jul 13, 2024

Abstract: Mapping and navigation have gone hand-in-hand since long before robots existed. Maps are a key form of communication, allowing someone who has never been somewhere to nonetheless navigate that area successfully. In the context of multi-robot systems, the maps and information that flow between robots are necessary for effective collaboration, whether those robots are operating concurrently, sequentially, or completely asynchronously. In this paper, we argue that maps must go beyond encoding purely geometric or visual information to enable increasingly complex autonomy, particularly between robots. We propose a framework for multi-robot autonomy, focusing in particular on air and ground robots operating in outdoor 2.5D environments. We show that semantic maps can enable the specification, planning, and execution of complex collaborative missions, including localization in GPS-denied settings. A distinguishing characteristic of this work is that we strongly emphasize field experiments and testing, and by doing so demonstrate that these ideas can work at scale in the real world. We also perform extensive simulation experiments to validate our ideas at even larger scales. We believe these experiments and the experimental results constitute a significant step forward toward advancing the state-of-the-art of large-scale, collaborative multi-robot systems operating with real communication, navigation, and perception constraints.

* Video: https://www.youtube.com/watch?v=ieNYH40buBo

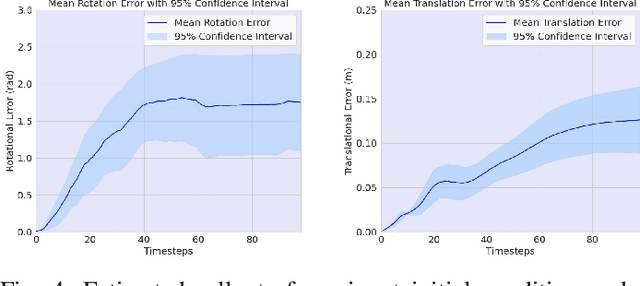

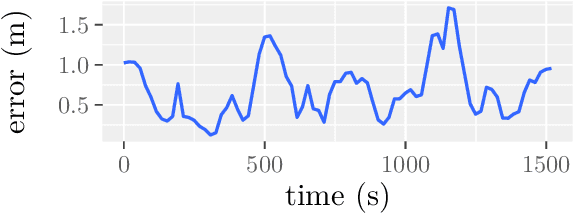

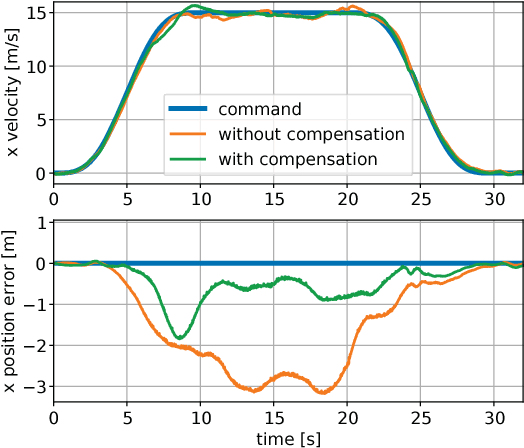

OCCAM: Online Continuous Controller Adaptation with Meta-Learned Models

Jun 25, 2024

Abstract: Control tuning and adaptation present a significant challenge to the usage of robots in diverse environments. It is often nontrivial to find a single set of control parameters by hand that work well across the broad array of environments and conditions that a robot might encounter. Automated adaptation approaches must utilize prior knowledge about the system while adapting to significant domain shifts to find new control parameters quickly. In this work, we present a general framework for online controller adaptation that deals with these challenges. We combine meta-learning with Bayesian recursive estimation to learn prior predictive models of system performance that quickly adapt to online data, even when there is significant domain shift. These predictive models can be used as cost functions within efficient sampling-based optimization routines to find new control parameters online that maximize system performance. Our framework is powerful and flexible enough to adapt controllers for four diverse systems: a simulated race car, a simulated quadrupedal robot, and a simulated and physical quadrotor.

Enhancing Scene Graph Generation with Hierarchical Relationships and Commonsense Knowledge

Nov 21, 2023

Abstract: This work presents an enhanced approach to generating scene graphs by incorporating a relationship hierarchy and commonsense knowledge. Specifically, we propose a Bayesian classification head that exploits an informative hierarchical structure. It jointly predicts the super-category or type of relationship between the two objects, along with the detailed relationship under each super-category. We design a commonsense validation pipeline that uses a large language model to critique the results from the scene graph prediction system and then use that feedback to enhance the model performance. The system requires no external large language model assistance at test time, making it more convenient for practical applications. Experiments on the Visual Genome and the OpenImage V6 datasets demonstrate that harnessing hierarchical relationships enhances the model performance by a large margin. The proposed Bayesian head can also be incorporated as a portable module in existing scene graph generation algorithms to improve their results. In addition, the commonsense validation enables the model to generate an extensive set of reasonable predictions beyond dataset annotations.

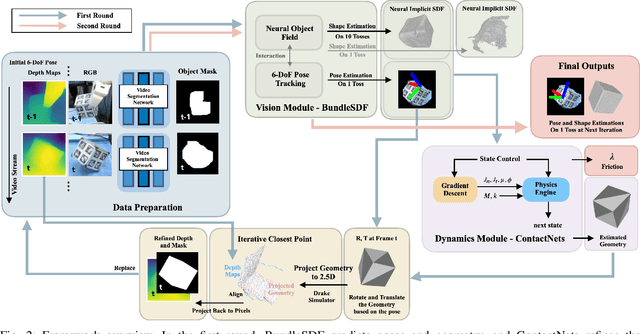

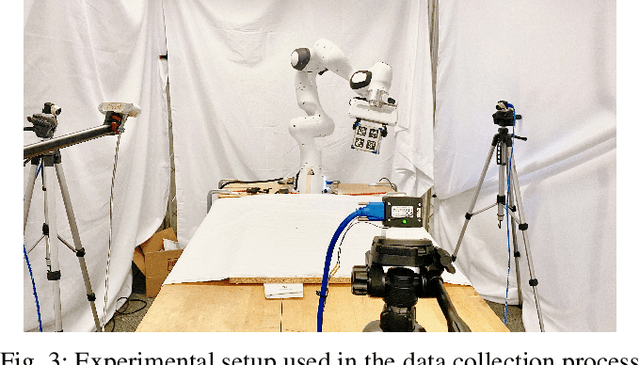

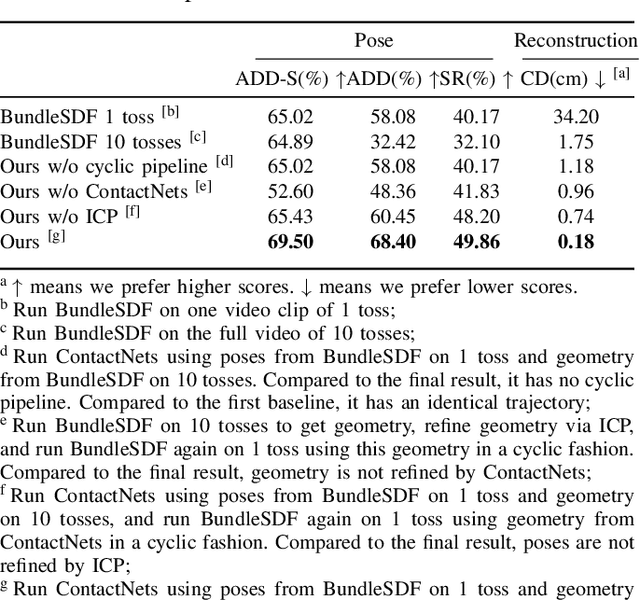

Instance-Agnostic Geometry and Contact Dynamics Learning

Sep 28, 2023

Abstract: This work presents an instance-agnostic learning framework that fuses vision with dynamics to simultaneously learn shape, pose trajectories, and physical properties via the use of geometry as a shared representation. Unlike many contact learning approaches that assume motion capture input and a known shape prior for the collision model, our proposed framework learns an object's geometric and dynamic properties from RGBD video, without requiring either category-level or instance-level shape priors. We integrate a vision system, BundleSDF, with a dynamics system, ContactNets, and propose a cyclic training pipeline to use the output from the dynamics module to refine the poses and the geometry from the vision module, using perspective reprojection. Experiments demonstrate our framework's ability to learn the geometry and dynamics of rigid and convex objects and improve upon the current tracking framework.

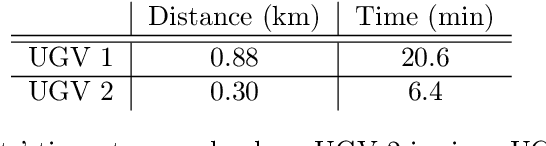

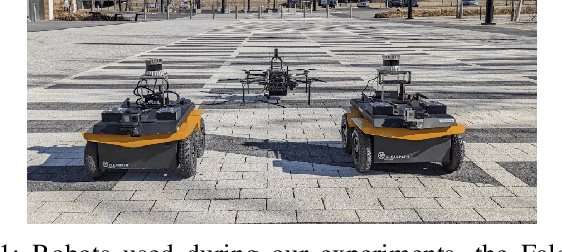

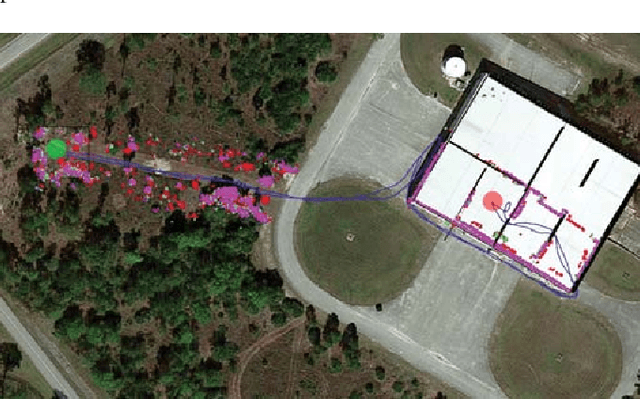

Stronger Together: Air-Ground Robotic Collaboration Using Semantics

Jun 28, 2022

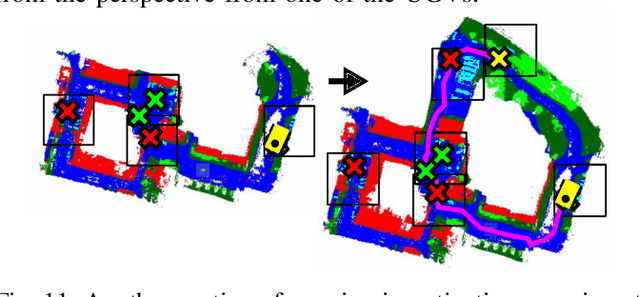

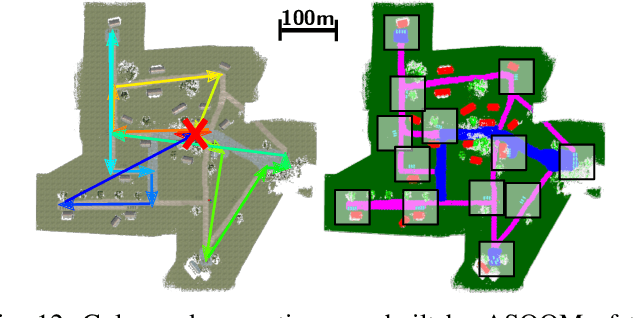

Abstract: In this work, we present an end-to-end heterogeneous multi-robot system framework where ground robots are able to localize, plan, and navigate in a semantic map created in real time by a high-altitude quadrotor. The ground robots choose and deconflict their targets independently, without any external intervention. Moreover, they perform cross-view localization by matching their local maps with the overhead map using semantics. The communication backbone is opportunistic and distributed, allowing the entire system to operate with no external infrastructure aside from GPS for the quadrotor. We extensively tested our system by performing different missions on top of our framework over multiple experiments in different environments. Our ground robots travelled over 6 km autonomously with minimal intervention in the real world and over 96 km in simulation without interventions.

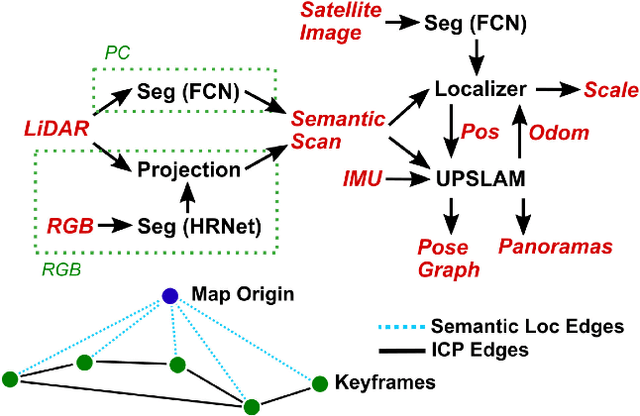

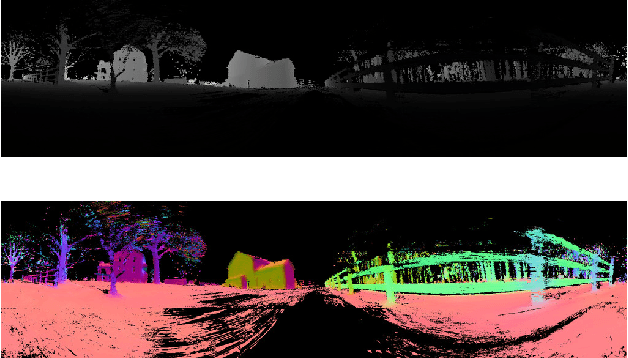

Any Way You Look At It: Semantic Crossview Localization and Mapping with LiDAR

Mar 16, 2022

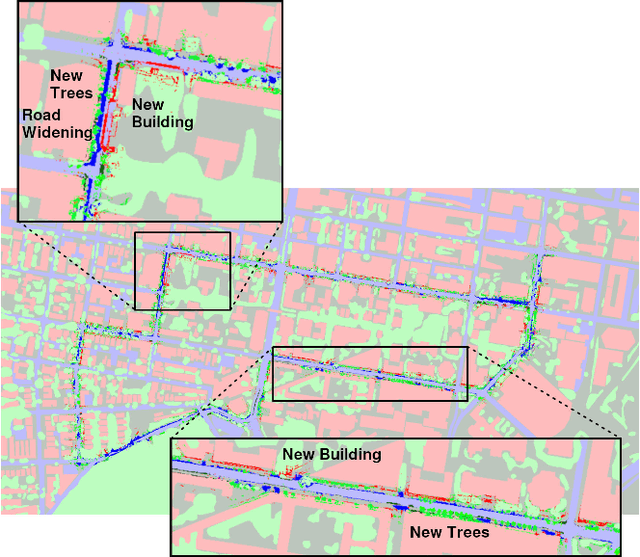

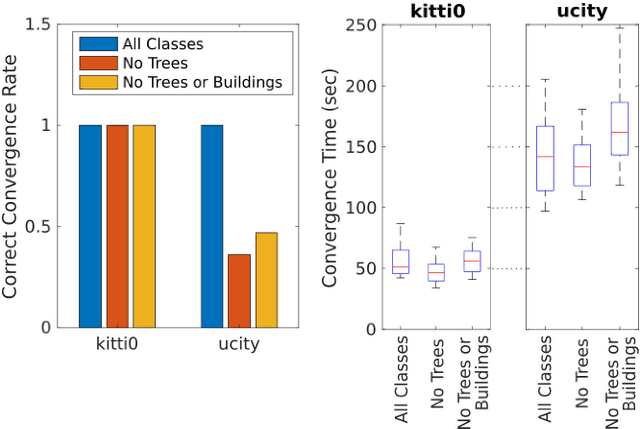

Abstract: Currently, GPS is by far the most popular global localization method. However, it is not always reliable or accurate in all environments. SLAM methods enable local state estimation but provide no means of registering the local map to a global one, which can be important for inter-robot collaboration or human interaction. In this work, we present a real-time method for utilizing semantics to globally localize a robot using only egocentric 3D semantically labelled LiDAR and IMU as well as top-down RGB images obtained from satellites or aerial robots. Additionally, as it runs, our method builds a globally registered, semantic map of the environment. We validate our method on KITTI as well as our own challenging datasets, and show better than 10 meter accuracy, a high degree of robustness, and the ability to estimate the scale of a top-down map on the fly if it is initially unknown.

* Published in the IEEE Robotics and Automation Letters and presented at the IEEE 2021 International Conference on Robotics and Automation. See https://www.youtube.com/watch?v=_qwAoYK9iGU for accompanying video

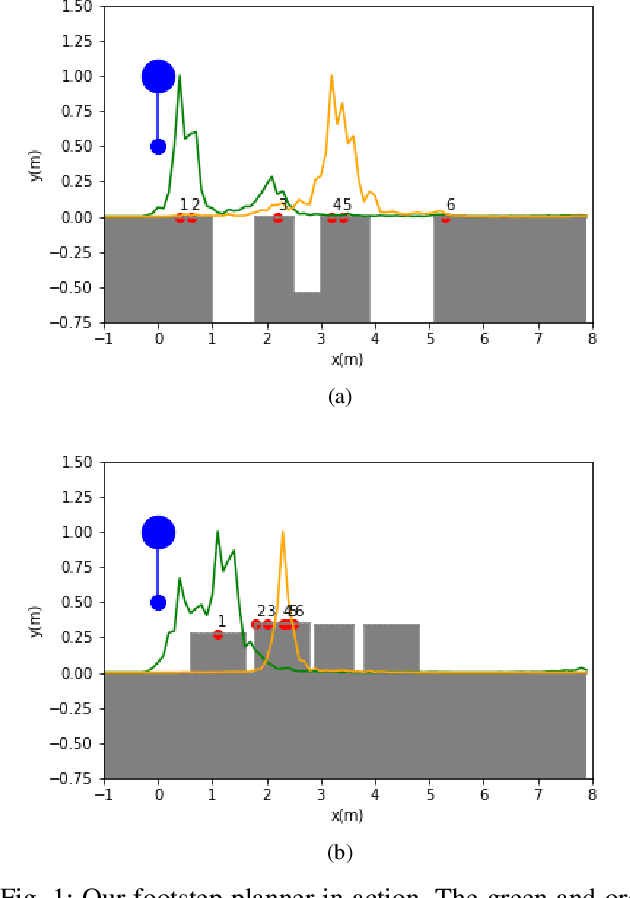

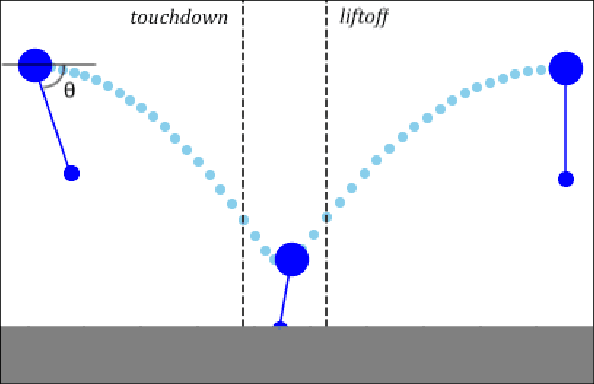

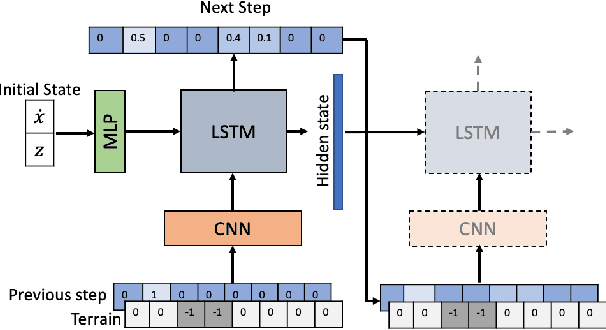

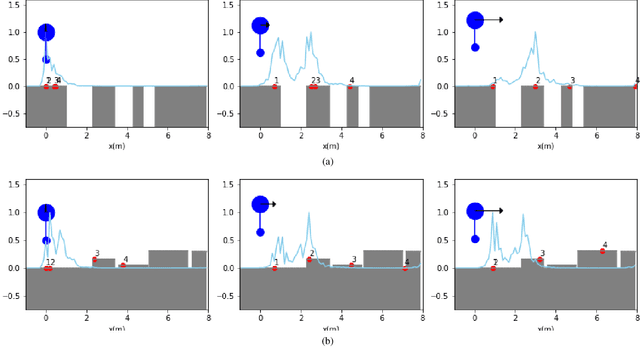

Fast Footstep Planning on Uneven Terrain Using Deep Sequential Models

Dec 14, 2021

Abstract: One of the fundamental challenges in realizing the potential of legged robots is generating plans to traverse challenging terrains. Control actions must be carefully selected so the robot will not crash or slip. The high dimensionality of the joint space makes directly planning low-level actions from onboard perception difficult, and control stacks that do not consider the low-level mechanisms of the robot in planning are ill-suited to handle fine-grained obstacles. One method for dealing with this is selecting footstep locations based on terrain characteristics. However, incorporating robot dynamics into footstep planning requires significant computation, much more than in the quasi-static case. In this work, we present an LSTM-based planning framework that learns probability distributions over likely footstep locations using both terrain lookahead and the robot's dynamics, and leverages the LSTM's sequential nature to find footsteps in linear time. Our framework can also be used as a module to speed up sampling-based planners. We validate our approach on a simulated one-legged hopper over a variety of uneven terrains.

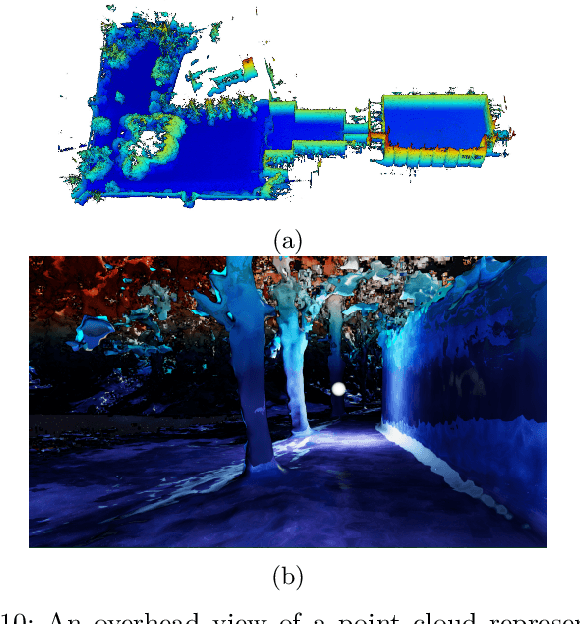

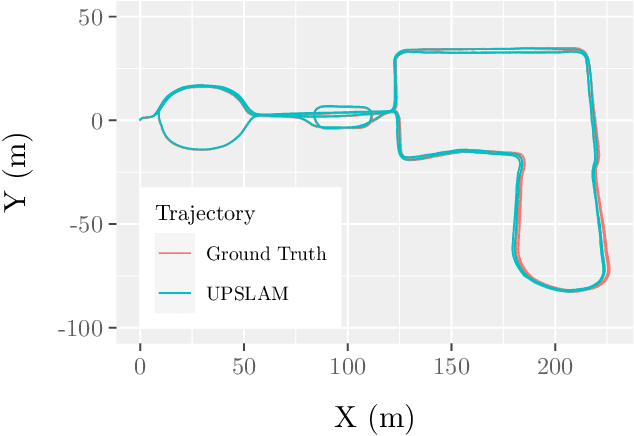

UPSLAM: Union of Panoramas SLAM

Jan 03, 2021

Abstract: We present an empirical investigation of a new mapping system based on a graph of panoramic depth images. Panoramic images efficiently capture range measurements taken by a spinning lidar sensor, recording fine detail on the order of a few centimeters within maps of expansive scope on the order of tens of millions of cubic meters. The flexibility of the system is demonstrated by running the same mapping software against data collected by hand-carrying a sensor around a laboratory space at walking pace, moving it outdoors through a campus environment at running pace, driving the sensor on a small wheeled vehicle on- and off-road, flying the sensor through a forest, carrying it on the back of a legged robot navigating an underground coal mine, and mounting it on the roof of a car driven on public roads. The full 3D maps are built online with a median update time of less than ten milliseconds on an embedded NVIDIA Jetson AGX Xavier system.

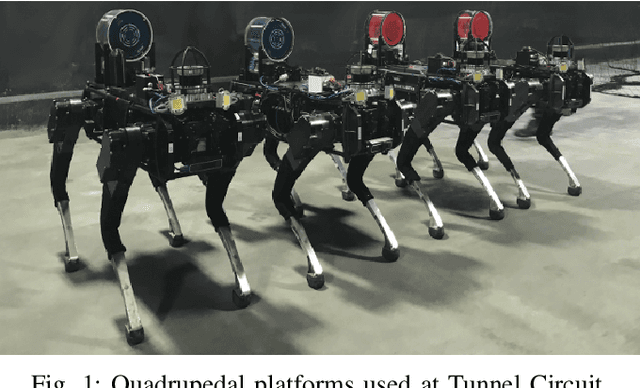

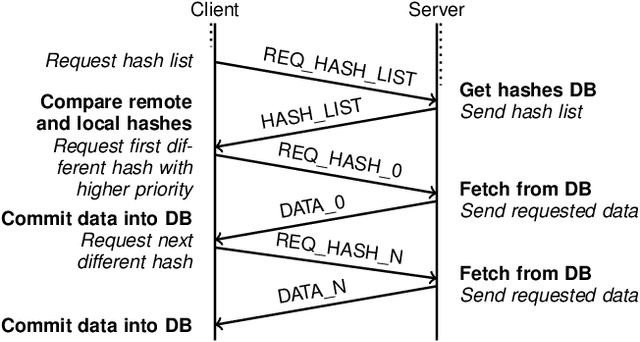

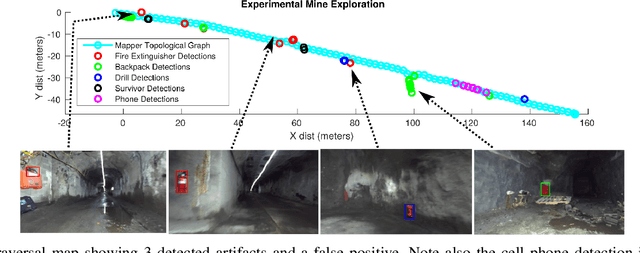

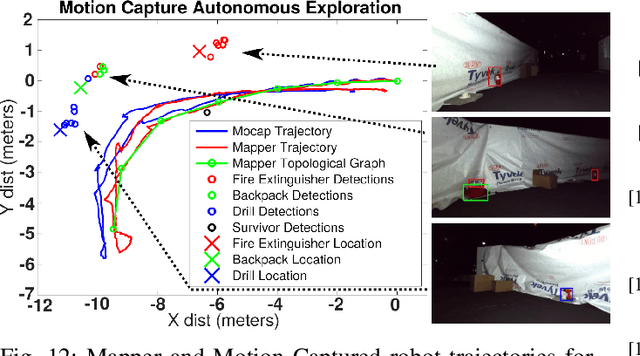

Mine Tunnel Exploration using Multiple Quadrupedal Robots

Sep 20, 2019

Abstract: Robotic exploration of underground environments is a particularly challenging problem due to communication, endurance, and traversability constraints which necessitate high degrees of autonomy and agility. These challenges are further enhanced by the need to minimize human intervention for practical applications. While legged robots have the ability to traverse extremely challenging terrain, they also engender further inherent challenges for planning, estimation, and control. In this work, we describe a fully autonomous system for multi-robot mine exploration and mapping using legged quadrupeds, as well as a distributed database mesh networking system for reporting data. In addition, we show results from the DARPA Subterranean Challenge (SubT) Tunnel Circuit demonstrating localization of artifacts after traversals of hundreds of meters. To our knowledge, these experiments represent the first fully autonomous exploration of an unknown GNSS-denied environment undertaken by legged robots.

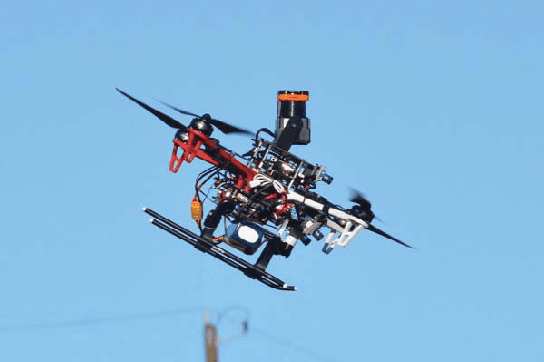

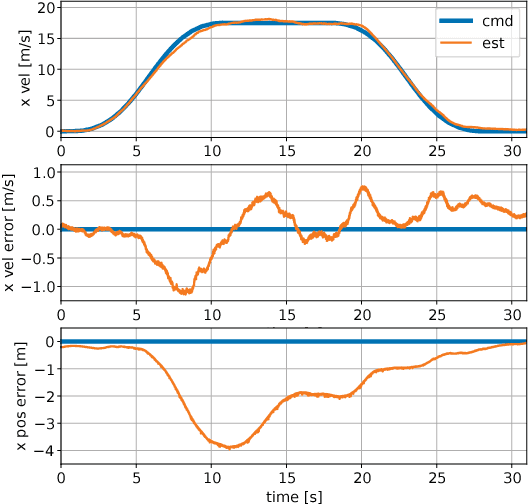

Experiments in Fast, Autonomous, GPS-Denied Quadrotor Flight

Jun 19, 2018

Abstract: High speed navigation through unknown environments is a challenging problem in robotics. It requires fast computation and tight integration of all the subsystems on the robot such that the latency in the perception-action loop is as small as possible. Aerial robots add a limitation of payload capacity, which restricts the amount of computation that can be carried onboard. This requires efficient algorithms for each component in the navigation system. In this paper, we describe our quadrotor system which is able to smoothly navigate through mixed indoor and outdoor environments and is able to fly at speeds of more than 18 m/s. We provide an overview of our system and details about the specific component technologies that enable the high speed navigation capability of our platform. We demonstrate the robustness of our system through high speed autonomous flights and navigation through a variety of obstacle rich environments.
