UPSLAM: Union of Panoramas SLAM


Jan 03, 2021

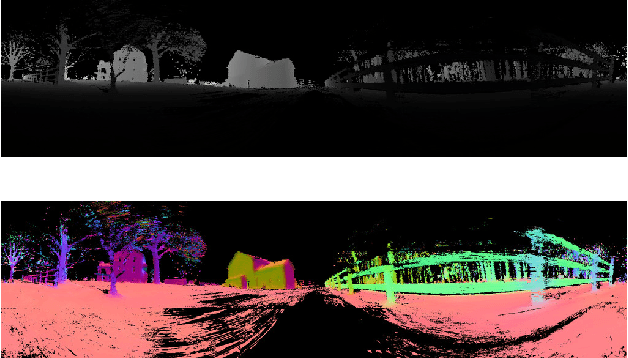

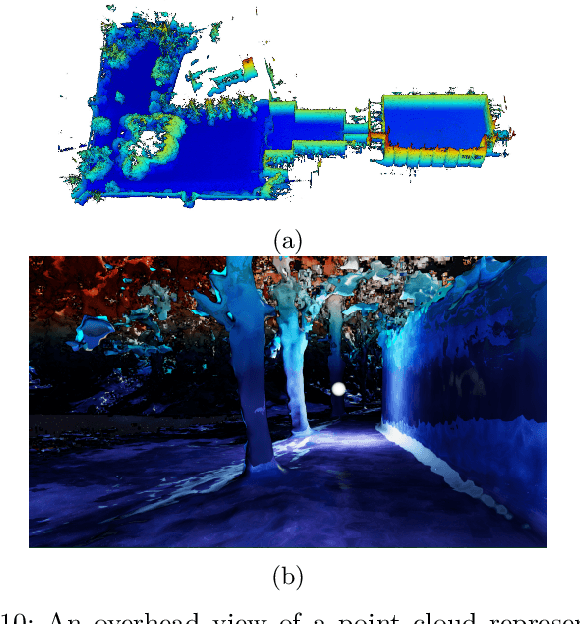

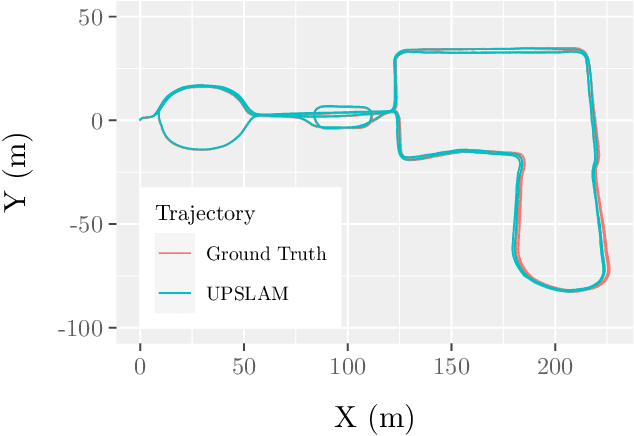

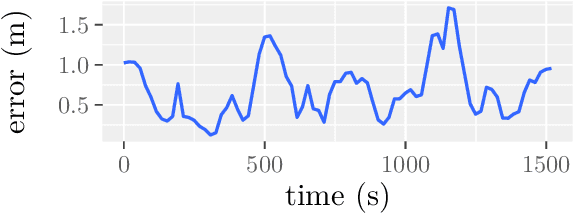

We present an empirical investigation of a new mapping system based on a graph of panoramic depth images. Panoramic images efficiently capture range measurements taken by a spinning lidar sensor, recording fine detail on the order of a few centimeters within maps of expansive scope on the order of tens of millions of cubic meters. The flexibility of the system is demonstrated by running the same mapping software against data collected by hand-carrying a sensor around a laboratory space at walking pace, moving it outdoors through a campus environment at running pace, driving the sensor on a small wheeled vehicle on- and off-road, flying the sensor through a forest, carrying it on the back of a legged robot navigating an underground coal mine, and mounting it on the roof of a car driven on public roads. The full 3D maps are built online with a median update time of less than ten milliseconds on an embedded NVIDIA Jetson AGX Xavier system.
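
As an illustration of how a panoramic depth image can hold the range measurements of a spinning lidar, the following is a minimal sketch that bins 3D points by azimuth and elevation into a 2D range image. It is not the authors' implementation: the function name `project_to_panorama`, the image size, the vertical field of view, and the rule of keeping the nearest return per pixel are all assumptions chosen for the example.

```python
import math

def project_to_panorama(points, width=1024, height=64,
                        fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z) points in the sensor frame into a panoramic range image.

    Returns a height x width list of lists holding the range in meters,
    or None where no point landed. All defaults are illustrative assumptions.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image = [[None] * width for _ in range(height)]

    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # a point at the sensor origin has no direction
        azimuth = math.atan2(y, x)        # horizontal angle in [-pi, pi]
        elevation = math.asin(z / r)      # vertical angle in [-pi/2, pi/2]
        if not (fov_down <= elevation <= fov_up):
            continue  # outside the assumed vertical field of view

        # Columns wrap around the full 360 degrees; rows run top to bottom.
        col = int((azimuth + math.pi) / (2.0 * math.pi) * width) % width
        row = min(height - 1, int((fov_up - elevation) / fov * height))

        # Assumed policy: keep the nearest return when several points share a pixel.
        current = image[row][col]
        if current is None or r < current:
            image[row][col] = r
    return image

if __name__ == "__main__":
    pano = project_to_panorama([(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)])
    filled = sum(v is not None for row in pano for v in row)
    print("filled pixels:", filled)
```

Each pixel stores one range value, so a full sensor revolution occupies a fixed-size array regardless of how far the measured surfaces are, which is one reason an image-based representation can be compact.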
