Sikang Liu

Multi-modal Integrated Prediction and Decision-making with Adaptive Interaction Modality Explorations

Aug 25, 2024

Abstract:Navigating dense and dynamic environments poses a significant challenge for autonomous driving systems, owing to the intricate nature of multimodal interaction, wherein the actions of various traffic participants and the autonomous vehicle are complex and implicitly coupled. In this paper, we propose a novel framework, Multi-modal Integrated predictioN and Decision-making (MIND), which addresses the challenges by efficiently generating joint predictions and decisions covering multiple distinctive interaction modalities. Specifically, MIND leverages learning-based scenario predictions to obtain integrated predictions and decisions with social-consistent interaction modality and utilizes a modality-aware dynamic branching mechanism to generate scenario trees that efficiently capture the evolutions of distinctive interaction modalities with low variation of interaction uncertainty along the planning horizon. The scenario trees are seamlessly utilized by the contingency planning under interaction uncertainty to obtain clear and considerate maneuvers accounting for multi-modal evolutions. Comprehensive experimental results in the closed-loop simulation based on the real-world driving dataset showcase superior performance to other strong baselines under various driving contexts.

SIMPL: A Simple and Efficient Multi-agent Motion Prediction Baseline for Autonomous Driving

Feb 04, 2024

Abstract:This paper presents a Simple and effIcient Motion Prediction baseLine (SIMPL) for autonomous vehicles. Unlike conventional agent-centric methods with high accuracy but repetitive computations and scene-centric methods with compromised accuracy and generalizability, SIMPL delivers real-time, accurate motion predictions for all relevant traffic participants. To achieve improvements in both accuracy and inference speed, we propose a compact and efficient global feature fusion module that performs directed message passing in a symmetric manner, enabling the network to forecast future motion for all road users in a single feed-forward pass and mitigating accuracy loss caused by viewpoint shifting. Additionally, we investigate the continuous trajectory parameterization using Bernstein basis polynomials in trajectory decoding, allowing evaluations of states and their higher-order derivatives at any desired time point, which is valuable for downstream planning tasks. As a strong baseline, SIMPL exhibits highly competitive performance on Argoverse 1 & 2 motion forecasting benchmarks compared with other state-of-the-art methods. Furthermore, its lightweight design and low inference latency make SIMPL highly extensible and promising for real-world onboard deployment. We open-source the code at https://github.com/HKUST-Aerial-Robotics/SIMPL.
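
The Bernstein-basis parameterization mentioned in the abstract above can be illustrated with a minimal sketch. This is not the SIMPL code: the function names `bernstein`, `bezier_eval`, and `derivative_control_points`, the 2D control points, and the horizon `T` are all assumptions made for illustration. It shows only the general property that a trajectory written in the Bernstein basis can be evaluated at any time, and that its derivative is again a curve of the same kind with one fewer control point.

```python
from math import comb


def bernstein(n, i, s):
    """Bernstein basis polynomial B_{i,n}(s) for s in [0, 1]."""
    return comb(n, i) * s**i * (1.0 - s) ** (n - i)


def bezier_eval(ctrl, t, T):
    """Evaluate a curve with 2D control points `ctrl` at time t in [0, T]."""
    n = len(ctrl) - 1
    s = t / T  # map time to the unit interval
    x = sum(bernstein(n, i, s) * p[0] for i, p in enumerate(ctrl))
    y = sum(bernstein(n, i, s) * p[1] for i, p in enumerate(ctrl))
    return (x, y)


def derivative_control_points(ctrl, T):
    """Control points of the time derivative (a curve of degree n - 1)."""
    n = len(ctrl) - 1
    return [
        (n * (q[0] - p[0]) / T, n * (q[1] - p[1]) / T)
        for p, q in zip(ctrl, ctrl[1:])
    ]


# Hypothetical example: four control points over a 3-second horizon.
ctrl = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
T = 3.0
position = bezier_eval(ctrl, 1.5, T)
velocity = bezier_eval(derivative_control_points(ctrl, T), 1.5, T)
```

Applying `derivative_control_points` repeatedly gives acceleration and higher-order derivatives in the same way, which is the kind of quantity a downstream planner typically queries.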

MARC: Multipolicy and Risk-aware Contingency Planning for Autonomous Driving

Sep 12, 2023

Abstract:Generating safe and non-conservative behaviors in dense, dynamic environments remains challenging for automated vehicles due to the stochastic nature of traffic participants' behaviors and their implicit interaction with the ego vehicle. This paper presents a novel planning framework, Multipolicy And Risk-aware Contingency planning (MARC), that systematically addresses these challenges by enhancing the multipolicy-based pipelines from both behavior and motion planning aspects. Specifically, MARC realizes a critical scenario set that reflects multiple possible futures conditioned on each semantic-level ego policy. Then, the generated policy-conditioned scenarios are further formulated into a tree-structured representation with a dynamic branchpoint based on the scene-level divergence. Moreover, to generate diverse driving maneuvers, we introduce risk-aware contingency planning, a bi-level optimization algorithm that simultaneously considers multiple future scenarios and user-defined risk tolerance levels. Owing to the more unified combination of behavior and motion planning layers, our framework achieves efficient decision-making and human-like driving maneuvers. Comprehensive experimental results demonstrate superior performance to other strong baselines in various environments.

Towards Search-based Motion Planning for Micro Aerial Vehicles

Oct 07, 2018

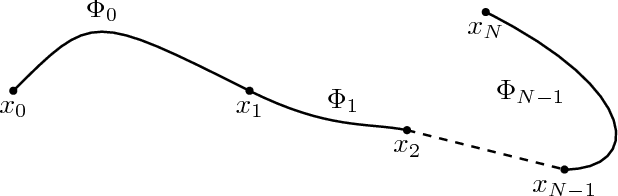

Abstract:Search-based motion planning has been used for mobile robots in many applications. However, it has not been fully developed and applied for planning full-state trajectories of Micro Aerial Vehicles (MAVs) due to their complicated dynamics and the requirement of real-time computation. In this paper, we explore a search-based motion planning framework that plans dynamically feasible, collision-free, resolution-optimal, and resolution-complete trajectories. This paper extends the search-based planning approach to address three important scenarios for MAV navigation: (i) planning safe trajectories in the presence of motion uncertainty; (ii) planning with constraints on field of view; and (iii) planning in dynamic environments. We show that these problems can be solved effectively and efficiently using the proposed search-based planning with motion primitives.

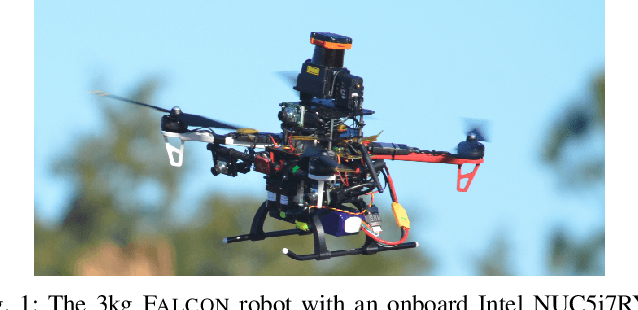

The Open Vision Computer: An Integrated Sensing and Compute System for Mobile Robots

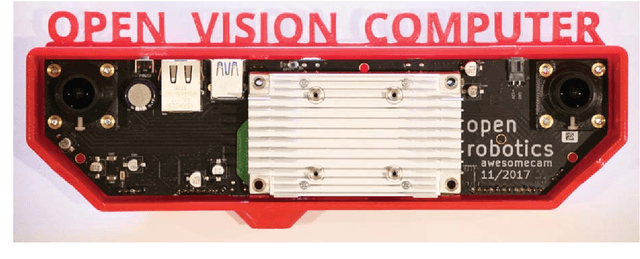

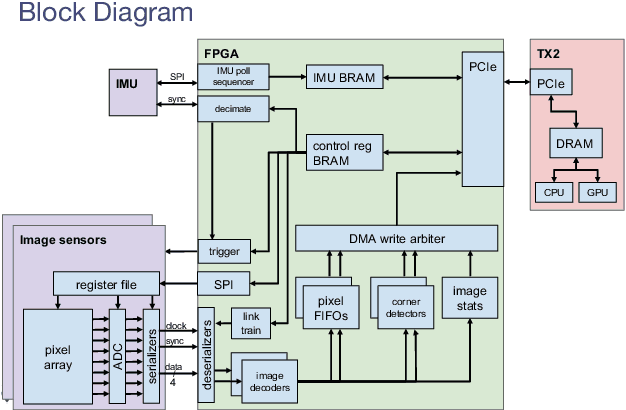

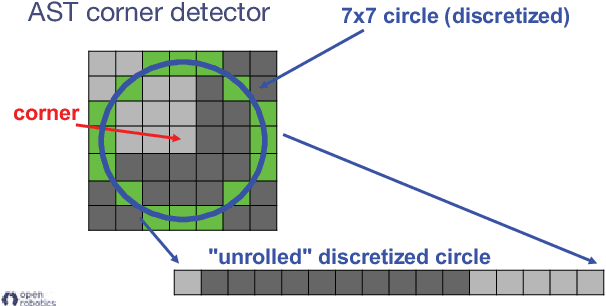

Sep 20, 2018

Abstract:In this paper we describe the Open Vision Computer (OVC) which was designed to support high speed, vision guided autonomous drone flight. In particular our aim was to develop a system that would be suitable for relatively small-scale flying platforms where size, weight, power consumption and computational performance were all important considerations. This manuscript describes the primary features of our OVC system and explains how they are used to support fully autonomous indoor and outdoor exploration and navigation operations on our Falcon 250 quadrotor platform.

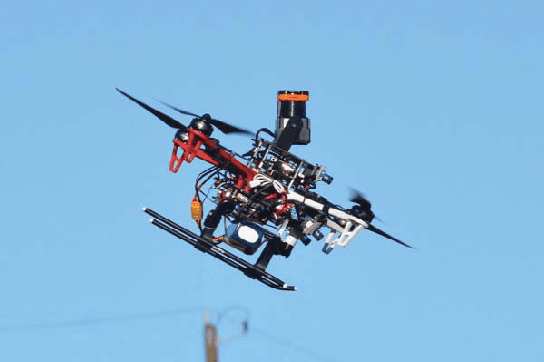

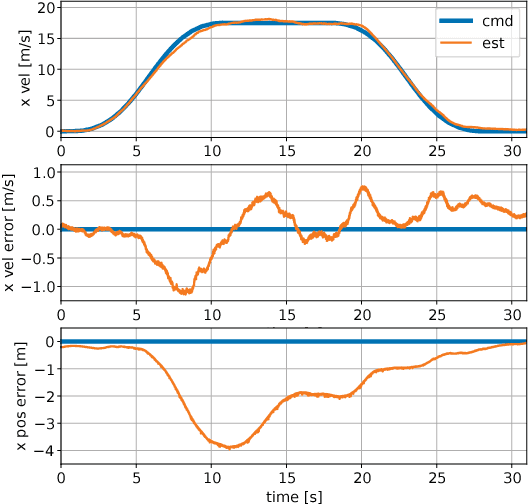

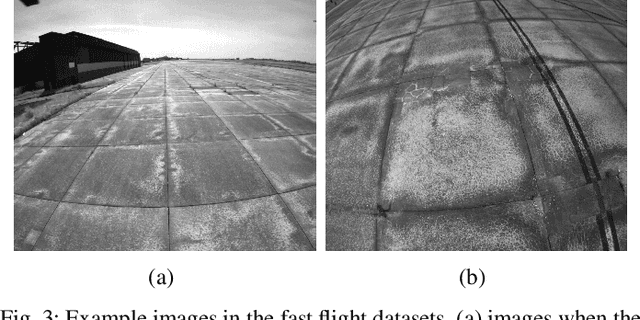

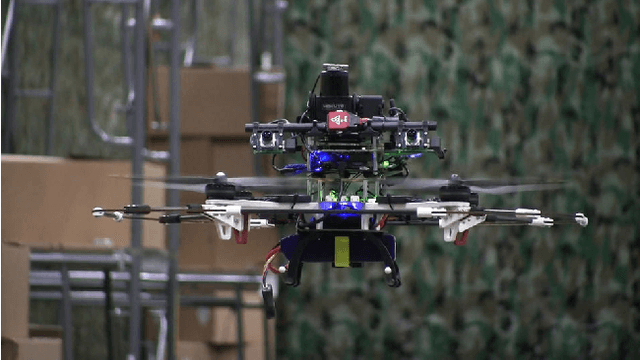

Experiments in Fast, Autonomous, GPS-Denied Quadrotor Flight

Jun 19, 2018

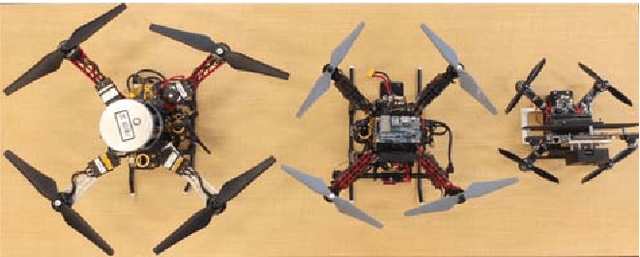

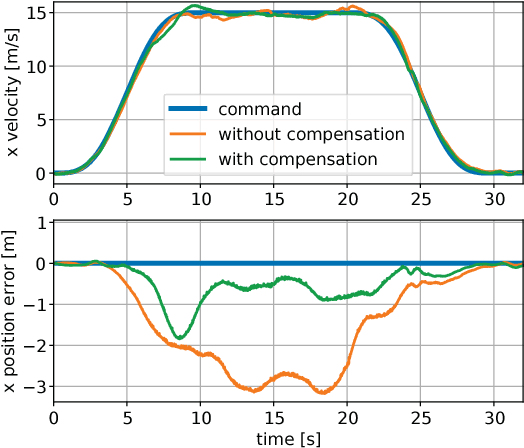

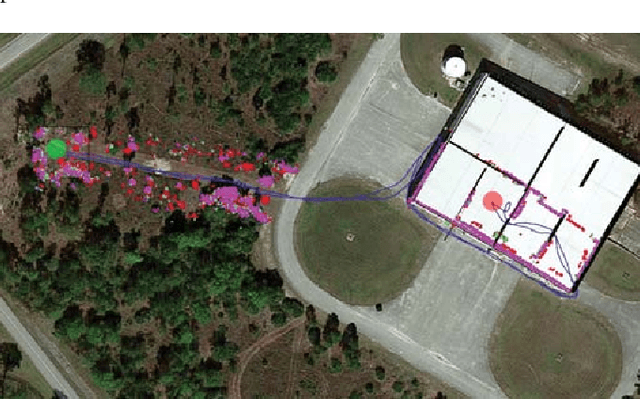

Abstract:High speed navigation through unknown environments is a challenging problem in robotics. It requires fast computation and tight integration of all the subsystems on the robot such that the latency in the perception-action loop is as small as possible. Aerial robots add a limitation of payload capacity, which restricts the amount of computation that can be carried onboard. This requires efficient algorithms for each component in the navigation system. In this paper, we describe our quadrotor system which is able to smoothly navigate through mixed indoor and outdoor environments and is able to fly at speeds of more than 18 m/s. We provide an overview of our system and details about the specific component technologies that enable the high speed navigation capability of our platform. We demonstrate the robustness of our system through high speed autonomous flights and navigation through a variety of obstacle rich environments.

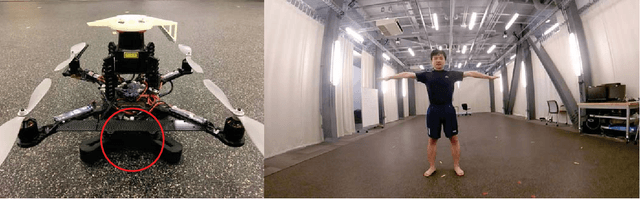

Human Motion Capture Using a Drone

Apr 17, 2018

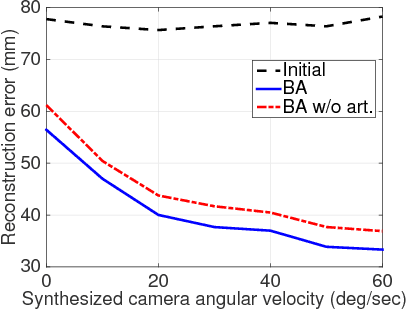

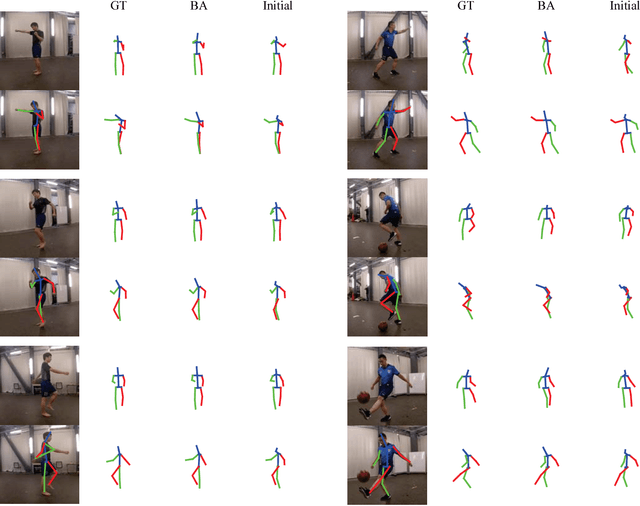

Abstract:Current motion capture (MoCap) systems generally require markers and multiple calibrated cameras, which can be used only in constrained environments. In this work we introduce a drone-based system for 3D human MoCap. The system only needs an autonomously flying drone with an on-board RGB camera and is usable in various indoor and outdoor environments. A reconstruction algorithm is developed to recover full-body motion from the video recorded by the drone. We argue that, besides the capability of tracking a moving subject, a flying drone also provides fast varying viewpoints, which is beneficial for motion reconstruction. We evaluate the accuracy of the proposed system using our new DroCap dataset and also demonstrate its applicability for MoCap in the wild using a consumer drone.

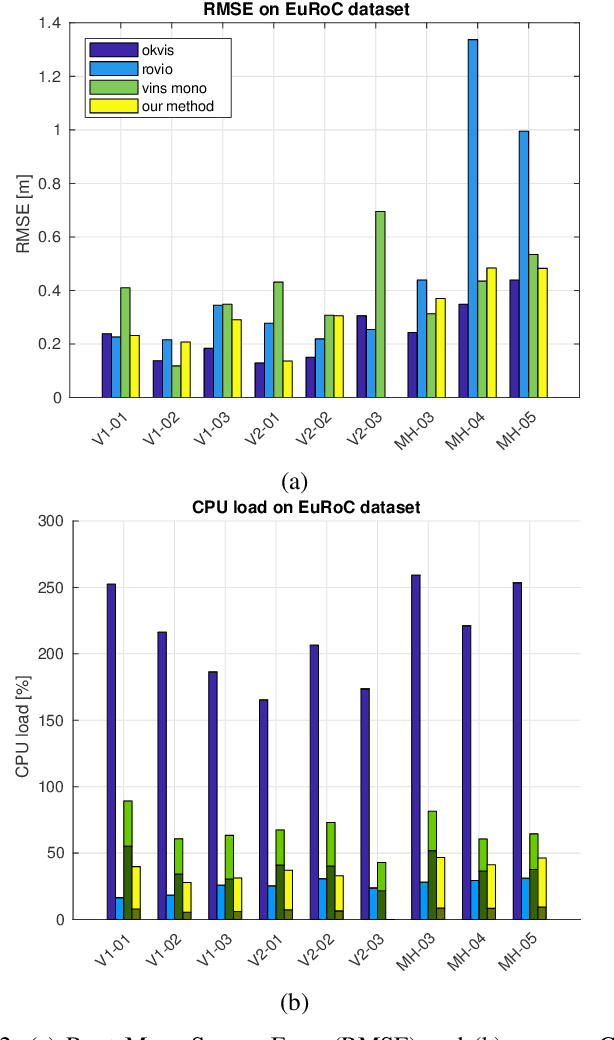

Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight

Jan 10, 2018

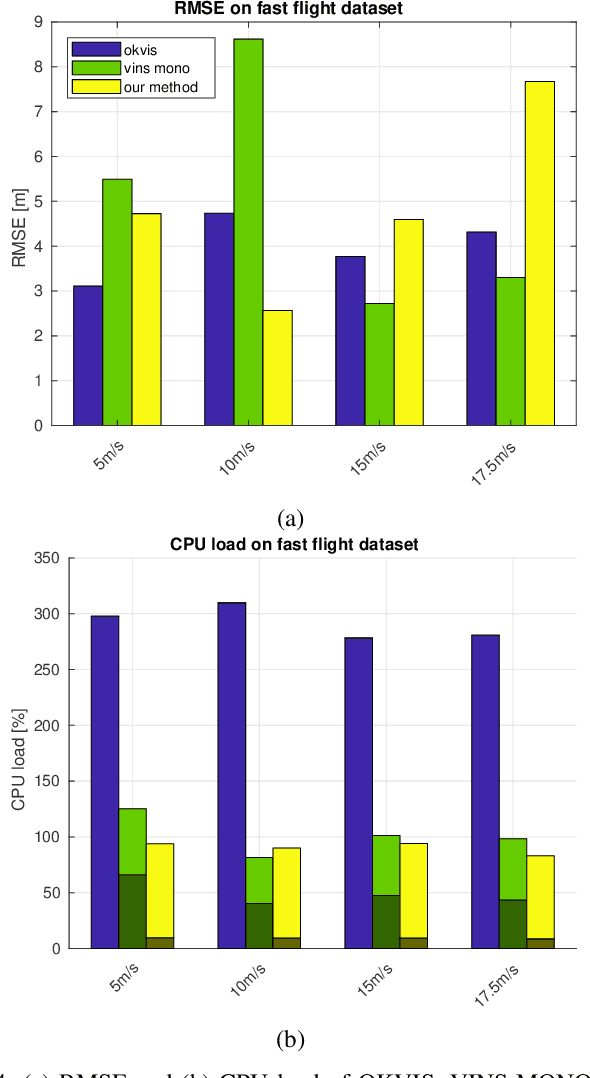

Abstract:In recent years, vision-aided inertial odometry for state estimation has matured significantly. However, we still encounter challenges in terms of improving the computational efficiency and robustness of the underlying algorithms for applications in autonomous flight with micro aerial vehicles in which it is difficult to use high quality sensors and powerful processors because of constraints on size and weight. In this paper, we present a filter-based stereo visual inertial odometry that uses the Multi-State Constraint Kalman Filter (MSCKF) [1]. Previous work on stereo visual inertial odometry has resulted in solutions that are computationally expensive. We demonstrate that our Stereo Multi-State Constraint Kalman Filter (S-MSCKF) is comparable to state-of-the-art monocular solutions in terms of computational cost, while providing significantly greater robustness. We evaluate our S-MSCKF algorithm and compare it with state-of-the-art methods including OKVIS, ROVIO, and VINS-MONO on both the EuRoC dataset and our own experimental datasets demonstrating fast autonomous flight with maximum speed of 17.5 m/s in indoor and outdoor environments. Our implementation of the S-MSCKF is available at https://github.com/KumarRobotics/msckf_vio.

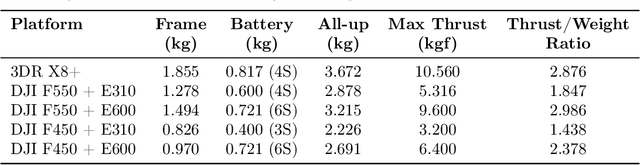

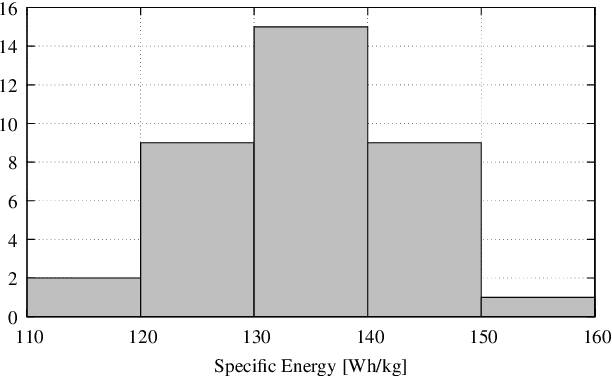

Fast, Autonomous Flight in GPS-Denied and Cluttered Environments

Dec 06, 2017

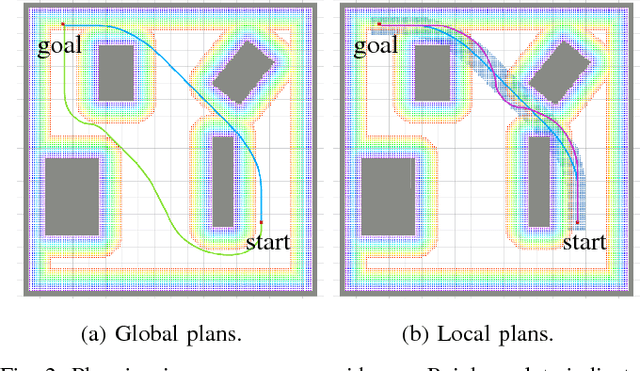

Abstract:One of the most challenging tasks for a flying robot is to autonomously navigate between target locations quickly and reliably while avoiding obstacles in its path, and with little to no a-priori knowledge of the operating environment. This challenge is addressed in the present paper. We describe the system design and software architecture of our proposed solution, and showcase how all the distinct components can be integrated to enable smooth robot operation. We provide critical insight on hardware and software component selection and development, and present results from extensive experimental testing in real-world warehouse environments. Experimental testing reveals that our proposed solution can deliver fast and robust aerial robot autonomous navigation in cluttered, GPS-denied environments.

Search-based Motion Planning for Aggressive Flight in SE(3)

Oct 07, 2017

Abstract:Quadrotors with large thrust-to-weight ratios are able to track aggressive trajectories with sharp turns and high accelerations. In this work, we develop a search-based trajectory planning approach that exploits the quadrotor maneuverability to generate sequences of motion primitives in cluttered environments. We model the quadrotor body as an ellipsoid and compute its flight attitude along trajectories in order to check for collisions against obstacles. The ellipsoid model allows the quadrotor to pass through gaps that are smaller than its diameter with non-zero pitch or roll angles. Without any prior information about the location of gaps and associated attitude constraints, our algorithm is able to find a safe and optimal trajectory that guides the robot to its goal as fast as possible. To accelerate planning, we first perform a lower dimensional search and use it as a heuristic to guide the generation of a final dynamically feasible trajectory. We analyze critical discretization parameters of motion primitive planning and demonstrate the feasibility of the generated trajectories in various simulations and real-world experiments.
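
The ellipsoid body model described in the abstract above can be sketched as a point-in-ellipsoid test. This is an illustrative sketch, not the authors' implementation: the helper names `rot_x`, `point_in_ellipsoid`, and `in_collision`, the semi-axes, and the obstacle coordinates are all assumed values. An obstacle point is expressed in the body frame and counts as a collision when it lies inside the ellipsoid.

```python
import math


def rot_x(roll):
    """Rotation matrix for a roll angle (radians) about the body x-axis."""
    c, s = math.cos(roll), math.sin(roll)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def point_in_ellipsoid(point, center, R, semi_axes):
    """True if `point` lies inside the ellipsoid at `center` with attitude R."""
    d = [point[k] - center[k] for k in range(3)]
    # Body-frame coordinates: q = R^T d
    q = [sum(R[r][k] * d[r] for r in range(3)) for k in range(3)]
    return sum((q[k] / semi_axes[k]) ** 2 for k in range(3)) <= 1.0


def in_collision(obstacles, center, R, semi_axes):
    """True if any obstacle point falls inside the body ellipsoid."""
    return any(point_in_ellipsoid(o, center, R, semi_axes) for o in obstacles)


# Assumed geometry: body radius 0.3 m, half-height 0.1 m, and a 0.4 m wide gap
# whose edges are at y = +0.2 and y = -0.2 (narrower than the 0.6 m diameter).
semi_axes = (0.3, 0.3, 0.1)
gap_edges = [(0.0, 0.2, 0.0), (0.0, -0.2, 0.0)]
center = (0.0, 0.0, 0.0)

level_hits = in_collision(gap_edges, center, rot_x(0.0), semi_axes)
rolled_hits = in_collision(gap_edges, center, rot_x(math.pi / 2), semi_axes)
```

With these assumed numbers the level attitude collides with the gap edges while the 90-degree roll does not, which mirrors the abstract's point that a non-zero roll or pitch can let the vehicle pass through a gap narrower than its diameter.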
