Hersh Sanghvi

OCCAM: Online Continuous Controller Adaptation with Meta-Learned Models

Jun 25, 2024

Abstract: Control tuning and adaptation present a significant challenge to deploying robots in diverse environments. It is often nontrivial to find, by hand, a single set of control parameters that works well across the broad array of environments and conditions a robot might encounter. Automated adaptation approaches must exploit prior knowledge about the system while adapting to significant domain shifts, so that new control parameters can be found quickly. In this work, we present a general framework for online controller adaptation that addresses these challenges. We combine meta-learning with Bayesian recursive estimation to learn prior predictive models of system performance that quickly adapt to online data, even under significant domain shift. These predictive models can serve as cost functions within efficient sampling-based optimization routines that find, online, new control parameters maximizing system performance. Our framework is powerful and flexible enough to adapt controllers for four diverse systems: a simulated race car, a simulated quadrupedal robot, a simulated quadrotor, and a physical quadrotor.
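The adapt-then-optimize loop described above can be illustrated with a minimal Python sketch. This is not the authors' implementation. It assumes a fixed quadratic feature map in place of the meta-learned model and a unit Gaussian prior in place of the meta-learned prior. It also swaps in a Kalman-style recursive Bayesian linear regression as the recursive estimator and the cross-entropy method as the sampling-based optimizer. The names `features`, `RecursiveBayesCost`, `cem_minimize`, and `true_cost` are hypothetical.

```python
import numpy as np


def features(theta):
    # Hypothetical fixed quadratic basis, standing in for meta-learned features.
    t0, t1 = theta
    return np.array([1.0, t0, t1, t0 * t0, t1 * t1, t0 * t1])


class RecursiveBayesCost:
    """Bayesian linear regression on features(theta), updated one observation at a time."""

    def __init__(self, dim, prior_mean=None, prior_cov=None, noise_var=1e-2):
        # Placeholder prior; a meta-learned prior would be fit offline on other domains.
        self.mean = np.zeros(dim) if prior_mean is None else np.asarray(prior_mean, float)
        self.cov = np.eye(dim) if prior_cov is None else np.asarray(prior_cov, float)
        self.noise_var = noise_var

    def update(self, theta, observed_cost):
        phi = features(theta)
        s = phi @ self.cov @ phi + self.noise_var   # predictive variance of the observation
        gain = self.cov @ phi / s                    # Kalman-style gain vector
        self.mean = self.mean + gain * (observed_cost - phi @ self.mean)
        self.cov = self.cov - np.outer(gain, phi @ self.cov)

    def predict(self, theta):
        # Posterior-mean cost at control parameters theta.
        return features(theta) @ self.mean


def cem_minimize(cost_fn, init_mean, init_std, iters=20, samples=200, elites=20, seed=0):
    # Cross-entropy method: sample candidates, keep the lowest-cost elites, refit a Gaussian.
    rng = np.random.default_rng(seed)
    mean = np.asarray(init_mean, float)
    std = np.asarray(init_std, float)
    for _ in range(iters):
        cand = mean + std * rng.standard_normal((samples, mean.size))
        scores = np.array([cost_fn(c) for c in cand])
        elite = cand[np.argsort(scores)[:elites]]
        mean, std = elite.mean(axis=0), elite.std(axis=0) + 1e-6
    return mean


def true_cost(theta):
    # Hypothetical system performance metric (lower is better), unknown to the model.
    return (theta[0] - 1.0) ** 2 + (theta[1] + 0.5) ** 2


rng = np.random.default_rng(1)
model = RecursiveBayesCost(dim=6)
for _ in range(30):  # stand-in for online rollouts on the new system
    theta = rng.uniform(-2.0, 2.0, size=2)
    model.update(theta, true_cost(theta) + 0.01 * rng.standard_normal())

best = cem_minimize(model.predict, init_mean=[0.0, 0.0], init_std=[1.0, 1.0])
print(np.round(best, 2))
```

Each update touches only one observation, so in this sketch refining the model after a rollout costs the same no matter how much data has been collected. The optimizer queries only the learned model, never the (hypothetical) system itself.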

Recent Approaches for Perceptive Legged Locomotion

Sep 21, 2022

Abstract: As both legged robots and embedded compute have become more capable, researchers have started to focus on field deployment of these robots. Robust autonomy in unstructured environments requires perception of the world around the robot in order to avoid hazards. However, incorporating perception online while maintaining agile motion is more challenging for legged robots than other mobile robots due to the complex planners and controllers required to handle the dynamics of locomotion. This report will compare three recent approaches for perceptive locomotion and discuss the different ways in which vision can be used to enable legged autonomy.

Fast Footstep Planning on Uneven Terrain Using Deep Sequential Models

Dec 14, 2021

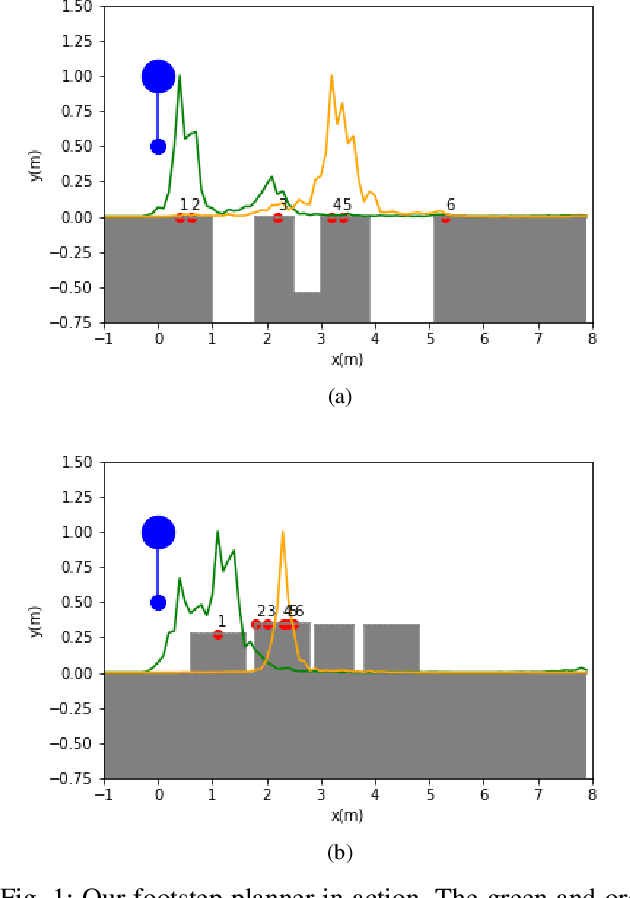

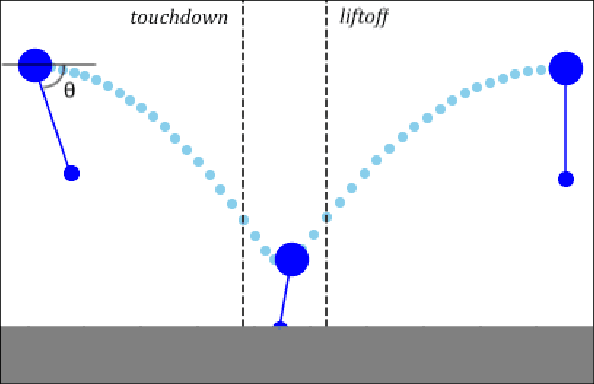

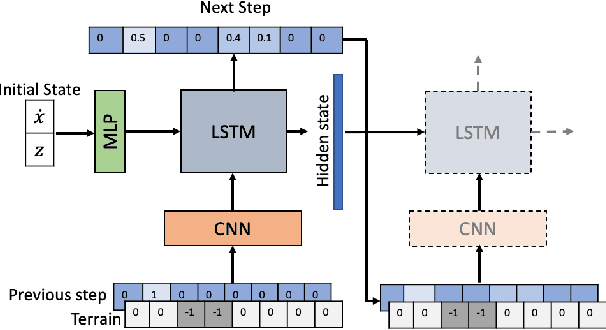

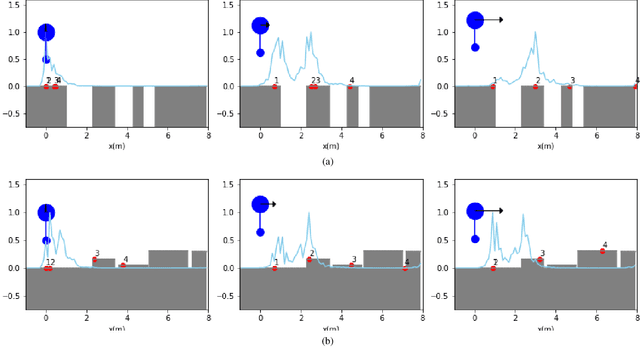

Abstract: One of the fundamental challenges in realizing the potential of legged robots is generating plans to traverse challenging terrains. Control actions must be carefully selected so the robot will not crash or slip. The high dimensionality of the joint space makes directly planning low-level actions from onboard perception difficult, and control stacks that do not consider the low-level mechanisms of the robot in planning are ill-suited to handle fine-grained obstacles. One method for dealing with this is selecting footstep locations based on terrain characteristics. However, incorporating robot dynamics into footstep planning requires significant computation, much more than in the quasi-static case. In this work, we present an LSTM-based planning framework that learns probability distributions over likely footstep locations using both terrain lookahead and the robot's dynamics, and leverages the LSTM's sequential nature to find footsteps in linear time. Our framework can also be used as a module to speed up sampling-based planners. We validate our approach on a simulated one-legged hopper over a variety of uneven terrains.
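The sequential idea in this abstract can be illustrated with a minimal Python sketch. It is not the paper's planner. It assumes a 1-D terrain profile with gaps marked as NaN, a lookahead window of `K` candidate step lengths, and the previous step length as a crude stand-in for the robot's dynamic state. The LSTM weights are untrained random placeholders, and `TinyLSTMPolicy` and `plan_footsteps` are hypothetical names. The sketch shows only the structure: one recurrent call per footstep, so planning time grows linearly with the number of footsteps.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class TinyLSTMPolicy:
    """Hypothetical single-layer LSTM: (terrain window, last step) -> logits over step lengths.

    Weights are random placeholders; a real model would be trained on footstep data.
    """

    def __init__(self, lookahead, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.lookahead, self.hidden = lookahead, hidden
        in_dim = lookahead + 1
        self.W = rng.normal(0.0, 0.1, (4 * hidden, in_dim + hidden))  # stacked i, f, o, g gates
        self.b = np.zeros(4 * hidden)
        self.W_out = rng.normal(0.0, 0.1, (lookahead, hidden))

    def step(self, x, h, c):
        # Standard LSTM cell update followed by a linear read-out to step-length logits.
        z = self.W @ np.concatenate([x, h]) + self.b
        H = self.hidden
        i, f, o = sigmoid(z[:H]), sigmoid(z[H:2 * H]), sigmoid(z[2 * H:3 * H])
        g = np.tanh(z[3 * H:])
        c = f * c + i * g
        h = o * np.tanh(c)
        return self.W_out @ h, h, c


def plan_footsteps(terrain, policy, start=0, max_steps=100):
    """Greedy sequential planner: one LSTM call per footstep, so cost is linear in steps."""
    goal, K = len(terrain) - 1, policy.lookahead
    h, c = np.zeros(policy.hidden), np.zeros(policy.hidden)
    pos, last_step, footsteps = start, 0, [start]
    for _ in range(max_steps):
        if pos >= goal:
            break
        # Terrain lookahead: heights of the next K cells (NaN = gap or off the map).
        window = np.array([terrain[pos + k] if pos + k <= goal else np.nan
                           for k in range(1, K + 1)])
        x = np.concatenate([np.nan_to_num(window, nan=-1.0), [last_step]])
        logits, h, c = policy.step(x, h, c)
        feasible = ~np.isnan(window)
        if not feasible.any():
            raise RuntimeError("no feasible foothold within the lookahead window")
        logits = np.where(feasible, logits, -np.inf)  # mask out gaps
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()                          # distribution over step lengths 1..K
        last_step = int(np.argmax(probs)) + 1
        pos += last_step
        footsteps.append(pos)
    return footsteps


terrain = np.zeros(30)
terrain[[5, 6, 12, 13, 14, 20]] = np.nan  # hypothetical gaps
footsteps = plan_footsteps(terrain, TinyLSTMPolicy(lookahead=4))
print(footsteps)
```

Replacing the `argmax` with a random draw from `probs` would turn the same loop into a proposal distribution. Under this sketch's assumptions, a sampling-based planner could use that proposal to concentrate its samples on likely footholds.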