Benjamin Ramtoula

Polytechnique Montreal

3D Foundation Model-Based Loop Closing for Decentralized Collaborative SLAM

Feb 02, 2026

Abstract:Decentralized Collaborative Simultaneous Localization And Mapping (C-SLAM) techniques often struggle to identify map overlaps due to significant viewpoint variations among robots. Motivated by recent advancements in 3D foundation models, which can register images despite large viewpoint differences, we propose a robust loop closing approach that leverages these models to establish inter-robot measurements. In contrast to resource-intensive methods requiring full 3D reconstruction within a centralized map, our approach integrates foundation models into existing SLAM pipelines, yielding scalable and robust multi-robot mapping. Our contributions include: (1) integrating 3D foundation models to reliably estimate relative poses from monocular image pairs within decentralized C-SLAM; (2) introducing robust outlier mitigation techniques critical to the use of these relative poses; and (3) developing specialized pose graph optimization formulations that efficiently resolve scale ambiguities. We evaluate our method against state-of-the-art approaches, demonstrating improvements in localization and mapping accuracy, alongside significant gains in computational and memory efficiency. These results highlight the potential of our approach for deployment in large-scale multi-robot scenarios.

VDNA-PR: Using General Dataset Representations for Robust Sequential Visual Place Recognition

Mar 14, 2024

Abstract:This paper adapts a general dataset representation technique to produce robust Visual Place Recognition (VPR) descriptors, crucial to enable real-world mobile robot localisation. Two parallel lines of work on VPR have shown, on one side, that general-purpose off-the-shelf feature representations can provide robustness to domain shifts, and, on the other, that fused information from sequences of images improves performance. In our recent work on measuring domain gaps between image datasets, we proposed a Visual Distribution of Neuron Activations (VDNA) representation to represent datasets of images. This representation can naturally handle image sequences and provides a general and granular feature representation derived from a general-purpose model. Moreover, our representation is based on tracking neuron activation values over the list of images to represent and is not limited to a particular neural network layer, therefore having access to high- and low-level concepts. This work shows how VDNAs can be used for VPR by learning a very lightweight and simple encoder to generate task-specific descriptors. Our experiments show that our representation can allow for better robustness than current solutions to serious domain shifts away from the training data distribution, such as to indoor environments and aerial imagery.

What you see is what you get: Experience ranking with deep neural dataset-to-dataset similarity for topological localisation

Oct 20, 2023

Abstract:Recalling the most relevant visual memories for localisation or understanding a priori the likely outcome of localisation effort against a particular visual memory is useful for efficient and robust visual navigation. Solutions to this problem should be divorced from performance appraisal against ground truth - as this is not available at run-time - and should ideally be based on generalisable environmental observations. For this, we propose applying the recently developed Visual DNA as a highly scalable tool for comparing datasets of images - in this work, sequences of map and live experiences. In the case of localisation, important dataset differences impacting performance are modes of appearance change, including weather, lighting, and season. Specifically, for any deep architecture which is used for place recognition by matching feature volumes at a particular layer, we use distribution measures to compare neuron-wise activation statistics between live images and multiple previously recorded past experiences, with a potentially large seasonal (winter/summer) or time of day (day/night) shift. We find that differences in these statistics correlate to performance when localising using a past experience with the same appearance gap. We validate our approach over the Nordland cross-season dataset as well as data from Oxford's University Parks with lighting and mild seasonal change, showing excellent ability of our system to rank actual localisation performance across candidate experiences.

Visual DNA: Representing and Comparing Images using Distributions of Neuron Activations

Apr 20, 2023

Abstract:Selecting appropriate datasets is critical in modern computer vision. However, no general-purpose tools exist to evaluate the extent to which two datasets differ. For this, we propose representing images - and by extension datasets - using Distributions of Neuron Activations (DNAs). DNAs fit distributions, such as histograms or Gaussians, to activations of neurons in a pre-trained feature extractor through which we pass the image(s) to represent. This extractor is frozen for all datasets, and we rely on its generally expressive power in feature space. By comparing two DNAs, we can evaluate the extent to which two datasets differ with granular control over the comparison attributes of interest, providing the ability to customise the way distances are measured to suit the requirements of the task at hand. Furthermore, DNAs are compact, representing datasets of any size with less than 15 megabytes. We demonstrate the value of DNAs by evaluating their applicability on several tasks, including conditional dataset comparison, synthetic image evaluation, and transfer learning, and across diverse datasets, ranging from synthetic cat images to celebrity faces and urban driving scenes.
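
As a rough illustration of the idea described in this abstract, the sketch below fits a one-dimensional Gaussian to each neuron's activations and compares two datasets neuron by neuron. It is only a minimal sketch under stated assumptions: the activations are taken to be already extracted into an array of shape `(n_images, n_neurons)`, the function names are hypothetical, and the per-neuron squared 2-Wasserstein distance is just one possible comparison, not necessarily the one used in the paper.

```python
import numpy as np


def fit_gaussian_dna(activations):
    """Fit one 1D Gaussian per neuron.

    `activations` is assumed to have shape (n_images, n_neurons) and to come
    from a frozen feature extractor (not included in this sketch).
    Returns the per-neuron mean and standard deviation.
    """
    activations = np.asarray(activations, dtype=float)
    return activations.mean(axis=0), activations.std(axis=0)


def per_neuron_distance(dna_a, dna_b):
    """Squared 2-Wasserstein distance between 1D Gaussians, per neuron."""
    mu_a, sd_a = dna_a
    mu_b, sd_b = dna_b
    return (mu_a - mu_b) ** 2 + (sd_a - sd_b) ** 2


def dna_distance(dna_a, dna_b, neuron_mask=None):
    """Average the per-neuron distances, optionally over selected neurons.

    The optional boolean `neuron_mask` is a hypothetical stand-in for the
    granular control over comparison attributes mentioned in the abstract.
    """
    distances = per_neuron_distance(dna_a, dna_b)
    if neuron_mask is not None:
        distances = distances[neuron_mask]
    return float(distances.mean())
```

Because each dataset is reduced to two numbers per neuron, the stored representation does not grow with the number of images, which is in the spirit of the compactness claim above. Restricting the comparison to a subset of neurons through `neuron_mask` is one simple way to customise what the distance is sensitive to.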

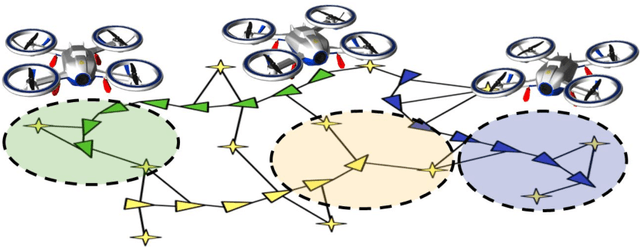

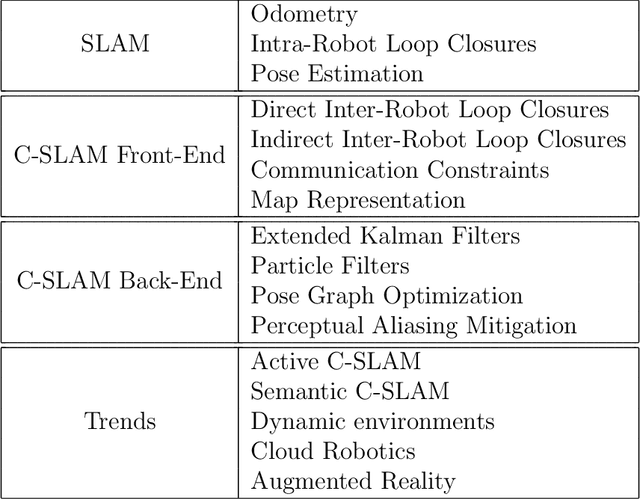

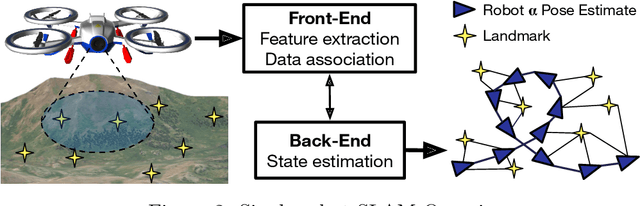

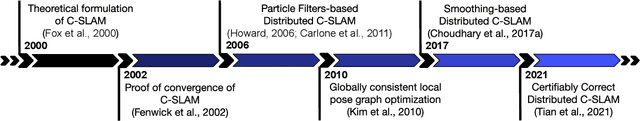

Towards Collaborative Simultaneous Localization and Mapping: a Survey of the Current Research Landscape

Aug 18, 2021

Abstract:Motivated by the tremendous progress we witnessed in recent years, this paper presents a survey of the scientific literature on the topic of Collaborative Simultaneous Localization and Mapping (C-SLAM), also known as multi-robot SLAM. With fleets of self-driving cars on the horizon and the rise of multi-robot systems in industrial applications, we believe that Collaborative SLAM will soon become a cornerstone of future robotic applications. In this survey, we introduce the basic concepts of C-SLAM and present a thorough literature review. We also outline the major challenges and limitations of C-SLAM in terms of robustness, communication, and resource management. We conclude by exploring the area's current trends and promising research avenues.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

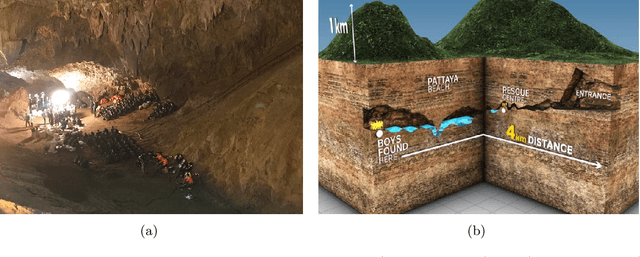

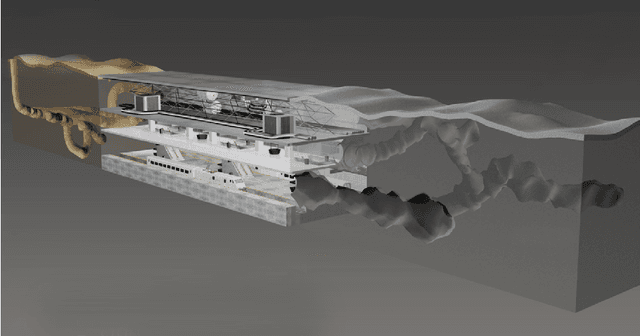

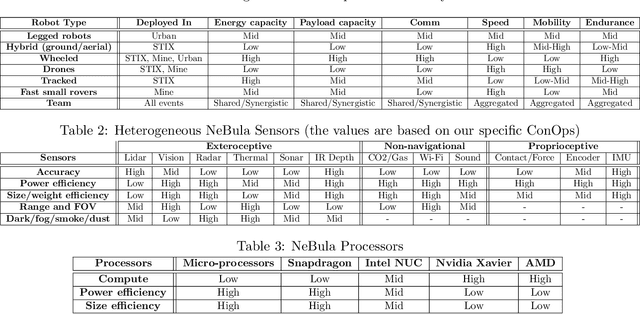

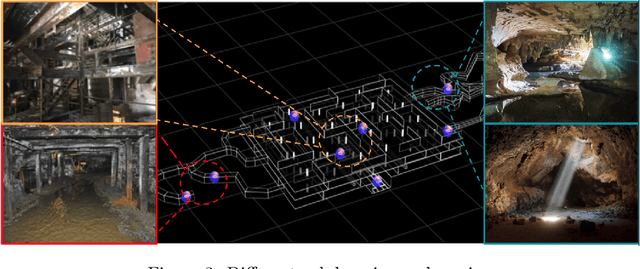

Abstract:This paper presents and discusses algorithms, hardware, and software architecture developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (v) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g. wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

MSL-RAPTOR: A 6DoF Relative Pose Tracker for Onboard Robotic Perception

Dec 16, 2020

Abstract:Determining the relative position and orientation of objects in an environment is a fundamental building block for a wide range of robotics applications. To accomplish this task efficiently in practical settings, a method must be fast, use common sensors, and generalize easily to new objects and environments. We present MSL-RAPTOR, a two-stage algorithm for tracking a rigid body with a monocular camera. The image is first processed by an efficient neural network-based front-end to detect new objects and track 2D bounding boxes between frames. The class label and bounding box are passed to the back-end that updates the object's pose using an unscented Kalman filter (UKF). The measurement posterior is fed back to the 2D tracker to improve robustness. The object's class is identified so a class-specific UKF can be used if custom dynamics and constraints are known. Adapting to track the pose of new classes only requires providing a trained 2D object detector or labeled 2D bounding box data, as well as the approximate size of the objects. The performance of MSL-RAPTOR is first verified on the NOCS-REAL275 dataset, achieving results comparable to RGB-D approaches despite not using depth measurements. When tracking a flying drone from onboard another drone, it outperforms the fastest comparable method in speed by a factor of 3, while giving lower translation and rotation median errors by 66% and 23% respectively.

CAPRICORN: Communication Aware Place Recognition using Interpretable Constellations of Objects in Robot Networks

Oct 19, 2019

Abstract:Using multiple robots for exploring and mapping environments can provide improved robustness and performance, but it can be difficult to implement. In particular, limited communication bandwidth is a considerable constraint when a robot needs to determine if it has visited a location that was previously explored by another robot, as it requires robots to share descriptions of places they have visited. One way to compress this description is to use constellations, groups of 3D points that correspond to the estimate of a set of relative object positions. Constellations maintain the same pattern from different viewpoints and can be robust to illumination changes or dynamic elements. We present a method to extract from these constellations compact spatial and semantic descriptors of the objects in a scene. We use this representation in a 2-step decentralized loop closure verification: first, we distribute the compact semantic descriptors to determine which other robots might have seen scenes with similar objects; then we query matching robots with the full constellation to validate the match using geometric information. The proposed method requires less memory, is more interpretable than global image descriptors, and could be useful for other tasks and interactions with the environment. We validate our system's performance on a TUM RGB-D SLAM sequence and show its benefits in terms of bandwidth requirements.

DOOR-SLAM: Distributed, Online, and Outlier Resilient SLAM for Robotic Teams

Sep 26, 2019

Abstract:To achieve collaborative tasks, robots in a team need to have a shared understanding of the environment and their location within it. Distributed Simultaneous Localization and Mapping (SLAM) offers a practical solution to localize the robots without relying on an external positioning system (e.g. GPS) and with minimal information exchange. Unfortunately, current distributed SLAM systems are vulnerable to perception outliers and therefore tend to use very conservative parameters for inter-robot place recognition. However, being too conservative comes at the cost of rejecting many valid loop closure candidates, which results in less accurate trajectory estimates. This paper introduces DOOR-SLAM, a fully distributed SLAM system with an outlier rejection mechanism that can work with less conservative parameters. DOOR-SLAM is based on peer-to-peer communication and does not require full connectivity among the robots. DOOR-SLAM includes two key modules: a pose graph optimizer combined with a distributed pairwise consistent measurement set maximization algorithm to reject spurious inter-robot loop closures; and a distributed SLAM front-end that detects inter-robot loop closures without exchanging raw sensor data. The system has been evaluated in simulations, benchmarking datasets, and field experiments, including tests in GPS-denied subterranean environments. DOOR-SLAM produces more inter-robot loop closures, successfully rejects outliers, and results in accurate trajectory estimates, while requiring low communication bandwidth. Full source code is available at https://github.com/MISTLab/DOOR-SLAM.git.
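
The outlier rejection idea mentioned in this abstract, keeping the largest set of inter-robot loop closures that agree with each other pairwise, can be illustrated with a small toy example. The sketch below is not DOOR-SLAM's implementation: it is centralized rather than distributed, works with planar poses, uses fixed error thresholds (`max_trans` and `max_rot` are hypothetical values) in place of a proper statistical test, and finds the largest consistent set by brute force, which is only practical for a handful of candidates. All function names are assumptions made for illustration.

```python
import itertools

import numpy as np


def se2(x, y, theta):
    """Planar rigid transform as a 3x3 homogeneous matrix."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])


def cycle_error(odom_a, odom_b, lc1, lc2):
    """Residual of the loop formed by two inter-robot closures and odometry.

    Each closure is (i, k, z): z is the measured transform from robot A's
    pose i to robot B's pose k. If both closures are correct, the composed
    cycle is (close to) the identity.
    """
    (i, k, z1), (j, l, z2) = lc1, lc2
    b_kl = np.linalg.inv(odom_b[k]) @ odom_b[l]  # B's odometry from k to l
    a_ji = np.linalg.inv(odom_a[j]) @ odom_a[i]  # A's odometry from j to i
    cycle = z1 @ b_kl @ np.linalg.inv(z2) @ a_ji
    trans = np.hypot(cycle[0, 2], cycle[1, 2])
    rot = abs(np.arctan2(cycle[1, 0], cycle[0, 0]))
    return trans, rot


def largest_consistent_set(odom_a, odom_b, closures, max_trans=0.2, max_rot=0.1):
    """Return indices of the largest pairwise-consistent set of closures."""
    n = len(closures)
    ok = np.eye(n, dtype=bool)
    for p, q in itertools.combinations(range(n), 2):
        t, r = cycle_error(odom_a, odom_b, closures[p], closures[q])
        ok[p, q] = ok[q, p] = bool(t <= max_trans and r <= max_rot)
    # Brute-force search from the largest subset size down (toy scale only).
    for size in range(n, 0, -1):
        for subset in itertools.combinations(range(n), size):
            if all(ok[p, q] for p, q in itertools.combinations(subset, 2)):
                return list(subset)
    return []
```

Closures that fall outside the returned set would be discarded before pose graph optimization. A real system would replace the brute-force search with a maximum clique solver.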

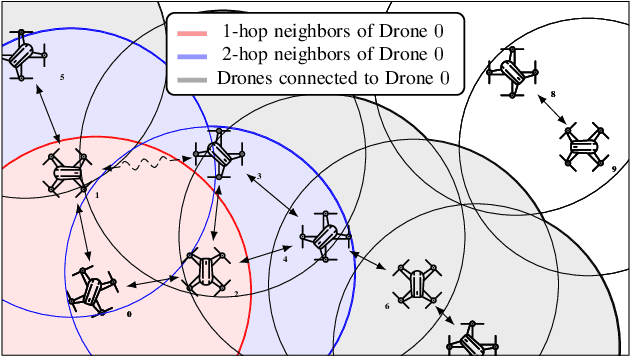

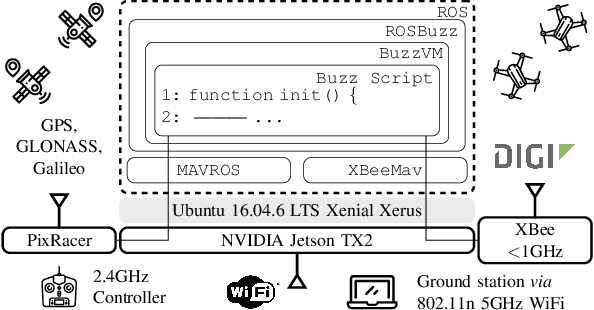

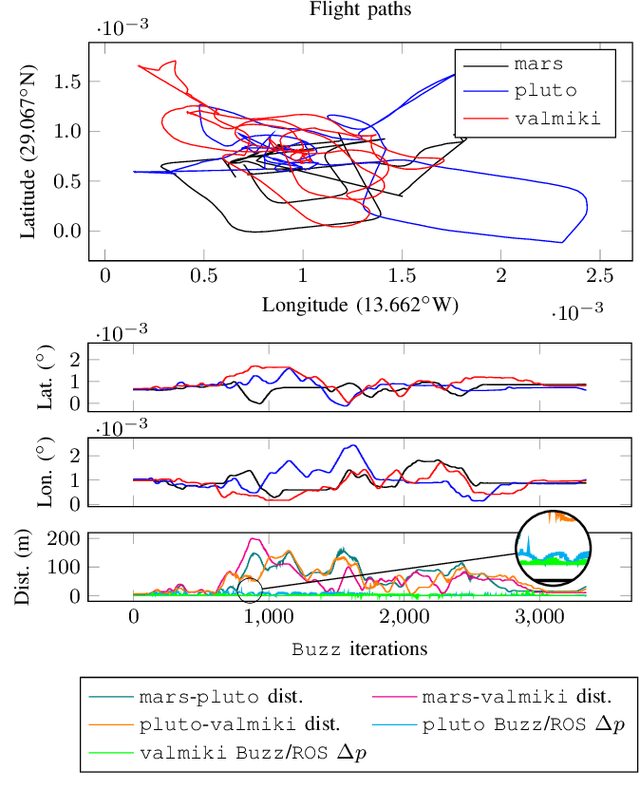

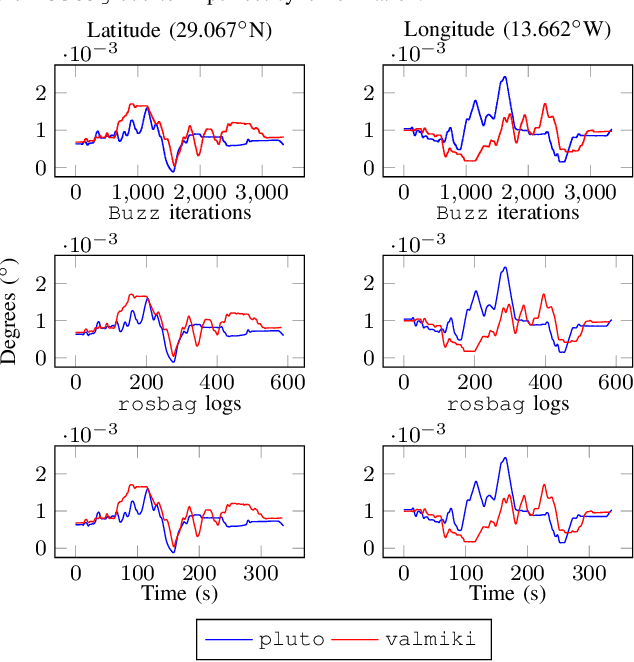

Decentralized Connectivity Control in Quadcopters: a Field Study of Communication Performance

Sep 23, 2019

Abstract:Redundancy and parallelism make decentralized multi-robot systems appealing solutions for the exploration of extreme environments. However, effective cooperation often requires team-wide connectivity and a carefully designed communication strategy. Several recently proposed decentralized connectivity maintenance approaches exploit elegant algebraic results drawn from spectral graph theory. Yet, these proposals are rarely taken beyond simulations or laboratory implementations. In this work, we present two major contributions: (i) we describe the full-stack implementation---from hardware to software---of a decentralized control law for robust connectivity maintenance; and (ii) we assess, in the field, our setup's ability to correctly exchange all the necessary information required to maintain connectivity in a team of quadcopters.
