Pierre-Yves Lajoie

3D Foundation Model-Based Loop Closing for Decentralized Collaborative SLAM

Feb 02, 2026Abstract:Decentralized Collaborative Simultaneous Localization And Mapping (C-SLAM) techniques often struggle to identify map overlaps due to significant viewpoint variations among robots. Motivated by recent advancements in 3D foundation models, which can register images despite large viewpoint differences, we propose a robust loop closing approach that leverages these models to establish inter-robot measurements. In contrast to resource-intensive methods requiring full 3D reconstruction within a centralized map, our approach integrates foundation models into existing SLAM pipelines, yielding scalable and robust multi-robot mapping. Our contributions include: (1) integrating 3D foundation models to reliably estimate relative poses from monocular image pairs within decentralized C-SLAM; (2) introducing robust outlier mitigation techniques critical to the use of these relative poses; and (3) developing specialized pose graph optimization formulations that efficiently resolve scale ambiguities. We evaluate our method against state-of-the-art approaches, demonstrating improvements in localization and mapping accuracy, alongside significant gains in computational and memory efficiency. These results highlight the potential of our approach for deployment in large-scale multi-robot scenarios.

Multi-Robot Decentralized Collaborative SLAM in Planetary Analogue Environments: Dataset, Challenges, and Lessons Learned

Jan 28, 2026Abstract:Decentralized collaborative simultaneous localization and mapping (C-SLAM) is essential to enable multirobot missions in unknown environments without relying on preexisting localization and communication infrastructure. This technology is anticipated to play a key role in the exploration of the Moon, Mars, and other planets. In this article, we share insights and lessons learned from C-SLAM experiments involving three robots operating on a Mars analogue terrain and communicating over an ad hoc network. We examine the impact of limited and intermittent communication on C-SLAM performance, as well as the unique localization challenges posed by planetary-like environments. Additionally, we introduce a novel dataset collected during our experiments, which includes real-time peer-to-peer inter-robot throughput and latency measurements. This dataset aims to support future research on communication-constrained, decentralized multirobot operations.

Frequency-based View Selection in Gaussian Splatting Reconstruction

Sep 24, 2024

Abstract:Three-dimensional reconstruction is a fundamental problem in robotics perception. We examine the problem of active view selection to perform 3D Gaussian Splatting reconstructions with as few input images as possible. Although 3D Gaussian Splatting has made significant progress in image rendering and 3D reconstruction, the quality of the reconstruction is strongly impacted by the selection of 2D images and the estimation of camera poses through Structure-from-Motion (SfM) algorithms. Current methods to select views that rely on uncertainties from occlusions, depth ambiguities, or neural network predictions directly are insufficient to handle the issue and struggle to generalize to new scenes. By ranking the potential views in the frequency domain, we are able to effectively estimate the potential information gain of new viewpoints without ground truth data. By overcoming current constraints on model architecture and efficacy, our method achieves state-of-the-art results in view selection, demonstrating its potential for efficient image-based 3D reconstruction.

Hierarchies define the scalability of robot swarms

May 03, 2024

Abstract:The emerging behaviors of swarms have fascinated scientists and gathered significant interest in the field of robotics. Traditionally, swarms are viewed as egalitarian, with robots sharing identical roles and capabilities. However, recent findings highlight the importance of hierarchy for deploying robot swarms more effectively in diverse scenarios. Despite nature's preference for hierarchies, the robotics field has clung to the egalitarian model, partly due to a lack of empirical evidence for the conditions favoring hierarchies. Our research demonstrates that while egalitarian swarms excel in environments proportionate to their collective sensing abilities, they struggle in larger or more complex settings. Hierarchical swarms, conversely, extend their sensing reach efficiently, proving successful in larger, more unstructured environments with fewer resources. We validated these concepts through simulations and physical robot experiments, using a complex radiation cleanup task. This study paves the way for developing adaptable, hierarchical swarm systems applicable in areas like planetary exploration and autonomous vehicles. Moreover, these insights could deepen our understanding of hierarchical structures in biological organisms.

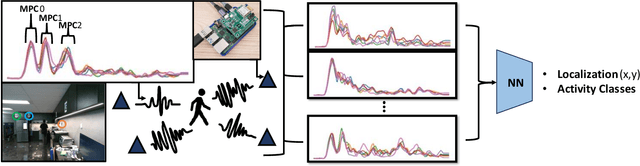

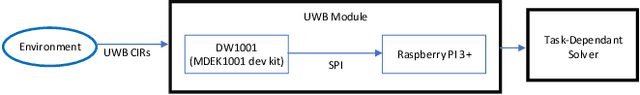

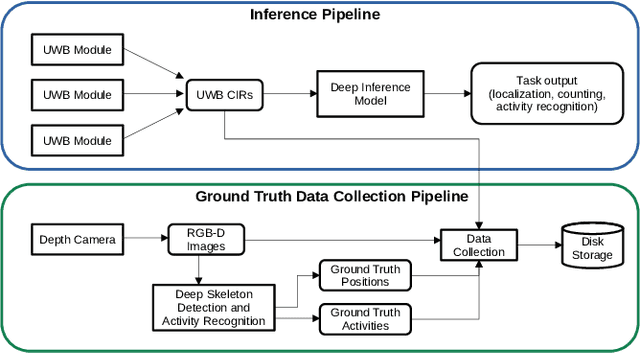

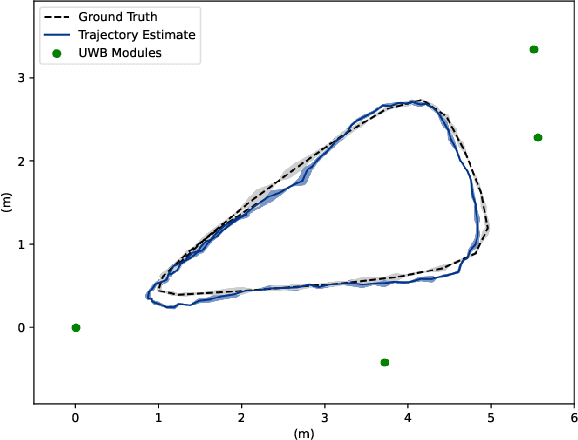

Device-Free Human State Estimation using UWB Multi-Static Radios

Dec 26, 2023

Abstract:We present a human state estimation framework that allows us to estimate the location, and even the activities, of people in an indoor environment without the requirement that they carry a specific devices with them. To achieve this "device free" localization we use a small number of low-cost Ultra-Wide Band (UWB) sensors distributed across the environment of interest. To achieve high quality estimation from the UWB signals merely reflected of people in the environment, we exploit a deep network that can learn to make inferences. The hardware setup consists of commercial off-the-shelf (COTS) single antenna UWB modules for sensing, paired with Raspberry PI units for computational processing and data transfer. We make use of the channel impulse response (CIR) measurements from the UWB sensors to estimate the human state - comprised of location and activity - in a given area. Additionally, we can also estimate the number of humans that occupy this region of interest. In our approach, first, we pre-process the CIR data which involves meticulous aggregation of measurements and extraction of key statistics. Afterwards, we leverage a convolutional deep neural network to map the CIRs into precise location estimates with sub-30 cm accuracy. Similarly, we achieve accurate human activity recognition and occupancy counting results. We show that we can quickly fine-tune our model for new out-of-distribution users, a process that requires only a few minutes of data and a few epochs of training. Our results show that UWB is a promising solution for adaptable smart-home localization and activity recognition problems.

PEOPLEx: PEdestrian Opportunistic Positioning LEveraging IMU, UWB, BLE and WiFi

Nov 30, 2023

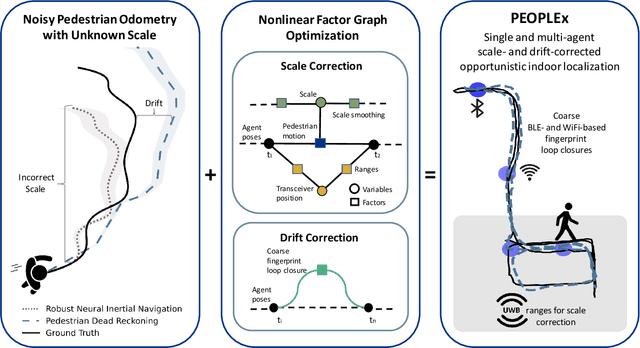

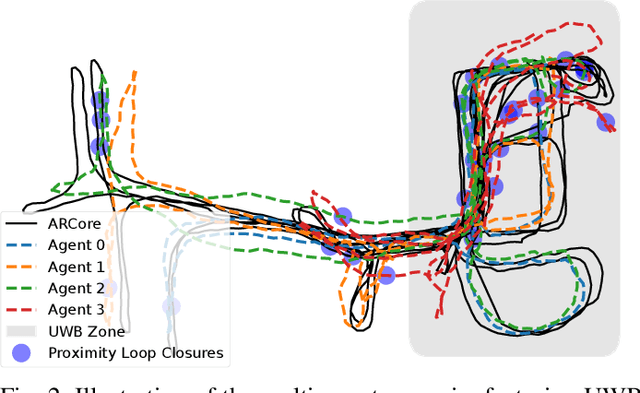

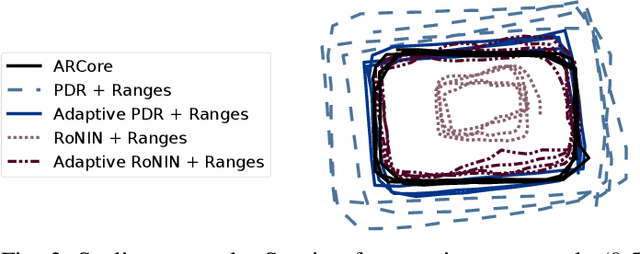

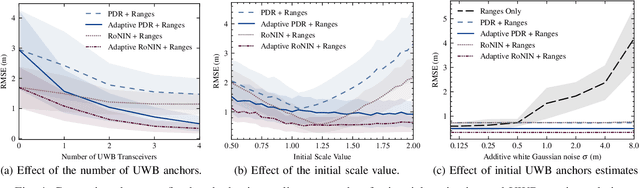

Abstract:This paper advances the field of pedestrian localization by introducing a unifying framework for opportunistic positioning based on nonlinear factor graph optimization. While many existing approaches assume constant availability of one or multiple sensing signals, our methodology employs IMU-based pedestrian inertial navigation as the backbone for sensor fusion, opportunistically integrating Ultra-Wideband (UWB), Bluetooth Low Energy (BLE), and WiFi signals when they are available in the environment. The proposed PEOPLEx framework is designed to incorporate sensing data as it becomes available, operating without any prior knowledge about the environment (e.g. anchor locations, radio frequency maps, etc.). Our contributions are twofold: 1) we introduce an opportunistic multi-sensor and real-time pedestrian positioning framework fusing the available sensor measurements; 2) we develop novel factors for adaptive scaling and coarse loop closures, significantly improving the precision of indoor positioning. Experimental validation confirms that our approach achieves accurate localization estimates in real indoor scenarios using commercial smartphones.

Swarm-SLAM : Sparse Decentralized Collaborative Simultaneous Localization and Mapping Framework for Multi-Robot Systems

Jan 16, 2023

Abstract:Collaborative Simultaneous Localization And Mapping (C-SLAM) is a vital component for successful multi-robot operations in environments without an external positioning system, such as indoors, underground or underwater. In this paper, we introduce Swarm-SLAM, an open-source C-SLAM system that is designed to be scalable, flexible, decentralized, and sparse, which are all key properties in swarm robotics. Our system supports inertial, lidar, stereo, and RGB-D sensing, and it includes a novel inter-robot loop closure prioritization technique that reduces communication and accelerates convergence. We evaluated our ROS-2 implementation on five different datasets, and in a real-world experiment with three robots communicating through an ad-hoc network. Our code is publicly available: https://github.com/MISTLab/Swarm-SLAM

Analyze, Debug, Optimize: Real-Time Tracing for Perception and Mapping Systems in ROS 2

Apr 25, 2022

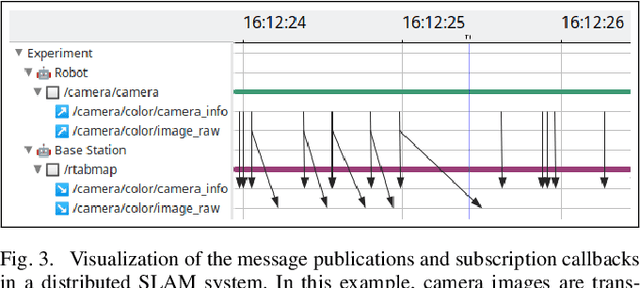

Abstract:Perception and mapping systems are among the most computationally, memory, and bandwidth intensive software components in robotics. Therefore, analysis, debugging, and optimization are crucial to improve perception systems performance in real-time applications. However, standard approaches often depict a partial picture of the actual performance. Fortunately, instrumentation and tracing offer a great opportunity for detailed performance analysis of real-time systems. In this paper, we show how our novel open-source tracing tools and techniques for ROS 2 enable us to identify delays, bottlenecks and critical paths inside centralized, or distributed, perception and mapping systems.

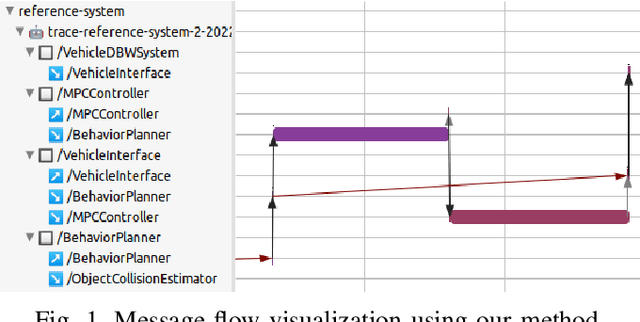

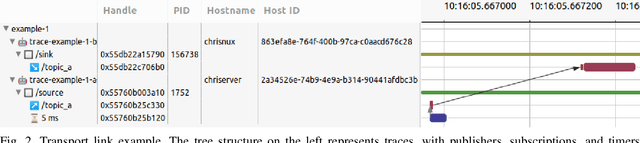

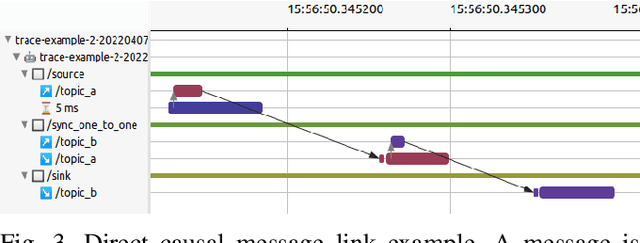

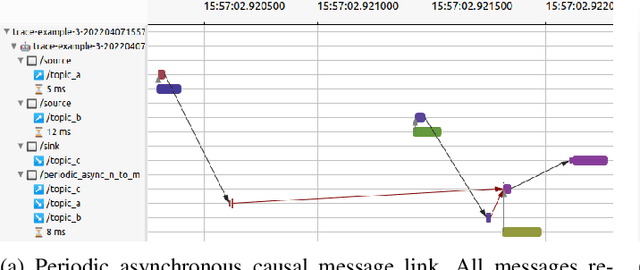

Message Flow Analysis with Complex Causal Links for Distributed ROS 2 Systems

Apr 25, 2022

Abstract:Distributed robotic systems rely heavily on publish-subscribe frameworks, such as ROS, to efficiently implement modular computation graphs. The ROS 2 executor, a high-level task scheduler which handles messages internally, is a performance bottleneck. In previous work, we presented ros2_tracing, a framework with instrumentation and tools for real-time tracing of ROS 2. We now extend on that instrumentation and leverage the tracing tools to propose an analysis and visualization of the flow of messages across distributed ROS 2 systems. Our proposed method detects one-to-many and many-to-many causal links between input and output messages, including indirect causal links through simple user-level annotations. We validate our method on both synthetic and real robotic systems, and demonstrate its low runtime overhead. Moreover, the underlying intermediate execution representation database can be further leveraged to extract additional metrics and high-level results. This can provide valuable timing and scheduling information to further study and improve the ROS 2 executor as well as optimize any ROS 2 system. The source code is available at: https://github.com/christophebedard/ros2-message-flow-analysis.

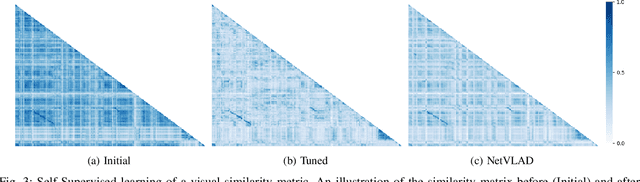

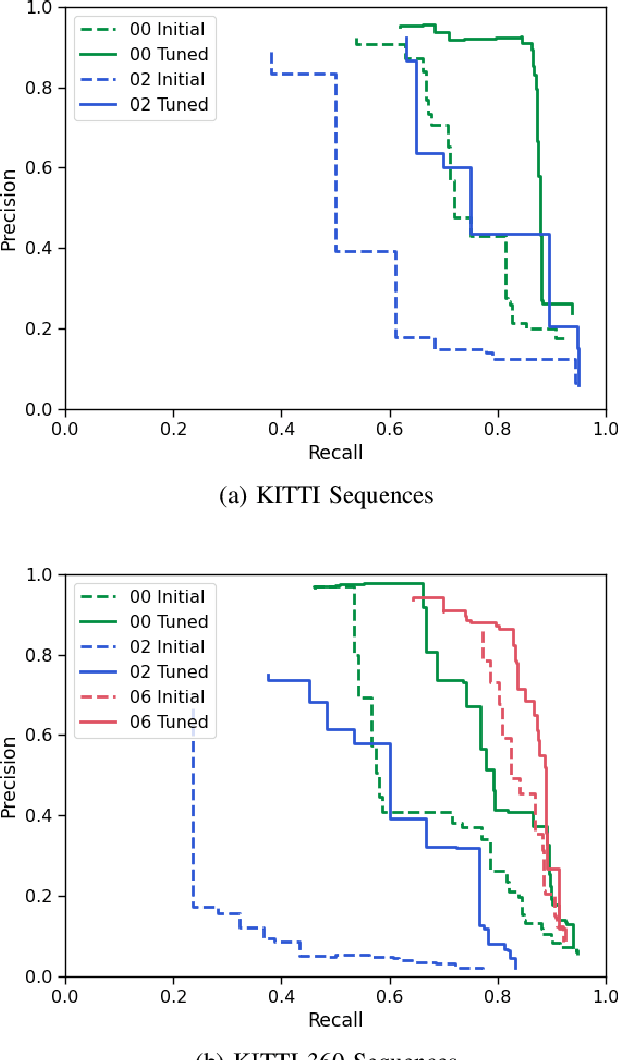

Tune your Place Recognition: Self-Supervised Domain Calibration via Robust SLAM

Mar 08, 2022

Abstract:Visual place recognition techniques based on deep learning, which have imposed themselves as the state-of-the-art in recent years, do not always generalize well to environments that are visually different from the training set. Thus, to achieve top performance, it is sometimes necessary to fine-tune the networks to the target environment. To this end, we propose a completely self-supervised domain calibration procedure based on robust pose graph estimation from Simultaneous Localization and Mapping (SLAM) as the supervision signal without requiring GPS or manual labeling. We first show that the training samples produced by our technique are sufficient to train a visual place recognition system from a pre-trained classification model. Then, we show that our approach can improve the performance of a state-of-the-art technique on a target environment dissimilar from the training set. We believe that this approach will help practitioners to deploy more robust place recognition solutions in real-world applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge