Amal Feriani

Device-Free Human State Estimation using UWB Multi-Static Radios

Dec 26, 2023

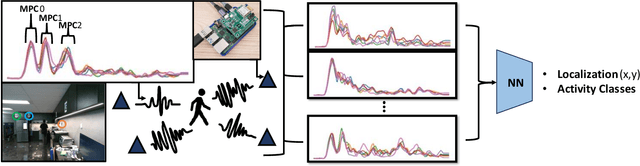

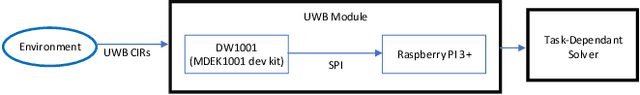

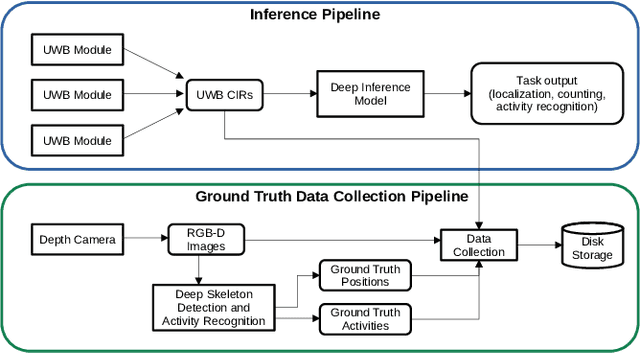

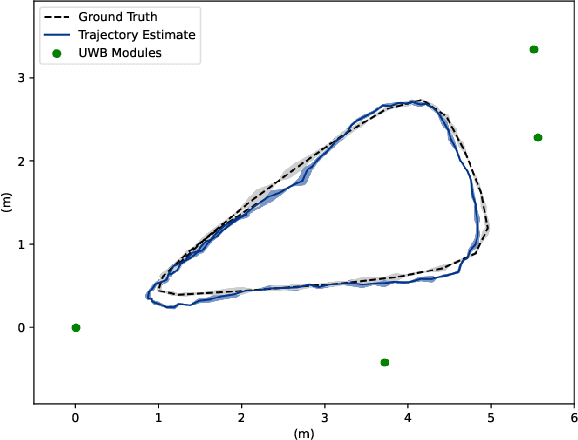

Abstract: We present a human state estimation framework that allows us to estimate the location, and even the activities, of people in an indoor environment without requiring them to carry a specific device. To achieve this "device-free" localization, we use a small number of low-cost Ultra-Wide Band (UWB) sensors distributed across the environment of interest. To achieve high-quality estimation from UWB signals that are merely reflected off people in the environment, we exploit a deep network that can learn to make inferences. The hardware setup consists of commercial off-the-shelf (COTS) single-antenna UWB modules for sensing, paired with Raspberry Pi units for computational processing and data transfer. We make use of the channel impulse response (CIR) measurements from the UWB sensors to estimate the human state (comprising location and activity) in a given area. Additionally, we can estimate the number of humans that occupy this region of interest. In our approach, we first pre-process the CIR data, which involves meticulous aggregation of measurements and extraction of key statistics. Afterwards, we leverage a convolutional deep neural network to map the CIRs into precise location estimates with sub-30 cm accuracy. Similarly, we achieve accurate human activity recognition and occupancy counting results. We show that we can quickly fine-tune our model for new out-of-distribution users, a process that requires only a few minutes of data and a few epochs of training. Our results show that UWB is a promising solution for adaptable smart-home localization and activity recognition problems.
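
The CIR pre-processing step mentioned in this abstract (aggregating measurements and extracting statistics) could look roughly like the sketch below. The abstract does not say which statistics are used or how frames are windowed, so the per-tap mean, standard deviation, and peak, as well as the function name `summarize_cir_window`, are illustrative assumptions only.

```python
import statistics


def summarize_cir_window(cir_frames):
    """Aggregate a window of CIR magnitude frames into per-tap statistics.

    cir_frames: list of equal-length lists, one list of tap magnitudes per frame.
    Returns one (mean, std, peak) tuple per tap.
    NOTE: the choice of statistics is an assumption, not taken from the paper.
    """
    if not cir_frames:
        raise ValueError("need at least one CIR frame")
    n_taps = len(cir_frames[0])
    if any(len(frame) != n_taps for frame in cir_frames):
        raise ValueError("all frames must have the same number of taps")

    features = []
    # zip(*cir_frames) iterates over taps, yielding that tap's value in every frame.
    for tap_values in zip(*cir_frames):
        mean = statistics.fmean(tap_values)
        std = statistics.pstdev(tap_values)  # population std over the window
        features.append((mean, std, max(tap_values)))
    return features


# Two frames with three taps each (made-up magnitudes).
window = [[0.0, 1.0, 4.0], [2.0, 1.0, 2.0]]
features = summarize_cir_window(window)
```

A feature matrix of this kind (taps by statistics) is one plausible input for a convolutional network; the actual input layout used by the authors is not given here.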

SAGE: Smart home Agent with Grounded Execution

Nov 01, 2023

Abstract: This article introduces SAGE (Smart home Agent with Grounded Execution), a framework designed to maximize the flexibility of smart home assistants by replacing manually-defined inference logic with an LLM-powered autonomous agent system. SAGE integrates information about user preferences, device states, and external factors (such as weather and TV schedules) through the orchestration of a collection of tools. SAGE's capabilities include learning user preferences from natural-language utterances, interacting with devices by reading their API documentation, writing code to continuously monitor devices, and understanding natural device references. To evaluate SAGE, we develop a benchmark of 43 highly challenging smart home tasks, of which SAGE successfully completes 23, significantly outperforming existing LLM-enabled baselines (5/43).

Channel Estimation in RIS-Enabled mmWave Wireless Systems: A Variational Inference Approach

Aug 25, 2023

Abstract: We propose a variational inference (VI)-based channel state information (CSI) estimation approach in a fully-passive reconfigurable intelligent surface (RIS)-aided mmWave single-user single-input multiple-output (SIMO) communication system. Specifically, we first propose a VI-based joint channel estimation method to estimate the user-equipment (UE) to RIS (UE-RIS) and RIS to base station (RIS-BS) channels using uplink training signals in a passive RIS setup. However, updating the phase-shifts based on the instantaneous CSI (I-CSI) leads to a high signaling overhead, especially due to the short coherence block of the UE-RIS channel. Therefore, to reduce the signaling complexity, we propose a VI-based method to estimate the RIS-BS channel along with the covariance matrix of the UE-RIS channel, which remains quasi-static for a longer period than the instantaneous UE-RIS channel. In the VI framework, we approximate the posterior of the channel gains/covariance matrix with convenient distributions given the received uplink training signals. Then, the learned distributions, which are close to the true posterior distributions in terms of Kullback-Leibler divergence, are leveraged to obtain the maximum a posteriori (MAP) estimate of the considered CSI. The simulation results demonstrate that MAP channel estimation using approximated posteriors yields a capacity that is close to the one achieved with true posteriors, thus demonstrating the effectiveness of the proposed methods. Furthermore, our results show that estimating the channel covariance matrix improves the spectral efficiency by reducing the pilot signaling required to obtain the phase-shifts for the RIS elements in a channel-varying environment.

CeBed: A Benchmark for Deep Data-Driven OFDM Channel Estimation

Jun 23, 2023

Abstract: Deep learning has been extensively used in wireless communication problems, including channel estimation. Although several data-driven approaches exist, a fair and realistic comparison between them is difficult due to inconsistencies in the experimental conditions and the lack of a standardized experimental design. In addition, the performance of data-driven approaches is often compared based on empirical analysis. The lack of reproducibility and availability of standardized evaluation tools (e.g., datasets, codebases) hinders the development and progress of data-driven methods for channel estimation and wireless communication in general. In this work, we introduce an initiative to build benchmarks that unify several data-driven OFDM channel estimation approaches. Specifically, we present CeBed (a testbed for channel estimation), which includes different datasets covering various system models and propagation conditions along with the implementation of ten deep and traditional baselines. This benchmark considers different practical aspects such as the robustness of the data-driven models, the number and the arrangement of pilots, and the number of receive antennas. This work offers a comprehensive and unified framework to help researchers evaluate and design data-driven channel estimation algorithms.

Mixed-Variable PSO with Fairness on Multi-Objective Field Data Replication in Wireless Networks

Mar 23, 2023

Abstract: Digital twins have shown great potential in supporting the development of wireless networks. They are virtual representations of 5G/6G systems enabling the design of machine learning and optimization-based techniques. Field data replication is one of the critical aspects of building a simulation-based twin, where the objective is to calibrate the simulation to match field performance measurements. Since wireless networks involve a variety of key performance indicators (KPIs), the replication process becomes a multi-objective optimization problem in which the purpose is to minimize the error between the simulated and field data KPIs. Unlike previous works, we focus on designing a data-driven search method to calibrate the simulator and achieve accurate and reliable reproduction of field performance. This work proposes a search-based algorithm based on mixed-variable particle swarm optimization (PSO) to find the optimal simulation parameters. Furthermore, we extend this solution to account for potential conflicts between the KPIs, using the α-fairness concept to adjust the importance attributed to each KPI during the search. Experiments on field data showcase the effectiveness of our approach to (i) improve the accuracy of the replication, (ii) enhance the fairness between the different KPIs, and (iii) guarantee faster convergence compared to other methods.
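
For readers unfamiliar with α-fairness, the sketch below shows the standard α-fair utility, U(x) = x^(1-α)/(1-α) for α ≠ 1 and U(x) = log(x) for α = 1, summed over several objectives. How the paper maps KPI errors into this utility is not stated in the abstract; the per-KPI "scores" in (0, 1] and the function names here are hypothetical.

```python
import math


def alpha_fair_utility(x, alpha):
    """Standard alpha-fair utility of a positive quantity x, for alpha >= 0."""
    if x <= 0:
        raise ValueError("x must be positive")
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if math.isclose(alpha, 1.0):
        return math.log(x)  # limiting case alpha -> 1 (proportional fairness)
    return x ** (1.0 - alpha) / (1.0 - alpha)


def aggregate_kpi_scores(scores, alpha):
    """Sum of alpha-fair utilities over per-KPI scores (hypothetical scoring)."""
    return sum(alpha_fair_utility(s, alpha) for s in scores)


balanced = [0.5, 0.5]    # both KPIs matched moderately well (made-up values)
unbalanced = [0.9, 0.1]  # one KPI matched well, the other poorly

# alpha = 0 is a plain sum, so the two candidates tie;
# larger alpha increasingly penalizes the poorly matched KPI.
tie_gap = aggregate_kpi_scores(balanced, 0.0) - aggregate_kpi_scores(unbalanced, 0.0)
fair_gap = aggregate_kpi_scores(balanced, 2.0) - aggregate_kpi_scores(unbalanced, 2.0)
```

With α = 0 the objective ignores how the total is distributed across KPIs, while larger α values favor candidates whose worst KPI is better, which is the trade-off the fairness extension is meant to control.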

Domain Generalization in Machine Learning Models for Wireless Communications: Concepts, State-of-the-Art, and Open Issues

Mar 13, 2023

Abstract: Data-driven machine learning (ML) is promoted as one potential technology to be used in next-generation wireless systems. This has led to a large body of research work that applies ML techniques to solve problems in different layers of the wireless transmission link. However, most of these applications rely on supervised learning, which assumes that the source (training) and target (test) data are independent and identically distributed (i.i.d.). This assumption is often violated in the real world due to domain or distribution shifts between the source and the target data. Thus, it is important to ensure that these algorithms generalize to out-of-distribution (OOD) data. In this context, domain generalization (DG) tackles the OOD-related issues by learning models on different and distinct source domains/datasets with generalization capabilities to unseen new domains without additional fine-tuning. Motivated by the importance of DG requirements for wireless applications, we present a comprehensive overview of the recent developments in DG and the different sources of domain shift. We also summarize the existing DG methods and review their applications in selected wireless communication problems, and conclude with insights and open questions.

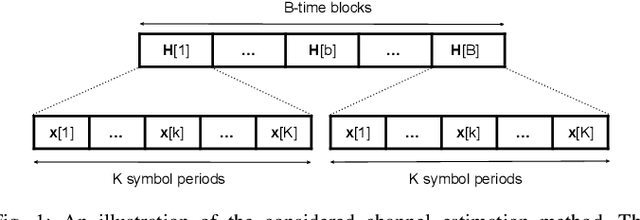

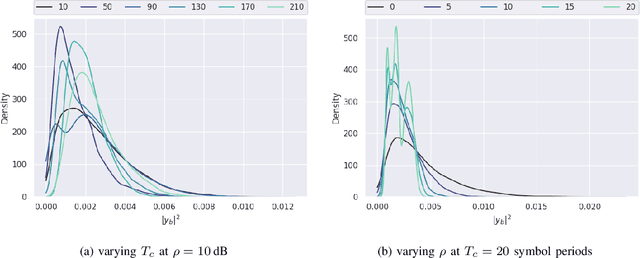

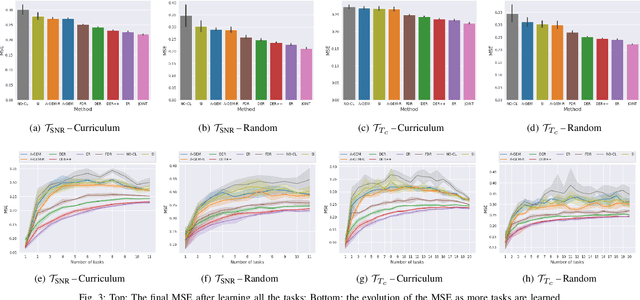

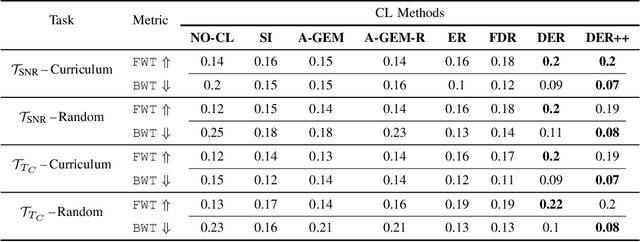

Continual Learning-Based MIMO Channel Estimation: A Benchmarking Study

Nov 19, 2022

Abstract: With the proliferation of deep learning techniques for wireless communication, several works have adopted learning-based approaches to solve the channel estimation problem. While these methods are usually promoted for their computational efficiency at inference time, their use is restricted to specific stationary training settings in terms of communication system parameters, e.g., signal-to-noise ratio (SNR) and coherence time. Therefore, the performance of these learning-based solutions will degrade when the models are tested on settings different from those used for training. This motivates our work, in which we investigate continual supervised learning (CL) to mitigate the shortcomings of the current approaches. In particular, we design a set of channel estimation tasks wherein we vary different parameters of the channel model. We focus on Gauss-Markov Rayleigh fading channel estimation to assess the impact of non-stationarity on performance in terms of the mean square error (MSE) criterion. We study a selection of state-of-the-art CL methods and we showcase empirically the importance of catastrophic forgetting in continuously evolving channel settings. Our results demonstrate that the CL algorithms can improve the interference performance in two channel estimation tasks governed by changes in the SNR level and coherence time.
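
As a rough illustration of the channel model named in this abstract, the sketch below simulates a scalar first-order Gauss-Markov Rayleigh fading process, h[t] = ρ·h[t-1] + sqrt(1-ρ²)·w[t] with w[t] ~ CN(0, 1). This is a commonly used form and is assumed here; the abstract does not give the exact model, dimensions, or parameter values, and the function names are hypothetical.

```python
import math
import random


def complex_gaussian(rng):
    """Draw one sample from CN(0, 1), i.e. unit total variance."""
    scale = math.sqrt(0.5)  # each of the real and imaginary parts has variance 1/2
    return complex(rng.gauss(0.0, scale), rng.gauss(0.0, scale))


def gauss_markov_channel(n_steps, rho, seed=0):
    """Simulate n_steps of a scalar first-order Gauss-Markov fading channel.

    rho in [0, 1] is the temporal correlation coefficient: values near 1
    correspond to a slowly varying channel (long coherence time).
    """
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [0, 1]")
    rng = random.Random(seed)
    innovation_scale = math.sqrt(1.0 - rho ** 2)  # keeps E[|h|^2] = 1 over time
    h = complex_gaussian(rng)
    trajectory = [h]
    for _ in range(n_steps - 1):
        h = rho * h + innovation_scale * complex_gaussian(rng)
        trajectory.append(h)
    return trajectory


slow = gauss_markov_channel(n_steps=100, rho=0.99)  # long coherence time
fast = gauss_markov_channel(n_steps=100, rho=0.50)  # short coherence time
```

Changing `rho` between tasks is one simple way to emulate the coherence-time shifts the abstract describes; additive noise at a chosen SNR would be applied to the received pilots separately.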

Decentralized Multi-Agent Reinforcement Learning for Task Offloading Under Uncertainty

Jul 26, 2021

Abstract: Multi-Agent Reinforcement Learning (MARL) is a challenging subarea of Reinforcement Learning due to the non-stationarity of the environments and the large dimensionality of the combined action space. Deep MARL algorithms have been applied to solve different task offloading problems. However, in real-world applications, information required by the agents (i.e., rewards and states) is subject to noise and alterations. The stability and robustness of deep MARL to practical challenges is still an open research problem. In this work, we apply state-of-the-art MARL algorithms to solve task offloading with reward uncertainty. We show that perturbations in the reward signal can induce a decrease in performance compared to learning with perfect rewards. We expect this paper to stimulate more research in studying and addressing the practical challenges of deploying deep MARL solutions in wireless communications systems.

On the Robustness of Deep Reinforcement Learning in IRS-Aided Wireless Communications Systems

Jul 26, 2021

Abstract: We consider an Intelligent Reflecting Surface (IRS)-aided multiple-input single-output (MISO) system for downlink transmission. We compare the performance of Deep Reinforcement Learning (DRL) and conventional optimization methods in finding optimal phase shifts of the IRS elements to maximize the user signal-to-noise ratio (SNR). Furthermore, we evaluate the robustness of these methods to channel impairments and changes in the system. We demonstrate numerically that DRL solutions show more robustness to noisy channels and user mobility.

Single and Multi-Agent Deep Reinforcement Learning for AI-Enabled Wireless Networks: A Tutorial

Nov 06, 2020

Abstract: Deep Reinforcement Learning (DRL) has recently witnessed significant advances that have led to multiple successes in solving sequential decision-making problems in various domains, particularly in wireless communications. The future sixth-generation (6G) networks are expected to provide scalable, low-latency, ultra-reliable services empowered by the application of data-driven Artificial Intelligence (AI). The key enabling technologies of future 6G networks, such as intelligent meta-surfaces, aerial networks, and AI at the edge, involve more than one agent, which motivates the importance of multi-agent learning techniques. Furthermore, cooperation is central to establishing self-organizing, self-sustaining, and decentralized networks. In this context, this tutorial focuses on the role of DRL with an emphasis on deep Multi-Agent Reinforcement Learning (MARL) for AI-enabled 6G networks. The first part of this paper will present a clear overview of the mathematical frameworks for single-agent RL and MARL. The main idea of this work is to motivate the application of RL beyond the model-free perspective, which was extensively adopted in recent years. Thus, we provide a selective description of RL algorithms such as Model-Based RL (MBRL) and cooperative MARL and we highlight their potential applications in 6G wireless networks. Finally, we overview the state of the art of MARL in fields such as Mobile Edge Computing (MEC), Unmanned Aerial Vehicle (UAV) networks, and cell-free massive MIMO, and identify promising future research directions. We expect this tutorial to stimulate more research endeavors to build scalable and decentralized systems based on MARL.
