Sepand Dyanatkar

Composing Open-domain Vision with RAG for Ocean Monitoring and Conservation

Dec 03, 2024

Abstract: Climate change's destruction of marine biodiversity is threatening communities and economies around the world that rely on healthy oceans for their livelihoods. The challenge of applying computer vision to niche, real-world domains such as ocean conservation lies in the dynamic and diverse environments where traditional top-down learning struggles with long-tailed distributions, generalization, and domain transfer. Scalable species identification for ocean monitoring is particularly difficult due to the need to adapt models to new environments and identify rare or unseen species. To overcome these limitations, we propose leveraging bottom-up, open-domain learning frameworks as a resilient, scalable solution for image and video analysis in marine applications. Our preliminary demonstration uses pretrained vision-language models (VLMs) combined with retrieval-augmented generation (RAG) as grounding, leaving the door open for numerous architectural, training, and engineering optimizations. We validate this approach through a preliminary application in classifying fish from video onboard fishing vessels, demonstrating impressive emergent retrieval and prediction capabilities without domain-specific training or knowledge of the task itself.

Hierarchies define the scalability of robot swarms

May 03, 2024

Abstract: The emerging behaviors of swarms have fascinated scientists and gathered significant interest in the field of robotics. Traditionally, swarms are viewed as egalitarian, with robots sharing identical roles and capabilities. However, recent findings highlight the importance of hierarchy for deploying robot swarms more effectively in diverse scenarios. Despite nature's preference for hierarchies, the robotics field has clung to the egalitarian model, partly due to a lack of empirical evidence for the conditions favoring hierarchies. Our research demonstrates that while egalitarian swarms excel in environments proportionate to their collective sensing abilities, they struggle in larger or more complex settings. Hierarchical swarms, conversely, extend their sensing reach efficiently, proving successful in larger, more unstructured environments with fewer resources. We validated these concepts through simulations and physical robot experiments, using a complex radiation cleanup task. This study paves the way for developing adaptable, hierarchical swarm systems applicable in areas like planetary exploration and autonomous vehicles. Moreover, these insights could deepen our understanding of hierarchical structures in biological organisms.

Hierarchical Control of Smart Particle Swarms

Apr 14, 2022

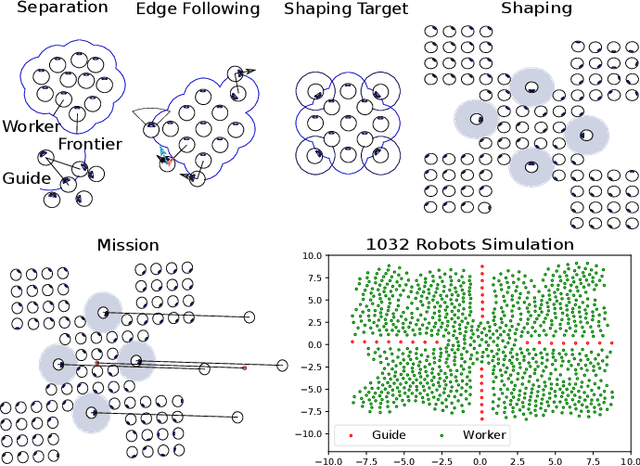

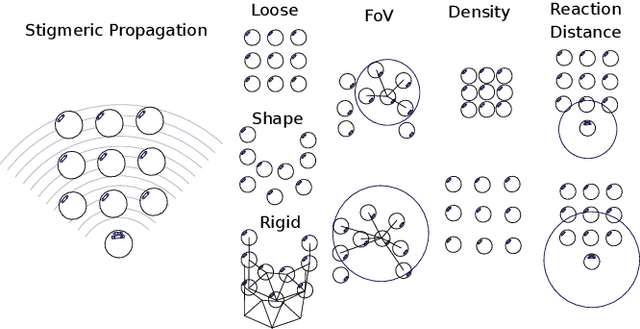

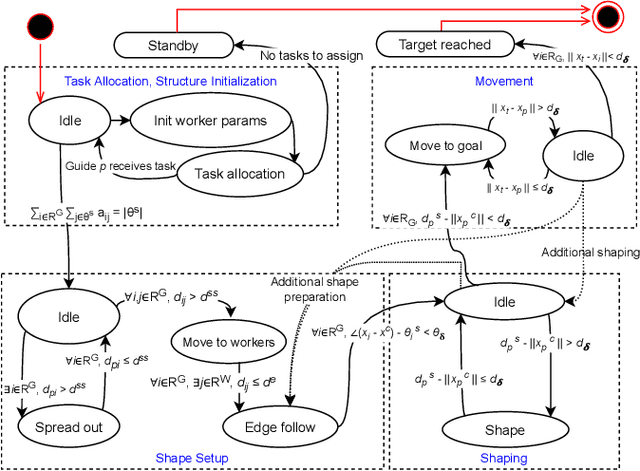

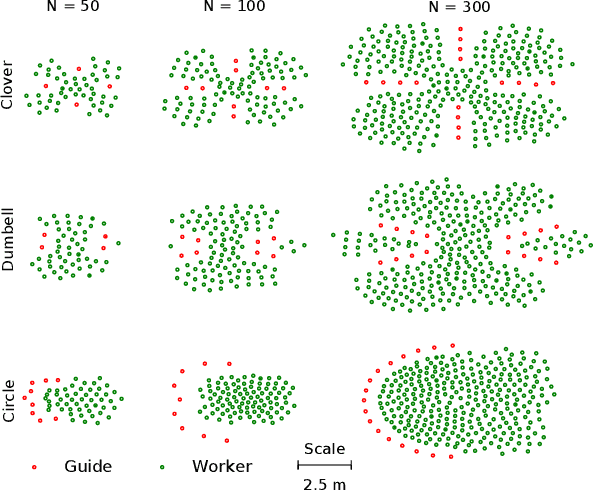

Abstract: We present a method for the control of robot swarms which allows the shaping and the translation of patterns of simple robots ("smart particles"), using two types of devices. These two types represent a hierarchy: a larger group of simple, oblivious robots (which we call the workers) that is governed by simple local attraction forces, and a smaller group (the guides) with sufficient mission knowledge to create and maintain a desired pattern by operating on the local forces of the former. This framework exploits the knowledge of the guides, which coordinate to shape the workers like smart particles by changing their interaction parameters. We study the approach with a large-scale simulation experiment in a physics-based simulator with up to 1000 robots forming three different patterns. Our experiments reveal that the approach scales well with increasing robot numbers and presents little pattern distortion for a set of target moving shapes. We evaluate the approach on a physical swarm of robots that use visual-inertial odometry to compute their relative positions and obtain results that are comparable with simulation. This work lays the foundation for designing and coordinating configurable smart particles, with applications in smart materials and nanomedicine.
