Allan Pinto

Advancing Annotat3D with Harpia: A CUDA-Accelerated Library For Large-Scale Volumetric Data Segmentation

Nov 14, 2025

Abstract:High-resolution volumetric imaging techniques, such as X-ray tomography and advanced microscopy, generate increasingly large datasets that challenge existing tools for efficient processing, segmentation, and interactive exploration. This work introduces new capabilities to Annotat3D through Harpia, a new CUDA-based processing library designed to support scalable, interactive segmentation workflows for large 3D datasets in high-performance computing (HPC) and remote-access environments. Harpia features strict memory control, native chunked execution, and a suite of GPU-accelerated filtering, annotation, and quantification tools, enabling reliable operation on datasets exceeding single-GPU memory capacity. Experimental results demonstrate significant improvements in processing speed, memory efficiency, and scalability compared to widely used frameworks such as NVIDIA cuCIM and scikit-image. The system's interactive, human-in-the-loop interface, combined with efficient GPU resource management, makes it particularly suitable for collaborative scientific imaging workflows in shared HPC infrastructures.
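The chunked execution mentioned in the abstract can be illustrated with a minimal CPU sketch in NumPy. This is not Harpia's API or implementation: the function names `box_filter3d` and `filter_in_chunks`, the choice of a mean filter, and the slab-along-one-axis layout are all assumptions made for illustration. The idea shown is that a volume can be filtered slab by slab, with a small halo of neighboring slices, so that only one slab needs to be resident in memory at a time while the result matches whole-volume filtering.

```python
import numpy as np


def box_filter3d(vol, radius=1):
    """Mean filter over a (2*radius+1)^3 neighborhood with edge padding."""
    k = 2 * radius + 1
    padded = np.pad(vol.astype(np.float64), radius, mode="edge")
    nz, ny, nx = vol.shape
    out = np.zeros(vol.shape, dtype=np.float64)
    # Sum all shifted views of the padded volume, then normalize.
    for dz in range(k):
        for dy in range(k):
            for dx in range(k):
                out += padded[dz:dz + nz, dy:dy + ny, dx:dx + nx]
    return out / k**3


def filter_in_chunks(vol, chunk_depth, radius=1):
    """Hypothetical helper: filter slabs along axis 0, each with a halo."""
    nz = vol.shape[0]
    out = np.empty(vol.shape, dtype=np.float64)
    for z0 in range(0, nz, chunk_depth):
        z1 = min(z0 + chunk_depth, nz)
        # Extend the slab by `radius` slices on each side where available,
        # so slices at the slab border still see their true neighbors.
        lo = max(z0 - radius, 0)
        hi = min(z1 + radius, nz)
        slab = box_filter3d(vol[lo:hi], radius)
        # Keep only the slices that belong to this chunk; drop the halo.
        out[z0:z1] = slab[z0 - lo:z0 - lo + (z1 - z0)]
    return out
```

In a GPU setting, each slab would be the unit copied to device memory, so `chunk_depth` would be chosen from the available memory budget; that sizing policy is outside what the abstract specifies.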

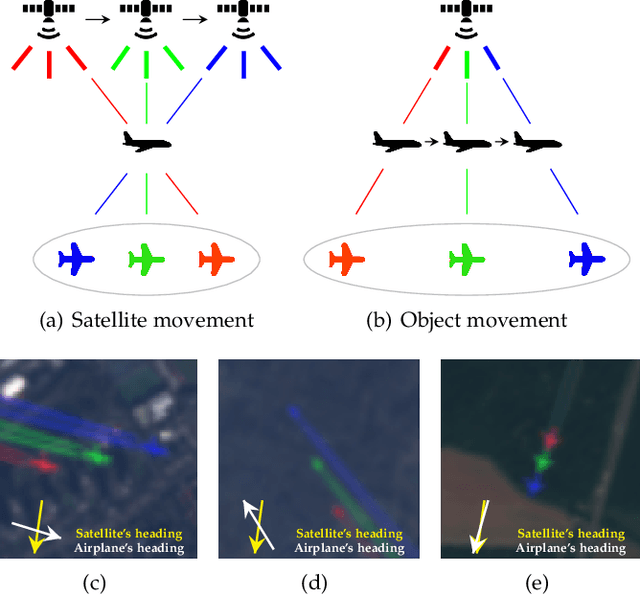

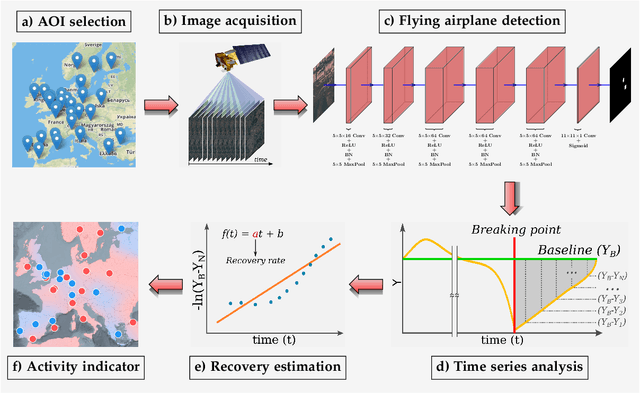

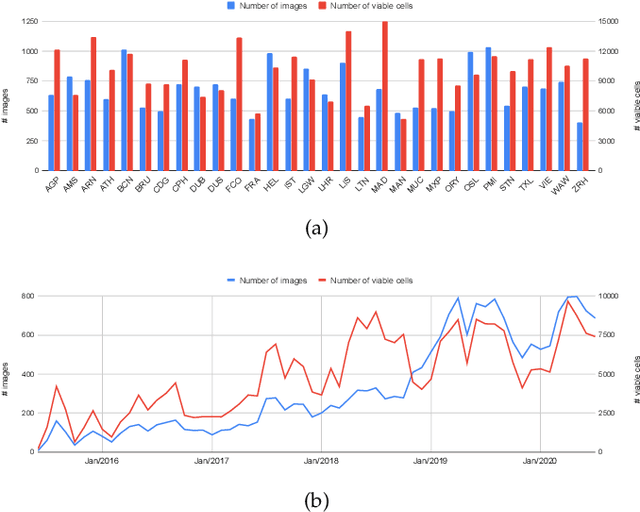

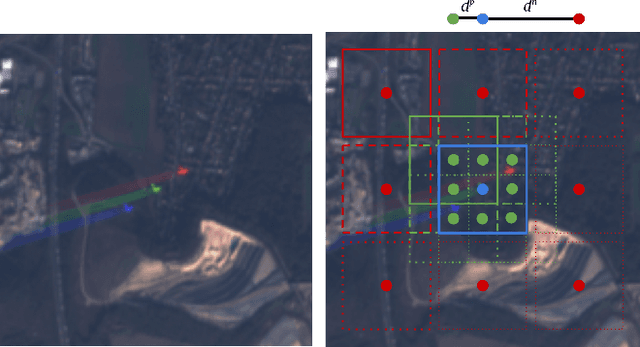

Measuring economic activity from space: a case study using flying airplanes and COVID-19

Apr 21, 2021

Abstract:This work introduces a novel solution to measure economic activity through remote sensing for a wide range of spatial areas. We hypothesized that disturbances in human behavior caused by major life-changing events leave signatures in satellite imagery that allow devising relevant image-based indicators to estimate their impacts and support decision-makers. We present a case study for the COVID-19 coronavirus outbreak, which imposed severe mobility restrictions and caused worldwide disruptions, using flying airplane detection around the 30 busiest airports in Europe to quantify and analyze the lockdown's effects and post-lockdown recovery. Our solution won the Rapid Action Coronavirus Earth observation (RACE) upscaling challenge, sponsored by the European Space Agency and the European Commission, and is now integrated into the RACE dashboard. This platform combines satellite data and artificial intelligence to promote a progressive and safe reopening of essential activities. Code and CNN models are available at https://github.com/maups/covid19-custom-script-contest

Parallax Motion Effect Generation Through Instance Segmentation And Depth Estimation

Oct 06, 2020

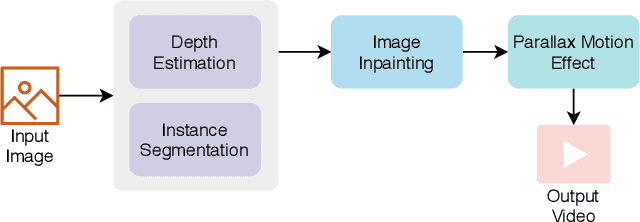

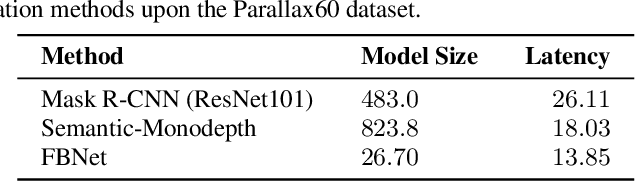

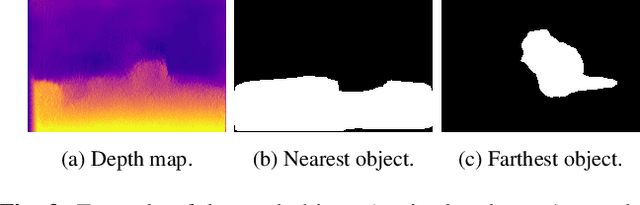

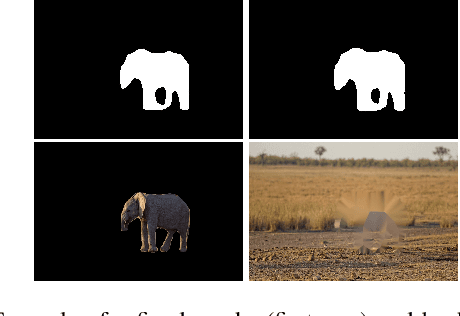

Abstract:Stereo vision is a growing topic in computer vision due to the innumerable opportunities and applications this technology offers for the development of modern solutions, such as virtual and augmented reality applications. Motion parallax estimation is a promising technique for enhancing the user's experience in three-dimensional virtual environments. In this paper, we propose an algorithm for generating parallax motion effects from a single image, taking advantage of state-of-the-art instance segmentation and depth estimation approaches. This work also presents a comparison among such algorithms to investigate the trade-off between efficiency and quality of the parallax motion effects, taking into consideration a multi-task learning network capable of performing instance segmentation and depth estimation at once. Experimental results and visual quality assessment indicate that the PyD-Net network (depth estimation) combined with Mask R-CNN or FBNet networks (instance segmentation) can produce parallax motion effects with good visual quality.

* 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates

FaceSpoof Buster: a Presentation Attack Detector Based on Intrinsic Image Properties and Deep Learning

Feb 07, 2019

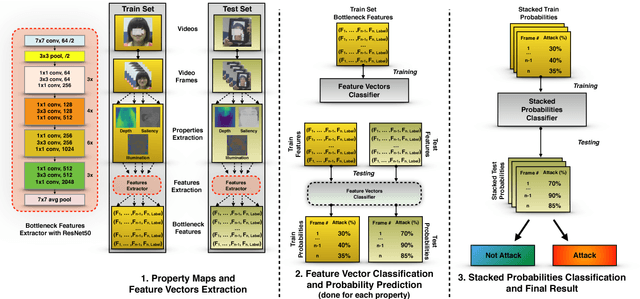

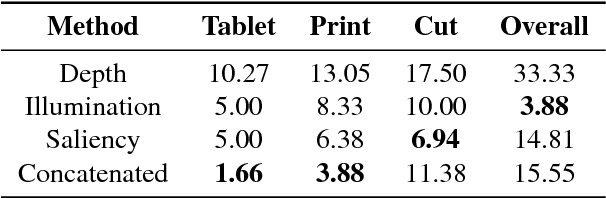

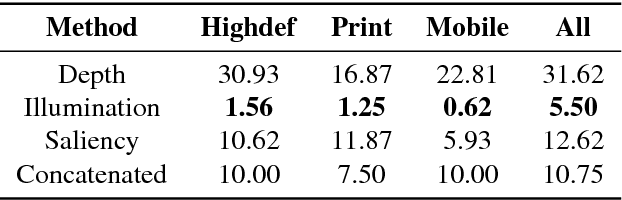

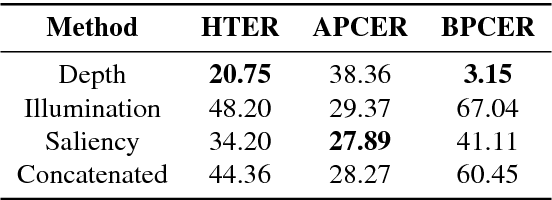

Abstract:Nowadays, the adoption of face recognition for biometric authentication systems is common, mainly because this is one of the most accessible biometric modalities. Techniques that attempt to bypass this kind of system by using a forged biometric sample, such as a printed paper or a recorded video of a genuine access, are known as presentation attacks, but may also be referred to in the literature as face spoofing. Presentation attack detection is a crucial step for preventing this kind of unauthorized access to restricted areas and/or devices. In this paper, we propose a novel approach that relies on a combination of intrinsic image properties and deep neural networks to detect presentation attack attempts. Our method explores depth, salience and illumination maps, associated with a pre-trained Convolutional Neural Network, in order to produce robust and discriminant features. Each of these properties is individually classified and, at the end of the process, they are combined by a meta-learning classifier, which achieves outstanding results on the most popular datasets for PAD. Results show that the proposed method is able to surpass state-of-the-art results in an inter-dataset protocol, which is regarded as the most challenging in the literature.

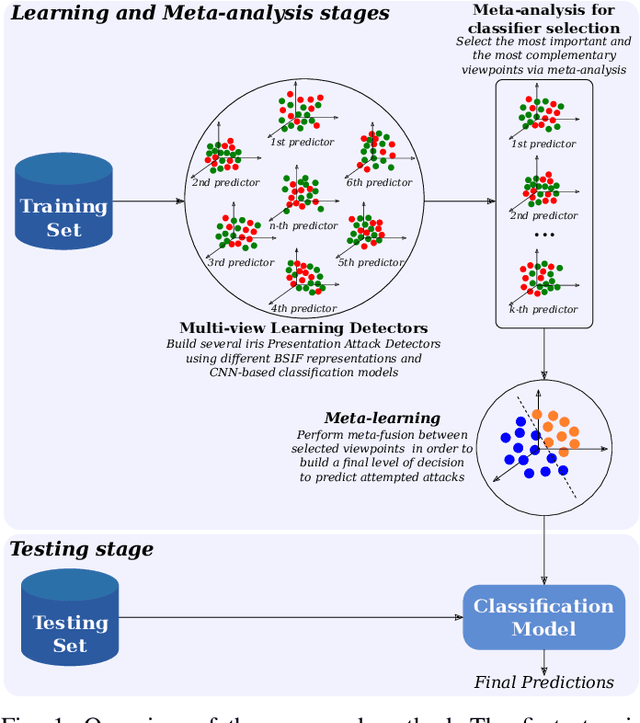

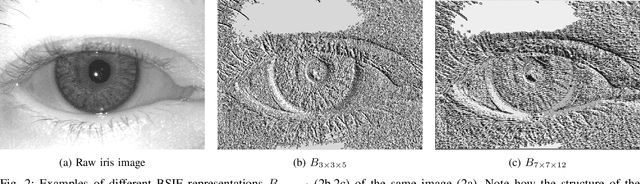

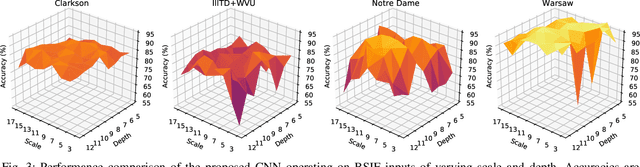

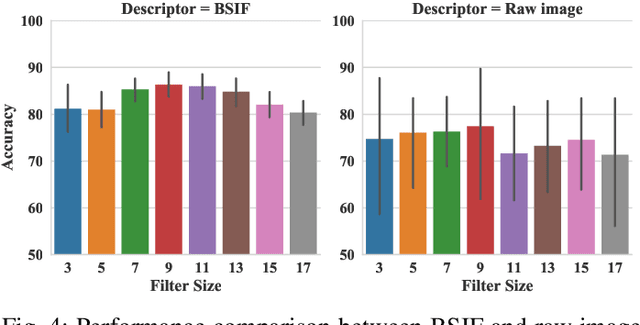

Ensemble of Multi-View Learning Classifiers for Cross-Domain Iris Presentation Attack Detection

Nov 25, 2018

Abstract:The adoption of large-scale iris recognition systems around the world has brought to light the importance of detecting presentation attack images (textured contact lenses and printouts). This work presents a new approach in iris Presentation Attack Detection (PAD), by exploring combinations of Convolutional Neural Networks (CNNs) and transformed input spaces through binarized statistical image features (BSIF). Our method combines lightweight CNNs to classify multiple BSIF views of the input image. Following explorations on complementary input spaces leading to more discriminative features to detect presentation attacks, we also propose an algorithm to select the best (and most discriminative) predictors for the task at hand. An ensemble of predictors makes use of their expected individual performances to aggregate their results into a final prediction. Results show that this technique improves on the current state of the art in iris PAD, outperforming the winner of the LivDet-Iris2017 competition both for intra- and cross-dataset scenarios, and illustrating the very difficult nature of the cross-dataset scenario.
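As a rough illustration of aggregating predictors by their expected individual performance, the sketch below keeps the best-scoring predictors on a validation set and fuses their outputs with performance-proportional weights. The abstract does not state the actual selection or fusion rule, so the function name `select_and_aggregate`, the top-k selection, and the accuracy-weighted average are assumptions for illustration only.

```python
import numpy as np


def select_and_aggregate(val_acc, scores, top_k):
    """Hypothetical fusion rule: keep the top_k predictors by validation
    accuracy and average their scores, weighted by that accuracy."""
    val_acc = np.asarray(val_acc, dtype=float)
    scores = np.asarray(scores, dtype=float)  # shape: (n_predictors, n_samples)
    # Indices of the top_k predictors, best first.
    keep = np.argsort(val_acc)[::-1][:top_k]
    # Normalize the selected accuracies into weights that sum to 1.
    weights = val_acc[keep] / val_acc[keep].sum()
    return keep, weights @ scores[keep]


# Three predictors (e.g. one per input view), two test samples.
keep, fused = select_and_aggregate(
    val_acc=[0.9, 0.6, 0.8],
    scores=[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]],
    top_k=2,
)
```

Here the weakest predictor (validation accuracy 0.6) is dropped, and the remaining two contribute in proportion to 0.9 and 0.8.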

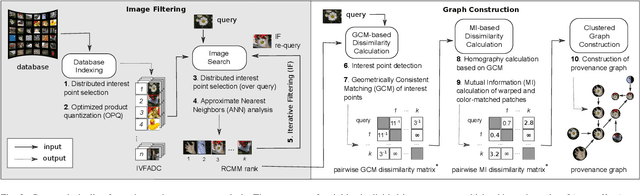

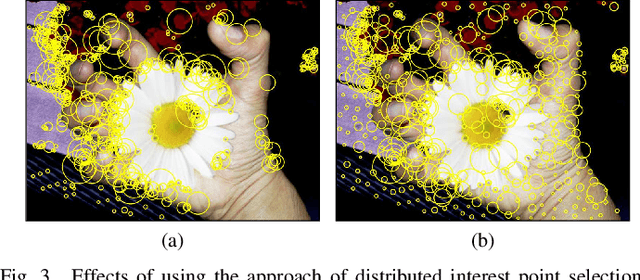

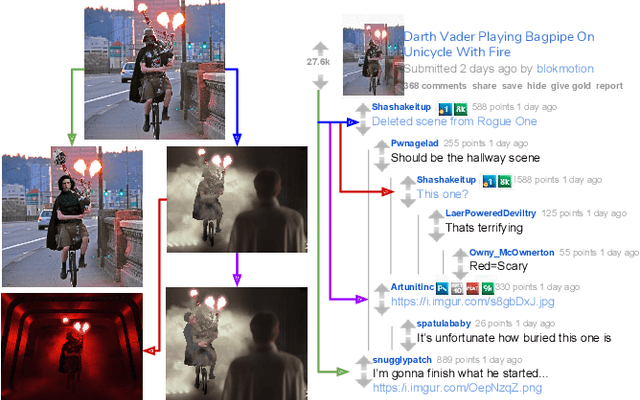

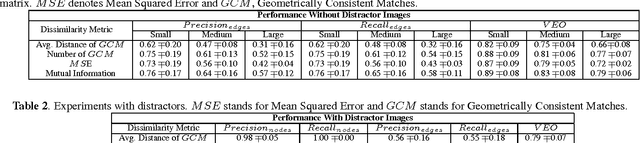

Image Provenance Analysis at Scale

Jan 23, 2018

Abstract:Prior art has shown it is possible to estimate, through image processing and computer vision techniques, the types and parameters of transformations that have been applied to the content of individual images to obtain new images. Given a large corpus of images and a query image, an interesting further step is to retrieve the set of original images whose content is present in the query image, as well as the detailed sequences of transformations that yield the query image given the original images. This is a problem that recently has received the name of image provenance analysis. In these times of public media manipulation (e.g., fake news and meme sharing), obtaining the history of image transformations is relevant for fact checking and authorship verification, among many other applications. This article presents an end-to-end processing pipeline for image provenance analysis, which works at real-world scale. It employs a cutting-edge image filtering solution that is custom-tailored for the problem at hand, as well as novel techniques for obtaining the provenance graph that expresses how the images, as nodes, are ancestrally connected. A comprehensive set of experiments for each stage of the pipeline is provided, comparing the proposed solution with state-of-the-art results, employing previously published datasets. In addition, this work introduces a new dataset of real-world provenance cases from the social media site Reddit, along with baseline results.

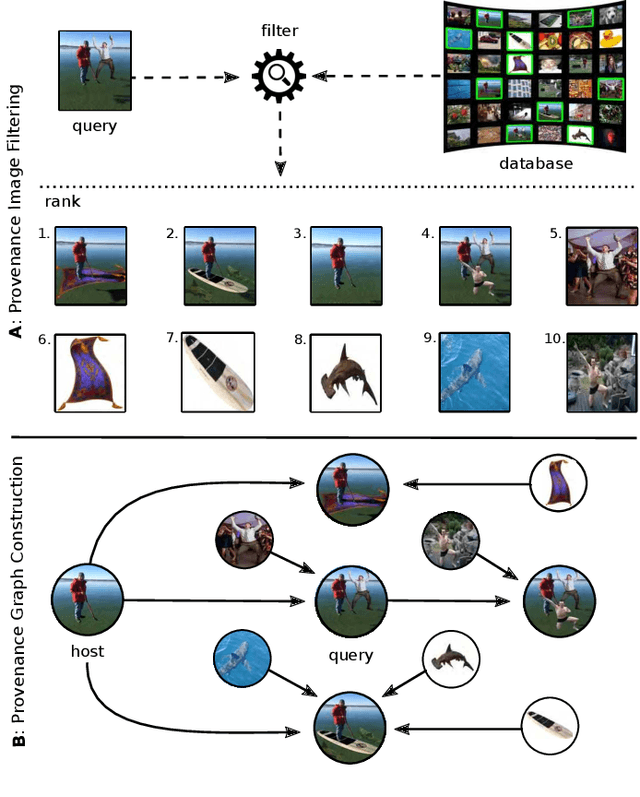

Provenance Filtering for Multimedia Phylogeny

Jun 01, 2017

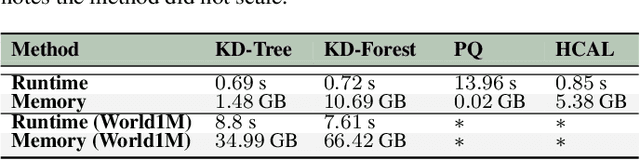

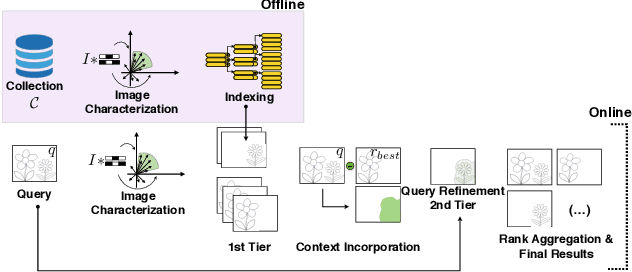

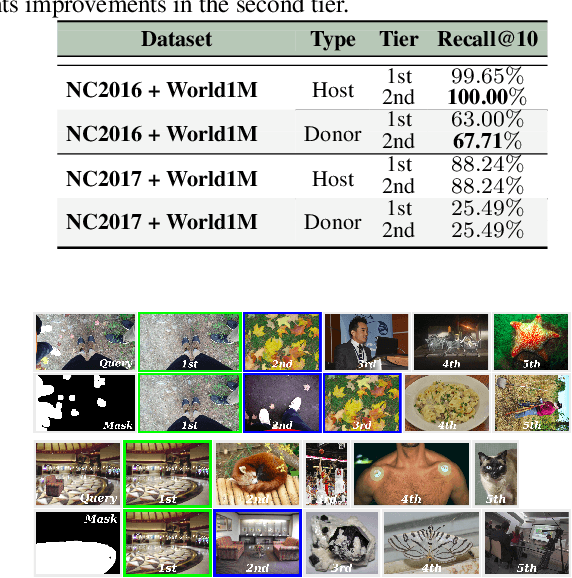

Abstract:Departing from traditional digital forensics modeling, which seeks to analyze single objects in isolation, multimedia phylogeny analyzes the evolutionary processes that influence digital objects and collections over time. One of its integral pieces is provenance filtering, which consists of searching a potentially large pool of objects for the most related ones with respect to a given query, in terms of possible ancestors (donors or contributors) and descendants. In this paper, we propose a two-tiered provenance filtering approach to find all the potential images that might have contributed to the creation process of a given query $q$. In our solution, the first (coarse) tier aims to find the most likely "host" images --- the major donor or background --- contributing to a composite/doctored image. The search is then refined in the second tier, in which we search for more specific (potentially small) parts of the query that might have been extracted from other images and spliced into the query image. Experimental results with a dataset containing more than a million images show that the two-tiered solution underpinned by the context of the query is highly useful for solving this difficult task.

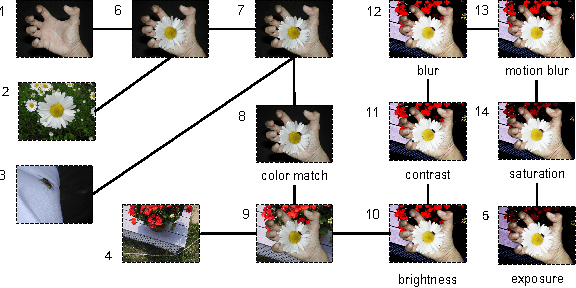

U-Phylogeny: Undirected Provenance Graph Construction in the Wild

May 31, 2017

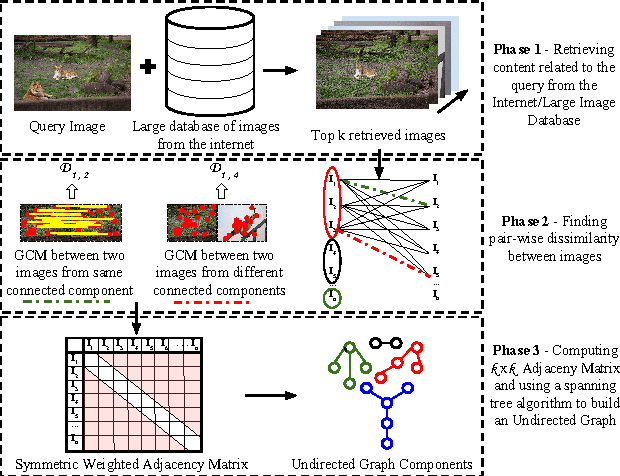

Abstract:Deriving relationships between images and tracing back their history of modifications are at the core of Multimedia Phylogeny solutions, which aim to combat misinformation through doctored visual media. Nonetheless, most recent image phylogeny solutions cannot properly address cases of forged composite images with multiple donors, an area known as multiple parenting phylogeny (MPP). This paper presents a preliminary undirected graph construction solution for MPP, without any strict assumptions. The algorithm is underpinned by robust image representative keypoints and different geometric consistency checks among matching regions in both images to provide regions of interest for direct comparison. The paper introduces a novel technique to geometrically filter the most promising matches as well as to aid in the shared region localization task. The strength of the approach is corroborated by experiments with real-world cases, with and without image distractors (unrelated cases).

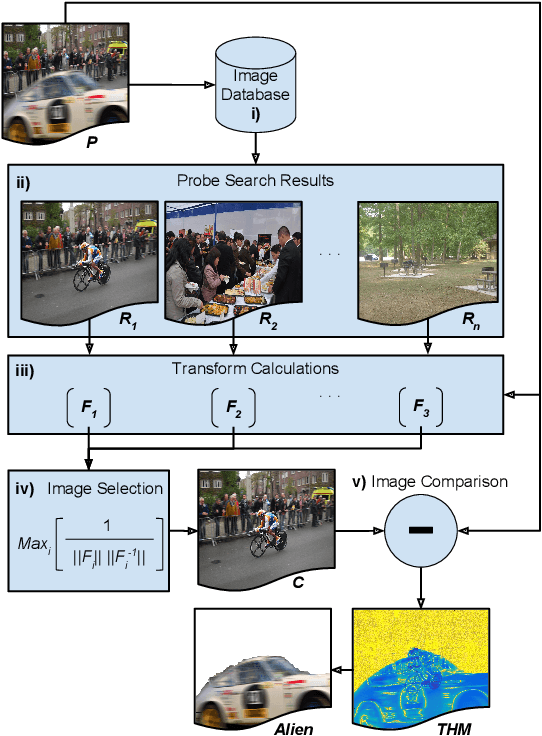

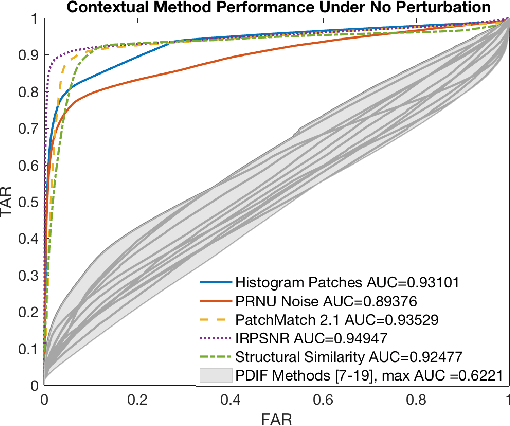

Spotting the Difference: Context Retrieval and Analysis for Improved Forgery Detection and Localization

May 01, 2017

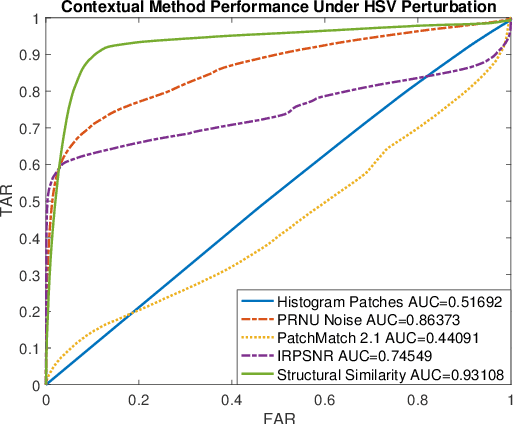

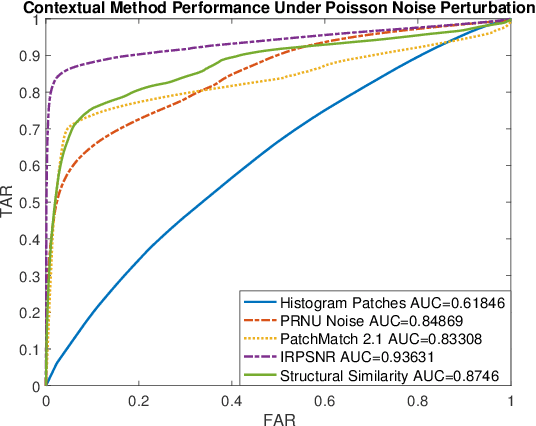

Abstract:As image tampering becomes ever more sophisticated and commonplace, the need for image forensics algorithms that can accurately and quickly detect forgeries grows. In this paper, we revisit the ideas of image querying and retrieval to provide clues to better localize forgeries. We propose a method to perform large-scale image forensics on the order of one million images using the help of an image search algorithm and database to gather contextual clues as to where tampering may have taken place. In this vein, we introduce five new strongly invariant image comparison methods and test their effectiveness under heavy noise, rotation, and color space changes. Lastly, we show the effectiveness of these methods compared to passive image forensics using Nimble [https://www.nist.gov/itl/iad/mig/nimble-challenge], a new, state-of-the-art dataset from the National Institute of Standards and Technology (NIST).

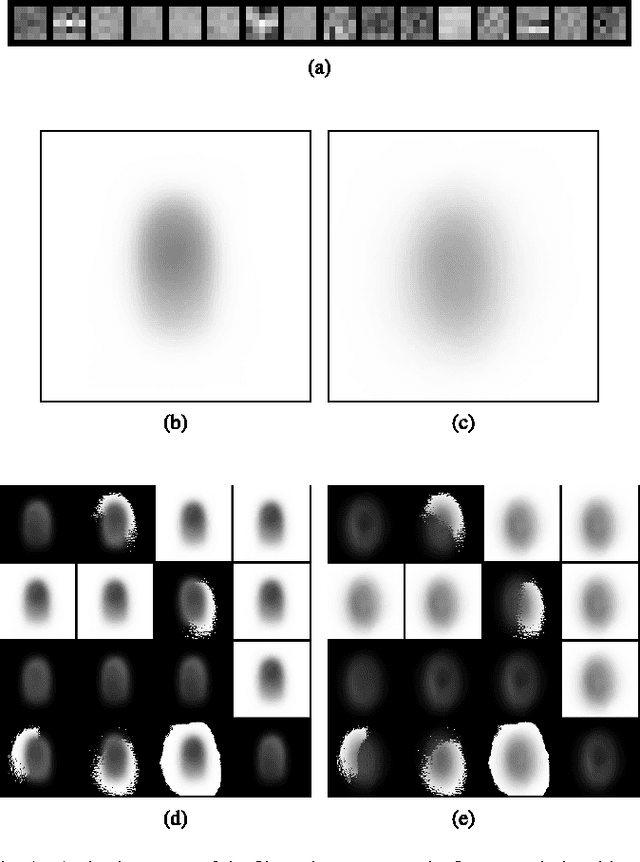

Deep Representations for Iris, Face, and Fingerprint Spoofing Detection

Jan 29, 2015

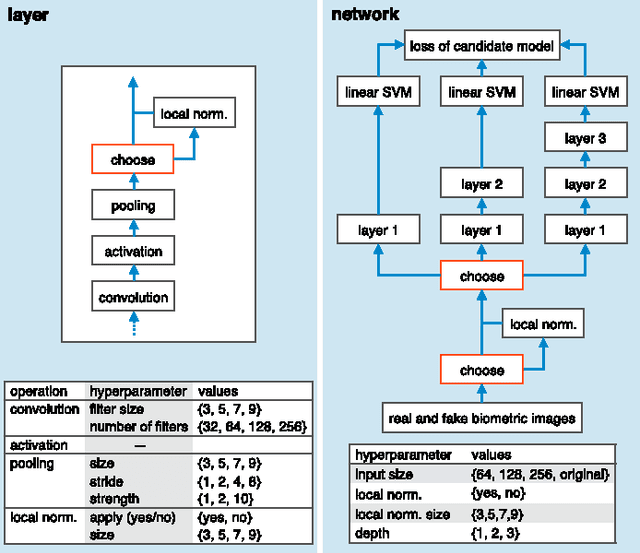

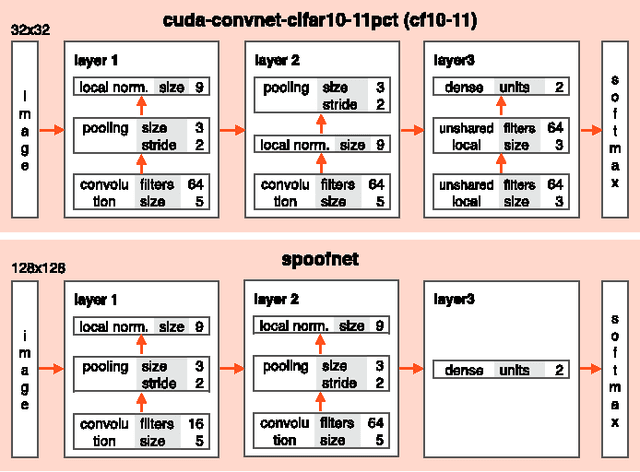

Abstract:Biometrics systems have significantly improved person identification and authentication, playing an important role in personal, national, and global security. However, these systems might be deceived (or "spoofed") and, despite the recent advances in spoofing detection, current solutions often rely on domain knowledge, specific biometric reading systems, and attack types. We assume a very limited knowledge about biometric spoofing at the sensor to derive outstanding spoofing detection systems for iris, face, and fingerprint modalities based on two deep learning approaches. The first approach consists of learning suitable convolutional network architectures for each domain, while the second approach focuses on learning the weights of the network via back-propagation. We consider nine biometric spoofing benchmarks --- each one containing real and fake samples of a given biometric modality and attack type --- and learn deep representations for each benchmark by combining and contrasting the two learning approaches. This strategy not only provides better comprehension of how these approaches interplay, but also creates systems that exceed the best known results in eight out of the nine benchmarks. The results strongly indicate that spoofing detection systems based on convolutional networks can be robust to attacks already known and possibly adapted, with little effort, to image-based attacks that are yet to come.
