Mauricio Pamplona Segundo

Reducing Training Demands for 3D Gait Recognition with Deep Koopman Operator Constraints

Aug 14, 2023

Abstract:Deep learning research has made many biometric recognition solutions viable, but it requires vast training data to achieve real-world generalization. Unlike other biometric traits, such as face and ear, gait samples cannot be easily crawled from the web to form massive unconstrained datasets. As the human body has been extensively studied for different digital applications, one can rely on prior shape knowledge to overcome data scarcity. This work follows the recent trend of fitting a 3D deformable body model into gait videos using deep neural networks to obtain disentangled shape and pose representations for each frame. To enforce temporal consistency in the network, we introduce a new Linear Dynamical Systems (LDS) module and loss based on Koopman operator theory, which provides an unsupervised motion regularization for the periodic nature of gait, as well as a predictive capacity for extending gait sequences. We compare LDS to the traditional adversarial training approach and use the USF HumanID and CASIA-B datasets to show that LDS can obtain better accuracy with less training data. Finally, we also show that our 3D modeling approach is much better than other 3D gait approaches in overcoming viewpoint variation under normal, bag-carrying and clothing change conditions.
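The idea of a linear dynamical constraint on per-frame representations can be illustrated with a small NumPy sketch. This is not the paper's LDS module: it assumes a toy 2D periodic signal in place of learned pose features, fits a single linear operator `K` by least squares, and uses the one-step prediction error as a stand-in for a linearity loss. The function names (`fit_linear_operator`, `linearity_loss`, `extend_sequence`) are hypothetical.

```python
import numpy as np

def fit_linear_operator(Z):
    """Least-squares fit of K such that Z[t+1] ~= Z[t] @ K for a (T, d) sequence."""
    X, Y = Z[:-1], Z[1:]
    K, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return K

def linearity_loss(Z, K):
    """Mean squared one-step prediction error under the linear model."""
    return float(np.mean((Z[:-1] @ K - Z[1:]) ** 2))

def extend_sequence(Z, K, steps):
    """Roll the linear model forward to append `steps` predicted frames."""
    frames = [Z[-1]]
    for _ in range(steps):
        frames.append(frames[-1] @ K)
    return np.vstack([Z] + frames[1:])

# Toy periodic "pose" signal (assumption): a point on a circle, period 20 frames
t = np.arange(40)
omega = 2 * np.pi / 20
Z = np.stack([np.cos(omega * t), np.sin(omega * t)], axis=1)

K = fit_linear_operator(Z)
loss = linearity_loss(Z, K)
Z_ext = extend_sequence(Z, K, steps=10)

print("one-step loss:", loss)
print("eigenvalue magnitudes of K:", np.abs(np.linalg.eigvals(K)))
```

For a purely periodic signal the fitted operator has eigenvalues on the unit circle, which is why a linear model of this kind can both regularize a sequence toward periodic motion and extrapolate it beyond the observed frames.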

Shape-Graph Matching Network (SGM-net): Registration for Statistical Shape Analysis

Aug 14, 2023

Abstract:This paper focuses on the statistical analysis of shapes of data objects called shape graphs, a set of nodes connected by articulated curves with arbitrary shapes. A critical need here is a constrained registration of points (nodes to nodes, edges to edges) across objects. This, in turn, requires optimization over the permutation group, made challenging by differences in nodes (in terms of numbers, locations) and edges (in terms of shapes, placements, and sizes) across objects. This paper tackles this registration problem using a novel neural-network architecture and involves an unsupervised loss function developed using the elastic shape metric for curves. This architecture results in (1) state-of-the-art matching performance and (2) an order of magnitude reduction in the computational cost relative to baseline approaches. We demonstrate the effectiveness of the proposed approach using both simulated data and real-world 2D and 3D shape graphs. Code and data will be made publicly available after review to foster research.

Fingerprint Pore Detection: A Survey

Nov 27, 2022

Abstract:This work presents the first survey on fingerprint pore detection. The survey provides a general overview of the field and discusses methods, datasets, and evaluation protocols. We also present a baseline method inspired by the state-of-the-art that implements a customizable Fully Convolutional Network, whose hyperparameters were tuned to achieve optimal pore detection rates. Finally, we also reimplemented three other approaches proposed in the literature for evaluation purposes. We have made the source code of (1) the baseline method, (2) the reimplemented approaches, and (3) the training and evaluation processes for two different datasets available to the public to attract more researchers to the field and to facilitate future comparisons under the same conditions. The code is available in the following repository: https://github.com/azimIbragimov/Fingerprint-Pore-Detection-A-Survey

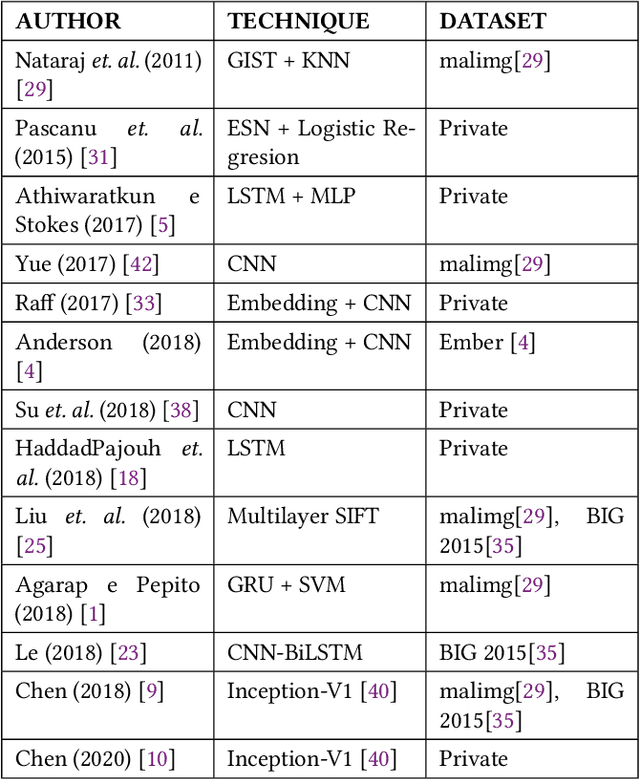

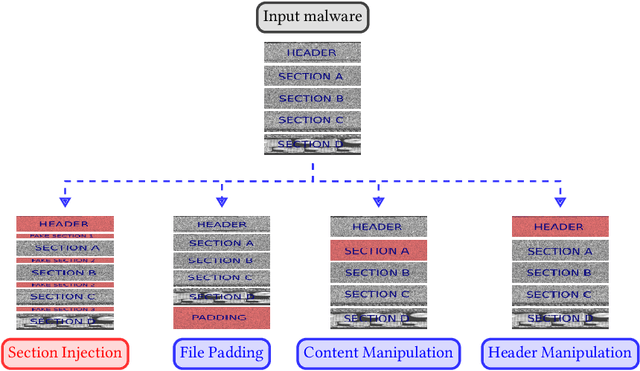

On deceiving malware classification with section injection

Aug 12, 2022

Abstract:We investigate how to modify executable files to deceive malware classification systems. This work's main contribution is a methodology to inject bytes across a malware file randomly and use it both as an attack to decrease classification accuracy and as a defensive method, augmenting the data available for training. It respects the operating system file format to make sure the malware will still execute after our injection and will not change its behavior. We reproduced five state-of-the-art malware classification approaches to evaluate our injection scheme: one based on GIST+KNN, three CNN variations and one Gated CNN. We performed our experiments on a public dataset with 9,339 malware samples from 25 different families. Our results show that a mere increase of 7% in the malware size causes an accuracy drop between 25% and 40% for malware family classification. They show that an automatic malware classification system may not be as trustworthy as initially reported in the literature. We also evaluate using modified malware samples alongside the original ones to increase the networks' robustness against the mentioned attacks. Results show that a combination of reordering malware sections and injecting random data can improve overall performance of the classification. Code available at https://github.com/adeilsonsilva/malware-injection.

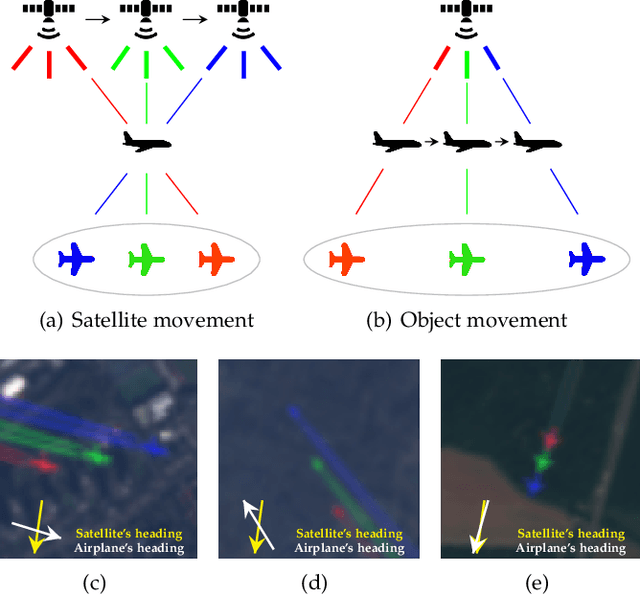

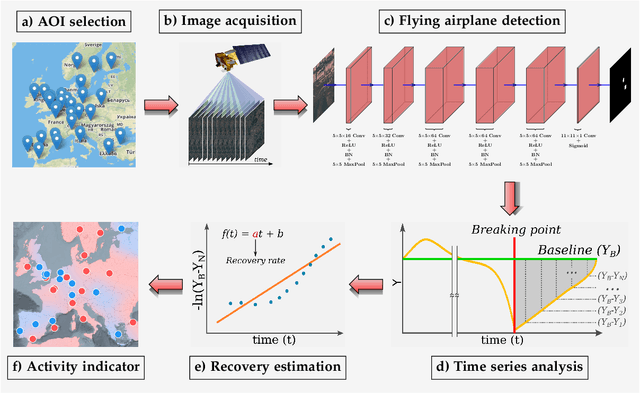

Measuring economic activity from space: a case study using flying airplanes and COVID-19

Apr 21, 2021

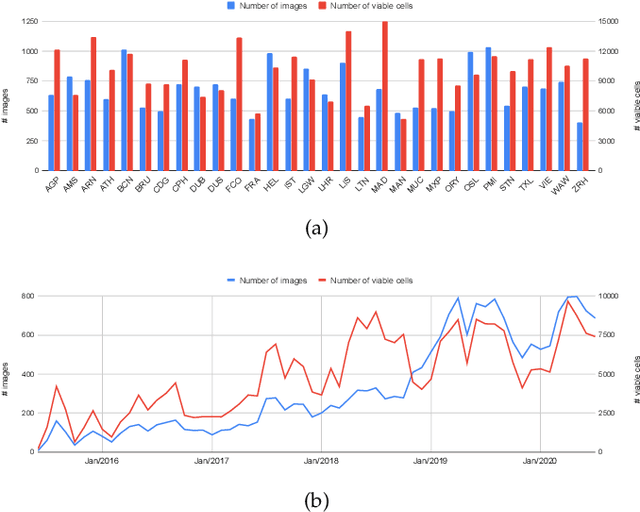

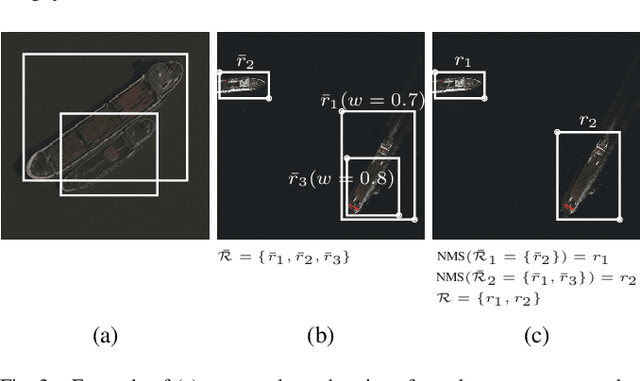

Abstract:This work introduces a novel solution to measure economic activity through remote sensing for a wide range of spatial areas. We hypothesized that disturbances in human behavior caused by major life-changing events leave signatures in satellite imagery that allow devising relevant image-based indicators to estimate their impacts and support decision-makers. We present a case study for the COVID-19 coronavirus outbreak, which imposed severe mobility restrictions and caused worldwide disruptions, using flying airplane detection around the 30 busiest airports in Europe to quantify and analyze the lockdown's effects and post-lockdown recovery. Our solution won the Rapid Action Coronavirus Earth observation (RACE) upscaling challenge, sponsored by the European Space Agency and the European Commission, and is now part of the RACE dashboard. This platform combines satellite data and artificial intelligence to promote a progressive and safe reopening of essential activities. Code and CNN models are available at https://github.com/maups/covid19-custom-script-contest

Measuring Human and Economic Activity from Satellite Imagery to Support City-Scale Decision-Making during COVID-19 Pandemic

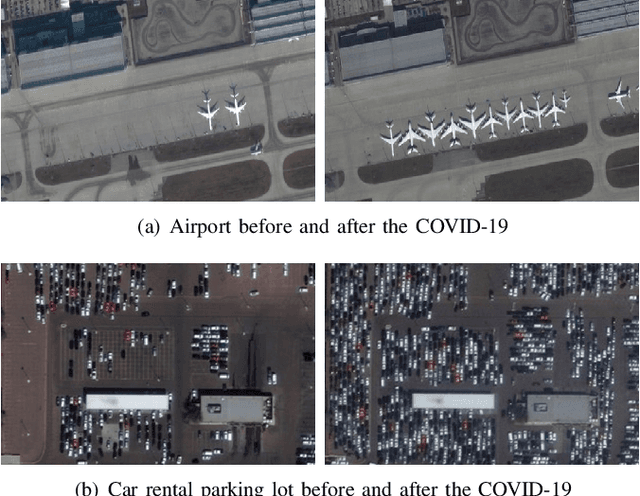

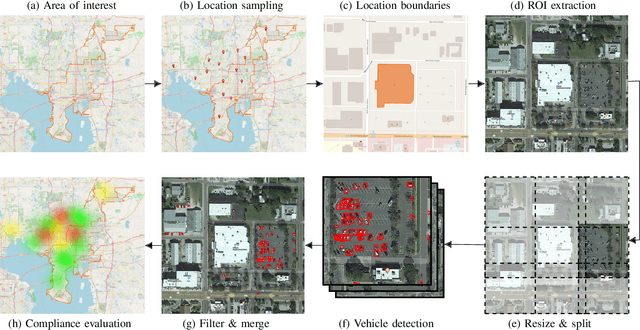

Apr 16, 2020

Abstract:The COVID-19 outbreak forced governments worldwide to impose lockdowns and quarantines over their population to prevent virus transmission. As a consequence, there are disruptions in human and economic activities all over the globe. The recovery process is also expected to be rough. Economic activities impact social behaviors, which leave signatures in satellite images that can be automatically detected and classified. Satellite imagery can support the decision-making of analysts and policymakers by providing a different kind of visibility into the unfolding economic changes. Such information can be useful both during the crisis and as we recover from it. In this work, we use a deep learning approach that combines strategic location sampling and an ensemble of lightweight convolutional neural networks (CNNs) to recognize specific elements in satellite images and automatically compute economic indicators based on them. This CNN ensemble framework ranked third in the US Department of Defense xView challenge, the most advanced benchmark for object detection in satellite images. We show the potential of our framework for temporal analysis using the US IARPA Function Map of the World (fMoW) dataset. We also show results on real examples of different sites before and after the COVID-19 outbreak to demonstrate possibilities. As future work, a satellite image dataset that samples a region at a weekly (or biweekly) frequency could allow us to generate more informative temporal signatures that can predict future economic states. Our code is being made available at https://github.com/maups/covid19-satellite-analysis

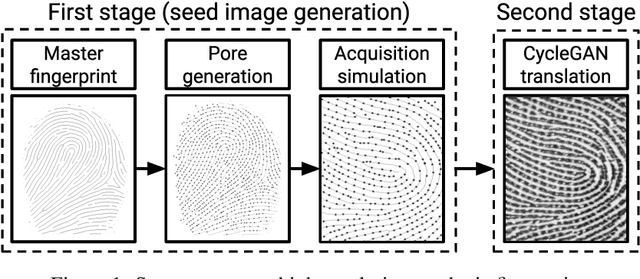

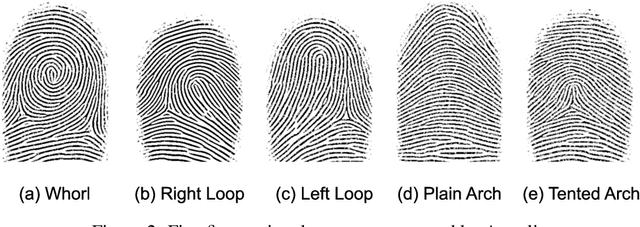

Level Three Synthetic Fingerprint Generation

Feb 13, 2020

Abstract:Today's legal restrictions that protect the privacy of biometric data are hampering fingerprint recognition research. For instance, all public databases of high-resolution fingerprints ceased to be publicly available. To address this problem, we present an approach to creating high-resolution synthetic fingerprints. We modified a state-of-the-art fingerprint generator to create ridge maps with sweat pores and trained a CycleGAN to transform these maps into realistic prints. We also create a synthetic database of high-resolution fingerprints using the proposed approach to propel further studies in this field without raising any legal issues. We test this database with two existing fingerprint matchers without adjustments to confirm the realism of the generated images. In addition, we provide a visual analysis that highlights the quality of our results compared to the state-of-the-art.

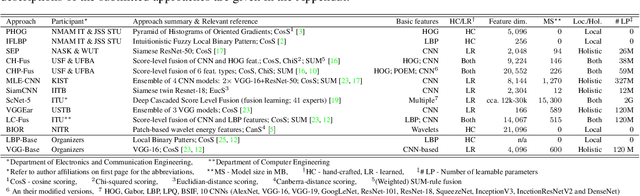

The Unconstrained Ear Recognition Challenge 2019 - ArXiv Version With Appendix

Mar 14, 2019

Abstract:This paper presents a summary of the 2019 Unconstrained Ear Recognition Challenge (UERC), the second in a series of group benchmarking efforts centered around the problem of person recognition from ear images captured in uncontrolled settings. The goal of the challenge is to assess the performance of existing ear recognition techniques on a challenging large-scale ear dataset and to analyze performance of the technology from various viewpoints, such as generalization abilities to unseen data characteristics, sensitivity to rotations, occlusions and image resolution and performance bias on sub-groups of subjects, selected based on demographic criteria, i.e. gender and ethnicity. Research groups from 12 institutions entered the competition and submitted a total of 13 recognition approaches ranging from descriptor-based methods to deep-learning models. The majority of submissions focused on ensemble-based methods combining either representations from multiple deep models or hand-crafted with learned image descriptors. Our analysis shows that methods incorporating deep learning models clearly outperform techniques relying solely on hand-crafted descriptors, even though both groups of techniques exhibit similar behaviour when it comes to robustness to various covariates, such as the presence of occlusions, changes in (head) pose, or variability in image resolution. The results of the challenge also show that there has been considerable progress since the first UERC in 2017, but that there is still ample room for further research in this area.

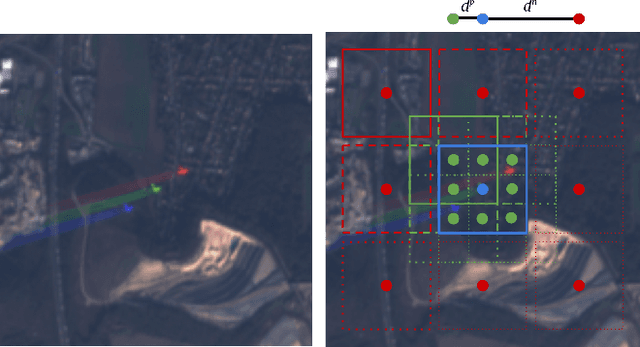

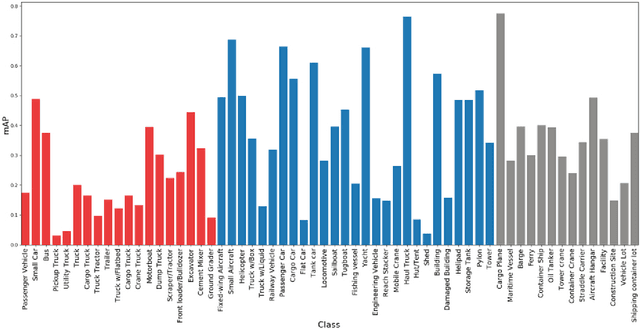

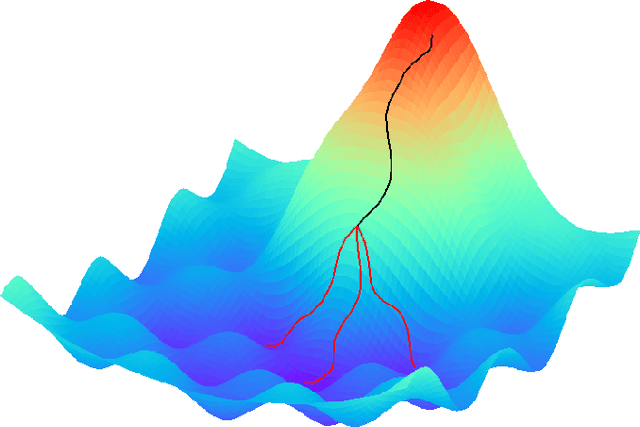

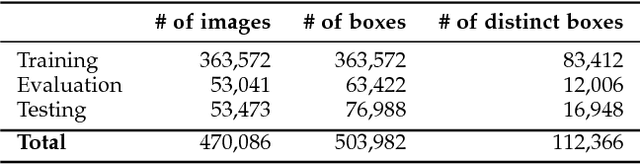

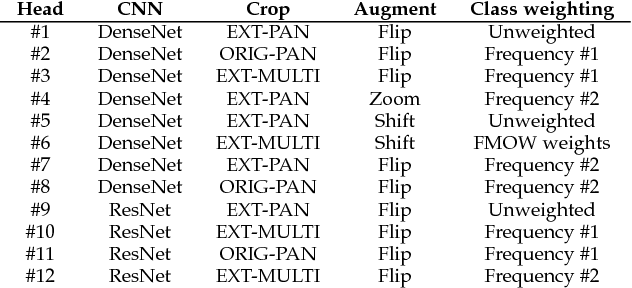

Hydra: an Ensemble of Convolutional Neural Networks for Geospatial Land Classification

Feb 10, 2018

Abstract:We describe in this paper Hydra, an ensemble of convolutional neural networks (CNN) for geospatial land classification. The idea behind Hydra is to create an initial CNN that is coarsely optimized but provides a good starting point for further optimization, which will serve as the Hydra's body. Then, the obtained weights are fine-tuned multiple times to form an ensemble of CNNs that represent the Hydra's heads. By doing so, we were able to reduce the training time while maintaining the classification performance of the ensemble. We created ensembles using two state-of-the-art CNN architectures, ResNet and DenseNet, to participate in the Functional Map of the World challenge. With this approach, we finished the competition in third place. We also applied the proposed framework to the NWPU-RESISC45 database and achieved the best reported performance so far. Code and CNN models are available at https://github.com/maups/hydra-fmow
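The body-and-heads scheme described above can be sketched on a toy problem. The following is only an illustration under stated assumptions, not the released Hydra code: a logistic-regression model stands in for the CNN, synthetic 2D points stand in for image features, and the heads differ only in their mini-batch ordering (the abstract does not say how the heads are diversified). All names and hyperparameters are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train(X, y, w, epochs, lr, rng, batch=32):
    """Mini-batch SGD on the logistic loss, starting from weights w."""
    w = w.copy()
    n = len(X)
    for _ in range(epochs):
        order = rng.permutation(n)
        for start in range(0, n, batch):
            idx = order[start:start + batch]
            grad = X[idx].T @ (sigmoid(X[idx] @ w) - y[idx]) / len(idx)
            w -= lr * grad
    return w

rng = np.random.default_rng(0)
# Toy two-class data standing in for image features (assumption)
X = np.vstack([rng.normal(-1, 1, (200, 2)), rng.normal(1, 1, (200, 2))])
X = np.hstack([X, np.ones((400, 1))])  # bias column
y = np.concatenate([np.zeros(200), np.ones(200)])

# Body: one coarse training run whose weights are shared by every head
body = train(X, y, np.zeros(3), epochs=2, lr=0.1, rng=rng)

# Heads: fine-tune copies of the body, each with its own data ordering
heads = [
    train(X, y, body, epochs=3, lr=0.05, rng=np.random.default_rng(seed))
    for seed in range(1, 6)
]

# Ensemble prediction: average the heads' class probabilities
probs = np.mean([sigmoid(X @ w) for w in heads], axis=0)
accuracy = float(np.mean((probs > 0.5) == y))
print("ensemble training accuracy:", accuracy)
```

The training-time saving comes from paying for the coarse optimization once: each head only runs the shorter fine-tuning stage instead of training from scratch.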

Employing Fusion of Learned and Handcrafted Features for Unconstrained Ear Recognition

Oct 20, 2017

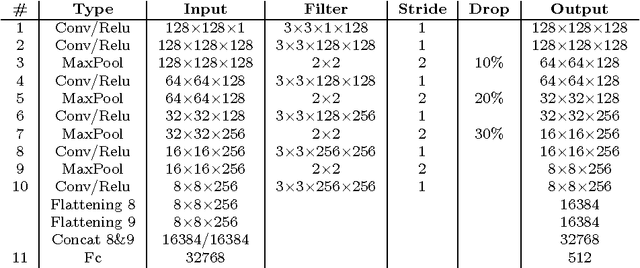

Abstract:We present an unconstrained ear recognition framework that outperforms state-of-the-art systems in different publicly available image databases. To this end, we developed CNN-based solutions for ear normalization and description, we used well-known handcrafted descriptors, and we fused learned and handcrafted features to improve recognition. We designed a two-stage landmark detector that successfully worked under untrained scenarios. We used the results generated to perform a geometric image normalization that boosted the performance of all evaluated descriptors. Our CNN descriptor outperformed other CNN-based works in the literature, especially in more difficult scenarios. The fusion of learned and handcrafted matchers appears to be complementary as it achieved the best performance in all experiments. The obtained results outperformed all other reported results for the UERC challenge, which contains the most difficult database to date.
