Gilbert Rotich

Measuring Human and Economic Activity from Satellite Imagery to Support City-Scale Decision-Making during COVID-19 Pandemic

Apr 16, 2020

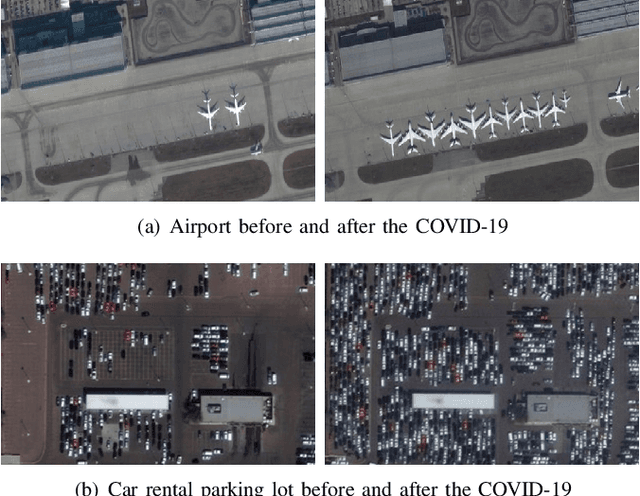

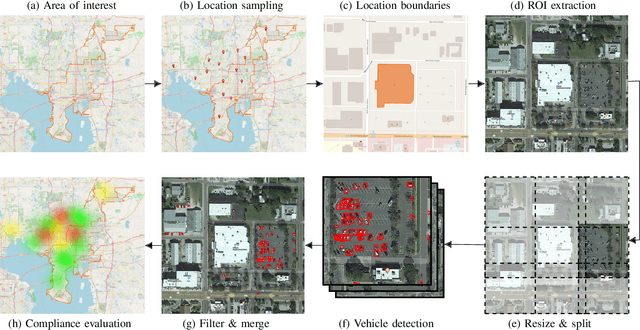

Abstract: The COVID-19 outbreak forced governments worldwide to impose lockdowns and quarantines on their populations to prevent virus transmission. As a consequence, human and economic activities have been disrupted all over the globe, and the recovery process is also expected to be rough. Economic activities impact social behaviors, which leave signatures in satellite images that can be automatically detected and classified. Satellite imagery can support the decision-making of analysts and policymakers by providing a different kind of visibility into the unfolding economic changes. Such information can be useful both during the crisis and during the recovery from it. In this work, we use a deep learning approach that combines strategic location sampling and an ensemble of lightweight convolutional neural networks (CNNs) to recognize specific elements in satellite images and automatically compute economic indicators based on them. This CNN ensemble framework ranked third in the US Department of Defense xView challenge, the most advanced benchmark for object detection in satellite images. We show the potential of our framework for temporal analysis using the US IARPA Functional Map of the World (fMoW) dataset. We also show results on real examples of different sites before and after the COVID-19 outbreak to demonstrate possibilities. As future work, a satellite image dataset that samples a region at a weekly (or biweekly) frequency could yield more informative temporal signatures that can predict future economic states. Our code is being made available at https://github.com/maups/covid19-satellite-analysis
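
The abstract does not say how an economic indicator is derived from the detections. As a minimal sketch, assuming the indicator is simply the number of objects of one class (for example, parked cars) detected at a site on each acquisition date, the change relative to a pre-outbreak baseline could be computed as below. The function name `relative_change` and the counts in the usage note are hypothetical illustrations, not the authors' method or data.

```python
from datetime import date
from typing import Dict


def relative_change(counts: Dict[date, int], baseline: date) -> Dict[date, float]:
    """Return the fractional change of each date's count versus the baseline date.

    `counts` maps an image acquisition date to the number of detected objects
    (an assumed, simplified indicator). A value of -0.75 means a 75% drop.
    """
    base = counts[baseline]
    if base == 0:
        raise ValueError("baseline count must be non-zero")
    # Sort by date so the result reads as a time series.
    return {day: (n - base) / base for day, n in sorted(counts.items())}
```

For instance, with hypothetical counts of 200 cars on the baseline date and 50 on a later date, the later date maps to -0.75. A weekly or biweekly image series would simply add more entries to `counts`.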

Resource-Constrained Simultaneous Detection and Labeling of Objects in High-Resolution Satellite Images

Oct 23, 2018

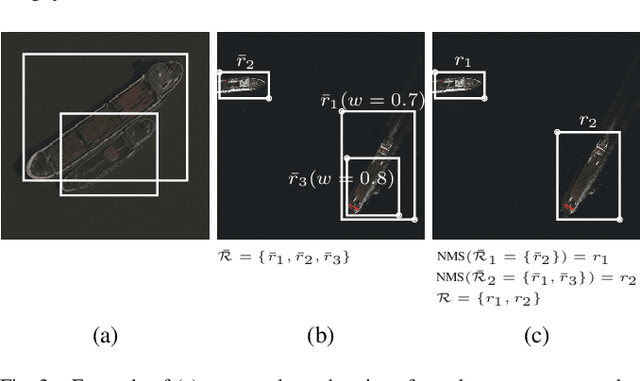

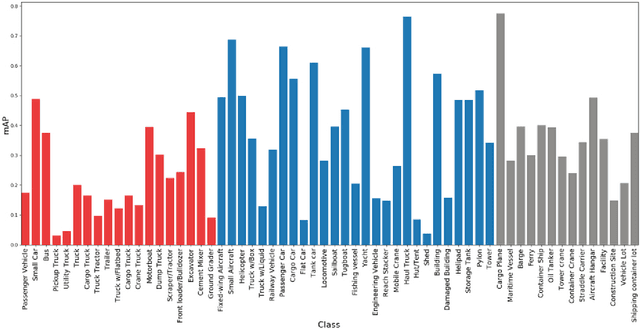

Abstract: We describe a strategy for detection and classification of man-made objects in large high-resolution satellite photos under computational resource constraints. We detect and classify candidate objects using five convolutional neural network (CNN) processing pipelines, run in parallel. Each pipeline has its own strategy for fine-tuning parameters, filtering proposal regions, and dealing with image scales. Conflicting region proposals are merged based on region confidence, not just on overlap area, which improves the quality of the final bounding-box regions selected. We demonstrate this strategy on the recent xView challenge, a complex benchmark with more than 1,100 high-resolution images containing 800,000 aerial objects from around the world and covering a total area of 1,400 square kilometers at 0.3 meter ground sample distance. To tackle the resource-constrained problem posed by the xView challenge, where inference is restricted to a CPU with an 8GB memory limit, we used lightweight CNNs trained with the single shot detector algorithm. Our approach was competitive on sequestered sets; it was ranked third.
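
The abstract states that conflicting proposals are merged using region confidence rather than overlap alone, but it does not give the exact rule. The sketch below shows one possible confidence-aware merge, assuming same-label boxes from different pipelines are grouped by intersection-over-union (IoU) with the most confident box in the group, and that each group is fused into a confidence-weighted average box. The names `iou` and `merge_detections` and the `iou_thr` default are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Detection = Tuple[Box, float, str]       # (box, confidence, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def merge_detections(dets: List[Detection], iou_thr: float = 0.5) -> List[Detection]:
    """Fuse overlapping same-label detections, weighting boxes by confidence."""
    clusters: List[List[Detection]] = []
    # Visit detections from most to least confident, so each cluster's first
    # element is its most confident member.
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        for cluster in clusters:
            lead_box, _, lead_label = cluster[0]
            if det[2] == lead_label and iou(det[0], lead_box) >= iou_thr:
                cluster.append(det)
                break
        else:
            clusters.append([det])

    merged: List[Detection] = []
    for cluster in clusters:
        lead_box, lead_conf, lead_label = cluster[0]
        total = sum(conf for _, conf, _ in cluster)
        if total > 0:
            # Confidence-weighted average of each coordinate.
            box = tuple(
                sum(b[i] * conf for b, conf, _ in cluster) / total for i in range(4)
            )
        else:
            box = lead_box  # all confidences are zero; keep the lead box
        merged.append((box, lead_conf, lead_label))
    return merged
```

Unlike plain non-maximum suppression, which discards every overlapping box except the top-scoring one, this variant lets lower-confidence proposals nudge the final box in proportion to their scores. The output keeps the highest confidence in each group as the merged score, which is a design choice of this sketch.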
