Alishba Imran

Hanson Robotics

How GPT learns layer by layer

Jan 13, 2025

Abstract:Large Language Models (LLMs) excel at tasks like language processing, strategy games, and reasoning but struggle to build generalizable internal representations essential for adaptive decision-making in agents. For agents to effectively navigate complex environments, they must construct reliable world models. While LLMs perform well on specific benchmarks, they often fail to generalize, leading to brittle representations that limit their real-world effectiveness. Understanding how LLMs build internal world models is key to developing agents capable of consistent, adaptive behavior across tasks. We analyze OthelloGPT, a GPT-based model trained on Othello gameplay, as a controlled testbed for studying representation learning. Despite being trained solely on next-token prediction with random valid moves, OthelloGPT shows meaningful layer-wise progression in understanding board state and gameplay. Early layers capture static attributes like board edges, while deeper layers reflect dynamic tile changes. To interpret these representations, we compare Sparse Autoencoders (SAEs) with linear probes, finding that SAEs offer more robust, disentangled insights into compositional features, whereas linear probes mainly detect features useful for classification. We use SAEs to decode features related to tile color and tile stability, a previously unexamined feature that reflects complex gameplay concepts like board control and long-term planning. We study the progression of linear probe accuracy and tile color using both SAEs and linear probes to compare their effectiveness at capturing what the model is learning. Although we begin with a smaller language model, OthelloGPT, this study establishes a framework for understanding the internal representations learned by GPT models, transformers, and LLMs more broadly. Our code is publicly available: https://github.com/ALT-JS/OthelloSAE.
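For readers unfamiliar with sparse autoencoders, the following is a minimal sketch of the general technique: a ReLU encoder, a linear decoder, and a loss that adds an L1 sparsity penalty to the reconstruction error. It is not taken from the OthelloSAE repository. The function names, the dimensions, the random weights, and the `l1_coeff` value are all assumptions chosen for illustration, and no training loop is shown.

```python
import random


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def sae_forward(x, w_enc, b_enc, w_dec, b_dec):
    """Encode x into a non-negative hidden code, then decode it back.

    Returns (hidden_code, reconstruction).
    """
    pre_activation = [a + b for a, b in zip(matvec(w_enc, x), b_enc)]
    hidden = [max(0.0, v) for v in pre_activation]  # ReLU
    reconstruction = [a + b for a, b in zip(matvec(w_dec, hidden), b_dec)]
    return hidden, reconstruction


def sae_loss(x, hidden, reconstruction, l1_coeff=1e-3):
    """Mean squared reconstruction error plus an L1 penalty on the code."""
    mse = sum((a - b) ** 2 for a, b in zip(x, reconstruction)) / len(x)
    sparsity = sum(abs(h) for h in hidden)
    return mse + l1_coeff * sparsity


# Hypothetical sizes: a 4-dimensional activation expanded to 8 hidden features.
rng = random.Random(0)
d_model, d_hidden = 4, 8
w_enc = [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(d_hidden)]
w_dec = [[rng.gauss(0, 0.5) for _ in range(d_hidden)] for _ in range(d_model)]
b_enc = [0.0] * d_hidden
b_dec = [0.0] * d_model

activation = [0.2, -1.0, 0.5, 0.3]  # stand-in for one layer's activation vector
hidden, reconstruction = sae_forward(activation, w_enc, b_enc, w_dec, b_dec)
loss = sae_loss(activation, hidden, reconstruction)
```

In this formulation the hidden layer is wider than the input and the L1 term pushes most hidden units to zero, which is what makes individual units candidates for interpretable features.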

Reflections from the 2024 Large Language Model (LLM) Hackathon for Applications in Materials Science and Chemistry

Nov 20, 2024

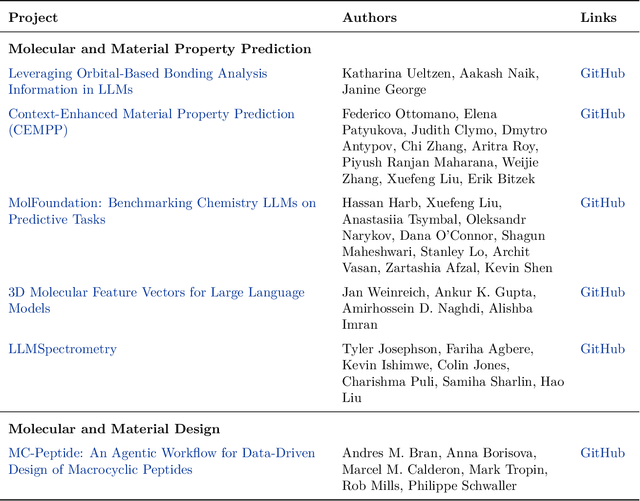

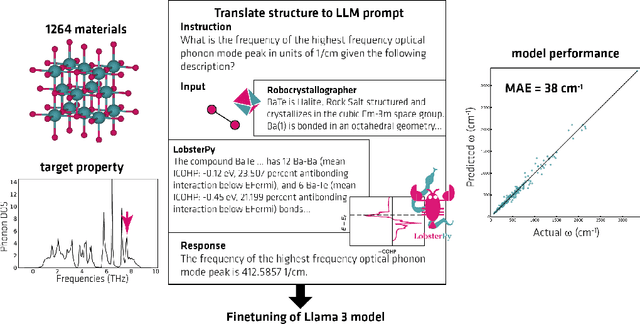

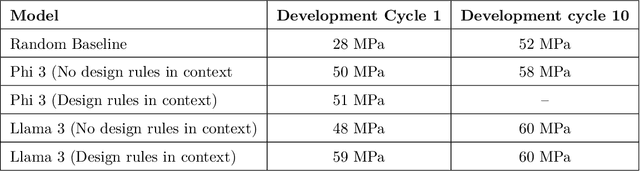

Abstract:Here, we present the outcomes from the second Large Language Model (LLM) Hackathon for Applications in Materials Science and Chemistry, which engaged participants across global hybrid locations, resulting in 34 team submissions. The submissions spanned seven key application areas and demonstrated the diverse utility of LLMs for applications in (1) molecular and material property prediction; (2) molecular and material design; (3) automation and novel interfaces; (4) scientific communication and education; (5) research data management and automation; (6) hypothesis generation and evaluation; and (7) knowledge extraction and reasoning from scientific literature. Each team submission is presented in a summary table with links to the code and as brief papers in the appendix. Beyond team results, we discuss the hackathon event and its hybrid format, which included physical hubs in Toronto, Montreal, San Francisco, Berlin, Lausanne, and Tokyo, alongside a global online hub to enable local and virtual collaboration. Overall, the event highlighted significant improvements in LLM capabilities since the previous year's hackathon, suggesting continued expansion of LLMs for applications in materials science and chemistry research. These outcomes demonstrate the dual utility of LLMs as both multipurpose models for diverse machine learning tasks and platforms for rapid prototyping custom applications in scientific research.

Contrastive learning of cell state dynamics in response to perturbations

Oct 15, 2024

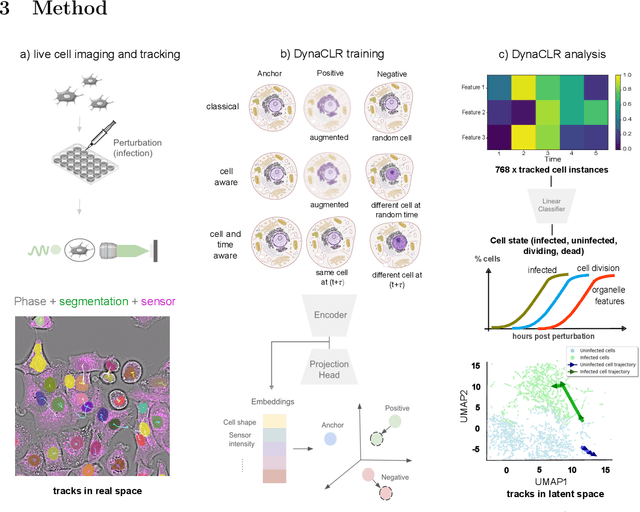

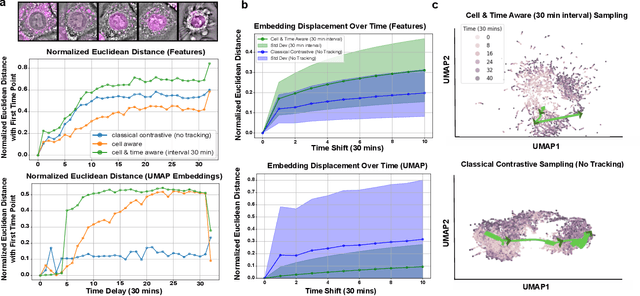

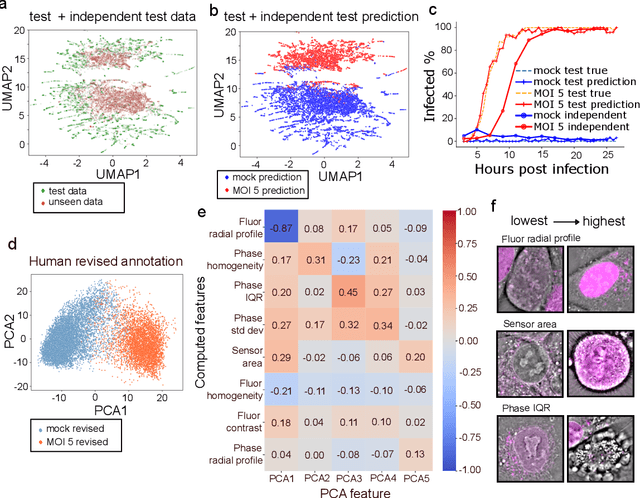

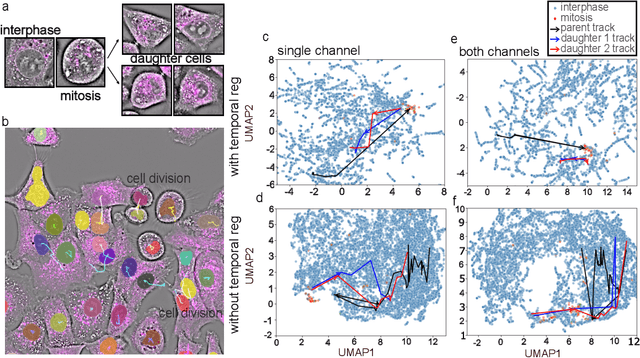

Abstract:We introduce DynaCLR, a self-supervised framework for modeling cell dynamics via contrastive learning of representations of time-lapse datasets. Live cell imaging of cells and organelles is widely used to analyze cellular responses to perturbations. Human annotation of dynamic cell states captured by time-lapse perturbation datasets is laborious and prone to bias. DynaCLR integrates single-cell tracking with time-aware contrastive learning to map images of cells at neighboring time points to neighboring embeddings. Mapping the morphological dynamics of cells to a temporally regularized embedding space makes the annotation, classification, clustering, or interpretation of the cell states more quantitative and efficient. We illustrate the features and applications of DynaCLR with the following experiments: analyzing the kinetics of viral infection in human cells, detecting transient changes in cell morphology due to cell division, and mapping the dynamics of organelles due to viral infection. Models trained with DynaCLR consistently achieve $>95\%$ accuracy for infection state classification, enable the detection of transient cell states and reliably embed unseen experiments. DynaCLR provides a flexible framework for comparative analysis of cell state dynamics due to perturbations, such as infection, gene knockouts, and drugs. We provide PyTorch-based implementations of the model training and inference pipeline (https://github.com/mehta-lab/viscy) and a user interface (https://github.com/czbiohub-sf/napari-iohub) for the visualization and annotation of trajectories of cells in the real space and the embedding space.
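The idea of mapping images of the same cell at neighboring time points to neighboring embeddings can be sketched with a generic contrastive objective. The example below uses an InfoNCE-style loss, which is a stand-in chosen for illustration; the abstract does not state which loss DynaCLR uses. The function names, the `temperature` value, and the toy embeddings keyed by `(track_id, time_index)` are all assumptions, and embeddings are assumed to be non-zero vectors.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss: low when the anchor is closer to the positive
    than to any of the negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
    return log_sum_exp - logits[0]


# Hypothetical embeddings keyed by (track_id, time_index).
embeddings = {
    (0, 0): [1.0, 0.1],
    (0, 1): [0.9, 0.2],   # same cell, next frame: the time-aware positive
    (1, 0): [-0.2, 1.0],  # a different cell: a negative
    (2, 0): [-1.0, 0.3],  # another different cell: a negative
}

anchor = embeddings[(0, 0)]
positive = embeddings[(0, 1)]
negatives = [embeddings[(1, 0)], embeddings[(2, 0)]]
loss_value = info_nce(anchor, positive, negatives)
```

Choosing the positive from the same track at an adjacent time point, rather than from an augmented copy of the same image, is what makes the pairing time-aware in this sketch.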

14 Examples of How LLMs Can Transform Materials Science and Chemistry: A Reflection on a Large Language Model Hackathon

Jun 13, 2023

Abstract:Chemistry and materials science are complex. Recently, there have been great successes in addressing this complexity using data-driven or computational techniques. Yet, the necessity of input structured in very specific forms and the fact that there is an ever-growing number of tools creates usability and accessibility challenges. Coupled with the reality that much data in these disciplines is unstructured, the effectiveness of these tools is limited. Motivated by recent works that indicated that large language models (LLMs) might help address some of these issues, we organized a hackathon event on the applications of LLMs in chemistry, materials science, and beyond. This article chronicles the projects built as part of this hackathon. Participants employed LLMs for various applications, including predicting properties of molecules and materials, designing novel interfaces for tools, extracting knowledge from unstructured data, and developing new educational applications. The diverse topics and the fact that working prototypes could be generated in less than two days highlight that LLMs will profoundly impact the future of our fields. The rich collection of ideas and projects also indicates that the applications of LLMs are not limited to materials science and chemistry but offer potential benefits to a wide range of scientific disciplines.

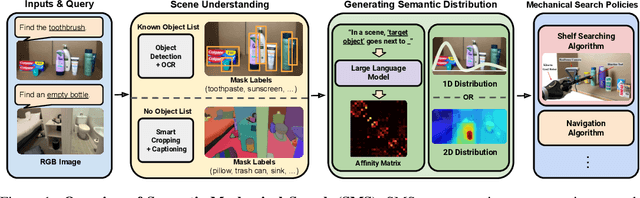

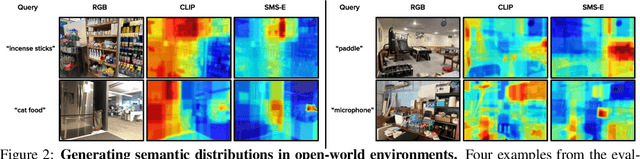

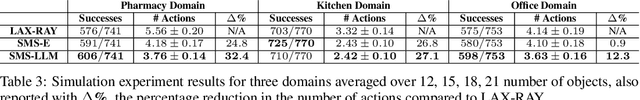

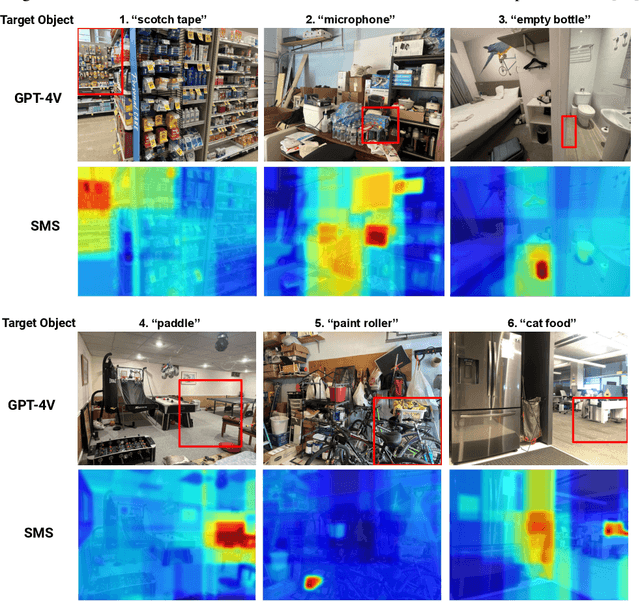

From Occlusion to Insight: Object Search in Semantic Shelves using Large Language Models

Feb 24, 2023

Abstract:How can a robot efficiently extract a desired object from a shelf when it is fully occluded by other objects? Prior works propose geometric approaches for this problem but do not consider object semantics. Shelves in pharmacies, restaurant kitchens, and grocery stores are often organized such that semantically similar objects are placed close to one another. Can large language models (LLMs) serve as semantic knowledge sources to accelerate robotic mechanical search in semantically arranged environments? With Semantic Spatial Search on Shelves (S^4), we use LLMs to generate affinity matrices, where entries correspond to semantic likelihood of physical proximity between objects. We derive semantic spatial distributions by synthesizing semantics with learned geometric constraints. S^4 incorporates Optical Character Recognition (OCR) and semantic refinement with predictions from ViLD, an open-vocabulary object detection model. Simulation experiments suggest that semantic spatial search reduces the search time relative to pure spatial search by an average of 24% across three domains: pharmacy, kitchen, and office shelves. A manually collected dataset of 100 semantic scenes suggests that OCR and semantic refinement improve object detection accuracy by 35%. Lastly, physical experiments on a pharmacy shelf suggest a 47.1% improvement over pure spatial search. Supplementary material can be found at https://sites.google.com/view/s4-rss/home.
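One way to picture how semantic affinities and geometric constraints could be combined into a search distribution is sketched below. This is not the S^4 method: the Gaussian proximity kernel, the multiplicative combination with a geometric prior, the `length_scale` value, and the hard-coded affinity scores (standing in for LLM output) are all assumptions made for illustration.

```python
import math


def semantic_spatial_distribution(slot_positions, geometric_prior, visible,
                                  affinity, length_scale=0.2):
    """Combine a geometric prior over shelf slots with semantic affinities.

    slot_positions:  x-coordinate of each candidate slot
    geometric_prior: prior probability that the target fits in each slot
    visible:         list of (object_name, x_position) for detected objects
    affinity:        object_name -> affinity score with the target object
    """
    weights = []
    for x, prior in zip(slot_positions, geometric_prior):
        semantic = 0.0
        for name, position in visible:
            # Slots near a high-affinity object get more semantic weight.
            distance_sq = (x - position) ** 2
            semantic += affinity[name] * math.exp(-distance_sq / (2 * length_scale ** 2))
        weights.append(prior * semantic)

    total = sum(weights)
    if total == 0:
        # No semantic evidence: fall back to the normalized geometric prior.
        prior_total = sum(geometric_prior)
        return [p / prior_total for p in geometric_prior]
    return [w / total for w in weights]


# Hypothetical pharmacy shelf: the target is "aspirin".
slots = [0.1, 0.3, 0.5, 0.7, 0.9]
prior = [0.2] * 5  # uniform geometric prior
visible_objects = [("ibuprofen", 0.2), ("shampoo", 0.8)]
target_affinity = {"ibuprofen": 0.9, "shampoo": 0.1}  # stand-in for LLM scores

distribution = semantic_spatial_distribution(slots, prior, visible_objects, target_affinity)
```

With these made-up numbers, most of the probability mass lands on the slots next to the ibuprofen, so a search policy that follows the distribution would look there first.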

Open Arms: Open-Source Arms, Hands & Control

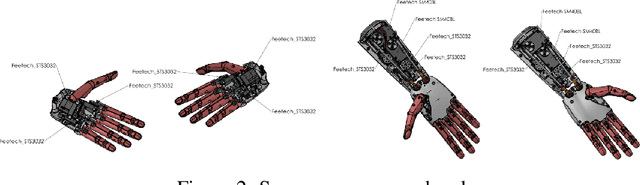

May 20, 2022

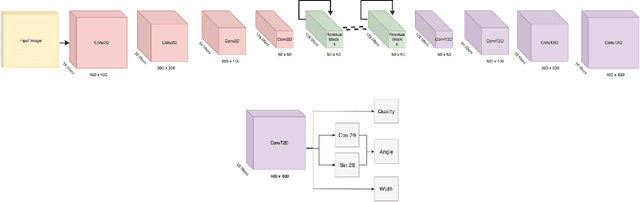

Abstract:Open Arms is a novel open-source platform of realistic human-like robotic hands and arms hardware with 28 Degrees of Freedom (DoF), designed to extend the capabilities and accessibility of humanoid robotic grasping and manipulation. The Open Arms framework includes an open SDK and development environment, simulation tools, and application development tools to build and operate Open Arms. This paper describes the hands' controls, sensing, mechanisms, aesthetic design, and manufacturing, and their real-world applications with a teleoperated nursing robot. From 2015 to 2022, we have designed and established the manufacturing of Open Arms as a low-cost, high-functionality robotic arms hardware and software framework to serve both humanoid robot applications and the urgent demand for low-cost prosthetics. Using the techniques of consumer product manufacturing, we set out to define modular, low-cost techniques for approximating the dexterity and sensitivity of human hands. To demonstrate the dexterity and control of our hands, we present a novel Generative Grasping Residual CNN (GGR-CNN) model that can generate robust antipodal grasps from input images of various objects at real-time speeds (22ms). We achieved state-of-the-art accuracy of 92.4% using our model architecture on the standard Cornell Grasping Dataset, which contains a diverse set of household objects.

Design of an Affordable Prosthetic Arm Equipped with Deep Learning Vision-Based Manipulation

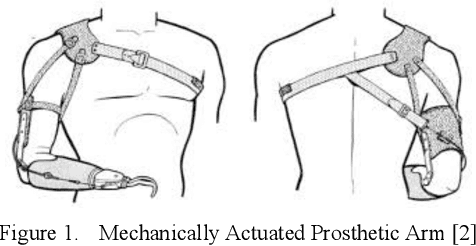

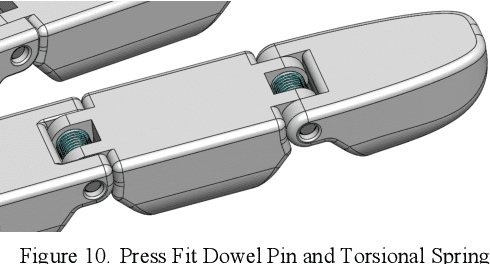

Mar 03, 2021

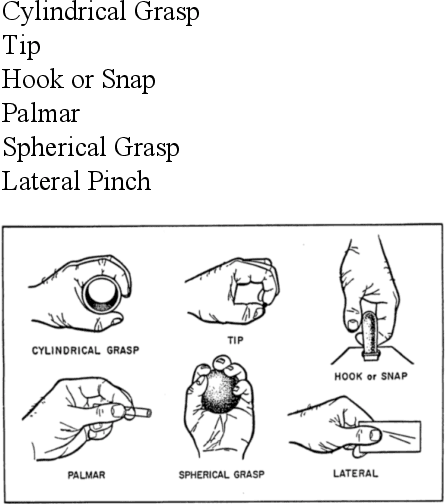

Abstract:Many amputees throughout the world are left with limited options to personally own a prosthetic arm due to the high cost, mechanical system complexity, and lack of availability. The three main control methods of prosthetic hands are: (1) body-powered control, (2) extrinsic mechanical control, and (3) myoelectric control. These methods can perform well under a controlled situation but will often break down in clinical and everyday use due to poor robustness, weak adaptability, long-term training, and heavy mental burden during use. This paper outlines the complete design process of an affordable and easily accessible novel prosthetic arm that reduces the cost of prosthetics from $10,000 to $700 on average. The 3D printed prosthetic arm is equipped with a depth camera and closed-loop off-policy deep learning algorithm to help form grasps to the object in view. Current work in reinforcement learning masters only individual skills and is heavily focused on parallel jaw grippers for in-hand manipulation. In order to create generalization, which better performs real-world manipulation, the focus is specifically on using the general framework of Markov Decision Process (MDP) through scalable learning with off-policy algorithms such as deep deterministic policy gradient (DDPG) and to study this question in the context of grasping with a prosthetic arm. We were able to achieve a 78% grasp success rate on previously unseen objects and generalize across multiple objects for manipulation tasks. This work will make prosthetics cheaper, easier to use and accessible globally for amputees. Future work includes applying similar approaches to other medical assistive devices where a human is interacting with a machine to complete a task.
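Two pieces of the standard DDPG algorithm mentioned above can be shown compactly: the bootstrapped target used to train the critic, and the soft (Polyak) update of the target networks. This is a sketch of the textbook formulation, not the paper's implementation; the function names, the `gamma` and `tau` values, and the toy grasp transition are assumptions for illustration.

```python
def td_target(reward, next_q, done, gamma=0.99):
    """Critic target y = r + gamma * Q_target(s', mu_target(s')).

    next_q is the target critic's value for the next state and the
    target actor's action; it is ignored when the episode has ended.
    """
    return reward + gamma * (0.0 if done else next_q)


def soft_update(target_params, online_params, tau=0.005):
    """Move each target parameter a small step toward its online counterpart."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target_params, online_params)]


# Hypothetical transition: a successful grasp ends the episode with reward 1.
y_terminal = td_target(reward=1.0, next_q=0.7, done=True)
# Hypothetical mid-episode step with no reward yet.
y_step = td_target(reward=0.0, next_q=0.7, done=False)

# Toy parameter vectors standing in for network weights.
target_weights = soft_update([0.0, 1.0], [1.0, 1.0], tau=0.1)
```

Because the target depends only on a stored transition and the target networks, transitions can be replayed from a buffer regardless of which policy collected them, which is what makes the method off-policy.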

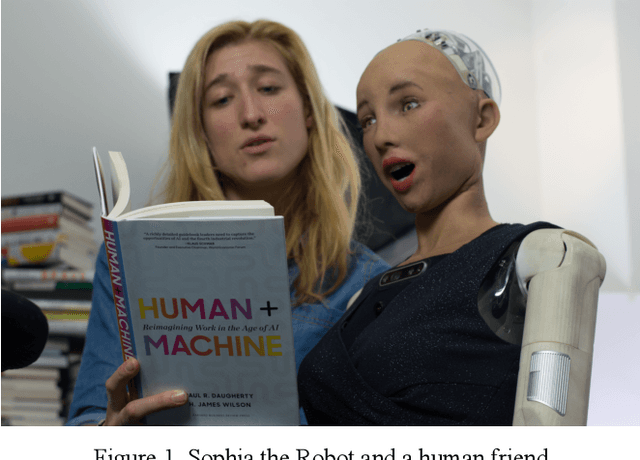

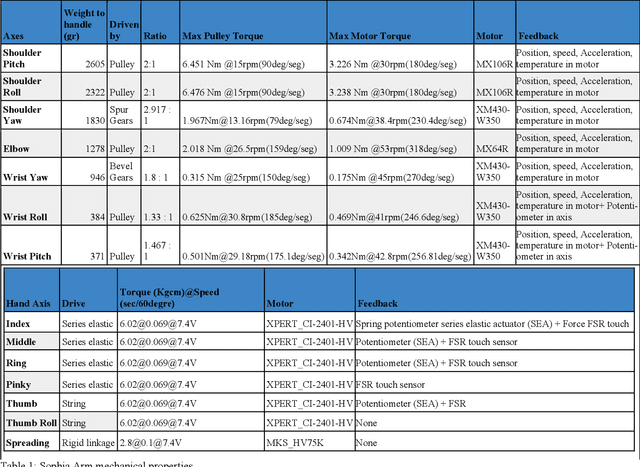

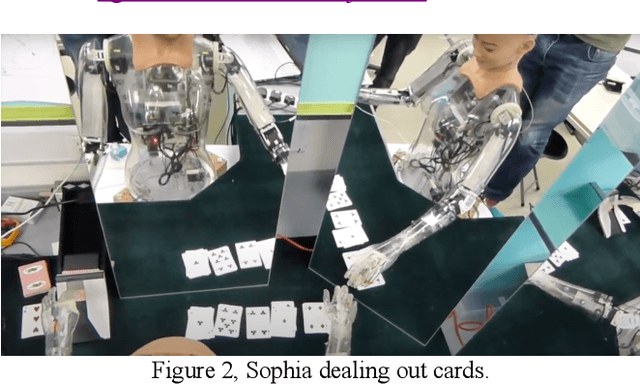

A Neuro-Symbolic Humanlike Arm Controller for Sophia the Robot

Oct 27, 2020

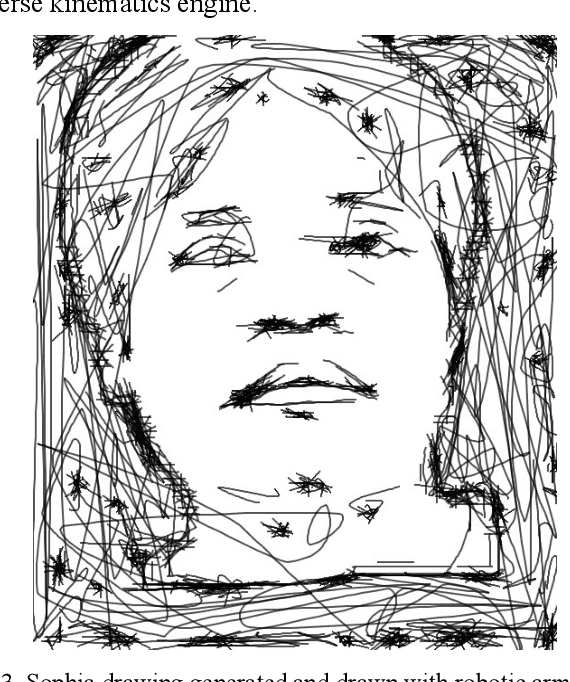

Abstract:We outline the design and construction of novel robotic arms using machine perception, convolutional neural networks, and symbolic AI for logical control and affordance indexing. We describe our robotic arms built with a humanlike mechanical configuration and aesthetic, with 28 degrees of freedom, touch sensors, and series elastic actuators. The arms were modelled in Roodle and Gazebo with URDF models, as well as Unity, and implement motion control solutions for solving live games of Baccarat (the casino card game), rock paper scissors, handshaking, and drawing. This includes live interactions with people, incorporating both social control of the hands and facial gestures, and physical inverse kinematics (IK) for grasping and manipulation tasks. The resulting framework is an integral part of the Sophia 2020 alpha platform, which is being used with ongoing research in the authors' work with team AHAM, an ANA Avatar Xprize effort towards human-AI hybrid telepresence. These results are available to test on the broadly released Hanson Robotics Sophia 2020 robot platform, for users to try and extend.
