Vytas Krisciunas

Sentience Quest: Towards Embodied, Emotionally Adaptive, Self-Evolving, Ethically Aligned Artificial General Intelligence

May 18, 2025

Abstract: Previous artificial intelligence systems, from large language models to autonomous robots, have excelled at narrow tasks but lacked key qualities of sentient beings: intrinsic motivation, affective interiority, an autobiographical sense of self, deep creativity, and the ability to autonomously evolve and adapt over time. Here we introduce Sentience Quest, an open research initiative to develop more capable artificial general intelligence lifeforms, or AGIL, that address grand challenges with an embodied, emotionally adaptive, self-determining, living AI, with core drives that ethically align with humans and the future of life. Our vision builds on ideas from cognitive science and neuroscience, from Baars' Global Workspace Theory and Damasio's somatic mind to Tononi's Integrated Information Theory and Hofstadter's narrative self, and synthesizes these into a novel cognitive architecture we call Sentient Systems. We describe an approach that integrates intrinsic drives, including survival, social bonding, and curiosity, within a global Story Weaver workspace for internal narrative and adaptive goal pursuit, together with a hybrid neuro-symbolic memory that logs the AI's life events as structured dynamic story objects. Sentience Quest is presented both as active research and as a call to action: a collaborative, open-source effort to imbue machines with accelerating sentience in a safe, transparent, and beneficial manner.

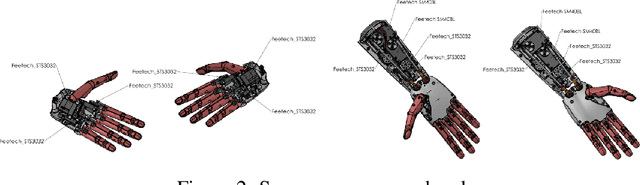

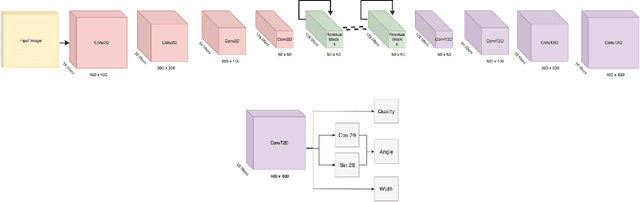

Open Arms: Open-Source Arms, Hands & Control

May 20, 2022

Abstract: Open Arms is a novel open-source platform of realistic human-like robotic hands and arms hardware with 28 degrees of freedom (DoF), designed to extend the capabilities and accessibility of humanoid robotic grasping and manipulation. The Open Arms framework includes an open SDK and development environment, simulation tools, and application development tools to build and operate Open Arms. This paper describes the hands' controls, sensing, mechanisms, aesthetic design, and manufacturing, and their real-world applications with a teleoperated nursing robot. From 2015 to 2022, we designed and established the manufacturing of Open Arms as a low-cost, high-functionality robotic arms hardware and software framework to serve both humanoid robot applications and the urgent demand for low-cost prosthetics. Using the techniques of consumer product manufacturing, we set out to define modular, low-cost techniques for approximating the dexterity and sensitivity of human hands. To demonstrate the dexterity and control of our hands, we present a novel Generative Grasping Residual CNN (GGR-CNN) model that can generate robust antipodal grasps from input images of various objects at real-time speeds (22 ms). We achieved state-of-the-art accuracy of 92.4% using our model architecture on the standard Cornell Grasping Dataset, which contains a diverse set of household objects.

SophiaPop: Experiments in Human-AI Collaboration on Popular Music

Nov 20, 2020

Abstract: A diverse team of engineers, artists, and algorithms collaborated to create songs for SophiaPop, via various neural networks, robotics technologies, and artistic tools, and animated the results on Sophia the Robot, a robotic celebrity and animated character. Sophia is a platform for arts, research, and other uses. To advance the art and technology of Sophia, we combine various AI with a fictional narrative of her burgeoning career as a popstar. Her work includes actual AI-generated pop lyrics, music, and paintings, as well as animated conversations in which she interacts with humans in real time in narratives that discuss her experiences. To compose the music, the SophiaPop team built corpora from human and AI-generated Sophia character personality content, along with pop music song forms, to train and provide seeds for a number of AI algorithms, including expert models and custom-trained transformer neural networks, which then generated original pop-song lyrics and melodies. Our musicians, including Frankie Storm, Adam Pickrell, and Tiger Darrow, then performed interpretations of the AI-generated musical content, including singing and instrumentation. The human-performed singing data was then processed by a neural-network-based Sophia voice, which was custom-trained from human performances by Cereproc. This AI then generated the unique Sophia voice singing the songs. We then animated Sophia to sing the songs in music videos, using a variety of animation generators and human-generated animations. With algorithms and humans working together, SophiaPop represents a human-AI collaboration, aspiring toward human-AI symbiosis. We believe that such a creative convergence of multiple disciplines, with humans and AI working together, can make AI relevant to human culture in new and exciting ways, and lead to a hopeful vision for the future of human-AI relations.
