Abdeldjallil Naceri

Bridging the Sim-to-Real Gap with multipanda_ros2: A Real-Time ROS2 Framework for Multimanual Systems

Feb 02, 2026

Abstract:We present $multipanda\_ros2$, a novel open-source ROS2 architecture for multi-robot control of Franka Robotics robots. Leveraging ros2_control, this framework provides native ROS2 interfaces for controlling any number of robots from a single process. Our core contributions address key challenges in real-time torque control, including interaction control and robot-environment modeling. A central focus of this work is sustaining a 1 kHz control frequency, a necessity for real-time control and a minimum frequency required by safety standards. Moreover, we introduce a controllet-feature design pattern that enables controller-switching delays of $\le 2$ ms, facilitating reproducible benchmarking and complex multi-robot interaction scenarios. To bridge the simulation-to-reality (sim2real) gap, we integrate a high-fidelity MuJoCo simulation with quantitative metrics for both kinematic accuracy and dynamic consistency (torques, forces, and control errors). Furthermore, we demonstrate that real-world inertial parameter identification can significantly improve force and torque accuracy, providing a methodology for iterative physics refinement. Our work extends approaches from soft robotics to rigid dual-arm, contact-rich tasks, showcasing a promising method to reduce the sim2real gap and providing a robust, reproducible platform for advanced robotics research.

Time-Optimized Trajectory Planning for Non-Prehensile Object Transportation in 3D

Aug 29, 2024

Abstract:Non-prehensile object transportation offers a way to enhance robotic performance in object manipulation tasks, especially with unstable objects. Effective trajectory planning requires simultaneous consideration of robot motion constraints and object stability. Here, we introduce a physical model for object stability and propose a novel trajectory planning approach for non-prehensile transportation along arbitrary straight lines in 3D space. Validation with a 7-DoF Franka Panda robot confirms improved transportation speed via tray rotation integration while ensuring object stability and robot motion constraints.

Autonomous and Teleoperation Control of a Drawing Robot Avatar

Jul 29, 2024

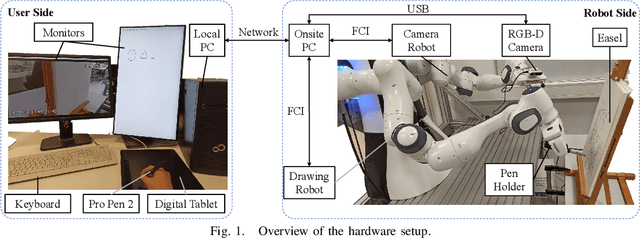

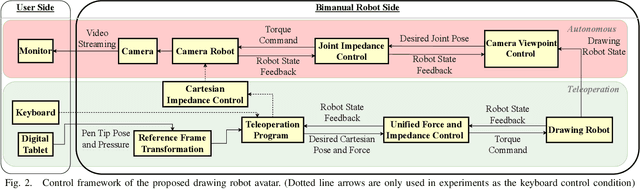

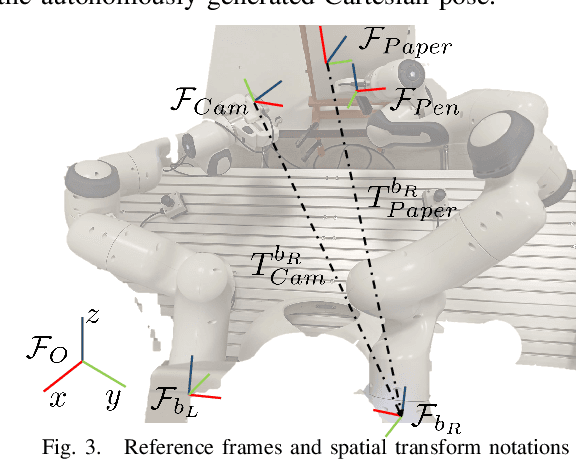

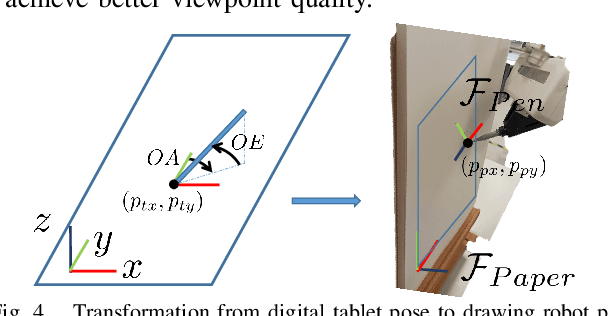

Abstract:A drawing robot avatar is a robotic system that allows for telepresence-based drawing, enabling users to remotely control a robotic arm and create drawings in real-time from a remote location. The proposed control framework aims to improve bimanual robot telepresence quality by reducing the user workload and required prior knowledge through the automation of secondary or auxiliary tasks. The introduced novel method calculates the near-optimal Cartesian end-effector pose in terms of visual feedback quality for the attached eye-to-hand camera with motion constraints in consideration. The effectiveness is demonstrated by conducting user studies of drawing reference shapes using the implemented robot avatar compared to stationary and teleoperated camera pose conditions. Our results demonstrate that the proposed control framework offers improved visual feedback quality and drawing performance.

Pragmatic auditing: a pilot-driven approach for auditing Machine Learning systems

May 21, 2024

Abstract:The growing adoption and deployment of Machine Learning (ML) systems came with its share of ethical incidents and societal concerns. It also unveiled the necessity to properly audit these systems in light of ethical principles. For such a novel type of algorithmic auditing to become standard practice, two main prerequisites need to be available: A lifecycle model that is tailored towards transparency and accountability, and a principled risk assessment procedure that allows the proper scoping of the audit. Aiming to make a pragmatic step towards a wider adoption of ML auditing, we present a respective procedure that extends the AI-HLEG guidelines published by the European Commission. Our audit procedure is based on an ML lifecycle model that explicitly focuses on documentation, accountability, and quality assurance; and serves as a common ground for alignment between the auditors and the audited organisation. We describe two pilots conducted on real-world use cases from two different organisations and discuss the shortcomings of ML algorithmic auditing as well as future directions thereof.

Anthropomorphic Grasping with Neural Object Shape Completion

Nov 09, 2023

Abstract:The progressive prevalence of robots in human-suited environments has given rise to a myriad of object manipulation techniques, in which dexterity plays a paramount role. It is well-established that humans exhibit extraordinary dexterity when handling objects. Such dexterity seems to derive from a robust understanding of object properties (such as weight, size, and shape), as well as a remarkable capacity to interact with them. Hand postures commonly demonstrate the influence of specific regions on objects that need to be grasped, especially when objects are partially visible. In this work, we leverage human-like object understanding by reconstructing and completing the full geometry of objects from partial observations, and manipulating them using a 7-DoF anthropomorphic robot hand. Our approach improves grasping success rates by nearly 30% over baselines that use only partial reconstruction, and achieved over 150 successful grasps with three different object categories. This demonstrates our approach's consistent ability to predict and execute grasping postures based on the completed object shapes from various directions and positions in real-world scenarios. Our work opens up new possibilities for enhancing robotic applications that require precise grasping and manipulation skills of real-world reconstructed objects.

LoHoRavens: A Long-Horizon Language-Conditioned Benchmark for Robotic Tabletop Manipulation

Oct 23, 2023

Abstract:The convergence of embodied agents and large language models (LLMs) has brought significant advancements to embodied instruction following. Particularly, the strong reasoning capabilities of LLMs make it possible for robots to perform long-horizon tasks without expensive annotated demonstrations. However, public benchmarks for testing the long-horizon reasoning capabilities of language-conditioned robots in various scenarios are still missing. To fill this gap, this work focuses on the tabletop manipulation task and releases a simulation benchmark, LoHoRavens, which covers various long-horizon reasoning aspects spanning color, size, space, arithmetic, and reference. Furthermore, there is a key modality bridging problem for long-horizon manipulation tasks with LLMs: how to incorporate the observation feedback during robot execution into the LLM's closed-loop planning, a question that prior work has studied less. We investigate two methods of bridging the modality gap: caption generation and a learnable interface, which incorporate explicit and implicit observation feedback into the LLM, respectively. These methods serve as the two baselines for our proposed benchmark. Experiments show that both methods struggle to solve some tasks, indicating that long-horizon manipulation tasks are still challenging for current popular models. We expect the proposed public benchmark and baselines can help the community develop better models for long-horizon tabletop manipulation tasks.

Care3D: An Active 3D Object Detection Dataset of Real Robotic-Care Environments

Oct 09, 2023

Abstract:As labor shortage increases in the health sector, the demand for assistive robotics grows. However, the needed test data to develop those robots is scarce, especially for the application of active 3D object detection, where no real data exists at all. This short paper counters this by introducing such an annotated dataset of real environments. The captured environments represent areas which are already in use in the field of robotic health care research. We further provide ground truth data within one room, for assessing SLAM algorithms running directly on a health care robot.

A Concise Overview of Safety Aspects in Human-Robot Interaction

Sep 18, 2023

Abstract:Today's robots exhibit impressive agility but also pose potential hazards to the humans who use or collaborate with them. Consequently, safety is considered the paramount factor in human-robot interaction (HRI). This paper presents a multi-layered safety architecture, integrating both physical and cognitive aspects for effective HRI. We outline critical requirements for physical safety layers as service modules that can be arbitrarily queried. Further, we showcase an HRI scheme that addresses human factors and perceived safety as high-level constraints on a validated impact safety paradigm. The aim is to enable safety certification of human-friendly robots across various HRI scenarios.

Towards Language-Based Modulation of Assistive Robots through Multimodal Models

Jun 27, 2023

Abstract:In the field of Geriatronics, enabling effective and transparent communication between humans and robots is crucial for enhancing the acceptance and performance of assistive robots. Our early-stage research project investigates the potential of language-based modulation as a means to improve human-robot interaction. We propose to explore real-time modulation during task execution, leveraging language cues, visual references, and multimodal inputs. By developing transparent and interpretable methods, we aim to enable robots to adapt and respond to language commands, enhancing their usability and flexibility. Through the exchange of insights and knowledge at the workshop, we seek to gather valuable feedback to advance our research and contribute to the development of interactive robotic systems for Geriatronics and beyond.
