Marc P. Hauer

Pragmatic auditing: a pilot-driven approach for auditing Machine Learning systems

May 21, 2024

Abstract: The growing adoption and deployment of Machine Learning (ML) systems has come with its share of ethical incidents and societal concerns. It has also revealed the need to properly audit these systems in light of ethical principles. For such a novel type of algorithmic auditing to become standard practice, two main prerequisites must be available: a lifecycle model that is tailored towards transparency and accountability, and a principled risk assessment procedure that allows the audit to be properly scoped. Aiming to take a pragmatic step towards a wider adoption of ML auditing, we present such a procedure that extends the AI-HLEG guidelines published by the European Commission. Our audit procedure is based on an ML lifecycle model that explicitly focuses on documentation, accountability, and quality assurance, and that serves as common ground for alignment between the auditors and the audited organisation. We describe two pilots conducted on real-world use cases from two different organisations and discuss the shortcomings of algorithmic auditing of ML systems as well as future directions for it.

Quantitative study about the estimated impact of the AI Act

Mar 29, 2023

Abstract: With the Proposal for a Regulation laying down harmonised rules on Artificial Intelligence (AI Act), the European Union provides the first regulatory document that applies to the entire complex of AI systems. Some fear that the regulation leaves too much room for interpretation and will thus bring little benefit to society. Others expect that it is too restrictive and will therefore block progress and innovation and hinder the economic success of companies within the EU. Without a systematic approach, it is difficult to assess how the regulation will actually affect the AI landscape. In this paper, we suggest a systematic approach and apply it to the initial draft of the AI Act, released in April 2021. Over several iterations, we compiled the list of AI products and projects in and from Germany that are listed by the Lernende Systeme platform, and then classified them according to the AI Act together with experts from computer science and law. Our study shows a need for more concrete formulations, since for some provisions it is often unclear whether they apply in a specific case. Apart from that, it turns out that only about 30% of the AI systems considered would be regulated by the AI Act; the rest would be classified as low-risk. However, as the database is not representative, the results only provide a first assessment. The process presented can be applied to any collection of AI systems and repeated whenever regulations are about to change. This allows fears of over- or under-regulation to be investigated before the regulations come into effect.

Towards a Common Testing Terminology for Software Engineering and Artificial Intelligence Experts

Sep 06, 2021

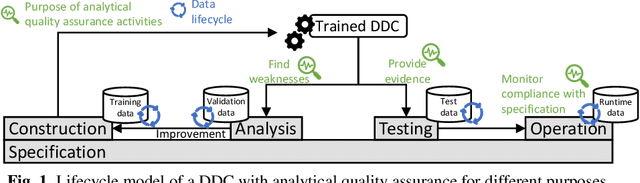

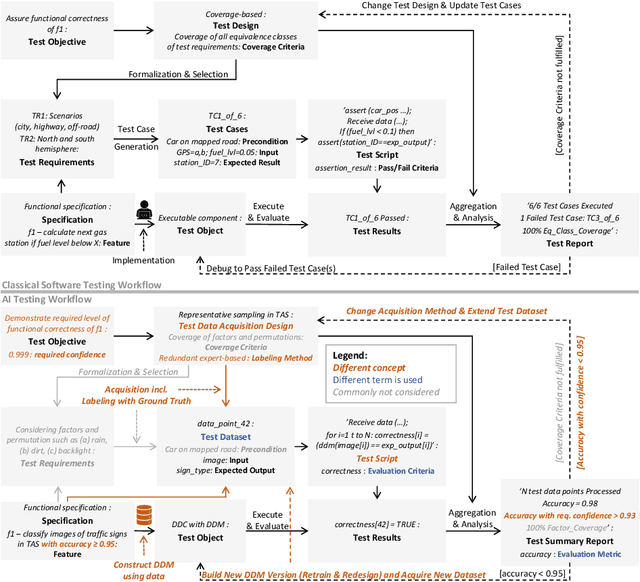

Abstract: Analytical quality assurance, especially testing, is an integral part of the development of software-intensive systems. With the increasing use of Artificial Intelligence (AI) and Machine Learning (ML) as part of such systems, this becomes more difficult, because well-understood software testing approaches cannot be applied directly to the AI-enabled parts of the system. Adapting classical testing approaches and developing new concepts for AI would benefit from a deeper understanding and exchange between AI experts and software engineering experts. We see a major obstacle on this path in the different terminologies used by the two communities. As we consider a mutual understanding of testing terminology to be key, this paper contributes a mapping between the most important concepts from classical software testing and AI testing. In the mapping, we highlight differences in the relevance and naming of the mapped concepts.
