Eckehard Steinbach

REVNET: Rotation-Equivariant Point Cloud Completion via Vector Neuron Anchor Transformer

Jan 13, 2026

Abstract: Incomplete point clouds captured by 3D sensors often result in the loss of both geometric and semantic information. Most existing point cloud completion methods are built on rotation-variant frameworks trained with data in canonical poses, limiting their applicability in real-world scenarios. While data augmentation with random rotations can partially mitigate this issue, it significantly increases the learning burden and still fails to guarantee robust performance under arbitrary poses. To address this challenge, we propose the Rotation-Equivariant Anchor Transformer (REVNET), a novel framework built upon the Vector Neuron (VN) network for robust point cloud completion under arbitrary rotations. To preserve local details, we represent partial point clouds as sets of equivariant anchors and design a VN Missing Anchor Transformer to predict the positions and features of missing anchors. Furthermore, we extend VN networks with a rotation-equivariant bias formulation and a ZCA-based layer normalization to improve feature expressiveness. Leveraging the flexible conversion between equivariant and invariant VN features, our model can generate point coordinates with greater stability. Experimental results show that our method outperforms state-of-the-art approaches on the synthetic MVP dataset in the equivariant setting. On the real-world KITTI dataset, REVNET delivers competitive results compared to non-equivariant networks, without requiring input pose alignment. The source code will be released on GitHub at https://github.com/nizhf/REVNET.
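The equivariance property that the abstract builds on can be checked numerically. The sketch below is not taken from the REVNET code; it assumes the standard Vector Neuron formulation, in which a feature is a C x 3 matrix of 3D vectors, a VN linear layer mixes channels only, and the Gram matrix of a feature is rotation-invariant. All function and variable names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def rotation_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotation_x(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def vn_linear(weights, features):
    """Assumed VN linear layer: mixes channels, never touches the 3D axis."""
    return matmul(weights, features)

def max_abs_diff(a, b):
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

# Feature with C = 2 channels, each channel a 3D vector (rows).
V = [[1.0, 2.0, 3.0], [-0.5, 0.25, 4.0]]
# Channel-mixing weights of shape C' x C = 3 x 2 (arbitrary values).
W = [[0.3, -1.2], [2.0, 0.7], [-0.4, 0.9]]
# An arbitrary rotation, applied to the features from the right.
R = matmul(rotation_z(0.7), rotation_x(-1.1))

V_rot = matmul(V, R)

# Equivariance: rotating then applying the layer equals applying then rotating.
equiv_err = max_abs_diff(vn_linear(W, V_rot), matmul(vn_linear(W, V), R))

# Invariance: the Gram matrix V V^T is unchanged by the rotation.
inv_err = max_abs_diff(matmul(V_rot, transpose(V_rot)), matmul(V, transpose(V)))

print(equiv_err, inv_err)
```

Both errors should be at the level of floating-point round-off, because W(VR) = (WV)R by associativity and R R^T is the identity.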

FOODER: Real-time Facial Authentication and Expression Recognition

Dec 19, 2025

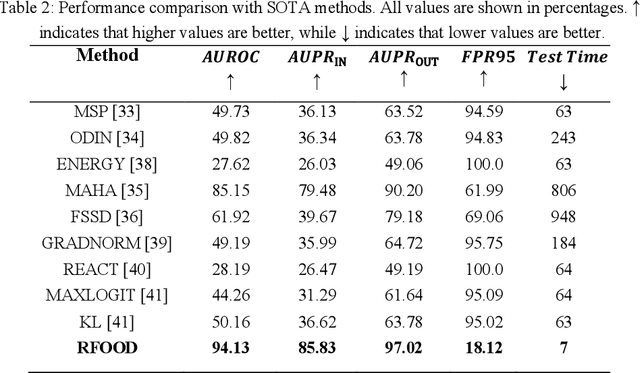

Abstract: Out-of-distribution (OOD) detection is essential for the safe deployment of neural networks, as it enables the identification of samples outside the training domain. We present FOODER, a real-time, privacy-preserving radar-based framework that integrates OOD-based facial authentication with facial expression recognition. FOODER operates using low-cost frequency-modulated continuous-wave (FMCW) radar and exploits both range-Doppler and micro range-Doppler representations. The authentication module employs a multi-encoder multi-decoder architecture with Body Part (BP) and Intermediate Linear Encoder-Decoder (ILED) components to classify a single enrolled individual as in-distribution while detecting all other faces as OOD. Upon successful authentication, an expression recognition module is activated. Concatenated radar representations are processed by a ResNet block to distinguish between dynamic and static facial expressions. Based on this categorization, two specialized MobileViT networks are used to classify dynamic expressions (smile, shock) and static expressions (neutral, anger). This hierarchical design enables robust facial authentication and fine-grained expression recognition while preserving user privacy by relying exclusively on radar data. Experiments conducted on a dataset collected with a 60 GHz short-range FMCW radar demonstrate that FOODER achieves an AUROC of 94.13% and an FPR95 of 18.12% for authentication, along with an average expression recognition accuracy of 94.70%. FOODER outperforms state-of-the-art OOD detection methods and several transformer-based architectures while operating efficiently in real time.
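For readers unfamiliar with the two authentication metrics quoted above, the following is a minimal sketch of how AUROC and FPR95 are commonly computed. It assumes a convention in which in-distribution (ID) samples are the positive class and a higher score means "more likely ID"; the abstract does not state which convention FOODER uses, and the scores below are made-up illustration values.

```python
import math

def auroc(id_scores, ood_scores):
    """Probability that a random ID sample scores above a random OOD sample.

    Ties count as half a win (pairwise definition of the ROC area).
    """
    wins = 0.0
    for p in id_scores:
        for n in ood_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(id_scores) * len(ood_scores))

def fpr_at_tpr(id_scores, ood_scores, tpr=0.95):
    """Fraction of OOD samples accepted at the threshold that accepts
    at least `tpr` of the ID samples (FPR95 when tpr = 0.95)."""
    ranked = sorted(id_scores, reverse=True)
    k = math.ceil(tpr * len(ranked))   # ID samples that must be accepted
    threshold = ranked[k - 1]
    accepted_ood = sum(1 for s in ood_scores if s >= threshold)
    return accepted_ood / len(ood_scores)

# Hypothetical scores, for illustration only.
id_scores = [0.9, 0.8, 0.75, 0.6, 0.95, 0.85, 0.7, 0.65, 0.99, 0.4]
ood_scores = [0.1, 0.3, 0.5, 0.2, 0.45]

area = auroc(id_scores, ood_scores)
fpr95 = fpr_at_tpr(id_scores, ood_scores)
print(area, fpr95)
```

A lower FPR95 is better, since it means fewer impostor samples pass at the operating point where 95% of genuine samples are accepted.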

ACCOR: Attention-Enhanced Complex-Valued Contrastive Learning for Occluded Object Classification Using mmWave Radar IQ Signals

Dec 12, 2025

Abstract: Millimeter-wave (mmWave) radar has emerged as a robust sensing modality for several areas, offering reliable operation under adverse environmental conditions. Its ability to penetrate lightweight materials such as packaging or thin walls enables non-visual sensing in industrial and automated environments and can provide robotic platforms with enhanced environmental perception when used alongside optical sensors. Recent work with MIMO mmWave radar has demonstrated its ability to penetrate cardboard packaging for occluded object classification. However, existing models leave room for improvement and warrant a more thorough evaluation across different sensing frequencies. In this paper, we propose ACCOR, an attention-enhanced complex-valued contrastive learning approach for radar, enabling robust occluded object classification. We process complex-valued IQ radar signals using a complex-valued CNN backbone, followed by a multi-head attention layer and a hybrid loss. Our proposed loss combines a weighted cross-entropy term with a supervised contrastive term. We further extend an existing 64 GHz dataset with a 67 GHz subset of the occluded objects and evaluate our model using both center frequencies. Performance evaluation demonstrates that our approach outperforms prior radar-specific models and image classification models with adapted input, achieving classification accuracies of 96.60% at 64 GHz and 93.59% at 67 GHz for ten different objects. These results demonstrate the benefits of complex-valued deep learning with attention and contrastive learning for mmWave radar-based occluded object classification in industrial and automated environments.
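The hybrid loss described above can be illustrated with a small sketch. This is not the ACCOR implementation: it uses real-valued embeddings rather than complex-valued ones, the supervised contrastive term follows the commonly used formulation with a temperature, and the mixing factor `lam`, the temperature, and all names are assumptions made for illustration.

```python
import math

def weighted_cross_entropy(logits, label, class_weights):
    """Cross-entropy for one sample, scaled by the weight of its true class."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return class_weights[label] * (log_sum_exp - logits[label])

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def supervised_contrastive(embeddings, labels, temperature=0.1):
    """Pull same-label embeddings together, push different labels apart."""
    z = [normalize(e) for e in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        sims = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
                for j in range(n) if j != i}
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0

def hybrid_loss(logits_batch, embeddings, labels, class_weights,
                lam=0.5, temperature=0.1):
    """Assumed combination: mean weighted CE plus lam times the contrastive term."""
    ce = sum(weighted_cross_entropy(l, y, class_weights)
             for l, y in zip(logits_batch, labels)) / len(labels)
    return ce + lam * supervised_contrastive(embeddings, labels, temperature)

labels = [0, 0, 1, 1]
clustered = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]  # classes separated
mixed = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]      # classes interleaved

con_clustered = supervised_contrastive(clustered, labels)
con_mixed = supervised_contrastive(mixed, labels)
ce_example = weighted_cross_entropy([0.0, 0.0], 0, [2.0, 1.0])
print(con_clustered, con_mixed, ce_example)
```

The contrastive term is smaller when embeddings of the same class lie close together, which is the behaviour such a term is meant to reward alongside the classification objective.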

RadarFuseNet: Complex-Valued Attention-Based Fusion of IQ Time- and Frequency-Domain Radar Features for Classification Tasks

Dec 12, 2025

Abstract: Millimeter-wave (mmWave) radar has emerged as a compact and powerful sensing modality for advanced perception tasks that leverage machine learning techniques. It is particularly effective in scenarios where vision-based sensors fail to capture reliable information, such as detecting occluded objects or distinguishing between different surface materials in indoor environments. Due to the non-linear characteristics of mmWave radar signals, deep learning-based methods are well suited for extracting relevant information from in-phase and quadrature (IQ) data. However, the current state of the art in IQ signal-based occluded-object and material classification still offers substantial potential for further improvement. In this paper, we propose a bidirectional cross-attention fusion network that combines IQ-signal and FFT-transformed radar features obtained by distinct complex-valued convolutional neural networks (CNNs). The proposed method achieves improved performance and robustness compared to standalone complex-valued CNNs. We achieve a near-perfect material classification accuracy of 99.92% on samples collected at the same sensor-to-surface distances used during training, and an improved accuracy of 67.38% on samples measured at previously unseen distances, demonstrating improved generalization ability across varying measurement conditions. Furthermore, the accuracy for occluded object classification improves from 91.99% using standalone complex-valued CNNs to 94.20% using our proposed approach.

BIR-Adapter: A Low-Complexity Diffusion Model Adapter for Blind Image Restoration

Sep 08, 2025

Abstract: This paper introduces BIR-Adapter, a low-complexity blind image restoration adapter for diffusion models. The BIR-Adapter enables the utilization of the prior of pre-trained large-scale diffusion models on blind image restoration without training any auxiliary feature extractor. We take advantage of the robustness of pretrained models. We extract features from degraded images via the model itself and extend the self-attention mechanism with these degraded features. We introduce a sampling guidance mechanism to reduce hallucinations. We perform experiments on synthetic and real-world degradations and demonstrate that BIR-Adapter achieves competitive or better performance compared to state-of-the-art methods while having significantly lower complexity. Additionally, its adapter-based design enables integration into other diffusion models, enabling broader applications in image restoration tasks. We showcase this by extending a super-resolution-only model to perform better under additional unknown degradations.

Touch-Augmented Gaussian Splatting for Enhanced 3D Scene Reconstruction

Aug 11, 2025

Abstract: This paper presents a multimodal framework that integrates touch signals (contact points and surface normals) into 3D Gaussian Splatting (3DGS). Our approach enhances scene reconstruction, particularly under challenging conditions like low lighting, limited camera viewpoints, and occlusions. Unlike visual-only methods, the proposed approach incorporates spatially selective touch measurements to refine both the geometry and appearance of the 3D Gaussian representation. To guide the touch exploration, we introduce a two-stage sampling scheme that initially probes sparse regions and then concentrates on high-uncertainty boundaries identified from the reconstructed mesh. A geometric loss is proposed to ensure surface smoothness, resulting in improved geometry. Experimental results across diverse scenarios show consistent improvements in geometric accuracy. In the most challenging case with severe occlusion, the Chamfer Distance is reduced by over 15x, demonstrating the effectiveness of integrating touch cues into 3D Gaussian Splatting. Furthermore, our approach maintains a fully online pipeline, underscoring its feasibility in visually degraded environments.
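The Chamfer Distance used as the geometric accuracy metric above can be sketched as follows. Several variants exist (squared or unsquared distances, sum or mean over points), and the abstract does not say which one the authors use; this sketch assumes the symmetric variant with mean unsquared Euclidean nearest-neighbour distances, on made-up points.

```python
import math

def nearest_distance(point, cloud):
    """Euclidean distance from `point` to its closest neighbour in `cloud`."""
    return min(math.dist(point, q) for q in cloud)

def chamfer_distance(cloud_a, cloud_b):
    """Symmetric Chamfer distance: mean nearest-neighbour distance
    from A to B plus the same from B to A (assumed variant)."""
    a_to_b = sum(nearest_distance(p, cloud_b) for p in cloud_a) / len(cloud_a)
    b_to_a = sum(nearest_distance(q, cloud_a) for q in cloud_b) / len(cloud_b)
    return a_to_b + b_to_a

# Hypothetical reconstruction and reference points, for illustration only.
reconstruction = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]

cd = chamfer_distance(reconstruction, reference)
print(cd)
```

Here the reconstruction misses one reference point at distance 1, so only the reference-to-reconstruction term is non-zero. This brute-force version is quadratic in the number of points; practical evaluations typically use a spatial index for the nearest-neighbour search.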

Technical Report for Egocentric Mistake Detection for the HoloAssist Challenge

Jun 06, 2025

Abstract: In this report, we address the task of online mistake detection, which is vital in domains like industrial automation and education, where real-time video analysis allows human operators to correct errors as they occur. While previous work focuses on procedural errors involving action order, broader error types must be addressed for real-world use. We introduce an online mistake detection framework that handles both procedural and execution errors (e.g., motor slips or tool misuse). Upon detecting an error, we use a large language model (LLM) to generate explanatory feedback. Experiments on the HoloAssist benchmark confirm the effectiveness of our approach, which placed second in the mistake detection task.

FARE: A Deep Learning-Based Framework for Radar-based Face Recognition and Out-of-distribution Detection

Jan 14, 2025

Abstract: In this work, we propose a novel pipeline for face recognition and out-of-distribution (OOD) detection using short-range FMCW radar. The proposed system utilizes range-Doppler and micro range-Doppler images. The architecture features a primary path (PP) responsible for the classification of in-distribution (ID) faces, complemented by intermediate paths (IPs) dedicated to OOD detection. The network is trained in two stages. In the first stage, the PP is trained using triplet loss to optimize ID face classification. In the second stage, the PP is frozen, and the IPs, which comprise simple linear autoencoder networks, are trained specifically for OOD detection. Using our dataset generated with a 60 GHz FMCW radar, our method achieves an ID classification accuracy of 99.30% and an OOD detection AUROC of 96.91%.
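The triplet loss used in the first training stage can be illustrated with a minimal sketch. It assumes the standard margin-based form with Euclidean distances; the margin value and the embeddings are hypothetical and are not taken from the FARE paper.

```python
import math

def triplet_loss(anchor, positive, negative, margin=0.5):
    """Standard margin-based triplet loss on embedding vectors.

    The loss is zero once the negative is at least `margin` farther
    from the anchor than the positive is.
    """
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)

anchor = (0.0, 0.0)      # embedding of a face sample
positive = (0.0, 1.0)    # same identity as the anchor
far_negative = (3.0, 4.0)   # different identity, already well separated
near_negative = (1.0, 0.0)  # different identity, as close as the positive

loss_easy = triplet_loss(anchor, positive, far_negative)
loss_hard = triplet_loss(anchor, positive, near_negative)
print(loss_easy, loss_hard)
```

Only triplets that violate the margin, such as the second one, contribute a gradient during training, which is why the loss encourages identities to form well-separated clusters.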

FERT: Real-Time Facial Expression Recognition with Short-Range FMCW Radar

Nov 18, 2024

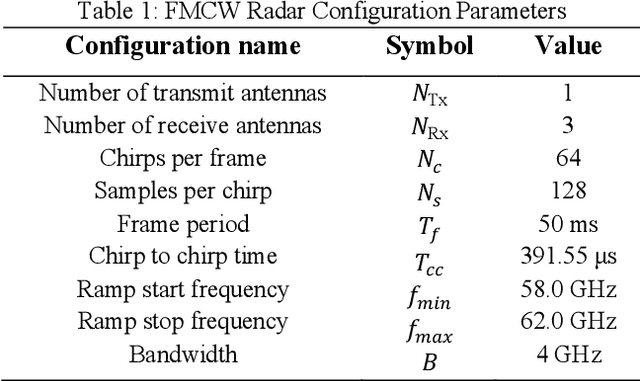

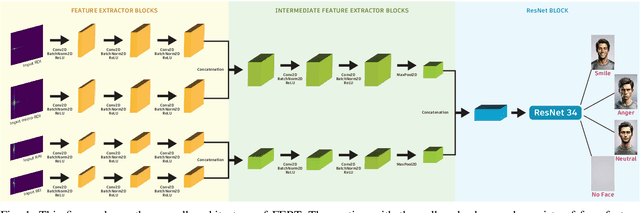

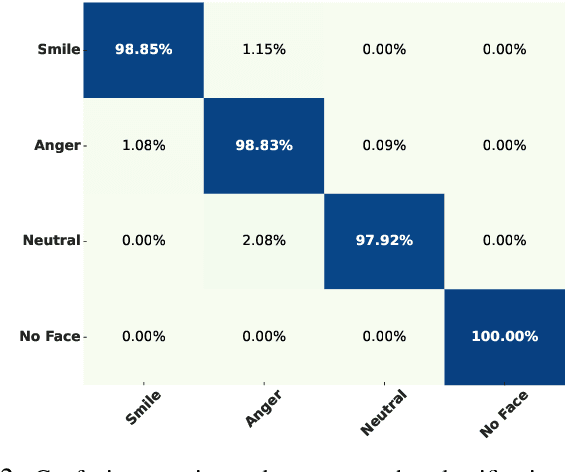

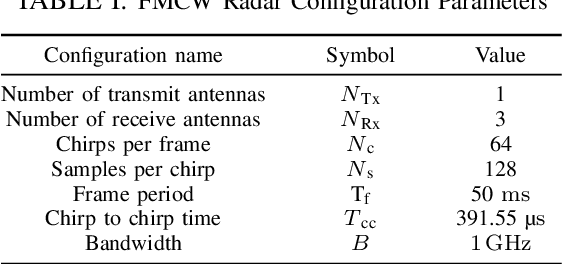

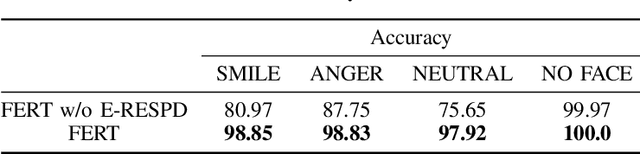

Abstract: This study proposes a novel approach for real-time facial expression recognition utilizing short-range Frequency-Modulated Continuous-Wave (FMCW) radar equipped with one transmit (Tx) and three receive (Rx) antennas. The system leverages four distinct modalities simultaneously: range-Doppler images (RDIs), micro range-Doppler images (micro-RDIs), range-azimuth images (RAIs), and range-elevation images (REIs). Our innovative architecture integrates feature extractor blocks, intermediate feature extractor blocks, and a ResNet block to accurately classify facial expressions into smile, anger, neutral, and no-face classes. Our model achieves an average classification accuracy of 98.91% on the dataset collected using a 60 GHz short-range FMCW radar. The proposed solution operates in real time in a person-independent manner, which shows the potential use of low-cost FMCW radars for effective facial expression recognition in various applications.

LEMON: Localized Editing with Mesh Optimization and Neural Shaders

Sep 18, 2024

Abstract: In practical use cases, editing an existing polygonal mesh can be faster than generating a new one, but it can still be challenging and time-consuming for users. Existing solutions for this problem tend to focus on a single task, either geometry or novel view synthesis, which often leads to disjointed results between the mesh and view. In this work, we propose LEMON, a mesh editing pipeline that combines neural deferred shading with localized mesh optimization. Our approach begins by identifying the most important vertices in the mesh for editing, utilizing a segmentation model to focus on these key regions. Given multi-view images of an object, we optimize a neural shader and a polygonal mesh while extracting the normal map and the rendered image from each view. By using these outputs as conditioning data, we edit the input images with a text-to-image diffusion model and iteratively update our dataset while deforming the mesh. This process results in a polygonal mesh that is edited according to the given text instruction, preserving the geometric characteristics of the initial mesh while focusing on the most significant areas. We evaluate our pipeline using the DTU dataset, demonstrating that it generates finely edited meshes more rapidly than the current state-of-the-art methods. We include our code and additional results in the supplementary material.
