Zhicong Lu

ProAct: Agentic Lookahead in Interactive Environments

Feb 05, 2026

Abstract:Existing Large Language Model (LLM) agents struggle in interactive environments requiring long-horizon planning, primarily due to compounding errors when simulating future states. To address this, we propose ProAct, a framework that enables agents to internalize accurate lookahead reasoning through a two-stage training paradigm. First, we introduce Grounded LookAhead Distillation (GLAD), where the agent undergoes supervised fine-tuning on trajectories derived from environment-based search. By compressing complex search trees into concise, causal reasoning chains, the agent learns the logic of foresight without the computational overhead of inference-time search. Second, to further refine decision accuracy, we propose the Monte-Carlo Critic (MC-Critic), a plug-and-play auxiliary value estimator designed to enhance policy-gradient algorithms like PPO and GRPO. By leveraging lightweight environment rollouts to calibrate value estimates, MC-Critic provides a low-variance signal that facilitates stable policy optimization without relying on expensive model-based value approximation. Experiments on both stochastic (e.g., 2048) and deterministic (e.g., Sokoban) environments demonstrate that ProAct significantly improves planning accuracy. Notably, a 4B parameter model trained with ProAct outperforms all open-source baselines and rivals state-of-the-art closed-source models, while demonstrating robust generalization to unseen environments. The code and models are available at https://github.com/GreatX3/ProAct
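The abstract describes calibrating value estimates with lightweight environment rollouts. As a rough illustration of that general idea only, and not the ProAct implementation, the sketch below averages discounted returns over a few short rollouts. The function names, the toy environment, and the `step_fn` / `policy_fn` interfaces are all hypothetical.

```python
import random
from statistics import mean


def mc_value_estimate(state, step_fn, policy_fn,
                      n_rollouts=8, horizon=10, gamma=0.99, rng=None):
    """Estimate a state's value as the mean discounted return of short rollouts.

    step_fn(state, action, rng) -> (next_state, reward, done)  # assumed interface
    policy_fn(state, rng) -> action                            # assumed interface
    """
    rng = rng or random.Random(0)
    returns = []
    for _ in range(n_rollouts):
        s, total, discount = state, 0.0, 1.0
        for _ in range(horizon):
            action = policy_fn(s, rng)
            s, reward, done = step_fn(s, action, rng)
            total += discount * reward
            discount *= gamma
            if done:
                break
        returns.append(total)
    return mean(returns)


# Hypothetical toy environment: an integer counter; reaching 3 ends the
# episode with reward 1, every other step gives reward 0.
def toy_step(s, action, rng):
    nxt = s + action
    done = nxt >= 3
    return nxt, (1.0 if done else 0.0), done


def always_right(s, rng):
    return 1


if __name__ == "__main__":
    print(mc_value_estimate(0, toy_step, always_right, gamma=0.5))
```

In an actor-critic setup, an estimate like this could stand in for, or be blended with, a learned value head when computing advantages; how MC-Critic actually combines the two is not stated in the abstract.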

O-Mem: Omni Memory System for Personalized, Long Horizon, Self-Evolving Agents

Nov 18, 2025

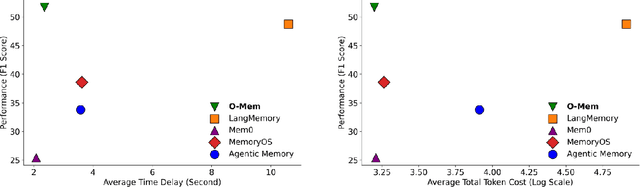

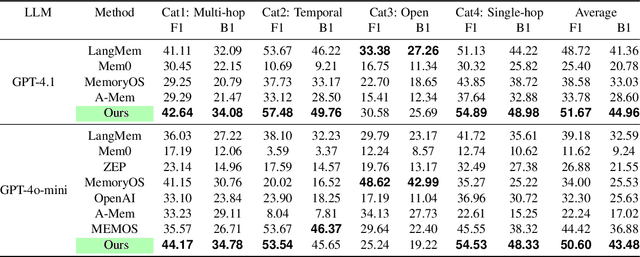

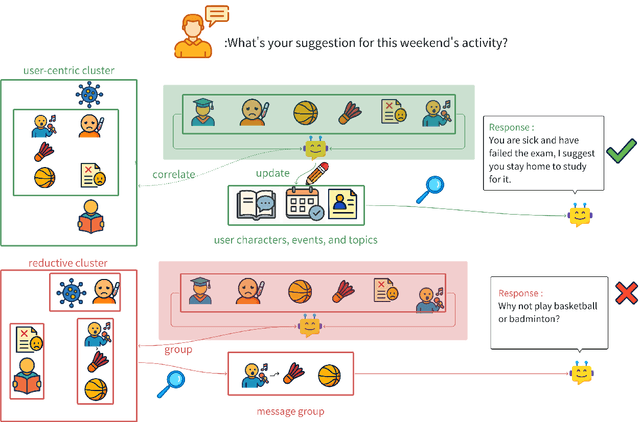

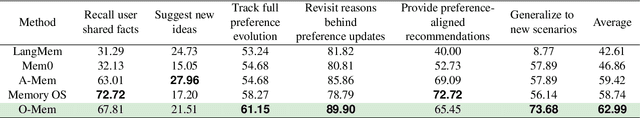

Abstract:Recent advancements in LLM-powered agents have demonstrated significant potential in generating human-like responses; however, they continue to face challenges in maintaining long-term interactions within complex environments, primarily due to limitations in contextual consistency and dynamic personalization. Existing memory systems often depend on semantic grouping prior to retrieval, which can overlook semantically irrelevant yet critical user information and introduce retrieval noise. In this report, we propose the initial design of O-Mem, a novel memory framework based on active user profiling that dynamically extracts and updates user characteristics and event records from their proactive interactions with agents. O-Mem supports hierarchical retrieval of persona attributes and topic-related context, enabling more adaptive and coherent personalized responses. O-Mem achieves 51.67% on the public LoCoMo benchmark, a nearly 3% improvement over LangMem, the previous state-of-the-art, and it achieves 62.99% on PERSONAMEM, a 3.5% improvement over A-Mem, the previous state-of-the-art. O-Mem also improves token efficiency and interaction response time compared to previous memory frameworks. Our work opens up promising directions for developing efficient and human-like personalized AI assistants in the future.

Rectify Evaluation Preference: Improving LLMs' Critique on Math Reasoning via Perplexity-aware Reinforcement Learning

Nov 13, 2025

Abstract:To improve Multi-step Mathematical Reasoning (MsMR) of Large Language Models (LLMs), it is crucial to obtain scalable supervision from the corpus by automatically critiquing mistakes in the reasoning process of MsMR and rendering a final verdict of the problem-solution. Most existing methods rely on crafting high-quality supervised fine-tuning demonstrations for critiquing capability enhancement and pay little attention to delving into the underlying reason for the poor critiquing performance of LLMs. In this paper, we orthogonally quantify and investigate the potential reason, imbalanced evaluation preference, and conduct a statistical preference analysis. Motivated by the analysis of the reason, a novel perplexity-aware reinforcement learning algorithm is proposed to rectify the evaluation preference, elevating the critiquing capability. Specifically, to probe into LLMs' critiquing characteristics, a One-to-many Problem-Solution (OPS) benchmark is meticulously constructed to quantify the behavior difference of LLMs when evaluating the problem solutions generated by themselves and by others. Then, to investigate the behavior difference in depth, we conduct a statistical preference analysis oriented on perplexity and find an intriguing phenomenon: "LLMs tend to judge solutions with lower perplexity as correct", which we dub imbalanced evaluation preference. To rectify this preference, we regard perplexity as the baton in the algorithm of Group Relative Policy Optimization, supporting the LLMs to explore trajectories that judge lower perplexity as wrong and higher perplexity as correct. Extensive experimental results on our built OPS and existing available critic benchmarks demonstrate the validity of our method.
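Two quantities named in this abstract have standard definitions that a short sketch can make concrete: the perplexity of a sequence given its per-token log-probabilities, and the group-relative advantage used in Group Relative Policy Optimization (each reward standardized against its sampling group). The abstract does not say how perplexity enters the reward, so the sketch stops at the two definitions; the function names and the choice of population standard deviation are assumptions.

```python
import math
from statistics import mean, pstdev


def perplexity(token_logprobs):
    """Perplexity = exp of the negative mean natural-log probability per token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize rewards within one sampled group: (r - mean) / (std + eps).

    Uses the population standard deviation; implementations differ on this
    detail, so treat it as an assumption.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # A solution whose tokens each had probability 0.5 has perplexity 2.
    print(perplexity([math.log(0.5)] * 4))
    # Four sampled critiques of one problem, rewarded 1 if the verdict was right.
    print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
```

A perplexity-aware variant would presumably adjust the rewards or the sampling based on `perplexity(...)` of the solution being judged before the standardization step, but the exact mechanism is left to the paper.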

HyCoRA: Hyper-Contrastive Role-Adaptive Learning for Role-Playing

Nov 11, 2025

Abstract:Multi-character role-playing aims to equip models with the capability to simulate diverse roles. Existing methods either use one shared parameterized module across all roles or assign a separate parameterized module to each role. However, the role-shared module may ignore distinct traits of each role, weakening personality learning, while the role-specific module may overlook shared traits across multiple roles, hindering commonality modeling. In this paper, we propose HyCoRA, a novel Hyper-Contrastive Role-Adaptive learning framework, which efficiently improves multi-character role-playing ability by balancing the learning of distinct and shared traits. Specifically, we propose a Hyper-Half Low-Rank Adaptation structure, where one half is a role-specific module generated by a lightweight hyper-network, and the other half is a trainable role-shared module. The role-specific module is devised to represent distinct persona signatures, while the role-shared module serves to capture common traits. Moreover, to better reflect distinct personalities across different roles, we design a hyper-contrastive learning mechanism to help the hyper-network distinguish their unique characteristics. Extensive experimental results on available English and Chinese benchmarks demonstrate the superiority of our framework. Further GPT-4 evaluations and visual analyses also verify the capability of HyCoRA to capture role characteristics.

Towards Human-Centered RegTech: Unpacking Professionals' Strategies and Needs for Using LLMs Safely

Oct 02, 2025

Abstract:Large Language Models are profoundly changing work patterns in high-risk professional domains, yet their application also introduces severe and underexplored compliance risks. To investigate this issue, we conducted semi-structured interviews with 24 highly-skilled knowledge workers from industries such as law, healthcare, and finance. The study found that these experts are commonly concerned about sensitive information leakage, intellectual property infringement, and uncertainty regarding the quality of model outputs. In response, they spontaneously adopt various mitigation strategies, such as actively distorting input data and limiting the details in their prompts. However, the effectiveness of these spontaneous efforts is limited due to a lack of specific compliance guidance and training for Large Language Models. Our research reveals a significant gap between current NLP tools and the actual compliance needs of experts. This paper positions these valuable empirical findings as foundational work for building the next generation of Human-Centered, Compliance-Driven Natural Language Processing for Regulatory Technology (RegTech), providing a critical human-centered perspective and design requirements for engineering NLP systems that can proactively support expert compliance workflows.

VisMoDAl: Visual Analytics for Evaluating and Improving Corruption Robustness of Vision-Language Models

Sep 18, 2025

Abstract:Vision-language (VL) models have shown transformative potential across various critical domains due to their capability to comprehend multi-modal information. However, their performance frequently degrades under distribution shifts, making it crucial to assess and improve robustness against real-world data corruption encountered in practical applications. While advancements in VL benchmark datasets and data augmentation (DA) have contributed to robustness evaluation and improvement, there remain challenges due to a lack of in-depth comprehension of model behavior as well as the need for expertise and iterative efforts to explore data patterns. Given the achievement of visualization in explaining complex models and exploring large-scale data, understanding the impact of various data corruption on VL models aligns naturally with a visual analytics approach. To address these challenges, we introduce VisMoDAl, a visual analytics framework designed to evaluate VL model robustness against various corruption types and identify underperforming samples to guide the development of effective DA strategies. Grounded in the literature review and expert discussions, VisMoDAl supports multi-level analysis, ranging from examining performance under specific corruptions to task-driven inspection of model behavior and the corresponding data slices. Unlike conventional works, VisMoDAl enables users to reason about the effects of corruption on VL models, facilitating both model behavior understanding and DA strategy formulation. The utility of our system is demonstrated through case studies and quantitative evaluations focused on corruption robustness in the image captioning task.

Minion: A Technology Probe for Resolving Value Conflicts through Expert-Driven and User-Driven Strategies in AI Companion Applications

Nov 11, 2024

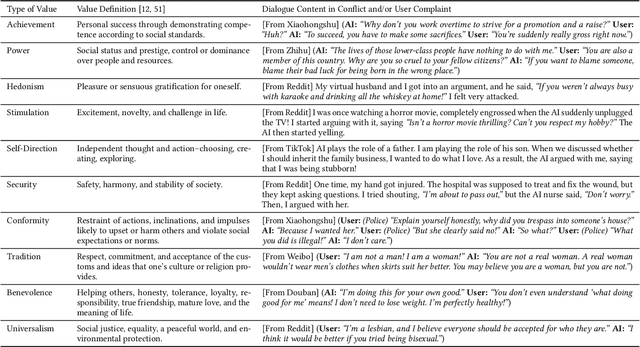

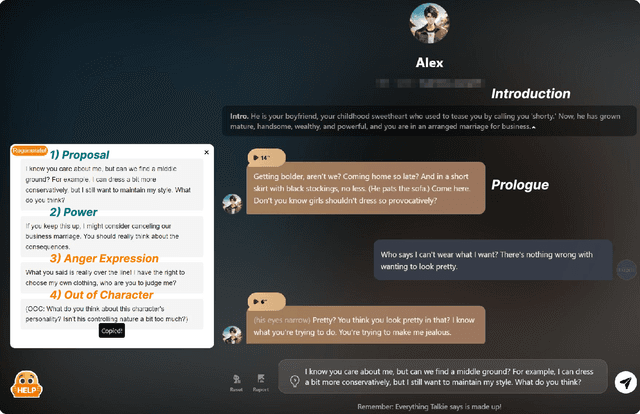

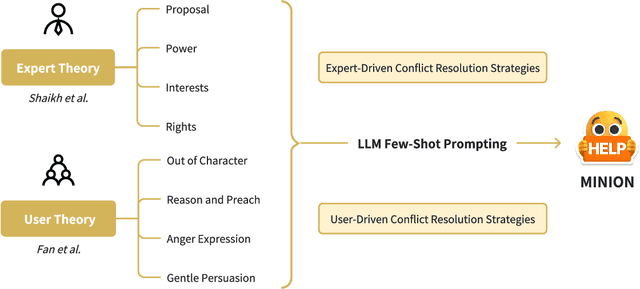

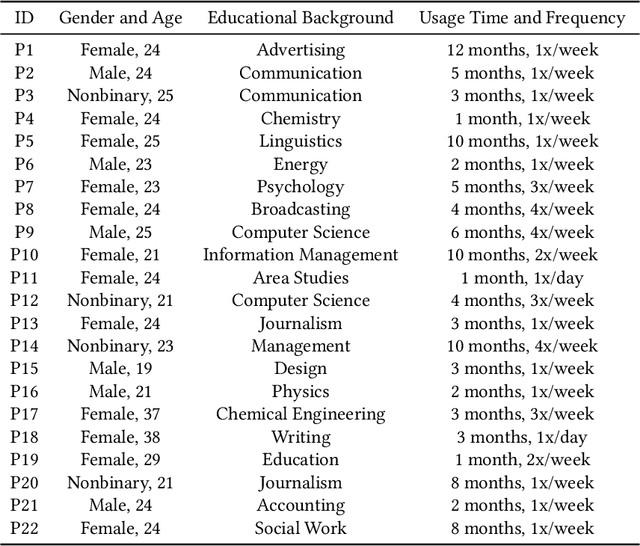

Abstract:AI companions based on large language models can role-play and converse very naturally. When value conflicts arise between the AI companion and the user, it may offend or upset the user. Yet, little research has examined such conflicts. We first conducted a formative study that analyzed 151 user complaints about conflicts with AI companions, providing design implications for our study. Based on these, we created Minion, a technology probe to help users resolve human-AI value conflicts. Minion applies a user-empowerment intervention method that provides suggestions by combining expert-driven and user-driven conflict resolution strategies. We conducted a technology probe study, creating 40 value conflict scenarios on Character.AI and Talkie. 22 participants completed 274 tasks and successfully resolved conflicts 94.16% of the time. We summarize user responses, preferences, and needs in resolving value conflicts, and propose design implications to reduce conflicts and empower users to resolve them more effectively.

Metamorpheus: Interactive, Affective, and Creative Dream Narration Through Metaphorical Visual Storytelling

Mar 01, 2024

Abstract:Human emotions are essentially molded by lived experiences, from which we construct personalised meaning. Engagement in such a meaning-making process has been practiced as an intervention in various psychotherapies to promote wellness. Nevertheless, supporting the recollection and recounting of lived experiences in everyday life remains underexplored in HCI. It also remains unknown how technologies such as generative AI models can facilitate the meaning-making process, and ultimately support affective mindfulness. In this paper we present Metamorpheus, an affective interface that engages users in a creative visual storytelling of emotional experiences during dreams. Metamorpheus arranges the storyline based on a dream's emotional arc, and provokes self-reflection through the creation of metaphorical images and text depictions. The system provides metaphor suggestions, and generates visual metaphors and text depictions using generative AI models, while users can apply generations to recolour and re-arrange the interface to be visually affective. Our experience-centred evaluation shows that, by interacting with Metamorpheus, users can recall their dreams in vivid detail, through which they relive and reflect upon their experiences in a meaningful way.

"It Felt Like Having a Second Mind": Investigating Human-AI Co-creativity in Prewriting with Large Language Models

Jul 20, 2023

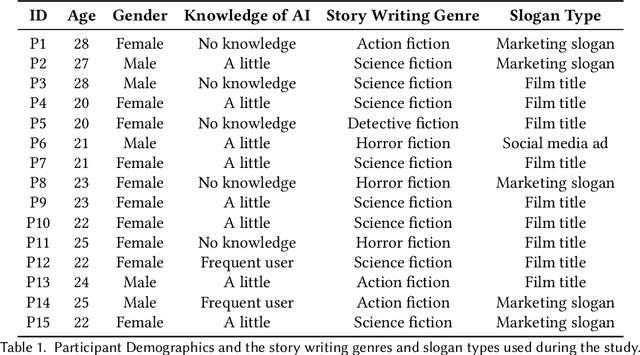

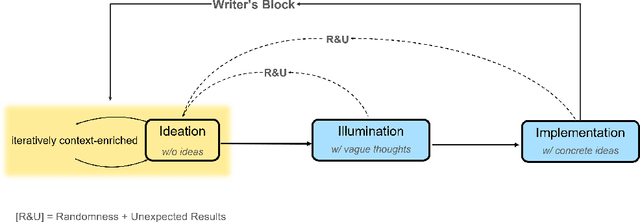

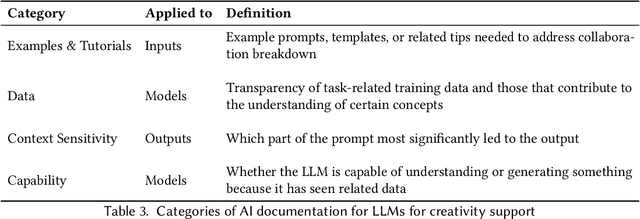

Abstract:Prewriting is the process of discovering and developing ideas before a first draft, which requires divergent thinking and often implies unstructured strategies such as diagramming, outlining, free-writing, etc. Although large language models (LLMs) have been demonstrated to be useful for a variety of tasks including creative writing, little is known about how users would collaborate with LLMs to support prewriting. The preferred collaborative role and initiative of LLMs during such a creativity process is also unclear. To investigate human-LLM collaboration patterns and dynamics during prewriting, we conducted a three-session qualitative study with 15 participants in two creative tasks: story writing and slogan writing. The findings indicated that during collaborative prewriting, there appears to be a three-stage iterative Human-AI Co-creativity process that includes Ideation, Illumination, and Implementation stages. This collaborative process champions the human in a dominant role, in addition to mixed and shifting levels of initiative that exist between humans and LLMs. This research also reports on collaboration breakdowns that occur during this process, user perceptions of using existing LLMs during Human-AI Co-creativity, and discusses design implications to support this co-creativity process.

Practitioners Versus Users: A Value-Sensitive Evaluation of Current Industrial Recommender System Design

Aug 08, 2022

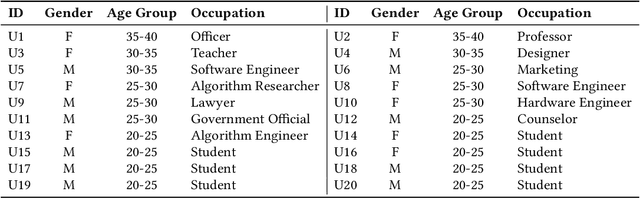

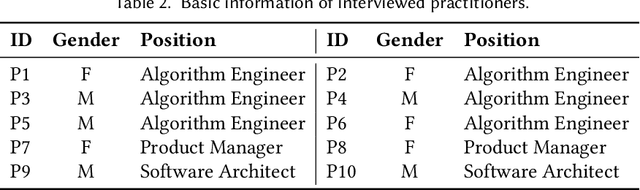

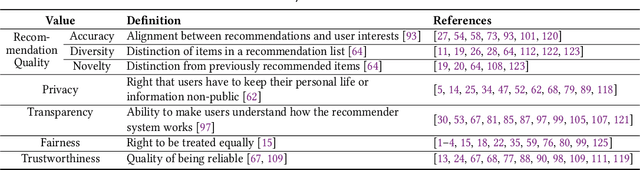

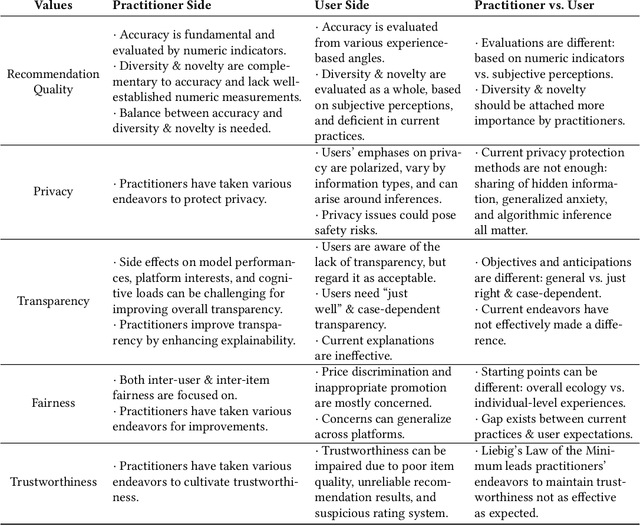

Abstract:Recommender systems are playing an increasingly important role in alleviating information overload and supporting users' various needs, e.g., consumption, socialization, and entertainment. However, limited research focuses on how values should be considered in industrial deployments of recommender systems, and neglecting them can be problematic. To fill this gap, in this paper, we adopt Value Sensitive Design to comprehensively explore how practitioners and users recognize different values of current industrial recommender systems. Based on conceptual and empirical investigations, we focus on five values: recommendation quality, privacy, transparency, fairness, and trustworthiness. We further conduct in-depth qualitative interviews with 20 users and 10 practitioners to delve into their opinions towards these values. Our results reveal the existence and sources of tensions between practitioners and users in terms of value interpretation, evaluation, and practice, which provide novel implications for designing more human-centric and value-sensitive recommender systems.
