Zhiqi Gao

Test-time Scaling Techniques in Theoretical Physics -- A Comparison of Methods on the TPBench Dataset

Jun 25, 2025

Abstract: Large language models (LLMs) have shown strong capabilities in complex reasoning, and test-time scaling techniques can enhance their performance at comparatively low cost. Many of these methods have been developed and evaluated on mathematical reasoning benchmarks such as AIME. This paper investigates whether the lessons learned from these benchmarks generalize to the domain of advanced theoretical physics. We evaluate a range of common test-time scaling methods on the TPBench physics dataset and compare their effectiveness with results on AIME. To better leverage the structure of physics problems, we develop a novel symbolic weak-verifier framework to improve parallel scaling results. Our empirical results demonstrate that this method significantly outperforms existing test-time scaling approaches on TPBench. We also evaluate our method on AIME, confirming its effectiveness in solving advanced mathematical problems. Our findings highlight the power of step-wise symbolic verification for tackling complex scientific problems.

Theoretical Physics Benchmark (TPBench) -- a Dataset and Study of AI Reasoning Capabilities in Theoretical Physics

Feb 19, 2025

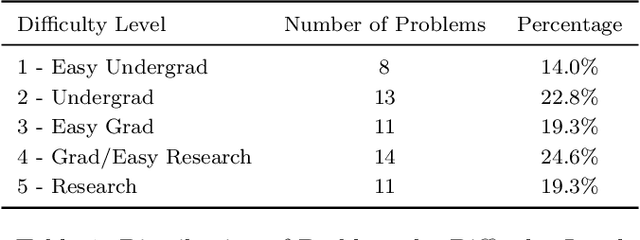

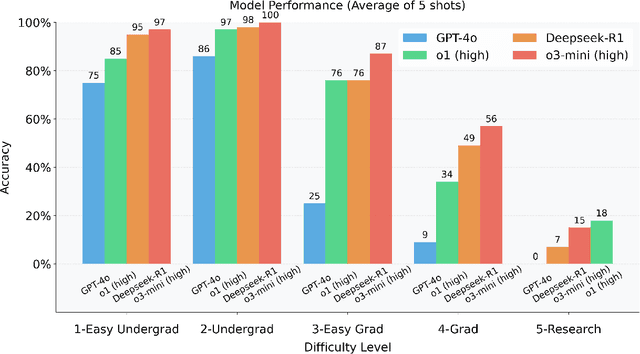

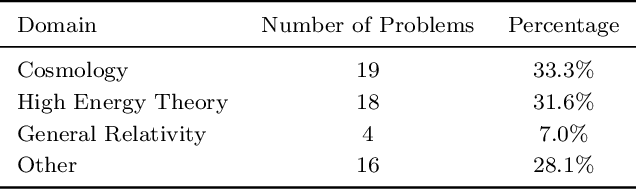

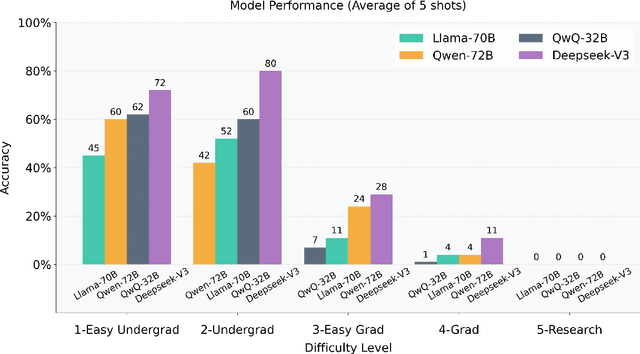

Abstract: We introduce a benchmark to evaluate the capability of AI to solve problems in theoretical physics, focusing on high-energy theory and cosmology. The first iteration of our benchmark consists of 57 problems of varying difficulty, from undergraduate to research level. These problems are novel in the sense that they do not come from public problem collections. We evaluate our data set on various open and closed language models, including o3-mini, o1, DeepSeek-R1, GPT-4o and versions of Llama and Qwen. While we find impressive progress in model performance with the most recent models, our research-level problems remain mostly unsolved. We address challenges of auto-verifiability and grading, and discuss common failure modes. While current state-of-the-art models are still of limited use for researchers, our results show that AI-assisted theoretical physics research may become possible in the near future. We discuss the main obstacles towards this goal and possible strategies to overcome them. The public problems and solutions, results for various models, and updates to the data set and score distribution are available on the dataset website, tpbench.org.

Pretrained Hybrids with MAD Skills

Jun 02, 2024

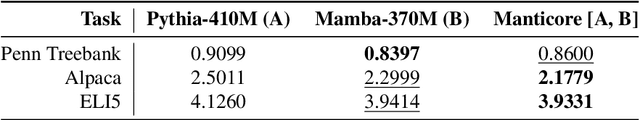

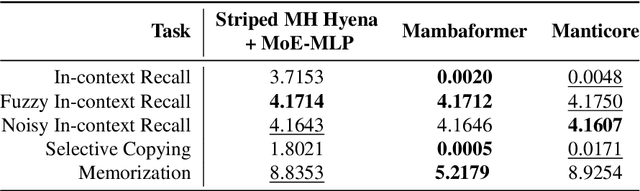

Abstract: While Transformers underpin modern large language models (LMs), there is a growing list of alternative architectures with new capabilities, promises, and tradeoffs. This makes choosing the right LM architecture challenging. Recently proposed $\textit{hybrid architectures}$ seek a best-of-all-worlds approach that reaps the benefits of all architectures. Hybrid design is difficult for two reasons: it requires manual expert-driven search, and new hybrids must be trained from scratch. We propose $\textbf{Manticore}$, a framework that addresses these challenges. Manticore $\textit{automates the design of hybrid architectures}$ while reusing pretrained models to create $\textit{pretrained}$ hybrids. Our approach augments ideas from differentiable Neural Architecture Search (NAS) by incorporating simple projectors that translate features between pretrained blocks from different architectures. We then fine-tune hybrids that combine pretrained models from different architecture families -- such as the GPT series and Mamba -- end-to-end. With Manticore, we enable LM selection without training multiple models, the construction of pretrained hybrids from existing pretrained models, and the ability to $\textit{program}$ pretrained hybrids to have certain capabilities. Manticore hybrids outperform existing manually designed hybrids, achieve strong performance on Long Range Arena (LRA) tasks, and can improve on pretrained transformers and state space models.

Metamorpheus: Interactive, Affective, and Creative Dream Narration Through Metaphorical Visual Storytelling

Mar 01, 2024

Abstract: Human emotions are essentially molded by lived experiences, from which we construct personalised meaning. Engagement in this meaning-making process has been practiced as an intervention in various psychotherapies to promote wellness. Nevertheless, supporting the recollection and recounting of lived experiences in everyday life remains underexplored in HCI. It also remains unknown how technologies such as generative AI models can facilitate the meaning-making process and ultimately support affective mindfulness. In this paper we present Metamorpheus, an affective interface that engages users in creative visual storytelling of emotional experiences during dreams. Metamorpheus arranges the storyline based on a dream's emotional arc, and provokes self-reflection through the creation of metaphorical images and text depictions. The system provides metaphor suggestions, and generates visual metaphors and text depictions using generative AI models, while users can apply generations to recolour and re-arrange the interface to be visually affective. Our experience-centred evaluation shows that, by interacting with Metamorpheus, users can recall their dreams in vivid detail, through which they relive and reflect upon their experiences in a meaningful way.
