Zhanjie Zhang

LMMRec: LLM-driven Motivation-aware Multimodal Recommendation

Feb 05, 2026

Abstract:Motivation-based recommendation systems uncover user behavior drivers. Motivation modeling, crucial for decision-making and content preference, explains recommendation generation. Existing methods often treat motivation as latent variables from interaction data, neglecting heterogeneous information like review text. In multimodal motivation fusion, two challenges arise: 1) achieving stable cross-modal alignment amid noise, and 2) identifying features reflecting the same underlying motivation across modalities. To address these, we propose LLM-driven Motivation-aware Multimodal Recommendation (LMMRec), a model-agnostic framework leveraging large language models for deep semantic priors and motivation understanding. LMMRec uses chain-of-thought prompting to extract fine-grained user and item motivations from text. A dual-encoder architecture models textual and interaction-based motivations for cross-modal alignment, while Motivation Coordination Strategy and Interaction-Text Correspondence Method mitigate noise and semantic drift through contrastive learning and momentum updates. Experiments on three datasets show LMMRec achieves up to a 4.98% performance improvement.
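The contrastive alignment and momentum updates mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not state the loss or the update rule, so the InfoNCE loss, the temperature `tau`, the momentum coefficient `m`, and every function name below are assumptions chosen for illustration rather than LMMRec's actual implementation.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def info_nce(interaction_embs, text_embs, tau=0.2):
    """Average InfoNCE loss; row i of each list is treated as a positive pair."""
    inter = [normalize(v) for v in interaction_embs]
    text = [normalize(v) for v in text_embs]
    total = 0.0
    for i, anchor in enumerate(inter):
        # Cosine similarity of the anchor to every text embedding, scaled by tau.
        logits = [dot(anchor, t) / tau for t in text]
        log_denominator = math.log(sum(math.exp(l) for l in logits))
        total += -(logits[i] - log_denominator)
    return total / len(inter)


def momentum_update(target_params, online_params, m=0.99):
    """Exponential moving average: target <- m * target + (1 - m) * online."""
    return [m * t + (1.0 - m) * o for t, o in zip(target_params, online_params)]


# Toy embeddings (assumed): matched pairs versus deliberately mismatched pairs.
aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

In this toy setup the loss is lower when each interaction embedding is paired with its own text embedding, which is the behavior a cross-modal alignment objective of this kind is meant to reward.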

StyMam: A Mamba-Based Generator for Artistic Style Transfer

Jan 19, 2026

Abstract:Image style transfer aims to integrate the visual patterns of a specific artistic style into a content image while preserving its content structure. Existing methods mainly rely on the generative adversarial network (GAN) or stable diffusion (SD). GAN-based approaches using CNNs or Transformers struggle to jointly capture local and global dependencies, leading to artifacts and disharmonious patterns. SD-based methods reduce such issues but often fail to preserve content structures and suffer from slow inference. To address these issues, we revisit GAN and propose a Mamba-based generator, termed StyMam, to produce high-quality stylized images without introducing artifacts and disharmonious patterns. Specifically, the generator combines a residual dual-path strip scanning mechanism with a channel-reweighted spatial attention module. The former efficiently captures local texture features, while the latter models global dependencies. Finally, extensive qualitative and quantitative experiments demonstrate that the proposed method outperforms state-of-the-art algorithms in both quality and speed.

MoFu: Scale-Aware Modulation and Fourier Fusion for Multi-Subject Video Generation

Dec 26, 2025

Abstract:Multi-subject video generation aims to synthesize videos from textual prompts and multiple reference images, ensuring that each subject preserves natural scale and visual fidelity. However, current methods face two challenges: scale inconsistency, where variations in subject size lead to unnatural generation, and permutation sensitivity, where the order of reference inputs causes subject distortion. In this paper, we propose MoFu, a unified framework that tackles both challenges. For scale inconsistency, we introduce Scale-Aware Modulation (SMO), an LLM-guided module that extracts implicit scale cues from the prompt and modulates features to ensure consistent subject sizes. To address permutation sensitivity, we present a simple yet effective Fourier Fusion strategy that processes the frequency information of reference features via the Fast Fourier Transform to produce a unified representation. In addition, we design a Scale-Permutation Stability Loss to jointly encourage scale-consistent and permutation-invariant generation. To further evaluate these challenges, we establish a dedicated benchmark with controlled variations in subject scale and reference permutation. Extensive experiments demonstrate that MoFu significantly outperforms existing methods in preserving natural scale, subject fidelity, and overall visual quality.
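The idea of fusing reference features in the frequency domain so that input order does not matter can be sketched as follows. The abstract does not say how the frequency information is combined, so averaging amplitude spectra is purely an assumption here, as are the function names and the toy feature vectors. Note that the order independence in this sketch comes from the symmetric average, not from the Fourier transform itself.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform of a real-valued sequence."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


def fuse_amplitudes(reference_features):
    """Average the amplitude spectra of all references, one value per frequency bin."""
    spectra = [[abs(c) for c in dft(f)] for f in reference_features]
    count = len(spectra)
    return [sum(bin_values) / count for bin_values in zip(*spectra)]


# Three toy reference feature vectors (assumed), fused in two different orders.
refs = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, -1.0], [2.0, 2.0, 2.0, 2.0]]
fused = fuse_amplitudes(refs)
fused_reordered = fuse_amplitudes([refs[2], refs[0], refs[1]])
```

Both calls yield the same fused vector up to floating-point rounding, which is the permutation-invariance property the abstract targets.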

Lay2Story: Extending Diffusion Transformers for Layout-Togglable Story Generation

Aug 12, 2025

Abstract:Storytelling tasks involving generating consistent subjects have gained significant attention recently. However, existing methods, whether training-free or training-based, continue to face challenges in maintaining subject consistency due to the lack of fine-grained guidance and inter-frame interaction. Additionally, the scarcity of high-quality data in this field makes it difficult to precisely control storytelling tasks, including the subject's position, appearance, clothing, expression, and posture, thereby hindering further advancements. In this paper, we demonstrate that layout conditions, such as the subject's position and detailed attributes, effectively facilitate fine-grained interactions between frames. This not only strengthens the consistency of the generated frame sequence but also allows for precise control over the subject's position, appearance, and other key details. Building on this, we introduce an advanced storytelling task: Layout-Togglable Storytelling, which enables precise subject control by incorporating layout conditions. To address the lack of high-quality datasets with layout annotations for this task, we develop Lay2Story-1M, which contains over 1 million 720p and higher-resolution images, processed from approximately 11,300 hours of cartoon videos. Building on Lay2Story-1M, we create Lay2Story-Bench, a benchmark with 3,000 prompts designed to evaluate the performance of different methods on this task. Furthermore, we propose Lay2Story, a robust framework based on the Diffusion Transformers (DiTs) architecture for Layout-Togglable Storytelling tasks. Through both qualitative and quantitative experiments, we find that our method outperforms the previous state-of-the-art (SOTA) techniques, achieving the best results in terms of consistency, semantic correlation, and aesthetic quality.

SPAST: Arbitrary Style Transfer with Style Priors via Pre-trained Large-scale Model

May 13, 2025

Abstract:Given an arbitrary content image and style image, arbitrary style transfer aims to render a new stylized image that preserves the content image's structure and adopts the style image's style. Existing arbitrary style transfer methods are based on either small models or pre-trained large-scale models. The small model-based methods fail to generate high-quality stylized images, producing artifacts and disharmonious patterns. The pre-trained large-scale model-based methods can generate high-quality stylized images but struggle to preserve the content structure and incur long inference times. To this end, we propose a new framework, called SPAST, to generate high-quality stylized images with less inference time. Specifically, we design a novel Local-global Window Size Stylization Module (LGWSSM) to fuse style features into content features. In addition, we introduce a novel style prior loss, which transfers the style priors of a pre-trained large-scale model into SPAST and encourages SPAST to generate high-quality stylized images with short inference time. We conduct extensive experiments to verify that our proposed method generates high-quality stylized images with less inference time than the SOTA arbitrary style transfer methods.

U-StyDiT: Ultra-high Quality Artistic Style Transfer Using Diffusion Transformers

Mar 11, 2025

Abstract:Ultra-high quality artistic style transfer refers to repainting an ultra-high quality content image using the style information learned from the style image. Existing artistic style transfer methods can be categorized into style reconstruction-based and content-style disentanglement-based style transfer approaches. Although these methods can generate some artistic stylized images, they still exhibit obvious artifacts and disharmonious patterns, which hinder their ability to produce ultra-high quality artistic stylized images. To address these issues, we propose a novel artistic image style transfer method, U-StyDiT, which is built on transformer-based diffusion (DiT) and learns content-style disentanglement, generating ultra-high quality artistic stylized images. Specifically, we first design a Multi-view Style Modulator (MSM) to learn style information from a style image from local and global perspectives, conditioning U-StyDiT to generate stylized images with the learned style information. Then, we introduce a StyDiT Block to learn content and style conditions simultaneously from a style image. Additionally, we propose an ultra-high quality artistic image dataset, Aes4M, comprising 10 categories, each containing 400,000 style images. This dataset effectively solves the problem that the existing style transfer methods cannot produce high-quality artistic stylized images due to the size of the dataset and the quality of the images in the dataset. Finally, the extensive qualitative and quantitative experiments validate that our U-StyDiT can create higher quality stylized images compared to state-of-the-art artistic style transfer methods. To our knowledge, our proposed method is the first to address the generation of ultra-high quality stylized images using transformer-based diffusion.

WISA: World Simulator Assistant for Physics-Aware Text-to-Video Generation

Mar 11, 2025

Abstract:Recent rapid advancements in text-to-video (T2V) generation, such as Sora and Kling, have shown great potential for building world simulators. However, current T2V models struggle to grasp abstract physical principles and generate videos that adhere to physical laws. This challenge arises primarily from a lack of clear guidance on physical information due to a significant gap between abstract physical principles and generation models. To this end, we introduce the World Simulator Assistant (WISA), an effective framework for decomposing and incorporating physical principles into T2V models. Specifically, WISA decomposes physical principles into textual physical descriptions, qualitative physical categories, and quantitative physical properties. To effectively embed these physical attributes into the generation process, WISA incorporates several key designs, including Mixture-of-Physical-Experts Attention (MoPA) and a Physical Classifier, enhancing the model's physics awareness. Furthermore, most existing datasets feature videos where physical phenomena are either weakly represented or entangled with multiple co-occurring processes, limiting their suitability as dedicated resources for learning explicit physical principles. We propose a novel video dataset, WISA-32K, collected based on qualitative physical categories. It consists of 32,000 videos, representing 17 physical laws across three domains of physics: dynamics, thermodynamics, and optics. Experimental results demonstrate that WISA can effectively enhance the compatibility of T2V models with real-world physical laws, achieving a considerable improvement on the VideoPhy benchmark. Visual examples of WISA and WISA-32K are available at https://360cvgroup.github.io/WISA/.

DyArtbank: Diverse Artistic Style Transfer via Pre-trained Stable Diffusion and Dynamic Style Prompt Artbank

Mar 11, 2025

Abstract:Artistic style transfer aims to transfer the learned style onto an arbitrary content image. However, most existing style transfer methods can only render consistent artistic stylized images, making it difficult for users to get enough stylized images to enjoy. To solve this issue, we propose a novel artistic style transfer framework called DyArtbank, which can generate diverse and highly realistic artistic stylized images. Specifically, we introduce a Dynamic Style Prompt ArtBank (DSPA), a set of learnable parameters. It can learn and store the style information from the collection of artworks, dynamically guiding pre-trained stable diffusion to generate diverse and highly realistic artistic stylized images. DSPA can also generate random artistic image samples with the learned style information, providing a new idea for data augmentation. Besides, a Key Content Feature Prompt (KCFP) module is proposed to provide sufficient content prompts for pre-trained stable diffusion to preserve the detailed structure of the input content image. Extensive qualitative and quantitative experiments verify the effectiveness of our proposed method. Code is available: https://github.com/Jamie-Cheung/DyArtbank

RelaCtrl: Relevance-Guided Efficient Control for Diffusion Transformers

Feb 21, 2025

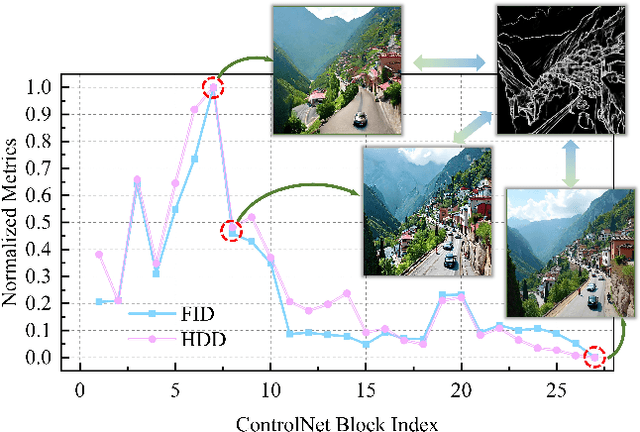

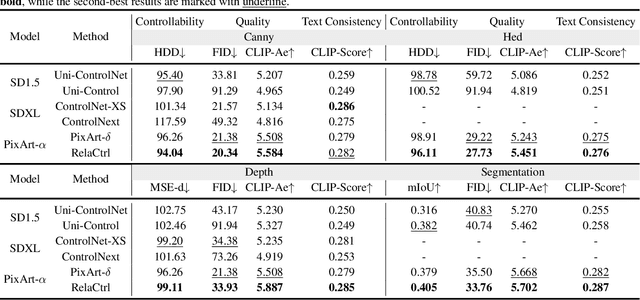

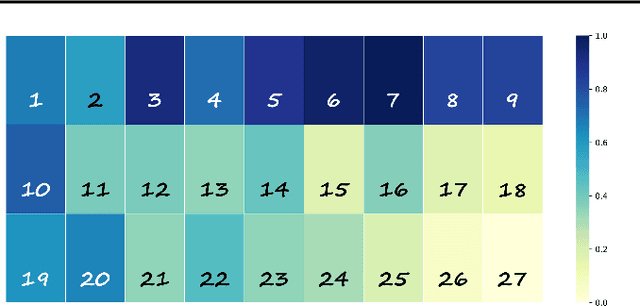

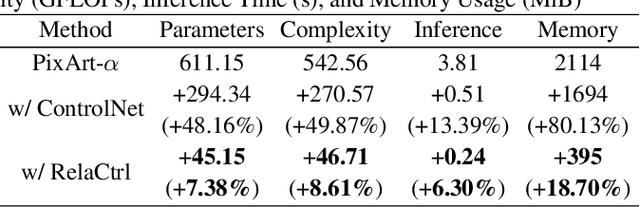

Abstract:The Diffusion Transformer plays a pivotal role in advancing text-to-image and text-to-video generation, owing primarily to its inherent scalability. However, existing controlled diffusion transformer methods incur significant parameter and computational overheads and suffer from inefficient resource allocation due to their failure to account for the varying relevance of control information across different transformer layers. To address this, we propose the Relevance-Guided Efficient Controllable Generation framework, RelaCtrl, enabling efficient and resource-optimized integration of control signals into the Diffusion Transformer. First, we evaluate the relevance of each layer in the Diffusion Transformer to the control information by assessing the "ControlNet Relevance Score", i.e., the impact of skipping each control layer on both the quality of generation and the control effectiveness during inference. Based on the strength of the relevance, we then tailor the positioning, parameter scale, and modeling capacity of the control layers to reduce unnecessary parameters and redundant computations. Additionally, to further improve efficiency, we replace the self-attention and FFN in the commonly used copy block with the carefully designed Two-Dimensional Shuffle Mixer (TDSM), enabling efficient implementation of both the token mixer and channel mixer. Both qualitative and quantitative experimental results demonstrate that our approach achieves superior performance with only 15% of the parameters and computational complexity compared to PixArt-delta.
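The skip-one-layer relevance measurement described in this abstract can be sketched as a simple ablation loop. This is only an illustration under stated assumptions: the abstract does not define how generation quality and control effectiveness are combined into one score, so `evaluate` is a hypothetical callback returning a single number (higher is better), and `TOY_CONTRIBUTION` is invented toy data standing in for a real evaluation run.

```python
def relevance_scores(num_layers, evaluate):
    """Relevance of layer i = metric drop when only layer i is skipped."""
    all_layers = frozenset(range(num_layers))
    baseline = evaluate(all_layers)
    return [baseline - evaluate(all_layers - {i}) for i in range(num_layers)]


# Hypothetical stand-in for a real evaluation: each control layer adds a
# fixed amount to the metric when it is active.
TOY_CONTRIBUTION = [0.05, 0.30, 0.20, 0.02]


def toy_evaluate(active_layers):
    return sum(TOY_CONTRIBUTION[i] for i in active_layers)


scores = relevance_scores(len(TOY_CONTRIBUTION), toy_evaluate)
# Layers ordered from most to least relevant.
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
```

A ranking of this kind is what would then inform where control layers are placed and how much capacity each one receives.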

Rethink Arbitrary Style Transfer with Transformer and Contrastive Learning

Apr 21, 2024

Abstract:Arbitrary style transfer has attracted widespread research attention and has numerous practical applications. The existing methods, which either employ cross-attention to incorporate deep style attributes into content attributes or use adaptive normalization to adjust content features, fail to generate high-quality stylized images. In this paper, we introduce an innovative technique to improve the quality of stylized images. Firstly, we propose Style Consistency Instance Normalization (SCIN), a method to refine the alignment between content and style features. In addition, we have developed an Instance-based Contrastive Learning (ICL) approach designed to understand the relationships among various styles, thereby enhancing the quality of the resulting stylized images. Recognizing that VGG networks are better at extracting classification features than at capturing style features, we have also introduced the Perception Encoder (PE) to capture style features. Extensive experiments demonstrate that our proposed method generates high-quality stylized images and effectively prevents artifacts compared with the existing state-of-the-art methods.
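For context on the adaptive normalization baseline that this abstract contrasts with, the sketch below shows adaptive instance normalization (AdaIN), one common form of that idea, applied to a single feature channel. It is not the proposed SCIN, whose formulation the abstract does not give. The function names, the `eps` value, and the toy channel values are assumptions.

```python
import math


def mean_std(values, eps=1e-5):
    """Mean and standard deviation of one channel; eps guards against zero variance."""
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / len(values)
    return mu, math.sqrt(var + eps)


def adain_channel(content, style):
    """AdaIN: normalize the content channel, then rescale it to the style statistics."""
    c_mu, c_sigma = mean_std(content)
    s_mu, s_sigma = mean_std(style)
    return [s_sigma * (c - c_mu) / c_sigma + s_mu for c in content]


# Toy single-channel activations (assumed): the output keeps the ordering of
# the content values but takes on the mean and spread of the style values.
stylized = adain_channel([0.0, 1.0, 2.0, 3.0], [10.0, 10.0, 14.0, 14.0])
```

After the transform, the channel's mean and standard deviation match those of the style channel, while the relative layout of the content values is preserved.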
