Quanwei Zhang

Lay2Story: Extending Diffusion Transformers for Layout-Togglable Story Generation

Aug 12, 2025

Abstract: Storytelling tasks involving generating consistent subjects have gained significant attention recently. However, existing methods, whether training-free or training-based, continue to face challenges in maintaining subject consistency due to the lack of fine-grained guidance and inter-frame interaction. Additionally, the scarcity of high-quality data in this field makes it difficult to precisely control storytelling tasks, including the subject's position, appearance, clothing, expression, and posture, thereby hindering further advancements. In this paper, we demonstrate that layout conditions, such as the subject's position and detailed attributes, effectively facilitate fine-grained interactions between frames. This not only strengthens the consistency of the generated frame sequence but also allows for precise control over the subject's position, appearance, and other key details. Building on this, we introduce an advanced storytelling task: Layout-Togglable Storytelling, which enables precise subject control by incorporating layout conditions. To address the lack of high-quality datasets with layout annotations for this task, we develop Lay2Story-1M, which contains over 1 million 720p and higher-resolution images, processed from approximately 11,300 hours of cartoon videos. Building on Lay2Story-1M, we create Lay2Story-Bench, a benchmark with 3,000 prompts designed to evaluate the performance of different methods on this task. Furthermore, we propose Lay2Story, a robust framework based on the Diffusion Transformers (DiTs) architecture for Layout-Togglable Storytelling tasks. Through both qualitative and quantitative experiments, we find that our method outperforms the previous state-of-the-art (SOTA) techniques, achieving the best results in terms of consistency, semantic correlation, and aesthetic quality.

SPAST: Arbitrary Style Transfer with Style Priors via Pre-trained Large-scale Model

May 13, 2025

Abstract: Given an arbitrary content image and style image, arbitrary style transfer aims to render a new stylized image that preserves the content image's structure and possesses the style image's style. Existing arbitrary style transfer methods are based on either small models or pre-trained large-scale models. The small model-based methods fail to generate high-quality stylized images, introducing artifacts and disharmonious patterns. The pre-trained large-scale model-based methods can generate high-quality stylized images but struggle to preserve the content structure and incur long inference times. To this end, we propose a new framework, called SPAST, to generate high-quality stylized images with less inference time. Specifically, we design a novel Local-global Window Size Stylization Module (LGWSSM) to fuse style features into content features. Besides, we introduce a novel style prior loss, which can dig out the style priors from a pre-trained large-scale model into the SPAST and motivate the SPAST to generate high-quality stylized images with short inference time. We conduct abundant experiments to verify that our proposed method generates high-quality stylized images with less inference time than the SOTA arbitrary style transfer methods.

DyArtbank: Diverse Artistic Style Transfer via Pre-trained Stable Diffusion and Dynamic Style Prompt Artbank

Mar 11, 2025

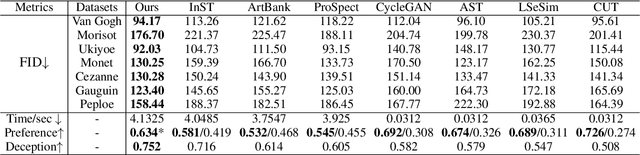

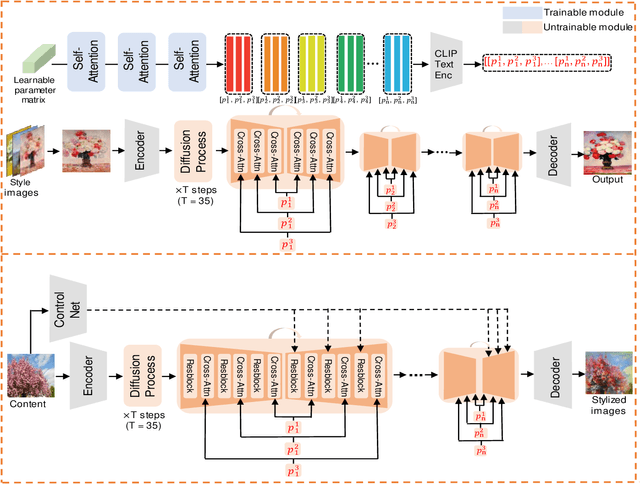

Abstract: Artistic style transfer aims to transfer the learned style onto an arbitrary content image. However, most existing style transfer methods can only render consistent artistic stylized images, making it difficult for users to get enough stylized images to enjoy. To solve this issue, we propose a novel artistic style transfer framework called DyArtbank, which can generate diverse and highly realistic artistic stylized images. Specifically, we introduce a Dynamic Style Prompt ArtBank (DSPA), a set of learnable parameters. It can learn and store the style information from the collection of artworks, dynamically guiding pre-trained stable diffusion to generate diverse and highly realistic artistic stylized images. DSPA can also generate random artistic image samples with the learned style information, providing a new idea for data augmentation. Besides, a Key Content Feature Prompt (KCFP) module is proposed to provide sufficient content prompts for pre-trained stable diffusion to preserve the detailed structure of the input content image. Extensive qualitative and quantitative experiments verify the effectiveness of our proposed method. Code is available at https://github.com/Jamie-Cheung/DyArtbank

GLCAN: Global-Local Collaborative Auxiliary Network for Local Learning

Jun 01, 2024

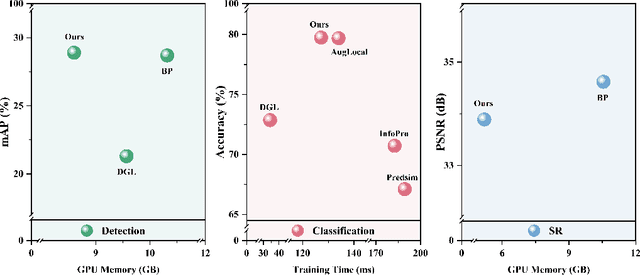

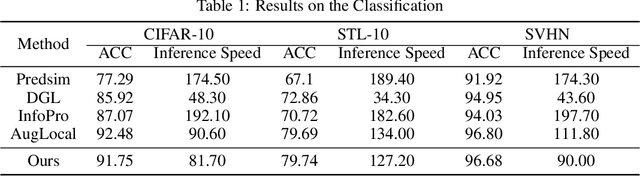

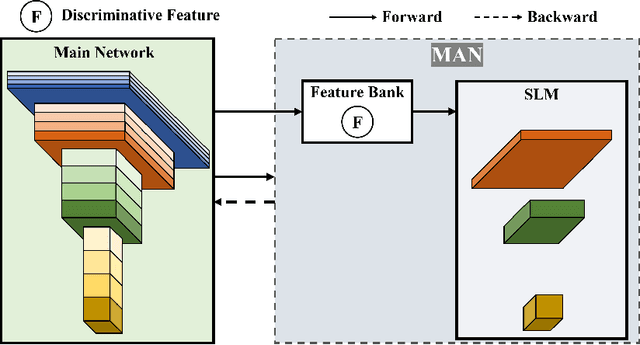

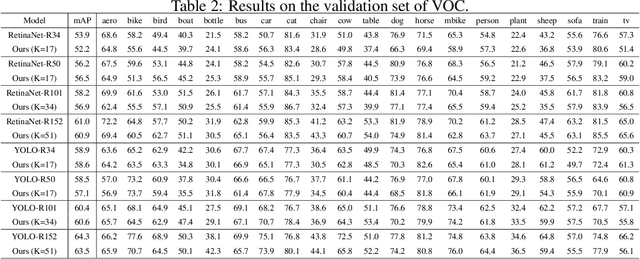

Abstract: Traditional deep neural networks typically use end-to-end backpropagation, which often places a heavy burden on GPU memory. Another promising training method is local learning, which involves splitting the network into blocks and training them in parallel with the help of an auxiliary network. Local learning has been widely studied and applied to image classification tasks, and its performance is comparable to that of end-to-end methods. However, different image tasks often rely on different feature representations, which typical auxiliary networks find difficult to adapt to. To solve this problem, we propose a construction method for the Global-Local Collaborative Auxiliary Network (GLCAN), which provides a macroscopic design approach for auxiliary networks. This is the first demonstration that local learning methods can be successfully applied to other tasks such as object detection and super-resolution. GLCAN not only saves a lot of GPU memory, but also has comparable performance to an end-to-end approach on datasets for multiple different tasks.
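The general idea of local learning described in this abstract can be illustrated with a toy sketch. This is not GLCAN: it is a minimal scalar example, written under the assumption that each block is a single weight, that the first block has a one-weight auxiliary head, and that gradients are computed by hand. The names `train_local`, `w1`, `a1`, and `w2` are hypothetical. The point it shows is that each block is updated only from its own local loss, and the second block treats the first block's output as a constant.

```python
def train_local(data, epochs=200, lr=0.01):
    """Toy gradient-isolated local learning on scalar blocks (illustrative only)."""
    w1 = 0.5  # block 1 weight
    a1 = 0.5  # auxiliary head attached to block 1 (hypothetical, one weight)
    w2 = 0.5  # block 2 weight, which produces the final prediction
    for _ in range(epochs):
        for x, y in data:
            h1 = w1 * x  # block 1 output

            # Block 1 local loss: (a1 * h1 - y)^2, computed via the auxiliary head.
            err1 = a1 * h1 - y
            grad_w1 = 2.0 * err1 * a1 * x
            grad_a1 = 2.0 * err1 * h1

            # Block 2 local loss: (w2 * h1 - y)^2, with h1 treated as a constant,
            # so no gradient flows back into block 1.
            err2 = w2 * h1 - y
            grad_w2 = 2.0 * err2 * h1

            w1 -= lr * grad_w1
            a1 -= lr * grad_a1
            w2 -= lr * grad_w2
    return w1, a1, w2


# Usage: fit y = 2x. Each block is trained only from its own loss.
w1, a1, w2 = train_local([(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)])
```

Because block 2 never sends gradients into block 1, the activations of block 1 do not need to be kept for a global backward pass, which is the source of the memory savings the abstract refers to.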

Rethink Arbitrary Style Transfer with Transformer and Contrastive Learning

Apr 21, 2024

Abstract: Arbitrary style transfer holds widespread attention in research and boasts numerous practical applications. The existing methods, which either employ cross-attention to incorporate deep style attributes into content attributes or use adaptive normalization to adjust content features, fail to generate high-quality stylized images. In this paper, we introduce an innovative technique to improve the quality of stylized images. Firstly, we propose Style Consistency Instance Normalization (SCIN), a method to refine the alignment between content and style features. In addition, we have developed an Instance-based Contrastive Learning (ICL) approach designed to understand the relationships among various styles, thereby enhancing the quality of the resulting stylized images. Recognizing that VGG networks are more adept at extracting classification features and are less suited to capturing style features, we have also introduced the Perception Encoder (PE) to capture style features. Extensive experiments demonstrate that our proposed method generates high-quality stylized images and effectively prevents artifacts compared with the existing state-of-the-art methods.
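For readers unfamiliar with the "adaptive normalization" baseline this abstract contrasts itself with, the following is a minimal sketch of generic adaptive instance normalization on a single feature channel. It is not SCIN, whose details are not given here. The function name `adain_channel` and the `eps` value are assumptions made for illustration; the sketch simply re-scales the content channel so that its mean and standard deviation match those of the style channel.

```python
from statistics import fmean, pstdev


def adain_channel(content, style, eps=1e-5):
    """Align one content feature channel to the style channel's statistics.

    content, style: flat lists of floats for a single channel (illustrative).
    eps: small constant (assumed value) that avoids division by zero.
    """
    c_mean, c_std = fmean(content), pstdev(content)
    s_mean, s_std = fmean(style), pstdev(style)
    # Normalize the content values, then apply the style scale and shift.
    return [s_std * (v - c_mean) / (c_std + eps) + s_mean for v in content]


# Usage: the output keeps the content's ordering but adopts the style's statistics.
stylized = adain_channel([1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0])
```

In practice this operation is applied per channel and per image on encoder feature maps; the flat-list form above is only meant to make the statistics matching explicit.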

Towards Highly Realistic Artistic Style Transfer via Stable Diffusion with Step-aware and Layer-aware Prompt

Apr 17, 2024

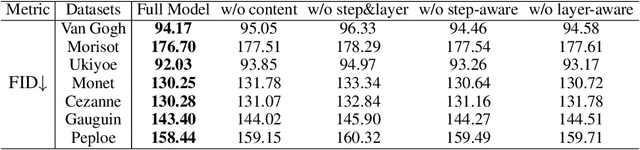

Abstract: Artistic style transfer aims to transfer the learned artistic style onto an arbitrary content image, generating artistic stylized images. Existing generative adversarial network-based methods fail to generate highly realistic stylized images and always introduce obvious artifacts and disharmonious patterns. Recently, large-scale pre-trained diffusion models opened up a new way for generating highly realistic artistic stylized images. However, diffusion model-based methods generally fail to preserve the content structure of input content images well, introducing some undesired content structure and style patterns. To address the above problems, we propose a novel pre-trained diffusion-based artistic style transfer method, called LSAST, which can generate highly realistic artistic stylized images while preserving the content structure of input content images well, without bringing obvious artifacts and disharmonious style patterns. Specifically, we introduce a Step-aware and Layer-aware Prompt Space, a set of learnable prompts, which can learn the style information from the collection of artworks and dynamically adjust the input images' content structure and style pattern. To train our prompt space, we propose a novel inversion method, called Step-aware and Layer-aware Prompt Inversion, which allows the prompt space to learn the style information of the artworks collection. In addition, we inject a pre-trained conditional branch of ControlNet into our LSAST, which further improves our framework's ability to maintain content structure. Extensive experiments demonstrate that our proposed method can generate more realistic artistic stylized images than the state-of-the-art artistic style transfer methods.

ArtBank: Artistic Style Transfer with Pre-trained Diffusion Model and Implicit Style Prompt Bank

Dec 11, 2023

Abstract: Artistic style transfer aims to repaint the content image with the learned artistic style. Existing artistic style transfer methods can be divided into two categories: small model-based approaches and pre-trained large-scale model-based approaches. Small model-based approaches can preserve the content structure, but fail to produce highly realistic stylized images and introduce artifacts and disharmonious patterns; pre-trained large-scale model-based approaches can generate highly realistic stylized images but struggle with preserving the content structure. To address the above issues, we propose ArtBank, a novel artistic style transfer framework, to generate highly realistic stylized images while preserving the content structure of the content images. Specifically, to sufficiently dig out the knowledge embedded in pre-trained large-scale models, an Implicit Style Prompt Bank (ISPB), a set of trainable parameter matrices, is designed to learn and store knowledge from the collection of artworks and behave as a visual prompt to guide pre-trained large-scale models to generate highly realistic stylized images while preserving content structure. Besides, to accelerate training the above ISPB, we propose a novel Spatial-Statistical-based self-Attention Module (SSAM). The qualitative and quantitative experiments demonstrate the superiority of our proposed method over state-of-the-art artistic style transfer methods.
