Zeyun Yu

Department of Computer Science, University of Wisconsin-Milwaukee, Milwaukee, WI, USA

Wound Tissue Segmentation in Diabetic Foot Ulcer Images Using Deep Learning: A Pilot Study

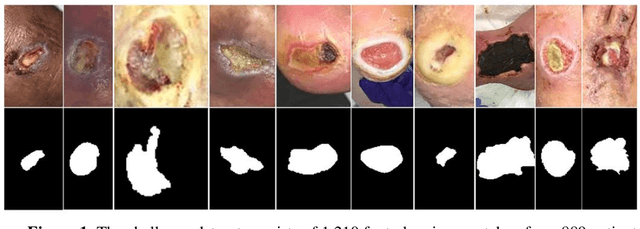

Jun 23, 2024

Abstract:Identifying individual tissues, so-called tissue segmentation, in diabetic foot ulcer (DFU) images is a challenging task and little work has been published, largely due to the limited availability of a clinical image dataset. To address this gap, we have created a DFUTissue dataset for the research community to evaluate wound tissue segmentation algorithms. The dataset contains 110 images with tissues labeled by wound experts and 600 unlabeled images. Additionally, we conducted a pilot study on segmenting wound characteristics including fibrin, granulation, and callus using deep learning. Due to the limited amount of annotated data, our framework consists of both supervised learning (SL) and semi-supervised learning (SSL) phases. In the SL phase, we propose a hybrid model featuring a Mix Transformer (MiT-b3) in the encoder and a CNN in the decoder, enhanced by the integration of a parallel spatial and channel squeeze-and-excitation (P-scSE) module known for its efficacy in improving boundary accuracy. The SSL phase employs a pseudo-labeling-based approach, iteratively identifying and incorporating valuable unlabeled images to enhance overall segmentation performance. Comparative evaluations with state-of-the-art methods are conducted for both SL and SSL phases. The SL phase achieves a Dice Similarity Coefficient (DSC) of 84.89%, which improves to 87.64% in the SSL phase. Furthermore, the results are benchmarked against two widely used SSL approaches: Generative Adversarial Networks and Cross-Consistency Training. Additionally, our hybrid model outperforms the state-of-the-art methods with a 92.99% DSC in performing binary segmentation of DFU wound areas when tested on the Chronic Wound dataset. Code and data are available at https://github.com/uwm-bigdata/DFUTissueSegNet.

A Deep Learning Approach to Teeth Segmentation and Orientation from Panoramic X-rays

Oct 26, 2023

Abstract:Accurate teeth segmentation and orientation are fundamental in modern oral healthcare, enabling precise diagnosis, treatment planning, and dental implant design. In this study, we present a comprehensive approach to teeth segmentation and orientation from panoramic X-ray images, leveraging deep learning techniques. We base our model on FUSegNet, a popular model originally developed for wound segmentation, and modify it by incorporating grid-based attention gates into the skip connections. We introduce oriented bounding box (OBB) generation through principal component analysis (PCA) for precise tooth orientation estimation. Evaluating our approach on the publicly available DNS dataset, comprising 543 panoramic X-ray images, we achieve the highest Intersection-over-Union (IoU) score of 82.43% and Dice Similarity Coefficient (DSC) score of 90.37% among compared models in teeth instance segmentation. In OBB analysis, we obtain a Rotated IoU (RIoU) score of 82.82%. We also conduct detailed analyses of individual tooth labels and categorical performance, shedding light on strengths and weaknesses. The proposed model's accuracy and versatility offer promising prospects for improving dental diagnoses, treatment planning, and personalized healthcare in the oral domain. Our generated OBB coordinates and code are available at https://github.com/mrinal054/Instance_teeth_segmentation.
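
The abstract above mentions generating oriented bounding boxes through PCA. The following is a minimal sketch of that general idea, not the authors' implementation: it assumes the input is a list of 2D pixel coordinates belonging to one tooth mask, and the function name `oriented_bounding_box` is hypothetical. For a 2x2 covariance matrix the principal-axis angle has a closed form, so only the standard library is needed.

```python
import math


def oriented_bounding_box(points):
    """Return (corners, angle_deg) of a PCA-aligned box around 2D points.

    `points` is a list of (x, y) tuples; this input format is an assumption
    made for illustration.
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n

    # Entries of the 2x2 covariance matrix of the point cloud.
    cxx = sum((x - mx) ** 2 for x, _ in points) / n
    cyy = sum((y - my) ** 2 for _, y in points) / n
    cxy = sum((x - mx) * (y - my) for x, y in points) / n

    # Closed-form angle of the first principal axis (largest variance).
    theta = 0.5 * math.atan2(2.0 * cxy, cxx - cyy)
    ux, uy = math.cos(theta), math.sin(theta)  # major axis
    vx, vy = -uy, ux                           # minor axis (perpendicular)

    # Project the centered points onto both axes and take the extents.
    a = [(x - mx) * ux + (y - my) * uy for x, y in points]
    b = [(x - mx) * vx + (y - my) * vy for x, y in points]
    extents = ((min(a), min(b)), (max(a), min(b)),
               (max(a), max(b)), (min(a), max(b)))

    # Map the four extent combinations back to image coordinates.
    corners = [(mx + p * ux + q * vx, my + p * uy + q * vy)
               for p, q in extents]
    return corners, math.degrees(theta)
```

For points lying along a diagonal line, the returned angle is 45 degrees; for an axis-aligned rectangle, the box coincides with the rectangle itself.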

Integrated Image and Location Analysis for Wound Classification: A Deep Learning Approach

Aug 24, 2023

Abstract:The global burden of acute and chronic wounds presents a compelling case for enhancing wound classification methods, a vital step in diagnosing and determining optimal treatments. Recognizing this need, we introduce an innovative multi-modal network based on a deep convolutional neural network for categorizing wounds into four categories: diabetic, pressure, surgical, and venous ulcers. Our multi-modal network uses wound images and their corresponding body locations for more precise classification. A unique aspect of our methodology is incorporating a body map system that facilitates accurate wound location tagging, improving upon traditional wound image classification techniques. A distinctive feature of our approach is the integration of models such as VGG16, ResNet152, and EfficientNet within a novel architecture. This architecture includes elements like spatial and channel-wise Squeeze-and-Excitation modules, Axial Attention, and an Adaptive Gated Multi-Layer Perceptron, providing a robust foundation for classification. Our multi-modal network was trained and evaluated on two distinct datasets comprising relevant images and corresponding location information. Notably, our proposed network outperformed traditional methods, reaching an accuracy range of 74.79% to 100% for Region of Interest (ROI) without location classifications, 73.98% to 100% for ROI with location classifications, and 78.10% to 100% for whole image classifications. This marks a significant enhancement over previously reported performance metrics in the literature. Our results indicate the potential of our multi-modal network as an effective decision-support tool for wound image classification, paving the way for its application in various clinical contexts.

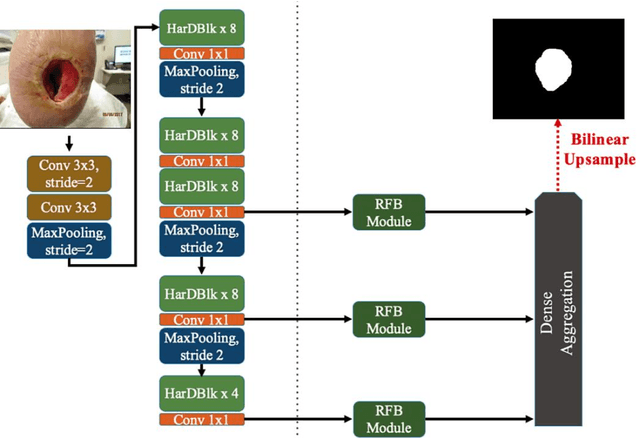

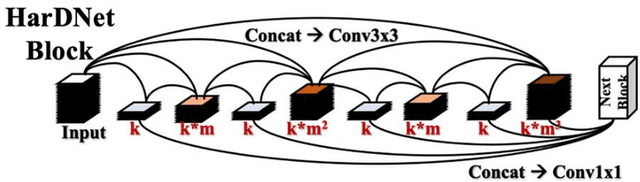

FUSegNet: A Deep Convolutional Neural Network for Foot Ulcer Segmentation

May 04, 2023

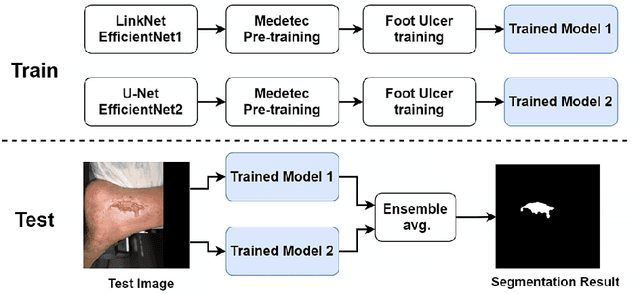

Abstract:This paper presents FUSegNet, a new model for foot ulcer segmentation in diabetes patients, which uses the pre-trained EfficientNet-b7 as a backbone to address the issue of limited training samples. A modified spatial and channel squeeze-and-excitation (scSE) module called parallel scSE or P-scSE is proposed that combines additive and max-out scSE. A new arrangement is introduced for the module by fusing it in the middle of each decoder stage. As the top decoder stage carries a limited number of feature maps, max-out scSE is bypassed there to form a shorted P-scSE. A set of augmentations, comprising geometric, morphological, and intensity-based augmentations, is applied before feeding the data into the network. The proposed model is first evaluated on a publicly available chronic wound dataset where it achieves a data-based dice score of 92.70%, which is the highest score among the reported approaches. The model outperforms other scSE-based UNet models in most categories in terms of Pratt's figure of merit (PFOM) scores, which evaluate the accuracy of edge localization. The model is then tested in the MICCAI 2021 FUSeg challenge, where a variation of FUSegNet called x-FUSegNet is submitted. The x-FUSegNet model, which takes the average of outputs obtained by FUSegNet using 5-fold cross-validation, achieves a dice score of 89.23%, placing it at the top of the FUSeg Challenge leaderboard. The source code for the model is available at https://github.com/mrinal054/FUSegNet.
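
The abstract describes x-FUSegNet as averaging the outputs of FUSegNet models obtained through 5-fold cross-validation. A minimal sketch of that kind of fold averaging is given below. It is illustrative only: it assumes each fold produces per-pixel foreground probabilities as a flat list, and the function name `average_fold_outputs` and the 0.5 threshold are assumptions, not details taken from the paper.

```python
def average_fold_outputs(fold_probs, threshold=0.5):
    """Average per-pixel probabilities across folds, then binarize.

    `fold_probs` is a list with one flat list of probabilities per fold
    (a hypothetical input layout chosen for this sketch).
    """
    n_folds = len(fold_probs)
    n_pixels = len(fold_probs[0])

    # Mean probability for each pixel over all fold models.
    mean = [sum(fold[i] for fold in fold_probs) / n_folds
            for i in range(n_pixels)]

    # Pixels at or above the threshold are labeled as wound (1).
    mask = [1 if m >= threshold else 0 for m in mean]
    return mean, mask
```

Averaging before thresholding lets a confident fold outweigh several uncertain ones, which a majority vote over binary masks would not do.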

MultiNet with Transformers: A Model for Cancer Diagnosis Using Images

Jan 21, 2023

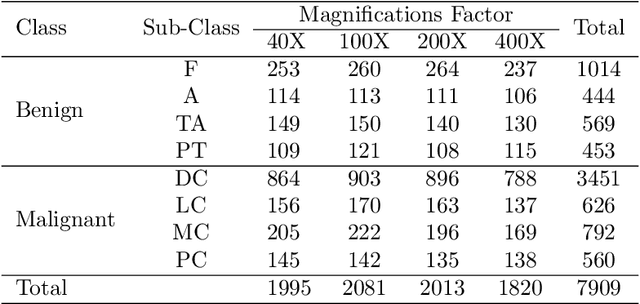

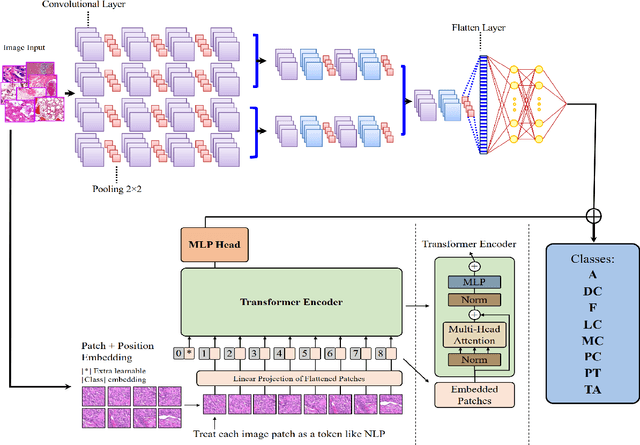

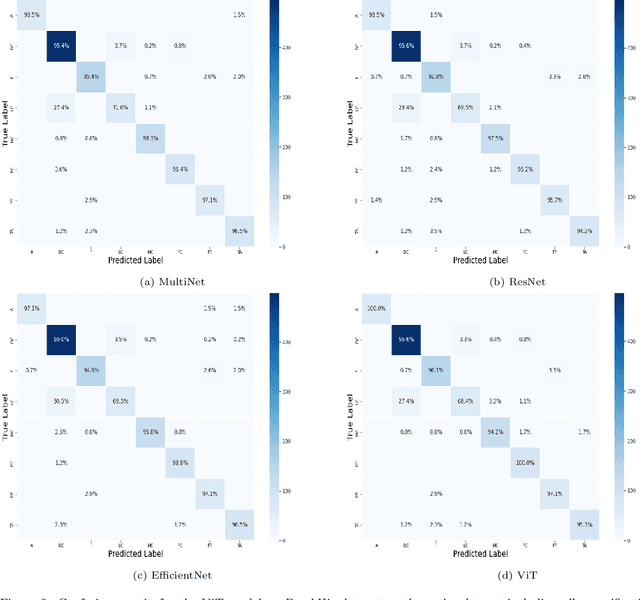

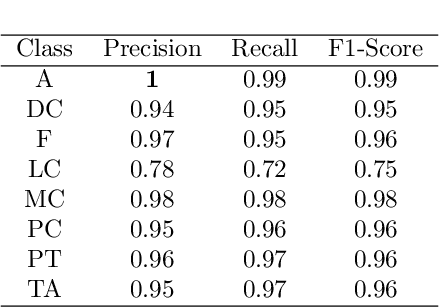

Abstract:Cancer is a leading cause of death in many countries. An early diagnosis of cancer based on biomedical imaging ensures effective treatment and a better prognosis. However, biomedical imaging presents challenges to both clinical institutions and researchers. Physiological anomalies are often characterized by slight abnormalities in individual cells or tissues, making them difficult to detect visually. Traditionally, anomalies are diagnosed by radiologists and pathologists with extensive training. This procedure, however, demands the participation of professionals and incurs a substantial cost. The cost makes large-scale biological image classification impractical. In this study, we provide unique deep neural network designs for multiclass classification of medical images, in particular cancer images. We incorporated transformers into a multiclass framework to take advantage of data-gathering capability and perform more accurate classifications. We evaluated models on publicly accessible datasets using various measures to ensure the reliability of the models. Extensive assessment metrics suggest this method can be used for a multitude of classification tasks.

Biomedical image analysis competitions: The state of current participation practice

Dec 16, 2022

Abstract:The number of international benchmarking competitions is steadily increasing in various fields of machine learning (ML) research and practice. So far, however, little is known about the common practice as well as bottlenecks faced by the community in tackling the research questions posed. To shed light on the status quo of algorithm development in the specific field of biomedical imaging analysis, we designed an international survey that was issued to all participants of challenges conducted in conjunction with the IEEE ISBI 2021 and MICCAI 2021 conferences (80 competitions in total). The survey covered participants' expertise and working environments, their chosen strategies, as well as algorithm characteristics. A median of 72% of challenge participants took part in the survey. According to our results, knowledge exchange was the primary incentive (70%) for participation, while the reception of prize money played only a minor role (16%). While a median of 80 working hours was spent on method development, a large portion of participants stated that they did not have enough time for method development (32%). 25% perceived the infrastructure to be a bottleneck. Overall, 94% of all solutions were deep learning-based. Of these, 84% were based on standard architectures. 43% of the respondents reported that the data samples (e.g., images) were too large to be processed at once. This was most commonly addressed by patch-based training (69%), downsampling (37%), and solving 3D analysis tasks as a series of 2D tasks. K-fold cross-validation on the training set was performed by only 37% of the participants, and only 50% of the participants performed ensembling, based on either multiple identical models (61%) or heterogeneous models (39%). 48% of the respondents applied postprocessing steps.

Wound Severity Classification using Deep Neural Network

Apr 17, 2022

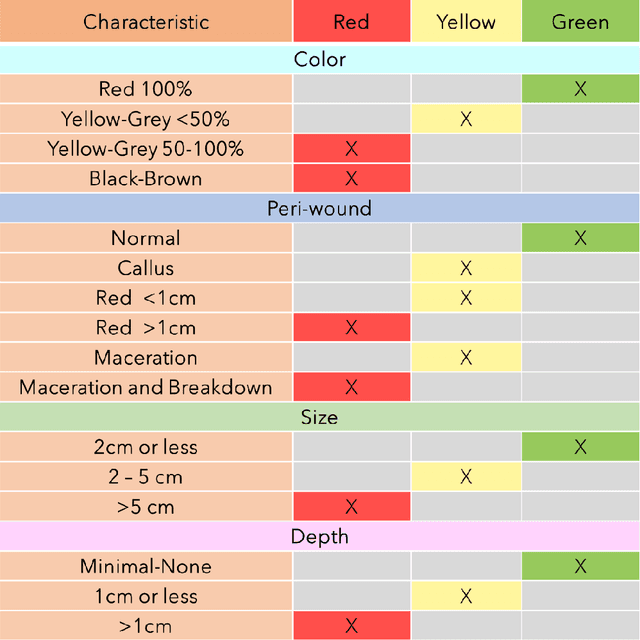

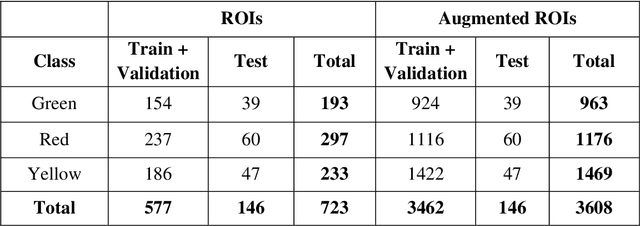

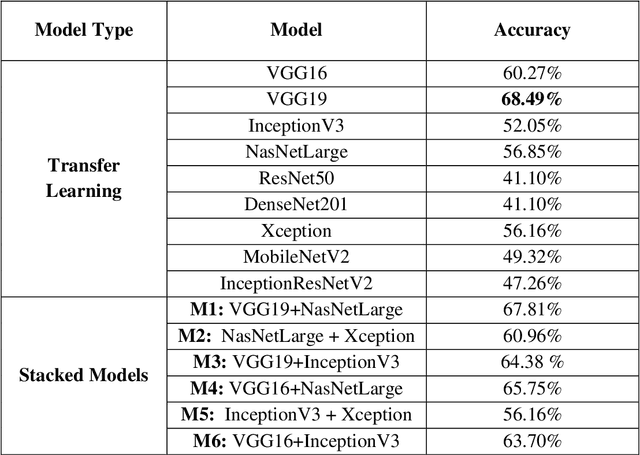

Abstract:The classification of wound severity is a critical step in wound diagnosis. An effective classifier can help wound professionals categorize wound conditions more quickly and affordably, allowing them to choose the best treatment option. This study used wound photos to construct a deep neural network-based wound severity classifier that assigns each photo to one of three classes: green, yellow, or red. The green class denotes wounds that are still in the early stages of healing and are most likely to recover with adequate care. Wounds in the yellow category require more attention and treatment than those in the green category. Finally, the red class denotes the most severe wounds, which require prompt attention and treatment. A dataset containing different types of wound images was designed with the help of wound specialists. Nine deep learning models are used with transfer learning. Several stacked models are also developed by concatenating these transfer learning models. The maximum accuracy achieved on multi-class classification is 68.49%. For binary classification, we achieved accuracies of 78.79%, 81.40%, and 77.57% on green vs. yellow, green vs. red, and yellow vs. red, respectively.

S-R2F2U-Net: A single-stage model for teeth segmentation

Apr 06, 2022

Abstract:Precise tooth segmentation is crucial in the oral sector because it provides location information for orthodontic therapy, clinical diagnosis, and surgical treatments. In this paper, we investigate residual, recurrent, and attention networks to segment teeth from panoramic dental images. Based on our findings, we suggest three single-stage models: Single Recurrent R2U-Net (S-R2U-Net), Single Recurrent Filter Double R2U-Net (S-R2F2U-Net), and Single Recurrent Attention Enabled Filter Double (S-R2F2-Attn-U-Net). In particular, S-R2F2U-Net outperforms state-of-the-art models in terms of accuracy and dice score, and it has around 45% fewer model parameters than the R2U-Net model. A hybrid loss function combining the cross-entropy loss and dice loss is used to train the model. Models are trained and evaluated on a benchmark dataset containing 1500 dental panoramic X-ray images. S-R2F2U-Net achieves an accuracy of 97.31% and a dice score of 93.26%, showing superiority over the state-of-the-art methods. Code is available at https://github.com/mrinal054/teethSeg_sr2f2u-net.git.
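
The abstract states that a hybrid loss combining cross-entropy and dice loss is used for training. The sketch below shows one common way to form such a combination for binary segmentation. The equal weighting, the binary cross-entropy form, the smoothing constant `eps`, and the function name `hybrid_loss` are all assumptions for illustration; the abstract does not specify them.

```python
import math


def hybrid_loss(probs, targets, weight=0.5, eps=1e-7):
    """Return weight * BCE + (1 - weight) * (1 - soft Dice).

    `probs` are predicted foreground probabilities and `targets` are 0/1
    labels, both as flat lists of equal length (an assumed layout).
    """
    n = len(probs)

    # Binary cross-entropy, with probabilities clamped to avoid log(0).
    bce = -sum(
        t * math.log(max(p, eps)) + (1 - t) * math.log(max(1 - p, eps))
        for p, t in zip(probs, targets)
    ) / n

    # Soft Dice uses probabilities directly instead of thresholded masks.
    intersection = sum(p * t for p, t in zip(probs, targets))
    soft_dice = (2.0 * intersection + eps) / (sum(probs) + sum(targets) + eps)

    return weight * bce + (1.0 - weight) * (1.0 - soft_dice)
```

The cross-entropy term penalizes each pixel independently, while the dice term measures region overlap, so the combination is less sensitive to foreground/background imbalance than cross-entropy alone.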

Machine learning techniques to identify antibiotic resistance in patients diagnosed with various skin and soft tissue infections

Feb 28, 2022

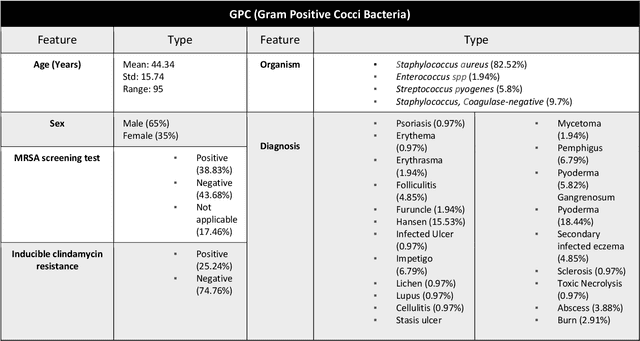

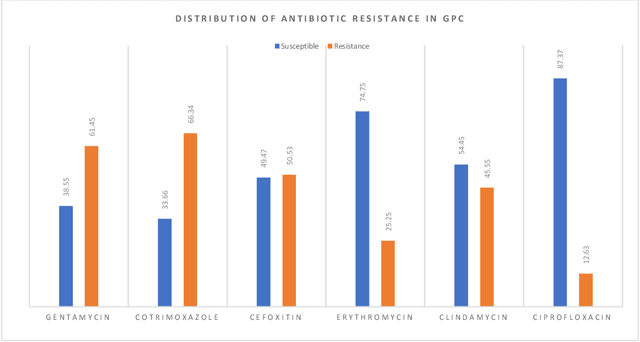

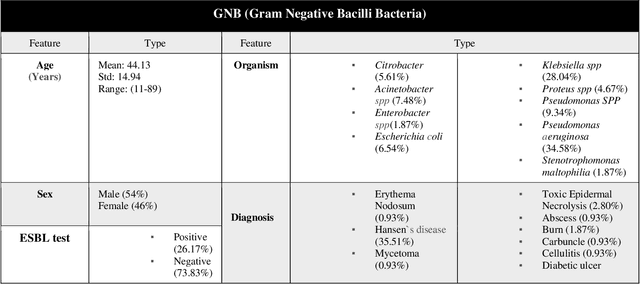

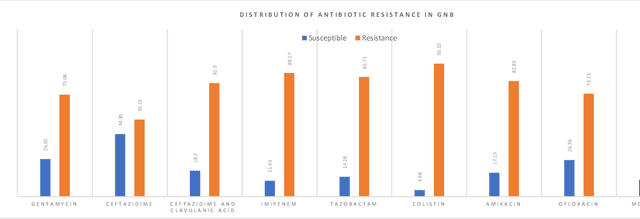

Abstract:Skin and soft tissue infections (SSTIs) are among the most frequently observed diseases in ambulatory and hospital settings. Resistance of diverse bacterial pathogens to antibiotics is a significant cause of severe SSTIs, and treatment failure results in morbidity, mortality, and increased cost of hospitalization. Therefore, antimicrobial surveillance is essential to predict antibiotic resistance trends and monitor the results of medical interventions. To address this, we developed machine learning (ML) models (deep and conventional algorithms) to predict antimicrobial resistance using antibiotic susceptibility testing (ABST) data collected from patients clinically diagnosed with primary and secondary pyoderma over a period of one year. We trained an individual ML algorithm on each antimicrobial family to determine whether a Gram-Positive Cocci (GPC) or Gram-Negative Bacilli (GNB) bacteria will resist the corresponding antibiotic. For this purpose, clinical and demographic features from the patient and data from ABST were employed in training. We achieved an Area Under the Curve (AUC) of 0.68-0.98 in GPC and 0.56-0.93 in GNB bacteria, depending on the antimicrobial family. We also conducted a correlation analysis to determine the linear relationship between each feature and antimicrobial families in different bacteria. ML techniques suggest that a predictable nonlinear relationship exists between patients' clinical-demographic characteristics and antibiotic resistance; however, the accuracy of this prediction depends on the type of the antimicrobial family.

FUSeg: The Foot Ulcer Segmentation Challenge

Jan 02, 2022

Abstract:Acute and chronic wounds with varying etiologies place an economic burden on healthcare systems. The advanced wound care market is estimated to reach $22 billion by 2024. Wound care professionals provide proper diagnosis and treatment with heavy reliance on images and image documentation. Segmentation of wound boundaries in images is a key component of the care and diagnosis protocol since it is important to estimate the area of the wound and provide quantitative measurement for the treatment. Unfortunately, this process is very time-consuming and requires a high level of expertise. Recently, automatic wound segmentation methods based on deep learning have shown promising performance, but they require large datasets for training and it is unclear which methods perform better. To address these issues, we propose the Foot Ulcer Segmentation challenge (FUSeg) organized in conjunction with the 2021 International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI). We built a wound image dataset containing 1,210 foot ulcer images collected over 2 years from 889 patients. It is pixel-wise annotated by wound care experts and split into a training set with 1,010 images and a testing set with 200 images for evaluation. Teams around the world developed automated methods to predict wound segmentations on the testing set, whose annotations were kept private. The predictions were evaluated and ranked based on the average Dice coefficient. The FUSeg challenge remains an open challenge as a benchmark for wound segmentation after the conference.
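
Several abstracts on this page report the Dice coefficient, and the FUSeg challenge ranks submissions by its average. As a rough illustration, the Dice coefficient for two binary masks A and B is 2|A∩B| / (|A| + |B|). The sketch below assumes masks are nested lists of 0/1 values and that the average is taken per image; whether the challenge averaged per image or over pooled pixels is not stated in the abstract, and the function names are hypothetical.

```python
def dice_coefficient(pred, target, eps=1e-7):
    """Dice = 2|A∩B| / (|A| + |B|) for binary masks as nested 0/1 lists."""
    flat_pred = [v for row in pred for v in row]
    flat_target = [v for row in target for v in row]

    # Overlap counts pixels that are foreground in both masks.
    intersection = sum(p * t for p, t in zip(flat_pred, flat_target))
    total = sum(flat_pred) + sum(flat_target)

    # eps keeps the ratio defined (and equal to 1) when both masks are empty.
    return (2.0 * intersection + eps) / (total + eps)


def mean_dice(preds, targets):
    """Average the per-image Dice over a list of mask pairs."""
    scores = [dice_coefficient(p, t) for p, t in zip(preds, targets)]
    return sum(scores) / len(scores)
```

With a prediction covering two pixels and a ground truth covering one of them, the overlap is one pixel and the score is 2·1 / (2 + 1), roughly 0.667.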
