Keke Chen

Auditing Approximate Machine Unlearning for Differentially Private Models

Aug 26, 2025

Abstract:Approximate machine unlearning aims to remove the effect of specific data from trained models to ensure individuals' privacy. Existing methods focus on the removed records and assume the retained ones are unaffected. However, recent studies on the privacy onion effect indicate this assumption might be incorrect. In particular, when the model is differentially private, no study has explored whether the retained records still meet the differential privacy (DP) criterion under existing machine unlearning methods. This paper takes a holistic approach to auditing both unlearned and retained samples' privacy risks after applying approximate unlearning algorithms. We propose privacy criteria for unlearned and retained samples, respectively, based on the perspectives of DP and membership inference attacks (MIAs). To make the auditing process more practical, we also develop an efficient MIA, A-LiRA, utilizing data augmentation to reduce the cost of shadow model training. Our experimental findings indicate that existing approximate machine unlearning algorithms may inadvertently compromise the privacy of retained samples for differentially private models, and we need differentially private unlearning algorithms. For reproducibility, we have published our code: https://anonymous.4open.science/r/Auditing-machine-unlearning-CB10/README.md
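As a rough illustration of how an MIA can be used to audit a DP claim, the sketch below turns an attack's true-positive and false-positive rates into an empirical lower bound on $\epsilon$, using the standard hypothesis-testing inequality TPR <= exp(epsilon) * FPR + delta. This is a generic auditing device, not necessarily the criteria this paper proposes; the function name and the use of point estimates without confidence intervals are assumptions made for brevity.

```python
import math


def empirical_epsilon_lower_bound(tpr: float, fpr: float, delta: float = 0.0) -> float:
    """Lower bound on epsilon implied by one (TPR, FPR) operating point of an attack.

    Hypothetical helper. It rearranges the (epsilon, delta)-DP constraint
        TPR <= exp(epsilon) * FPR + delta
    and uses point estimates only; a careful audit would use confidence
    intervals on TPR and FPR instead.
    """
    if not (0.0 < fpr <= 1.0 and 0.0 <= tpr <= 1.0):
        raise ValueError("tpr must be in [0, 1] and fpr in (0, 1]")
    if tpr - delta <= fpr:
        # The attack is no better than what epsilon = 0 already allows.
        return 0.0
    return math.log((tpr - delta) / fpr)
```

If the bound computed for retained samples after unlearning exceeds the $\epsilon$ the model was trained with, that would be evidence that the DP guarantee no longer holds for those samples.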

Membership Inference Attacks on LLM-based Recommender Systems

Aug 26, 2025

Abstract:Large language model (LLM) based recommender systems (RecSys) can flexibly adapt recommendation to different domains. They utilize in-context learning (ICL), i.e., prompts, to customize the recommendation functions, and these prompts include sensitive historical user-specific item interactions, e.g., implicit feedback like clicked items or explicit product reviews. Such private information may be exposed to novel privacy attacks. However, no study has been done on this important issue. We design four membership inference attacks (MIAs), aiming to reveal whether victims' historical interactions have been used by system prompts. They are direct inquiry, hallucination, similarity, and poisoning attacks, each of which utilizes the unique features of LLMs or RecSys. We have carefully evaluated them on three LLMs that have been used to develop ICL-LLM RecSys and two well-known RecSys benchmark datasets. The results confirm that the MIA threat on LLM RecSys is realistic: direct inquiry and poisoning attacks show significantly high attack advantages. We have also analyzed the factors affecting these attacks, such as the number of shots in system prompts and the position of the victim in the shots.
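For readers unfamiliar with the metric, attack advantage is commonly defined as the attack's true-positive rate minus its false-positive rate. The sketch below assumes that common definition (the paper may use a variant) and a hypothetical input format of parallel lists of binary guesses and ground-truth membership labels.

```python
def attack_advantage(predictions, labels):
    """Return TPR - FPR for binary membership guesses.

    predictions[i] is truthy if the attack guesses "member" for victim i;
    labels[i] is truthy if victim i really was in the system prompt.
    Hypothetical helper using the common TPR - FPR definition.
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    members = sum(1 for y in labels if y)
    non_members = len(labels) - members
    if members == 0 or non_members == 0:
        raise ValueError("need at least one member and one non-member")
    true_pos = sum(1 for p, y in zip(predictions, labels) if p and y)
    false_pos = sum(1 for p, y in zip(predictions, labels) if p and not y)
    return true_pos / members - false_pos / non_members
```

An advantage of 0 corresponds to random guessing, and 1 to a perfect attack.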

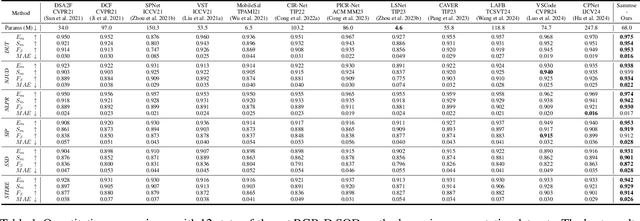

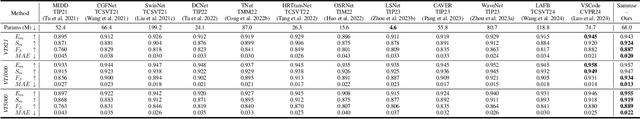

Alignment-Free RGB-T Salient Object Detection: A Large-scale Dataset and Progressive Correlation Network

Dec 19, 2024

Abstract:Alignment-free RGB-Thermal (RGB-T) salient object detection (SOD) aims to achieve robust performance in complex scenes by directly leveraging the complementary information from unaligned visible-thermal image pairs, without requiring manual alignment. However, the labor-intensive process of collecting and annotating image pairs limits the scale of existing benchmarks, hindering the advancement of alignment-free RGB-T SOD. In this paper, we construct a large-scale and high-diversity unaligned RGB-T SOD dataset named UVT20K, comprising 20,000 image pairs, 407 scenes, and 1,256 object categories. All samples are collected from real-world scenarios with various challenges, such as low illumination, image clutter, complex salient objects, and so on. To support further research, each sample in UVT20K is annotated with a comprehensive set of ground truths, including saliency masks, scribbles, boundaries, and challenge attributes. In addition, we propose a Progressive Correlation Network (PCNet), which models inter- and intra-modal correlations on the basis of explicit alignment to achieve accurate predictions in unaligned image pairs. Extensive experiments conducted on unaligned and aligned datasets demonstrate the effectiveness of our method. Code and dataset are available at https://github.com/Angknpng/PCNet.

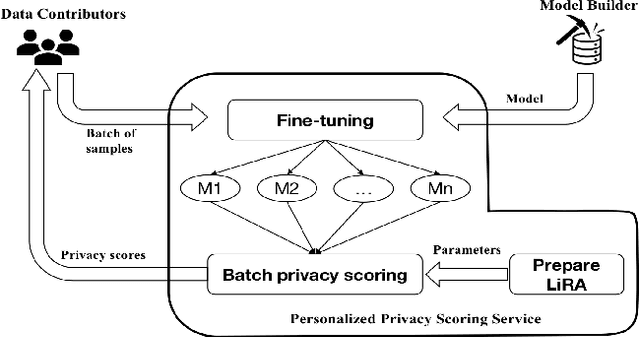

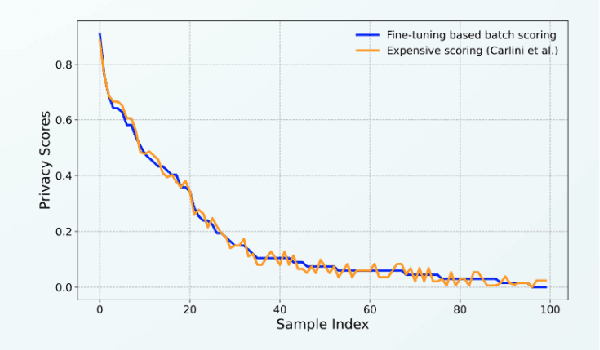

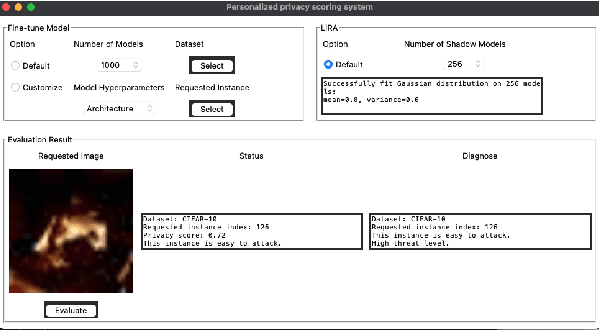

FT-PrivacyScore: Personalized Privacy Scoring Service for Machine Learning Participation

Oct 30, 2024

Abstract:Training data privacy has been a top concern in AI modeling. While methods like differentially private learning allow data contributors to quantify acceptable privacy loss, model utility is often significantly damaged. In practice, controlled data access remains a mainstream method for protecting data privacy in many industrial and research environments. In controlled data access, authorized model builders work in a restricted environment to access sensitive data, which can fully preserve data utility with reduced risk of data leakage. However, unlike differential privacy, there is no quantitative measure for individual data contributors to tell their privacy risk before participating in a machine learning task. We developed the demo prototype FT-PrivacyScore to show that it's possible to efficiently and quantitatively estimate the privacy risk of participating in a model fine-tuning task. The demo source code will be available at https://github.com/RhincodonE/demo_privacy_scoring.

Calibrating Practical Privacy Risks for Differentially Private Machine Learning

Oct 30, 2024

Abstract:Differential privacy quantifies privacy through the privacy budget $\epsilon$, yet its practical interpretation is complicated by variations across models and datasets. Recent research on differentially private machine learning and membership inference has highlighted that with the same theoretical $\epsilon$ setting, the likelihood-ratio-based membership inference (LiRA) attacking success rate (ASR) may vary according to specific datasets and models, which might be a better indicator for evaluating real-world privacy risks. Inspired by this practical privacy measure, we study approaches that can lower the attacking success rate to allow for more flexible privacy budget settings in model training. We find that by selectively suppressing privacy-sensitive features, we can achieve lower ASR values without compromising application-specific data utility. We use the SHAP and LIME model explainers to evaluate feature sensitivities and develop feature-masking strategies. Our findings demonstrate that the LiRA $ASR^M$ on model $M$ can properly indicate the inherent privacy risk of a dataset for modeling, and it's possible to modify datasets to enable the use of larger theoretical $\epsilon$ settings to achieve equivalent practical privacy protection. We have conducted extensive experiments to show the inherent link between ASR and the dataset's privacy risk. By carefully selecting features to mask, we can preserve more data utility with equivalent practical privacy protection and relaxed $\epsilon$ settings. The implementation details are shared online at https://anonymous.4open.science/r/On-sensitive-features-and-empirical-epsilon-lower-bounds-BF67/.
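To make the LiRA idea concrete, here is a simplified sketch of a per-example likelihood-ratio score. It assumes the usual setup from the LiRA literature: shadow models trained with and without the target example yield two sets of confidences, each set is fitted with a Gaussian after logit scaling, and the score is the log-likelihood ratio at the target model's confidence. The function names and input format are hypothetical, and this is not the implementation used in the paper.

```python
import math
from statistics import fmean, stdev


def logit(p: float, eps: float = 1e-6) -> float:
    """Logit-scale a confidence, clipping it away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def gaussian_log_pdf(x: float, mu: float, sigma: float) -> float:
    """Log-density of N(mu, sigma^2) at x."""
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma) - 0.5 * math.log(2 * math.pi)


def lira_score(target_conf, in_confs, out_confs):
    """Log-likelihood ratio: positive values favor "member".

    in_confs / out_confs: confidences on the same example from shadow models
    trained with / without it (at least two values each).
    """
    x = logit(target_conf)
    in_logits = [logit(c) for c in in_confs]
    out_logits = [logit(c) for c in out_confs]
    # Floor the standard deviation so a degenerate fit cannot divide by zero.
    sigma_in = max(stdev(in_logits), 1e-6)
    sigma_out = max(stdev(out_logits), 1e-6)
    return (gaussian_log_pdf(x, fmean(in_logits), sigma_in)
            - gaussian_log_pdf(x, fmean(out_logits), sigma_out))
```

Thresholding this score over a balanced set of members and non-members gives an attack success rate; masking a privacy-sensitive feature would be expected to pull the two fitted distributions closer together and lower that rate.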

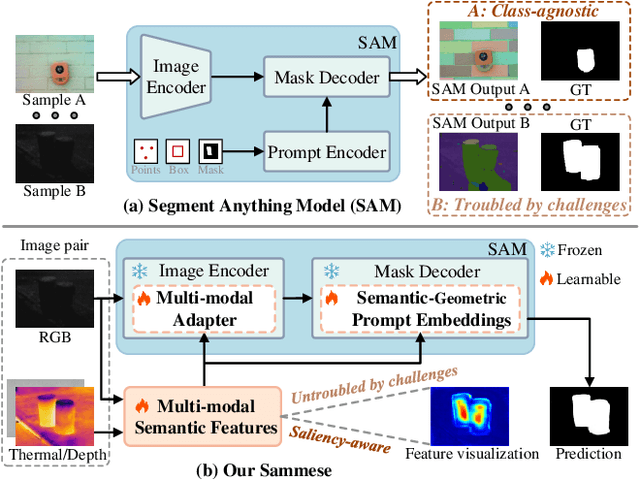

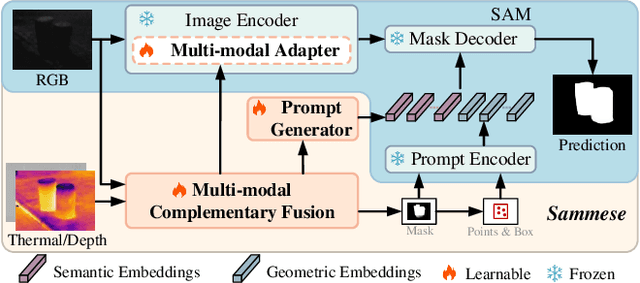

Adapting Segment Anything Model to Multi-modal Salient Object Detection with Semantic Feature Fusion Guidance

Aug 27, 2024

Abstract:Although most existing multi-modal salient object detection (SOD) methods demonstrate effectiveness through training models from scratch, the limited multi-modal data hinders these methods from reaching optimality. In this paper, we propose a novel framework to explore and exploit the powerful feature representation and zero-shot generalization ability of the pre-trained Segment Anything Model (SAM) for multi-modal SOD. Although SAM serves as a recent vision foundation model, driving the class-agnostic SAM to comprehend and detect salient objects accurately is non-trivial, especially in challenging scenes. To this end, we develop SAM with semantic feature fusion guidance (Sammese), which incorporates multi-modal saliency-specific knowledge into SAM to adapt SAM to multi-modal SOD tasks. However, it is difficult for SAM trained on single-modal data to directly mine the complementary benefits of multi-modal inputs and comprehensively utilize them to achieve accurate saliency prediction. To address these issues, we first design a multi-modal complementary fusion module to extract robust multi-modal semantic features by integrating information from visible and thermal or depth image pairs. Then, we feed the extracted multi-modal semantic features into both the SAM image encoder and mask decoder for fine-tuning and prompting, respectively. Specifically, in the image encoder, a multi-modal adapter is proposed to adapt the single-modal SAM to multi-modal information. In the mask decoder, a semantic-geometric prompt generation strategy is proposed to produce corresponding embeddings with various saliency cues. Extensive experiments on both RGB-D and RGB-T SOD benchmarks show the effectiveness of the proposed framework.

Wound Tissue Segmentation in Diabetic Foot Ulcer Images Using Deep Learning: A Pilot Study

Jun 23, 2024

Abstract:Identifying individual tissues, so-called tissue segmentation, in diabetic foot ulcer (DFU) images is a challenging task, and little work has been published, largely due to the limited availability of clinical image datasets. To address this gap, we have created a DFUTissue dataset for the research community to evaluate wound tissue segmentation algorithms. The dataset contains 110 images with tissues labeled by wound experts and 600 unlabeled images. Additionally, we conducted a pilot study on segmenting wound characteristics including fibrin, granulation, and callus using deep learning. Due to the limited amount of annotated data, our framework consists of both supervised learning (SL) and semi-supervised learning (SSL) phases. In the SL phase, we propose a hybrid model featuring a Mix Transformer (MiT-b3) in the encoder and a CNN in the decoder, enhanced by the integration of a parallel spatial and channel squeeze-and-excitation (P-scSE) module known for its efficacy in improving boundary accuracy. The SSL phase employs a pseudo-labeling-based approach, iteratively identifying and incorporating valuable unlabeled images to enhance overall segmentation performance. Comparative evaluations with state-of-the-art methods are conducted for both SL and SSL phases. The SL phase achieves a Dice Similarity Coefficient (DSC) of 84.89%, which improves to 87.64% in the SSL phase. Furthermore, the results are benchmarked against two widely used SSL approaches: Generative Adversarial Networks and Cross-Consistency Training. Additionally, our hybrid model outperforms the state-of-the-art methods with a 92.99% DSC in performing binary segmentation of DFU wound areas when tested on the Chronic Wound dataset. Code and data are available at https://github.com/uwm-bigdata/DFUTissueSegNet.
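The Dice Similarity Coefficient reported above is the standard overlap measure 2|A ∩ B| / (|A| + |B|) between a predicted mask A and a ground-truth mask B. A minimal sketch for binary masks follows; the flat-list input format and the convention of returning 1.0 when both masks are empty are assumptions, and the linked repository may compute the metric differently (for example per class or per batch).

```python
def dice_coefficient(pred, target):
    """Dice Similarity Coefficient for two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Both masks are empty; treating this as perfect agreement is a convention.
        return 1.0
    return 2 * intersection / total
```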

Adaptive Domain Inference Attack

Dec 22, 2023

Abstract:As deep neural networks are increasingly deployed in sensitive application domains, such as healthcare and security, it's necessary to understand what kind of sensitive information can be inferred from these models. Existing model-targeted attacks all assume the attacker knows the application domain or training data distribution, which plays an essential role in successful attacks. Can removing the domain information from model APIs protect models from these attacks? This paper studies this critical problem. Unfortunately, even with minimal knowledge, i.e., accessing the model as an unnamed function without leaking the meaning of input and output, the proposed adaptive domain inference attack (ADI) can still successfully estimate relevant subsets of training data. We show that the extracted relevant data can significantly improve, for instance, the performance of model-inversion attacks. Specifically, the ADI method utilizes a concept hierarchy built on top of a large collection of available public and private datasets and a novel algorithm to adaptively tune the likelihood of leaf concepts showing up in the unseen training data. The ADI attack not only extracts partial training data at the concept level, but also converges fast and requires far fewer target-model accesses than another domain inference attack, GDI.

A Comparative Study of Image Disguising Methods for Confidential Outsourced Learning

Dec 31, 2022

Abstract:Large training data and expensive model tweaking are standard features of deep learning for images. As a result, data owners often utilize cloud resources to develop large-scale complex models, which raises privacy concerns. Existing solutions are either too expensive to be practical or do not sufficiently protect the confidentiality of data and models. In this paper, we study and compare novel image disguising mechanisms, DisguisedNets and InstaHide, aiming to achieve a better trade-off among the level of protection for outsourced DNN model training, the expenses, and the utility of data. DisguisedNets are novel combinations of image blocktization, block-level random permutation, and two block-level secure transformations: random multidimensional projection (RMT) and AES pixel-level encryption (AES). InstaHide is an image mixup and random pixel flipping technique \cite{huang20}. We have analyzed and evaluated them under a multi-level threat model. RMT provides a better security guarantee than InstaHide, under the Level-1 adversarial knowledge with well-preserved model quality. In contrast, AES provides a security guarantee under the Level-2 adversarial knowledge, but it may affect model quality more. The unique features of image disguising also help us to protect models from model-targeted attacks. We have done an extensive experimental evaluation to understand how these methods work in different settings for different datasets.
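The first two DisguisedNets ingredients, blocktization and block-level random permutation, can be sketched as follows. This is an illustrative toy only: it omits the RMT and AES block transformations, represents an image as a list of pixel rows, and treats a seed for Python's non-cryptographic `random` module as the secret permutation key, all of which are assumptions rather than the paper's design.

```python
import random


def permute_blocks(image, block_size, seed):
    """Cut an image into block_size x block_size tiles and shuffle the tiles.

    image: list of equal-length rows; height and width must be multiples of
    block_size. seed: stands in for a secret key (hypothetical; random.Random
    is not cryptographically secure).
    """
    height, width = len(image), len(image[0])
    if height % block_size or width % block_size:
        raise ValueError("image dimensions must be multiples of block_size")
    rows, cols = height // block_size, width // block_size

    # Blocktization: collect tiles in row-major order.
    blocks = [
        [row[c * block_size:(c + 1) * block_size]
         for row in image[r * block_size:(r + 1) * block_size]]
        for r in range(rows) for c in range(cols)
    ]

    # Block-level random permutation driven by the seed.
    order = list(range(len(blocks)))
    random.Random(seed).shuffle(order)

    # Reassemble: tile order[dst] is written into grid position dst.
    out = [[None] * width for _ in range(height)]
    for dst, src in enumerate(order):
        r0, c0 = divmod(dst, cols)
        for i, block_row in enumerate(blocks[src]):
            out[r0 * block_size + i][c0 * block_size:(c0 + 1) * block_size] = block_row
    return out
```

Applying the same seed to every training image keeps the tile layout consistent across the dataset, so a model can still be trained on the permuted images while the original spatial arrangement is hidden.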

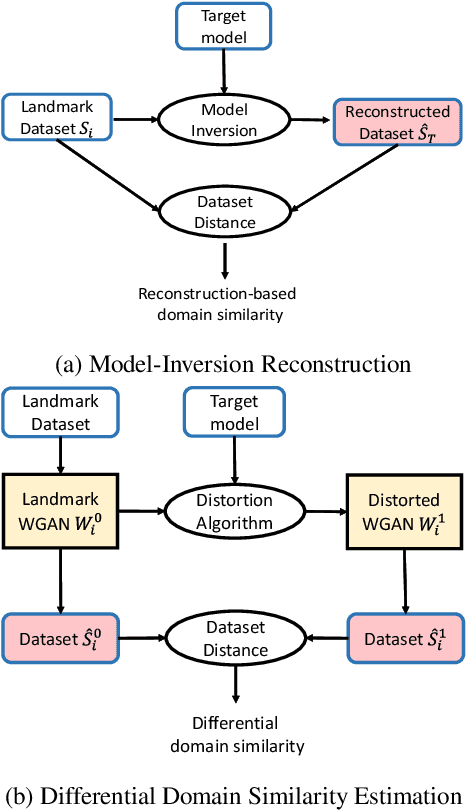

GAN-based Domain Inference Attack

Dec 22, 2022

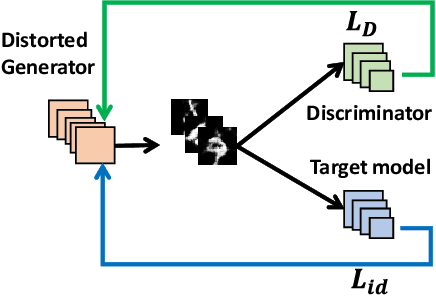

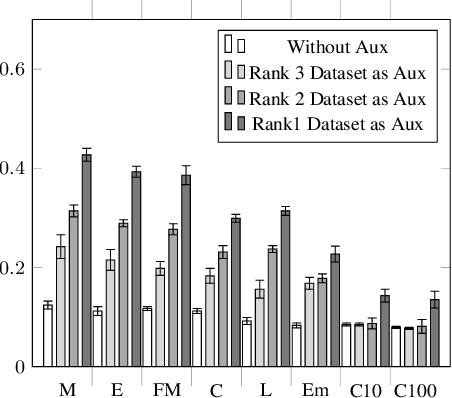

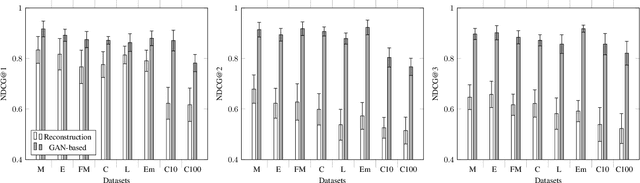

Abstract:Model-based attacks can infer training data information from deep neural network models. These attacks heavily depend on the attacker's knowledge of the application domain, e.g., using it to determine the auxiliary data for model-inversion attacks. However, attackers may not know what the model is used for in practice. We propose a generative adversarial network (GAN) based method to explore likely or similar domains of a target model -- the model domain inference (MDI) attack. For a given target (classification) model, we assume that the attacker knows nothing but the input and output formats and can use the model to derive the prediction for any input in the desired form. Our basic idea is to use the target model to affect a GAN training process for a candidate domain's dataset that is easy to obtain. We find that the target model may distract the training procedure less if the domain is more similar to the target domain. We then measure the distraction level with the distance between GAN-generated datasets, which can be used to rank candidate domains for the target model. Our experiments show that the auxiliary dataset from an MDI top-ranked domain can effectively boost the result of model-inversion attacks.
