Disguised-Nets: Image Disguising for Privacy-preserving Deep Learning

Feb 05, 2019

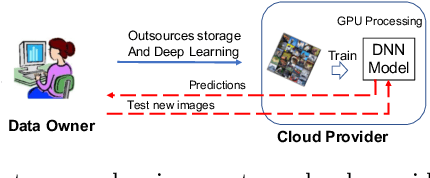

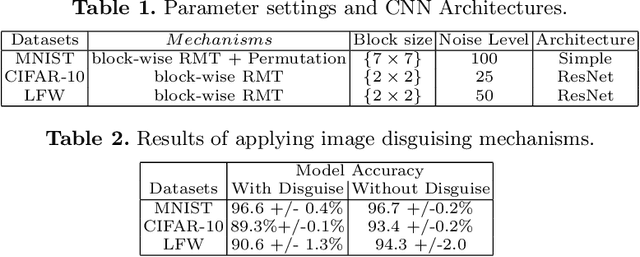

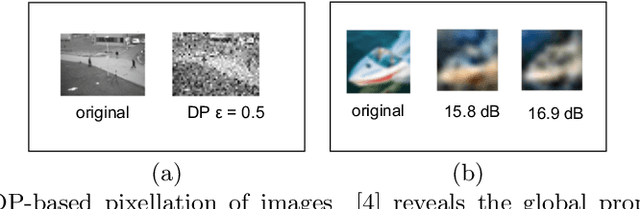

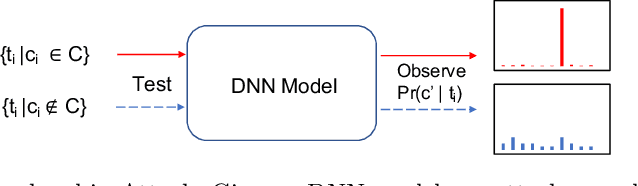

Due to the high training costs of deep learning, model developers often rent cloud GPU servers to achieve better efficiency. However, this practice raises privacy concerns. An adversarial party may be interested in 1) personally identifiable information encoded in the training data and the learned models, 2) misusing the sensitive models for its own benefit, or 3) launching model inversion attacks (MIA) and generative adversarial network (GAN) attacks to reconstruct replicas of training data (e.g., sensitive images). Learning from encrypted data seems impractical due to the large training data and expensive learning algorithms, while differential-privacy-based approaches have to make significant trade-offs between privacy and model quality. We investigate the use of image disguising techniques to protect both data and model privacy. Our preliminary results show that, surprisingly, images disguised with block-wise permutation and transformations still yield reasonably well-performing deep neural networks (DNNs). The disguised images are also resilient to the deep-learning-enhanced visual discrimination attack and provide an extra layer of protection against MIA and GAN attacks.
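To make the idea of block-wise permutation and transformation concrete, here is a minimal Python sketch. It is an illustration only, not the authors' implementation: the abstract does not specify the block size, the kind of transformation, or how the secret key is derived, so the function name `disguise_image`, the `block` and `seed` parameters, and the choice of a random orthogonal matrix per block are all assumptions made for this example.

```python
import numpy as np


def disguise_image(img, block=8, seed=0):
    """Hypothetical sketch of block-wise image disguising.

    Splits a 2-D grayscale image into block x block tiles, shuffles the
    tile positions with a secret permutation, and multiplies each tile by
    a secret random orthogonal matrix. `seed` stands in for the secret key.
    None of these choices are confirmed by the paper's abstract.
    """
    h, w = img.shape
    if h % block or w % block:
        raise ValueError("image sides must be multiples of the block size")

    rng = np.random.default_rng(seed)
    rows, cols = h // block, w // block
    n_tiles = rows * cols

    # Cut the image into tiles: result has shape (n_tiles, block, block).
    tiles = (
        img.astype(np.float64)
        .reshape(rows, block, cols, block)
        .swapaxes(1, 2)
        .reshape(n_tiles, block, block)
    )

    # Secret permutation of tile positions.
    perm = rng.permutation(n_tiles)

    # One secret orthogonal matrix per output position (Q factor of a
    # random Gaussian matrix). This is an assumed transformation.
    out = np.empty_like(tiles)
    for i in range(n_tiles):
        q, _ = np.linalg.qr(rng.standard_normal((block, block)))
        out[i] = tiles[perm[i]] @ q

    # Reassemble the transformed tiles into an image of the original shape.
    return (
        out.reshape(rows, cols, block, block)
        .swapaxes(1, 2)
        .reshape(h, w)
    )


# Usage: disguise a synthetic 32 x 32 "image" with a fixed key.
img = np.arange(32 * 32, dtype=np.float64).reshape(32, 32)
disguised = disguise_image(img, block=8, seed=42)
```

In a setup like the one the abstract describes, the data owner would presumably apply the same keyed disguise to every training and test image before uploading, so the cloud server only ever sees disguised pixels. How well a network trains on such data depends on the actual transformations used, which this sketch does not attempt to reproduce.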
