D. M. Anisuzzaman

Department of Computer Science, University of Wisconsin-Milwaukee, Milwaukee, WI, USA

Wound Severity Classification using Deep Neural Network

Apr 17, 2022

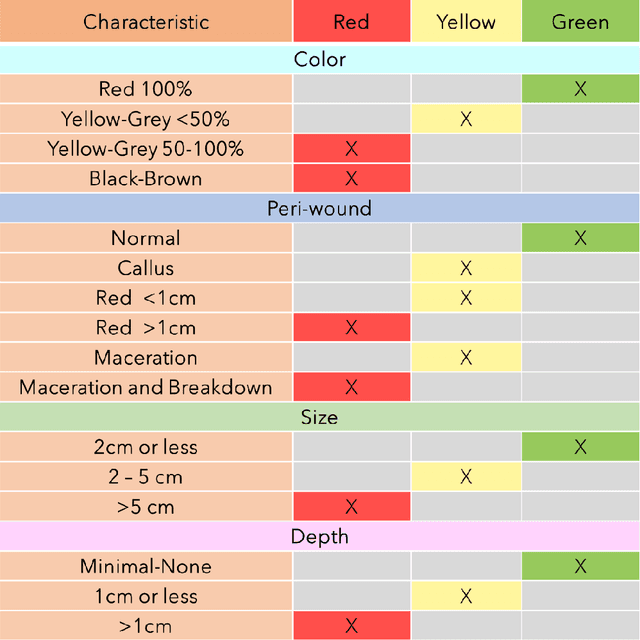

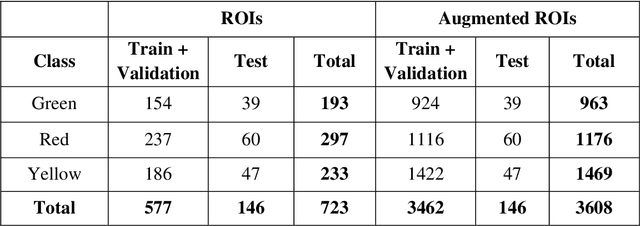

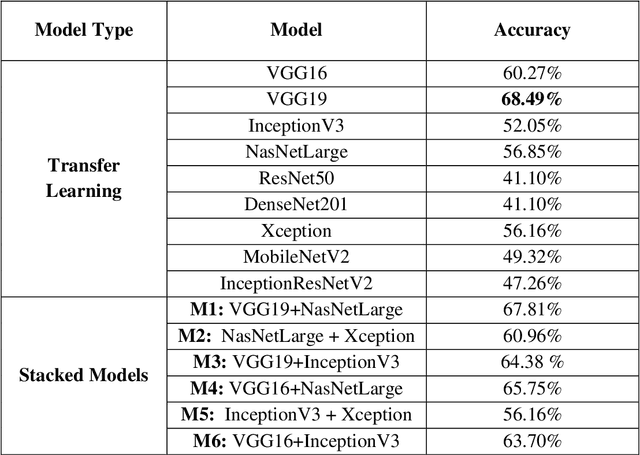

Abstract: The classification of wound severity is a critical step in wound diagnosis. An effective classifier can help wound professionals categorize wound conditions more quickly and affordably, allowing them to choose the best treatment option. This study used wound photos to construct a deep neural network-based wound severity classifier that assigns each image to one of three classes: green, yellow, or red. The green class denotes wounds that are still in the early stages of healing and are most likely to recover with adequate care. Wounds in the yellow class require more attention and treatment than those in the green class. Finally, the red class denotes the most severe wounds, which require prompt attention and treatment. A dataset containing different types of wound images was designed with the help of wound specialists. Nine deep learning models were trained using transfer learning. Several stacked models were also developed by concatenating these transfer learning models. The maximum accuracy achieved on multi-class classification is 68.49%. For binary classification, we achieved accuracies of 78.79%, 81.40%, and 77.57% on green vs. yellow, green vs. red, and yellow vs. red, respectively.

Multi-modal Wound Classification using Wound Image and Location by Deep Neural Network

Sep 14, 2021

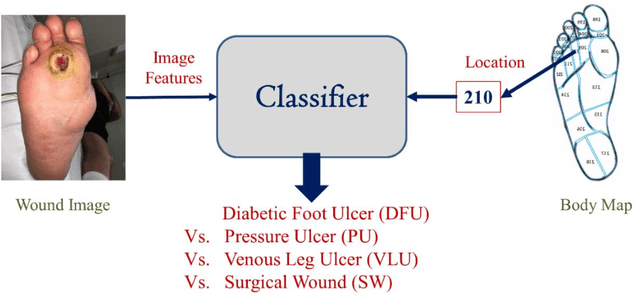

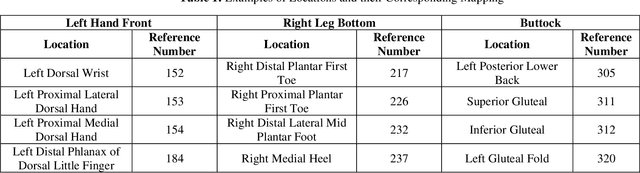

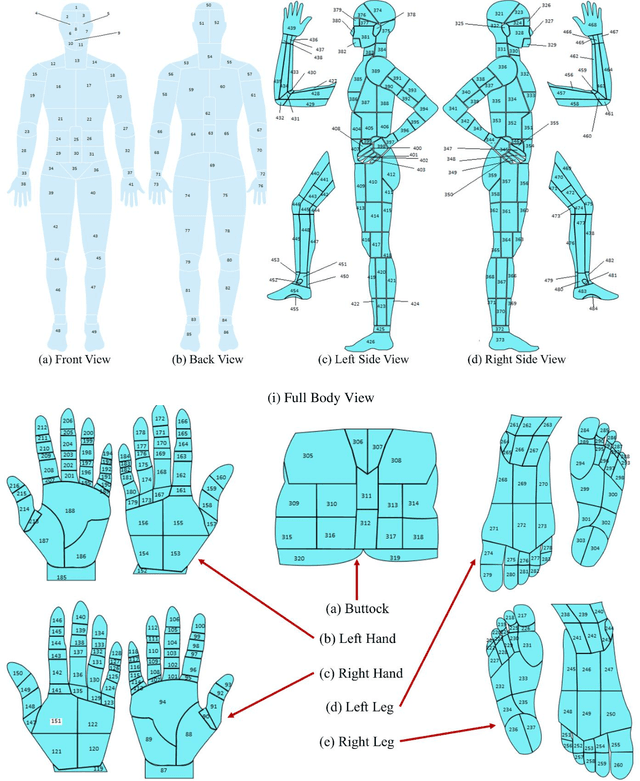

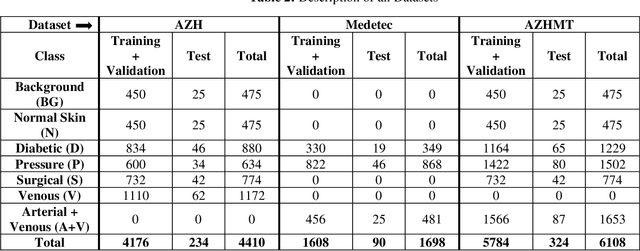

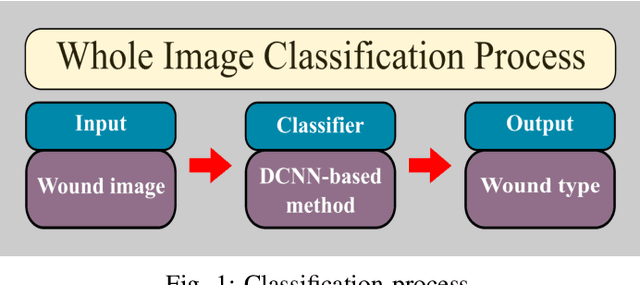

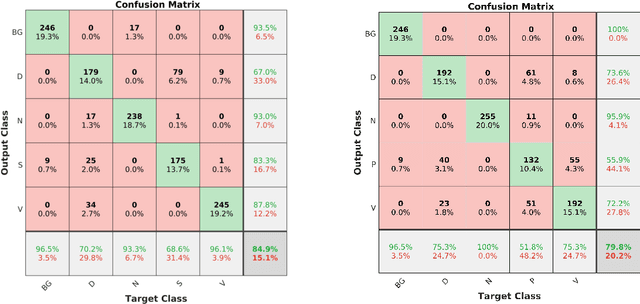

Abstract: Wound classification is an essential step of wound diagnosis. An efficient classifier can assist wound specialists in classifying wound types at lower financial and time cost and help them decide on an optimal treatment procedure. This study developed a deep neural network-based multi-modal classifier that uses wound images and their corresponding locations to categorize wound images into multiple classes, including diabetic, pressure, surgical, and venous ulcers. A body map was also developed to prepare the location data, which can help wound specialists tag wound locations more efficiently. Three datasets containing images and their corresponding location information were designed with the help of wound specialists. The multi-modal network was developed by concatenating the outputs of the image-based and location-based classifiers, with some other modifications. The maximum accuracy on mixed-class classifications (containing background and normal skin) ranges from 77.33% to 100% across experiments. The maximum accuracy on wound-class classifications (containing only diabetic, pressure, surgical, and venous) ranges from 72.95% to 98.08% across experiments. The proposed multi-modal network also shows a significant improvement over results previously reported in the literature.

Synthesizing time-series wound prognosis factors from electronic medical records using generative adversarial networks

May 03, 2021

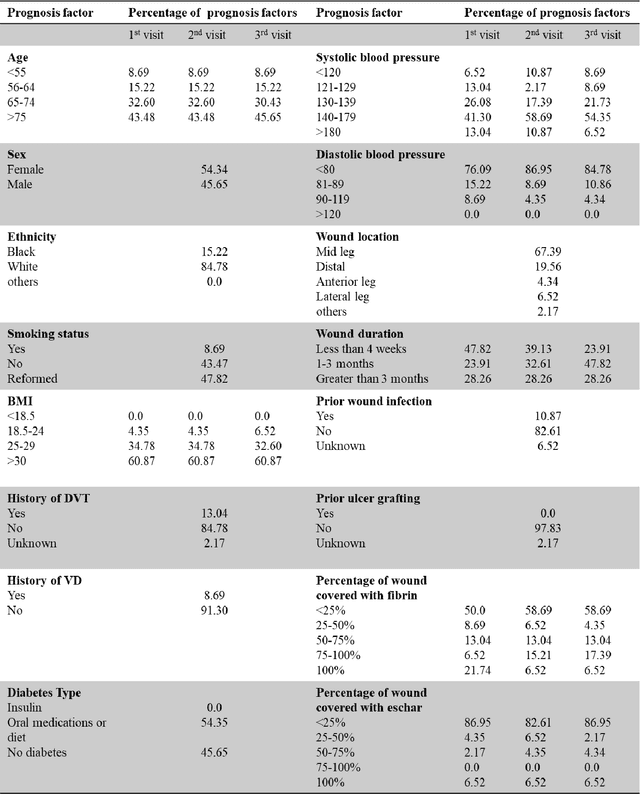

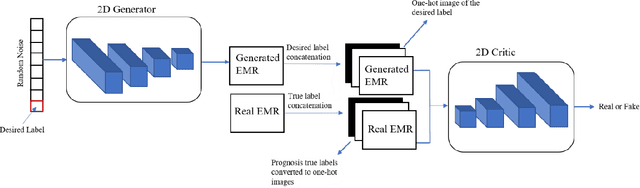

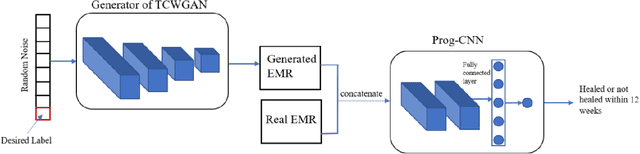

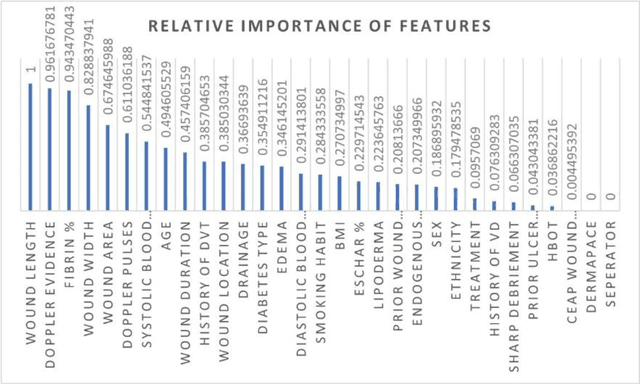

Abstract: Wound prognostic models not only provide an estimate of wound healing time, which motivates patients to follow up on their treatments, but can also help clinicians decide whether to use standard care or adjuvant therapies and assist them in designing clinical trials. However, collecting prognosis factors from the Electronic Medical Records (EMR) of patients is challenging due to privacy, sensitivity, and confidentiality. In this study, we developed time series medical generative adversarial networks (GANs) to generate synthetic wound prognosis factors using very limited information collected during routine care in a specialized wound care facility. The generated prognosis variables are used in developing a predictive model for chronic wound healing trajectory. Our novel medical GAN can produce both continuous and categorical features from EMR. Moreover, we incorporated temporal information into our model by considering data collected from the weekly follow-ups of patients. Conditional training strategies were utilized to enhance training and generate classified data in terms of healing or non-healing. The ability of the proposed model to generate realistic EMR data was evaluated by TSTR (train on the synthetic, test on the real), discriminative accuracy, and visualization. We utilized samples generated by our proposed GAN in training a prognosis model to demonstrate its real-life application. Using the generated samples in training predictive models improved the classification accuracy by 6.66-10.01% compared to the previous EMR-GAN. Additionally, the suggested prognosis classifier achieved an area under the curve (AUC) of 0.975, 0.968, and 0.849 when training the network using data from the first three visits, first two visits, and first visit, respectively. These results indicate a significant improvement in wound healing prediction compared to the previous prognosis models.
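As an illustration of the TSTR evaluation mentioned in this abstract, the sketch below follows the usual reading of the acronym: a classifier is fitted on synthetic samples only and then scored on real samples. The nearest-centroid classifier, the function names (`fit_centroids`, `predict`, `tstr_accuracy`), and the toy data are all assumptions made for this example; the abstract does not say which classifier or features the authors used.

```python
def fit_centroids(X, y):
    """Compute one mean feature vector per class label."""
    sums, counts = {}, {}
    for row, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(row))
        for i, value in enumerate(row):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, row):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def dist2(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda label: dist2(centroids[label]))


def tstr_accuracy(synth_X, synth_y, real_X, real_y):
    """Train on synthetic data only, then report accuracy on real data."""
    centroids = fit_centroids(synth_X, synth_y)
    hits = sum(predict(centroids, row) == target for row, target in zip(real_X, real_y))
    return hits / len(real_y)


# Toy data (hypothetical): label 0 = non-healing, label 1 = healing.
synth_X = [[0.0, 0.1], [0.2, 0.0], [1.0, 0.9], [0.8, 1.0]]
synth_y = [0, 0, 1, 1]
real_X = [[0.1, 0.1], [0.9, 0.8], [0.3, 0.2], [0.7, 0.9]]
real_y = [0, 1, 0, 1]

score = tstr_accuracy(synth_X, synth_y, real_X, real_y)
```

A TSTR score close to the accuracy obtained when training on real data suggests that the synthetic samples preserve the structure a downstream model needs.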

Multiclass Wound Image Classification using an Ensemble Deep CNN-based Classifier

Oct 19, 2020

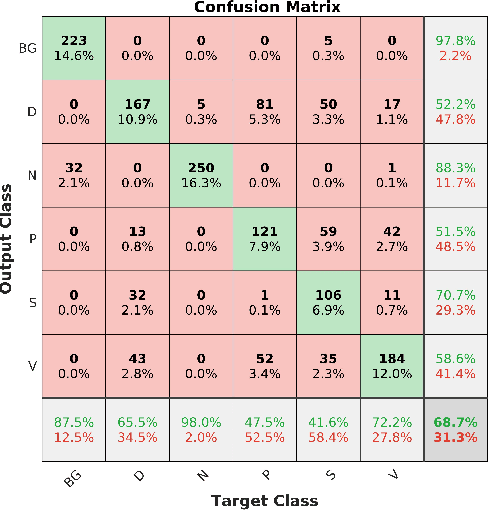

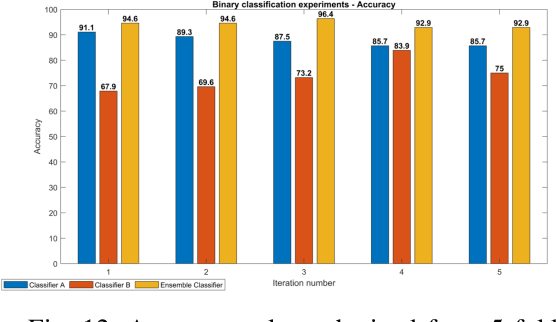

Abstract: Acute and chronic wounds are a challenge to healthcare systems around the world and affect many people's lives annually. Wound classification is a key step in wound diagnosis that helps clinicians identify an optimal treatment procedure. Hence, a high-performance classifier can help specialists in the field classify wounds at lower financial and time cost. Different machine learning and deep learning-based wound classification methods have been proposed in the literature. In this study, we developed an ensemble Deep Convolutional Neural Network-based classifier to classify wound images, including surgical, diabetic, and venous ulcers, into multiple classes. The output classification scores of two classifiers (patch-wise and image-wise) are fed into a Multi-Layer Perceptron to provide superior classification performance. A 5-fold cross-validation approach is used to evaluate the proposed method. We obtained maximum and average classification accuracy values of 96.4% and 94.28% for binary and 91.9% and 87.7% for 3-class classification problems. The results show that our proposed method can be used effectively as a decision support system in the classification of wound images or other related clinical applications.
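The score-level ensemble described in this abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: patch scores are averaged per class (an assumption), the Multi-Layer Perceptron is reduced to a single linear layer with softmax, and the weights are hand-picked rather than learned. All function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def average_patch_scores(patch_scores):
    """Average per-class scores over all patches of one image (assumed aggregation)."""
    n = len(patch_scores)
    return [sum(column) / n for column in zip(*patch_scores)]


def fuse(patch_scores, image_scores, weights, bias):
    """Concatenate patch-wise and image-wise scores, then apply linear layer + softmax."""
    features = average_patch_scores(patch_scores) + list(image_scores)
    logits = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Hypothetical scores for 3 classes (surgical, diabetic, venous).
patch_scores = [[0.7, 0.2, 0.1], [0.5, 0.3, 0.2]]
image_scores = [0.6, 0.3, 0.1]

# Hand-picked weights: each class logit adds its patch-wise and image-wise score.
weights = [
    [1, 0, 0, 1, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 1],
]
bias = [0.0, 0.0, 0.0]

fused = fuse(patch_scores, image_scores, weights, bias)
predicted_class = fused.index(max(fused))
```

In practice the fusion weights would be learned from training data, which is what lets the combined classifier outperform either input classifier alone.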

Image Based Artificial Intelligence in Wound Assessment: A Systematic Review

Sep 15, 2020

Abstract: Efficient and effective assessment of acute and chronic wounds can help wound care teams in clinical practice greatly improve wound diagnosis, optimize treatment plans, ease the workload, and improve health-related quality of life for the patient population. While artificial intelligence (AI) has found wide applications in health-related sciences and technology, AI-based systems remain to be developed clinically and computationally for high-quality wound care. To this end, we have carried out a systematic review of intelligent image-based data analysis and system developments for wound assessment. Specifically, we provide an extensive review of research methods on wound measurement (segmentation) and wound diagnosis (classification). We also reviewed recent work on wound assessment systems (including hardware, software, and mobile apps). More than 250 articles were retrieved from various publication databases and online resources, and 115 of them were carefully selected to cover the breadth and depth of the most recent and relevant work.

A Mobile App for Wound Localization using Deep Learning

Sep 15, 2020

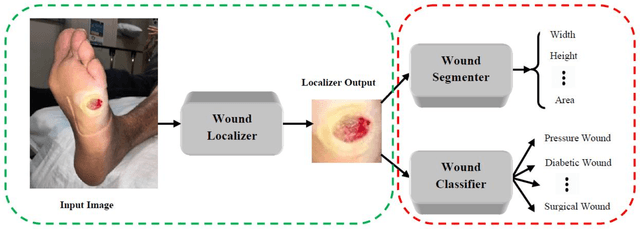

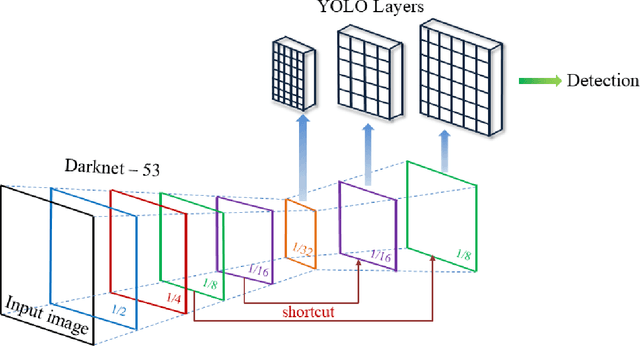

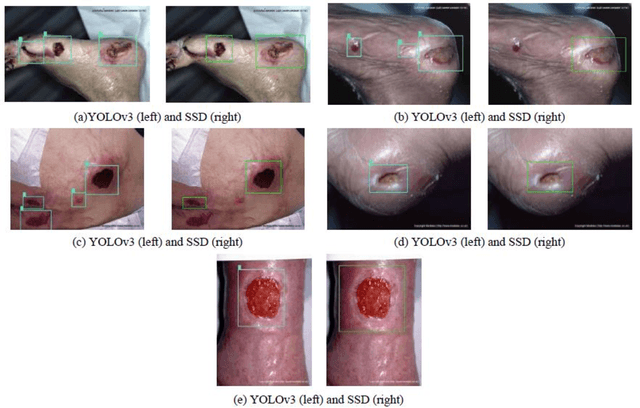

Abstract: We present an automated wound localizer for 2D wound and ulcer images that uses a deep neural network, as the first step towards building an automated and complete wound diagnostic system. The wound localizer was developed using the YOLOv3 model and then turned into an iOS mobile application. The localizer can detect the wound and its surrounding tissues and isolate the localized wounded region from images, which is helpful for later processing such as wound segmentation and classification because unnecessary regions are removed from the wound images. For mobile app development with video processing, a lighter version of YOLOv3 named tiny-YOLOv3 has been used. The model is trained and tested on our own image dataset in collaboration with AZH Wound and Vascular Center, Milwaukee, Wisconsin. The YOLOv3 model is compared with the SSD model, showing that YOLOv3 gives a mAP value of 93.9%, which is much better than the SSD model (86.4%). The robustness and reliability of these models are also tested on a publicly available dataset named Medetec, where they show very good performance as well.
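Detection metrics such as mAP rest on matching predicted boxes to ground-truth boxes by intersection over union (IoU). The sketch below shows that building block only; the `(x1, y1, x2, y2)` box format, the function names, and the 0.5 threshold are assumptions for illustration and are not taken from the abstract.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped at zero when boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(predicted, ground_truth, threshold=0.5):
    """Count a detection as correct when its IoU with the ground truth meets the threshold."""
    return iou(predicted, ground_truth) >= threshold


# Hypothetical boxes in pixel coordinates.
ground_truth = (10, 10, 110, 110)
good_detection = (20, 20, 120, 120)
poor_detection = (90, 90, 190, 190)

good_match = is_true_positive(good_detection, ground_truth)
poor_match = is_true_positive(poor_detection, ground_truth)
```

Average precision is then computed per class from the ranked list of true and false positives, and mAP is the mean of those per-class values.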
