Yusheng Zhang

NTIRE 2023 Quality Assessment of Video Enhancement Challenge

Jul 19, 2023

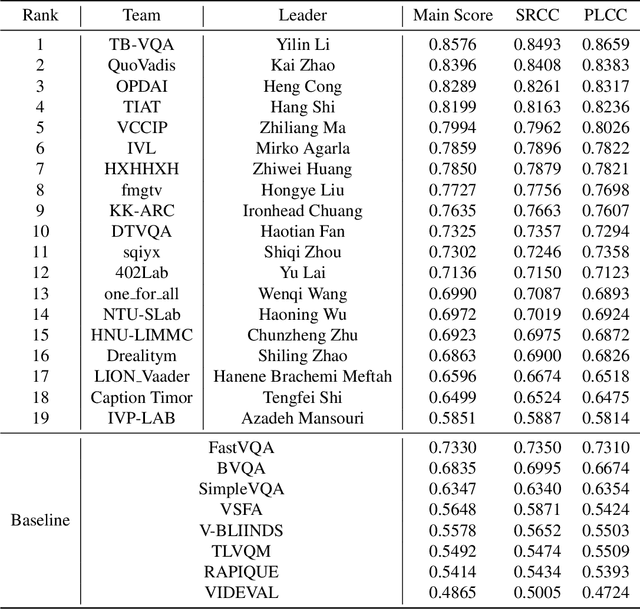

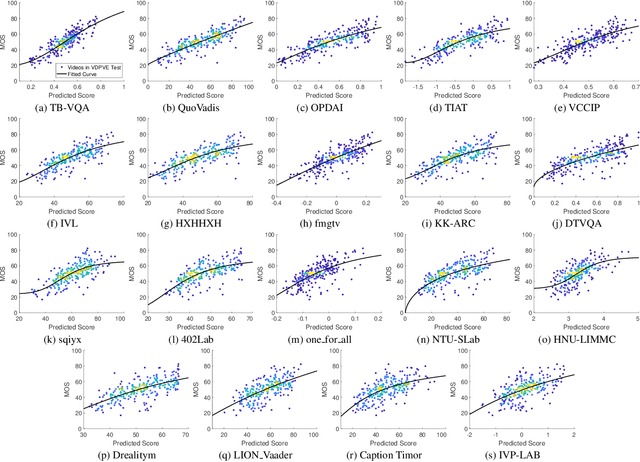

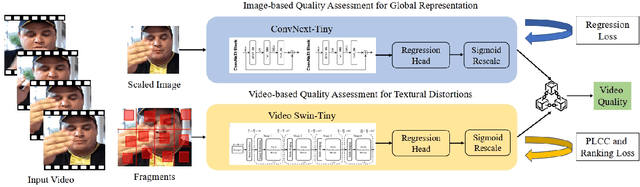

Abstract: This paper reports on the NTIRE 2023 Quality Assessment of Video Enhancement Challenge, which will be held in conjunction with the New Trends in Image Restoration and Enhancement Workshop (NTIRE) at CVPR 2023. The challenge addresses a major problem in video processing: video quality assessment (VQA) for enhanced videos. It uses the VQA Dataset for Perceptual Video Enhancement (VDPVE), which contains 1211 enhanced videos: 600 videos with color, brightness, and contrast enhancements, 310 deblurred videos, and 301 deshaked videos. The challenge had 167 registered participants. During the development phase, 61 teams submitted prediction results, for a total of 3168 submissions. During the final testing phase, 37 teams made a total of 176 submissions. Finally, 19 teams submitted their models and fact sheets and described the methods they used. Some methods achieved better results than the baseline methods, and the winning methods demonstrated superior prediction performance.

The Monocular Depth Estimation Challenge

Nov 22, 2022

Abstract: This paper summarizes the results of the first Monocular Depth Estimation Challenge (MDEC), organized at WACV 2023. The challenge evaluated the progress of self-supervised monocular depth estimation on the challenging SYNS-Patches dataset. It was organized on CodaLab and received submissions from 4 valid teams. Participants were provided with a devkit containing updated reference implementations for 16 State-of-the-Art algorithms and 4 novel techniques. The threshold for acceptance of novel techniques was to outperform every one of the 16 SotA baselines. All participants outperformed the baseline in traditional metrics such as MAE or AbsRel. However, pointcloud reconstruction metrics were challenging to improve upon. We found that predictions were characterized by interpolation artefacts at object boundaries and errors in relative object positioning. We hope this challenge is a valuable contribution to the community and encourage authors to participate in future editions.
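The traditional metrics named in the abstract, MAE and AbsRel, can be illustrated with a minimal sketch. It assumes the usual definitions (mean absolute error, and mean absolute error relative to the ground-truth depth) and is not the challenge's evaluation code; the function name `depth_errors` and the rule of skipping non-positive ground-truth values are assumptions made for illustration.

```python
def depth_errors(pred, target):
    """Return (MAE, AbsRel) over pixels with valid ground-truth depth.

    Assumption: a ground-truth depth <= 0 marks an invalid pixel and is skipped.
    """
    pairs = [(p, t) for p, t in zip(pred, target) if t > 0]
    if not pairs:
        raise ValueError("no valid ground-truth depths")
    n = len(pairs)
    # MAE: mean of |prediction - ground truth|
    mae = sum(abs(p - t) for p, t in pairs) / n
    # AbsRel: mean of |prediction - ground truth| / ground truth
    abs_rel = sum(abs(p - t) / t for p, t in pairs) / n
    return mae, abs_rel


# Hypothetical flattened depth maps, in metres.
mae, abs_rel = depth_errors([2.0, 4.0, 5.0], [1.0, 4.0, 10.0])
print(mae, abs_rel)
```

AbsRel normalizes each error by the true depth, so a fixed absolute error counts for more on nearby surfaces than on distant ones.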

Image Quality Assessment with Gradient Siamese Network

Aug 08, 2022

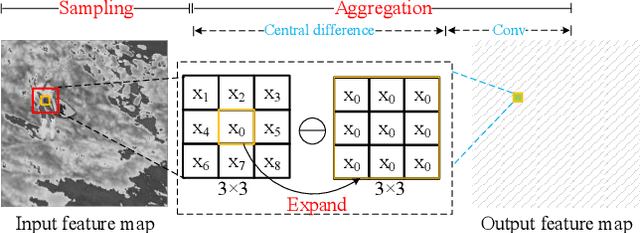

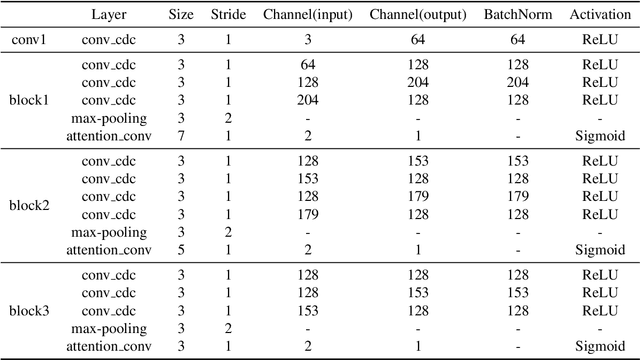

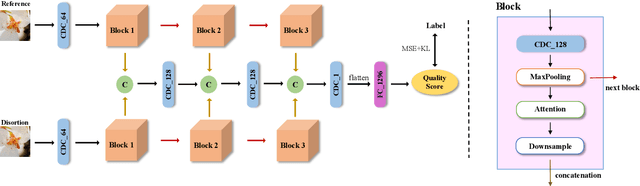

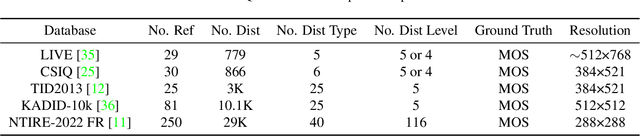

Abstract: In this work, we introduce the Gradient Siamese Network (GSN) for image quality assessment. The proposed method captures the gradient features between distorted images and reference images in the full-reference image quality assessment (IQA) task. We utilize Central Differential Convolution to obtain both the semantic features and the detail differences hidden in an image pair. Furthermore, spatial attention guides the network to concentrate on regions related to image detail. For the low-level, mid-level, and high-level features extracted by the network, we design a multi-level fusion method to improve the efficiency of feature utilization. In addition to the common mean square error supervision, we further consider the relative distance among batch samples and apply a KL divergence loss to the image quality assessment task. We evaluated the proposed algorithm, GSN, on several publicly available datasets and demonstrated its superior performance. Our network won second place in the NTIRE 2022 Perceptual Image Quality Assessment Challenge, Track 1 (Full-Reference).

* 10 pages, 5 figures, Computer Vision and Pattern Recognition (CVPR) Workshops
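The Central Differential Convolution mentioned in the GSN abstract can be sketched as follows. This is a minimal single-channel illustration of the commonly used central difference formulation, in which a standard convolution response is reduced by `theta` times the center pixel times the sum of the kernel weights; it is not the authors' implementation. The function name, the `theta` default, and the "valid" (no padding) boundary handling are assumptions.

```python
def central_difference_conv2d(image, kernel, theta=0.7):
    """Single-channel central difference convolution with no padding.

    out(p0) = sum_n w(pn) * x(p0 + pn) - theta * x(p0) * sum_n w(pn)
    theta = 0 gives a plain convolution (cross-correlation form);
    theta = 1 responds only to differences from the center pixel.
    """
    kh, kw = len(kernel), len(kernel[0])
    ch, cw = kh // 2, kw // 2  # offset of the kernel center
    kernel_sum = sum(sum(row) for row in kernel)
    out = []
    for i in range(len(image) - kh + 1):
        out_row = []
        for j in range(len(image[0]) - kw + 1):
            # Standard weighted sum over the local window.
            vanilla = sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(kh)
                for b in range(kw)
            )
            center = image[i + ch][j + cw]
            out_row.append(vanilla - theta * center * kernel_sum)
        out.append(out_row)
    return out


# Hypothetical inputs: a flat 3x3 patch and an all-ones 3x3 kernel.
flat = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
print(central_difference_conv2d(flat, ones, theta=1))
```

With `theta=1`, a flat patch yields a zero response because every neighbor equals the center, which is why this operator emphasizes gradient-like detail rather than absolute intensity.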
