Yunyi Liu

A Review of Longitudinal Radiology Report Generation: Dataset Composition, Methods, and Performance Evaluation

Oct 14, 2025

Abstract:Chest X-ray imaging is a widely used diagnostic tool in modern medicine, and its high utilization creates substantial workloads for radiologists. To alleviate this burden, vision-language models are increasingly applied to automate chest X-ray radiology report generation (CXR-RRG), aiming for clinically accurate descriptions while reducing manual effort. Conventional approaches, however, typically rely on single images, failing to capture the longitudinal context necessary for producing clinically faithful comparison statements. Recently, growing attention has been directed toward incorporating longitudinal data into CXR-RRG, enabling models to leverage historical studies in ways that mirror radiologists' diagnostic workflows. Nevertheless, existing surveys primarily address single-image CXR-RRG and offer limited guidance for longitudinal settings, leaving researchers without a systematic framework for model design. To address this gap, this survey provides the first comprehensive review of longitudinal radiology report generation (LRRG). Specifically, we examine dataset construction strategies, report generation architectures alongside longitudinally tailored designs, and evaluation protocols encompassing both longitudinal-specific measures and widely used benchmarks. We further summarize the performance of LRRG methods, alongside analyses of different ablation studies, which collectively highlight the critical role of longitudinal information and architectural design choices in improving model performance. Finally, we summarize five major limitations of current research and outline promising directions for future development, aiming to lay a foundation for advancing this emerging field.

Text2Move: Text-to-moving sound generation via trajectory prediction and temporal alignment

Sep 26, 2025

Abstract:Human auditory perception is shaped by moving sound sources in 3D space, yet prior work in generative sound modelling has largely been restricted to mono signals or static spatial audio. In this work, we introduce a framework for generating moving sounds given text prompts in a controllable fashion. To enable training, we construct a synthetic dataset that records moving sounds in binaural format, their spatial trajectories, and text captions about the sound event and spatial motion. Using this dataset, we train a text-to-trajectory prediction model that outputs the three-dimensional trajectory of a moving sound source given text prompts. To generate spatial audio, we first fine-tune a pre-trained text-to-audio generative model to output mono sound that is temporally aligned with the trajectory. The spatial audio is then simulated using the predicted temporally aligned trajectory. Experimental evaluation demonstrates reasonable spatial understanding of the text-to-trajectory model. This approach could be easily integrated into existing text-to-audio generative workflows and extended to moving sound generation in other spatial audio formats.

DiN: Diffusion Model for Robust Medical VQA with Semantic Noisy Labels

Mar 24, 2025

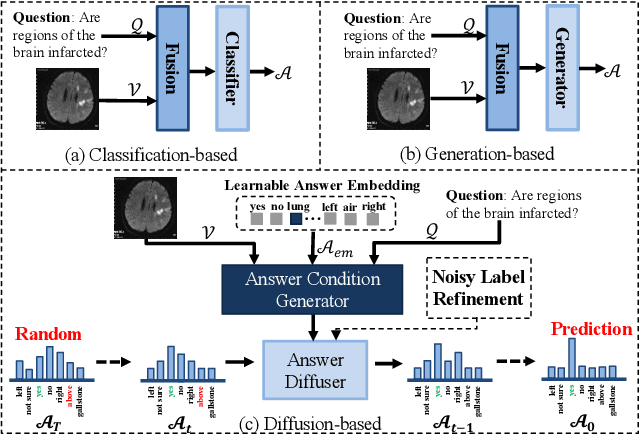

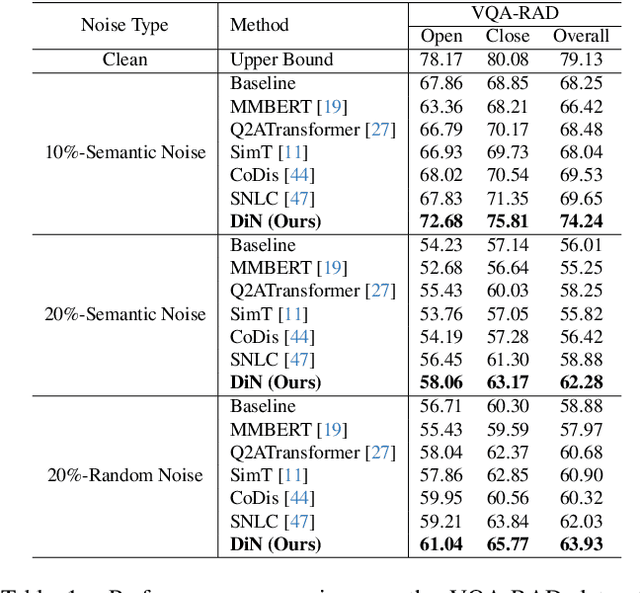

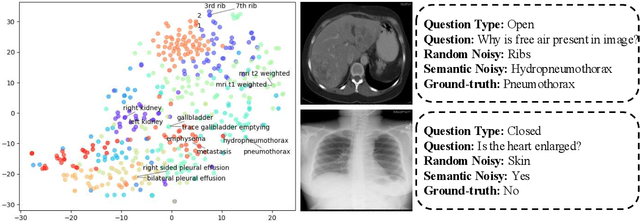

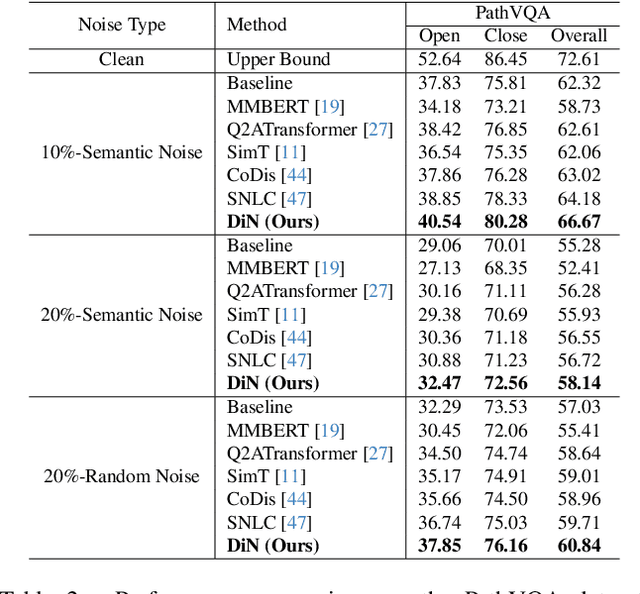

Abstract:Medical Visual Question Answering (Med-VQA) systems benefit the interpretation of medical images containing critical clinical information. However, the challenge of noisy labels and limited high-quality datasets remains underexplored. To address this, we establish the first benchmark for noisy labels in Med-VQA by simulating human mislabeling with semantically designed noise types. More importantly, we introduce the DiN framework, which leverages a diffusion model to handle noisy labels in Med-VQA. Unlike the dominant classification-based VQA approaches that directly predict answers, our Answer Diffuser (AD) module employs a coarse-to-fine process, refining answer candidates with a diffusion model for improved accuracy. The Answer Condition Generator (ACG) further enhances this process by generating task-specific conditional information via integrating answer embeddings with fused image-question features. To address label noise, our Noisy Label Refinement (NLR) module introduces a robust loss function and dynamic answer adjustment to further boost the performance of the AD module.

Simi-SFX: A similarity-based conditioning method for controllable sound effect synthesis

Dec 25, 2024

Abstract:Generating sound effects with controllable variations is a challenging task, traditionally addressed using sophisticated physical models that require in-depth knowledge of signal processing parameters and algorithms. In the era of generative and large language models, text has emerged as a common, human-interpretable interface for controlling sound synthesis. However, the discrete and qualitative nature of language tokens makes it difficult to capture subtle timbral variations across different sounds. In this research, we propose a novel similarity-based conditioning method for sound synthesis, leveraging differentiable digital signal processing (DDSP). This approach combines the use of latent space for learning and controlling audio timbre with an intuitive guiding vector, normalized within the range [0,1], to encode categorical acoustic information. By utilizing pre-trained audio representation models, our method achieves expressive and fine-grained timbre control. To benchmark our approach, we introduce two sound effect datasets, Footstep-set and Impact-set, designed to evaluate both controllability and sound quality. Regression analysis demonstrates that the proposed similarity score effectively controls timbre variations and enables creative applications such as timbre interpolation between discrete classes. Our work provides a robust and versatile framework for sound effect synthesis, bridging the gap between traditional signal processing and modern machine learning techniques.

ER2Score: LLM-based Explainable and Customizable Metric for Assessing Radiology Reports with Reward-Control Loss

Nov 26, 2024

Abstract:Automated radiology report generation (R2Gen) has advanced significantly, introducing challenges in accurate evaluation due to its complexity. Traditional metrics often fall short by relying on rigid word-matching or focusing only on pathological entities, leading to inconsistencies with human assessments. To bridge this gap, we introduce ER2Score, an automatic evaluation metric designed specifically for R2Gen. Our metric utilizes a reward model, guided by our margin-based reward enforcement loss, along with a tailored training data design that enables customization of evaluation criteria to suit user-defined needs. It not only scores reports according to user-specified criteria but also provides detailed sub-scores, enhancing interpretability and allowing users to adjust the criteria between different aspects of reports. Leveraging GPT-4, we designed an easy-to-use data generation pipeline, enabling us to produce extensive training data based on two distinct scoring systems, each containing reports of varying quality along with corresponding scores. These GPT-generated reports are then paired as accepted and rejected samples through our pairing rule to train an LLM towards our fine-grained reward model, which assigns higher rewards to the higher-quality report. Our reward-control loss enables this model to simultaneously output multiple individual rewards corresponding to the number of evaluation criteria, with their summation as our final ER2Score. Our experiments demonstrate ER2Score's heightened correlation with human judgments and superior performance in model selection compared to traditional metrics. Notably, our model provides both an overall score and individual scores for each evaluation item, enhancing interpretability. We also demonstrate its flexible training across various evaluation systems.

Deep Learning-Based Fatigue Cracks Detection in Bridge Girders using Feature Pyramid Networks

Oct 28, 2024

Abstract:For structural health monitoring, continuous and automatic crack detection has been a challenging problem. This study proposes a framework for automatic crack segmentation from high-resolution images containing crack information about steel box girders of bridges. Considering the multi-scale nature of cracks, a convolutional neural network architecture based on Feature Pyramid Networks (FPN) is proposed for crack detection. As input, 120 raw images are processed via two approaches (shrinking the size of images and splitting images into sub-images). Then, models with the proposed FPN structure for crack detection are developed. The results show that all developed models can automatically detect the cracks in the raw images. By shrinking the images, the computational efficiency is improved without decreasing accuracy. Because of the separable characteristic of cracks, models using the splitting method provide more accurate crack segmentations than models using the resizing method. Therefore, for high-resolution images, the FPN structure coupled with the splitting method is a promising solution for crack segmentation and detection.

KARGEN: Knowledge-enhanced Automated Radiology Report Generation Using Large Language Models

Sep 09, 2024

Abstract:Harnessing the robust capabilities of Large Language Models (LLMs) for narrative generation, logical reasoning, and common-sense knowledge integration, this study delves into utilizing LLMs to enhance automated radiology report generation (R2Gen). Despite the wealth of knowledge within LLMs, efficiently triggering relevant knowledge within these large models for specific tasks like R2Gen poses a critical research challenge. This paper presents KARGEN, a Knowledge-enhanced Automated radiology Report GENeration framework based on LLMs. Utilizing a frozen LLM to generate reports, the framework integrates a knowledge graph to unlock chest disease-related knowledge within the LLM to enhance the clinical utility of generated reports. This is achieved by leveraging the knowledge graph to distill disease-related features in a designed way. Since a radiology report encompasses both normal and disease-related findings, the extracted graph-enhanced disease-related features are integrated with regional image features, attending to both aspects. We explore two fusion methods to automatically prioritize and select the most relevant features. The fused features are employed by the LLM to generate reports that are more sensitive to diseases and of improved quality. Our approach demonstrates promising results on the MIMIC-CXR and IU-Xray datasets.

ICGAN: An implicit conditioning method for interpretable feature control of neural audio synthesis

Jun 11, 2024

Abstract:Neural audio synthesis methods can achieve high-fidelity and realistic sound generation by utilizing deep generative models. Such models typically rely on external labels, which are often discrete, as conditioning information to achieve guided sound generation. However, it remains difficult to control the subtle changes in sounds without appropriate and descriptive labels, especially given a limited dataset. This paper proposes an implicit conditioning method for neural audio synthesis using generative adversarial networks that allows for interpretable control of the acoustic features of synthesized sounds. Our technique creates a continuous conditioning space that enables timbre manipulation without relying on explicit labels. We further introduce an evaluation metric to explore controllability and demonstrate that our approach is effective in enabling a degree of controlled variation of different synthesized sound effects for in-domain and cross-domain sounds.

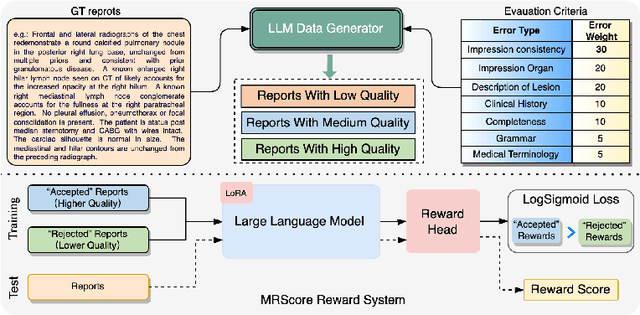

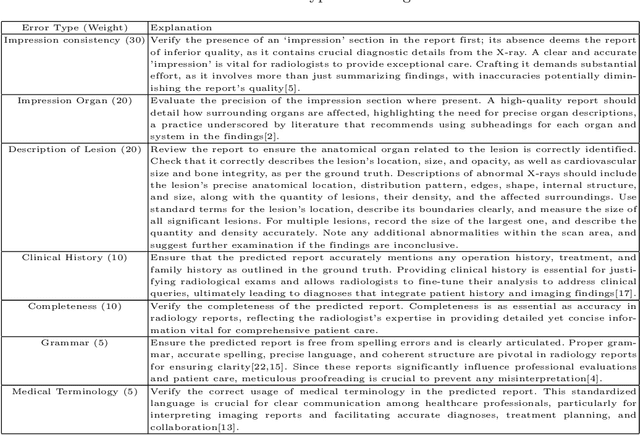

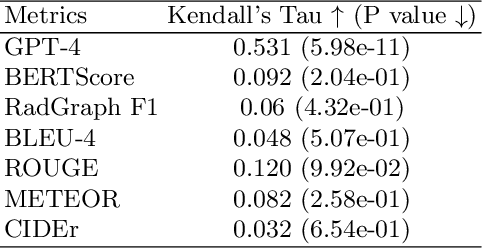

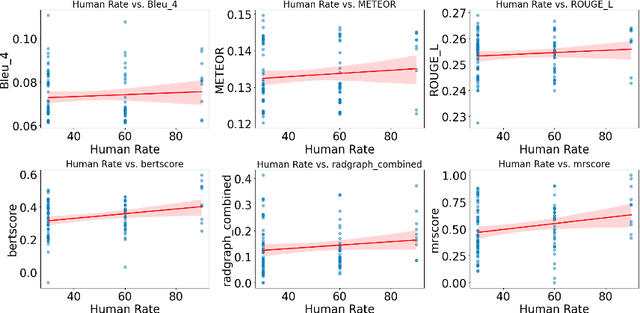

MRScore: Evaluating Radiology Report Generation with LLM-based Reward System

Apr 27, 2024

Abstract:In recent years, automated radiology report generation has experienced significant growth. This paper introduces MRScore, an automatic evaluation metric tailored for radiology report generation by leveraging Large Language Models (LLMs). Conventional NLG (natural language generation) metrics like BLEU are inadequate for accurately assessing the generated radiology reports, as systematically demonstrated by our observations within this paper. To address this challenge, we collaborated with radiologists to develop a framework that guides LLMs for radiology report evaluation, ensuring alignment with human analysis. Our framework includes two key components: i) utilizing GPT to generate large amounts of training data, i.e., reports with different qualities, and ii) pairing GPT-generated reports as accepted and rejected samples and training LLMs to produce MRScore as the model reward. Our experiments demonstrate MRScore's higher correlation with human judgments and superior performance in model selection compared to traditional metrics. Our code and datasets will be available on GitHub.

A Comprehensive Study of GPT-4V's Multimodal Capabilities in Medical Imaging

Nov 06, 2023

Abstract:This paper presents a comprehensive evaluation of GPT-4V's capabilities across diverse medical imaging tasks, including Radiology Report Generation, Medical Visual Question Answering (VQA), and Visual Grounding. While prior efforts have explored GPT-4V's performance in medical image analysis, to the best of our knowledge, our study represents the first quantitative evaluation on publicly available benchmarks. Our findings highlight GPT-4V's potential in generating descriptive reports for chest X-ray images, particularly when guided by well-structured prompts. Meanwhile, its performance on the MIMIC-CXR dataset benchmark reveals areas for improvement in certain evaluation metrics, such as CIDEr. In the domain of Medical VQA, GPT-4V demonstrates proficiency in distinguishing between question types but falls short of the VQA-RAD benchmark in terms of accuracy. Furthermore, our analysis reveals the limitations of conventional evaluation metrics like the BLEU scores, advocating for the development of more semantically robust assessment methods. In the field of Visual Grounding, GPT-4V exhibits preliminary promise in recognizing bounding boxes, but its precision is lacking, especially in identifying specific medical organs and signs. Our evaluation underscores the significant potential of GPT-4V in the medical imaging domain, while also emphasizing the need for targeted refinements to fully unlock its capabilities.
