Yunwen Lei

University of Birmingham

Generalization and Optimization of SGD with Lookahead

Sep 19, 2025

Abstract:The Lookahead optimizer enhances deep learning models by employing a dual-weight update mechanism, which has been shown to improve the performance of underlying optimizers such as SGD. However, most theoretical studies focus on its convergence on training data, leaving its generalization capabilities less understood. Existing generalization analyses are often limited by restrictive assumptions, such as requiring the loss function to be globally Lipschitz continuous, and their bounds do not fully capture the relationship between optimization and generalization. In this paper, we address these issues by conducting a rigorous stability and generalization analysis of the Lookahead optimizer with minibatch SGD. We leverage on-average model stability to derive generalization bounds for both convex and strongly convex problems without the restrictive Lipschitzness assumption. Our analysis demonstrates a linear speedup with respect to the batch size in the convex setting.

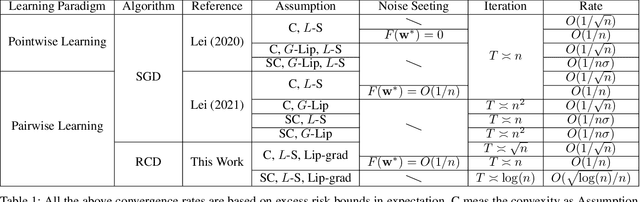

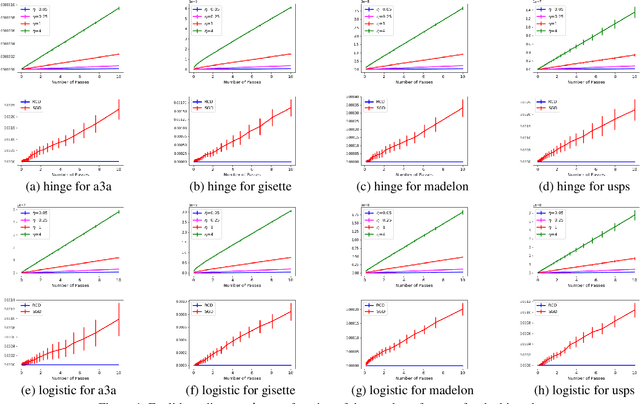

Stability-based Generalization Analysis of Randomized Coordinate Descent for Pairwise Learning

Mar 03, 2025

Abstract:Pairwise learning includes various machine learning tasks, with ranking and metric learning serving as the primary representatives. While randomized coordinate descent (RCD) is popular in various learning problems, there is much less theoretical analysis on the generalization behavior of models trained by RCD, especially under the pairwise learning framework. In this paper, we consider the generalization of RCD for pairwise learning. We measure the on-average argument stability for both convex and strongly convex objective functions, based on which we develop generalization bounds in expectation. The early-stopping strategy is adopted to quantify the balance between estimation and optimization. Our analysis further incorporates the low-noise setting into the excess risk bound to achieve the optimistic bound as $O(1/n)$, where $n$ is the sample size.

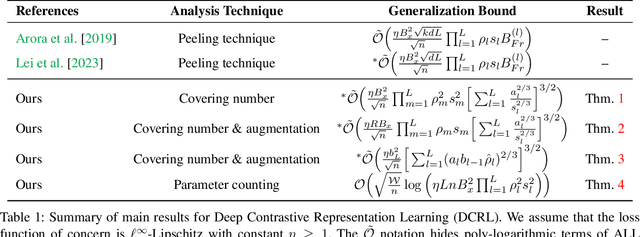

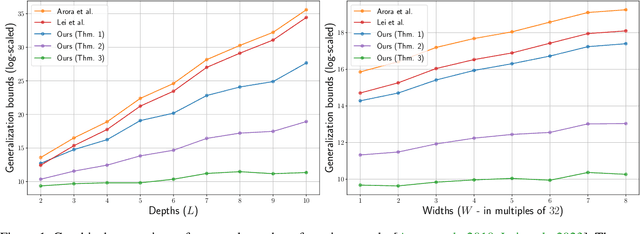

Generalization Analysis for Deep Contrastive Representation Learning

Dec 16, 2024

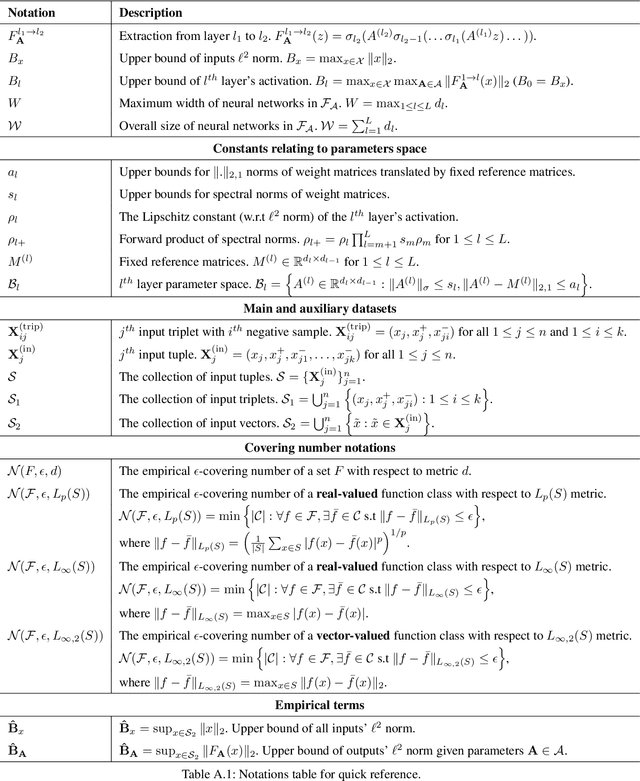

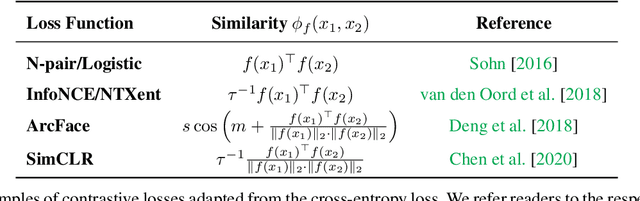

Abstract:In this paper, we present generalization bounds for the unsupervised risk in the Deep Contrastive Representation Learning framework, which employs deep neural networks as representation functions. We approach this problem from two angles. On the one hand, we derive a parameter-counting bound that scales with the overall size of the neural networks. On the other hand, we provide a norm-based bound that scales with the norms of neural networks' weight matrices. Ignoring logarithmic factors, the bounds are independent of $k$, the size of the tuples provided for contrastive learning. To the best of our knowledge, this property is only shared by one other work, which employed a different proof strategy and suffers from very strong exponential dependence on the depth of the network which is due to a use of the peeling technique. Our results circumvent this by leveraging powerful results on covering numbers with respect to uniform norms over samples. In addition, we utilize loss augmentation techniques to further reduce the dependency on matrix norms and the implicit dependence on network depth. In fact, our techniques allow us to produce many bounds for the contrastive learning setting with similar architectural dependencies as in the study of the sample complexity of ordinary loss functions, thereby bridging the gap between the learning theories of contrastive learning and DNNs.

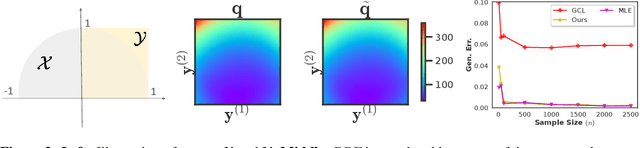

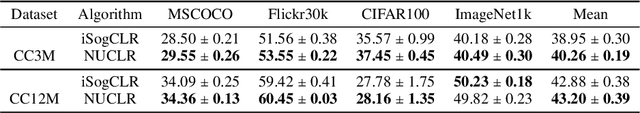

On Discriminative Probabilistic Modeling for Self-Supervised Representation Learning

Oct 11, 2024

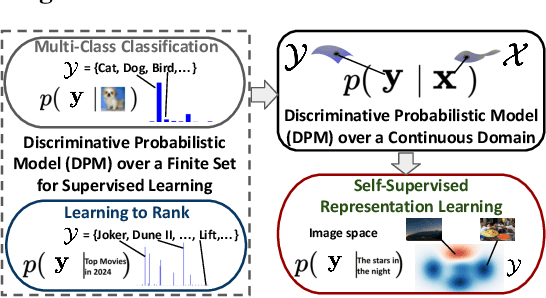

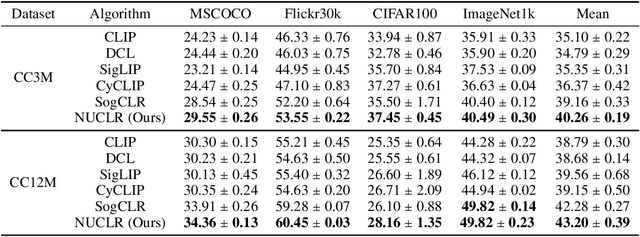

Abstract:We study the discriminative probabilistic modeling problem on a continuous domain for (multimodal) self-supervised representation learning. To address the challenge of computing the integral in the partition function for each anchor data, we leverage the multiple importance sampling (MIS) technique for robust Monte Carlo integration, which can recover InfoNCE-based contrastive loss as a special case. Within this probabilistic modeling framework, we conduct generalization error analysis to reveal the limitation of current InfoNCE-based contrastive loss for self-supervised representation learning and derive insights for developing better approaches by reducing the error of Monte Carlo integration. To this end, we propose a novel non-parametric method for approximating the sum of conditional densities required by MIS through convex optimization, yielding a new contrastive objective for self-supervised representation learning. Moreover, we design an efficient algorithm for solving the proposed objective. We empirically compare our algorithm to representative baselines on the contrastive image-language pretraining task. Experimental results on the CC3M and CC12M datasets demonstrate the superior overall performance of our algorithm.

Bootstrap SGD: Algorithmic Stability and Robustness

Sep 02, 2024

Abstract:In this paper some methods to use the empirical bootstrap approach for stochastic gradient descent (SGD) to minimize the empirical risk over a separable Hilbert space are investigated from the view point of algorithmic stability and statistical robustness. The first two types of approaches are based on averages and are investigated from a theoretical point of view. A generalization analysis for bootstrap SGD of Type 1 and Type 2 based on algorithmic stability is done. Another type of bootstrap SGD is proposed to demonstrate that it is possible to construct purely distribution-free pointwise confidence intervals of the median curve using bootstrap SGD.

Optimizing ADMM and Over-Relaxed ADMM Parameters for Linear Quadratic Problems

Jan 01, 2024

Abstract:The Alternating Direction Method of Multipliers (ADMM) has gained significant attention across a broad spectrum of machine learning applications. Incorporating the over-relaxation technique shows potential for enhancing the convergence rate of ADMM. However, determining optimal algorithmic parameters, including both the associated penalty and relaxation parameters, often relies on empirical approaches tailored to specific problem domains and contextual scenarios. Incorrect parameter selection can significantly hinder ADMM's convergence rate. To address this challenge, in this paper we first propose a general approach to optimize the value of penalty parameter, followed by a novel closed-form formula to compute the optimal relaxation parameter in the context of linear quadratic problems (LQPs). We then experimentally validate our parameter selection methods through random instantiations and diverse imaging applications, encompassing diffeomorphic image registration, image deblurring, and MRI reconstruction.

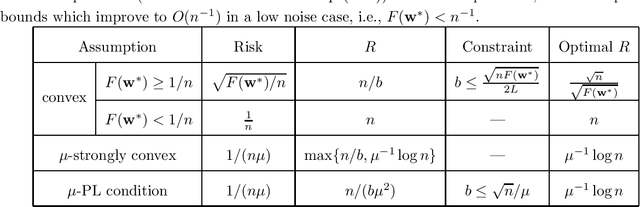

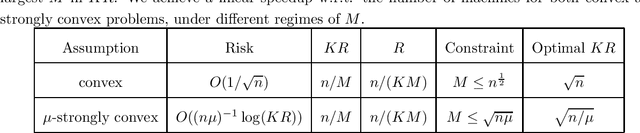

Stability and Generalization for Minibatch SGD and Local SGD

Oct 02, 2023

Abstract:The increasing scale of data propels the popularity of leveraging parallelism to speed up the optimization. Minibatch stochastic gradient descent (minibatch SGD) and local SGD are two popular methods for parallel optimization. The existing theoretical studies show a linear speedup of these methods with respect to the number of machines, which, however, is measured by optimization errors. As a comparison, the stability and generalization of these methods are much less studied. In this paper, we pioneer the stability and generalization analysis of minibatch and local SGD to understand their learnability. We incorporate training errors into the stability analysis, which shows how small training errors help generalization for overparameterized models. Our stability bounds imply optimistic risk bounds which decay fast under a low noise condition. We show both minibatch and local SGD achieve a linear speedup to attain the optimal risk bounds.

Generalization Guarantees of Gradient Descent for Multi-Layer Neural Networks

May 26, 2023

Abstract:Recently, significant progress has been made in understanding the generalization of neural networks (NNs) trained by gradient descent (GD) using the algorithmic stability approach. However, most of the existing research has focused on one-hidden-layer NNs and has not addressed the impact of different network scaling parameters. In this paper, we greatly extend the previous work \cite{lei2022stability,richards2021stability} by conducting a comprehensive stability and generalization analysis of GD for multi-layer NNs. For two-layer NNs, our results are established under general network scaling parameters, relaxing previous conditions. In the case of three-layer NNs, our technical contribution lies in demonstrating its nearly co-coercive property by utilizing a novel induction strategy that thoroughly explores the effects of over-parameterization. As a direct application of our general findings, we derive the excess risk rate of $O(1/\sqrt{n})$ for GD algorithms in both two-layer and three-layer NNs. This sheds light on sufficient or necessary conditions for under-parameterized and over-parameterized NNs trained by GD to attain the desired risk rate of $O(1/\sqrt{n})$. Moreover, we demonstrate that as the scaling parameter increases or the network complexity decreases, less over-parameterization is required for GD to achieve the desired error rates. Additionally, under a low-noise condition, we obtain a fast risk rate of $O(1/n)$ for GD in both two-layer and three-layer NNs.

Generalization Analysis for Contrastive Representation Learning

Feb 28, 2023

Abstract:Recently, contrastive learning has found impressive success in advancing the state of the art in solving various machine learning tasks. However, the existing generalization analysis is very limited or even not meaningful. In particular, the existing generalization error bounds depend linearly on the number $k$ of negative examples while it was widely shown in practice that choosing a large $k$ is necessary to guarantee good generalization of contrastive learning in downstream tasks. In this paper, we establish novel generalization bounds for contrastive learning which do not depend on $k$, up to logarithmic terms. Our analysis uses structural results on empirical covering numbers and Rademacher complexities to exploit the Lipschitz continuity of loss functions. For self-bounding Lipschitz loss functions, we further improve our results by developing optimistic bounds which imply fast rates in a low noise condition. We apply our results to learning with both linear representation and nonlinear representation by deep neural networks, for both of which we derive Rademacher complexity bounds to get improved generalization bounds.

Generalization Bounds for Inductive Matrix Completion in Low-noise Settings

Dec 16, 2022

Abstract:We study inductive matrix completion (matrix completion with side information) under an i.i.d. subgaussian noise assumption at a low noise regime, with uniform sampling of the entries. We obtain for the first time generalization bounds with the following three properties: (1) they scale like the standard deviation of the noise and in particular approach zero in the exact recovery case; (2) even in the presence of noise, they converge to zero when the sample size approaches infinity; and (3) for a fixed dimension of the side information, they only have a logarithmic dependence on the size of the matrix. Differently from many works in approximate recovery, we present results both for bounded Lipschitz losses and for the absolute loss, with the latter relying on Talagrand-type inequalities. The proofs create a bridge between two approaches to the theoretical analysis of matrix completion, since they consist in a combination of techniques from both the exact recovery literature and the approximate recovery literature.

* 30 Pages, 1 figure; Accepted for publication at AAAI 2023
