Yuan Zhuang

MTVHunter: Smart Contracts Vulnerability Detection Based on Multi-Teacher Knowledge Translation

Feb 24, 2025

Abstract:Smart contracts, closely intertwined with cryptocurrency transactions, have sparked widespread concern about considerable financial losses caused by security issues. To counteract this, a variety of tools have been developed to identify vulnerabilities in smart contracts. However, they fail to overcome two challenges at the same time when faced with smart contract bytecode: (i) strong interference caused by an enormous number of non-relevant instructions; (ii) missing semantics of bytecode due to incomplete data and control flow dependencies. In this paper, we propose a multi-teacher based bytecode vulnerability detection method, namely Multi-Teacher Vulnerability Hunter (MTVHunter), which delivers effective denoising and supplies missing semantics to bytecode under multi-teacher guidance. Specifically, we first propose an instruction denoising teacher that eliminates noise interference by abstracting vulnerability patterns, which is further reflected in the contract embeddings. Secondly, we design a novel semantic complementary teacher with neuron distillation, which effectively extracts the necessary semantics from source code to replenish the bytecode. In particular, the proposed neuron distillation accelerates this semantic filling by turning the knowledge transition into a regression task. We conduct experiments on 229,178 real-world smart contracts that concern four types of common vulnerabilities. Extensive experiments show that MTVHunter achieves significant performance gains over state-of-the-art approaches.

A Robust and Efficient Visual-Inertial Initialization with Probabilistic Normal Epipolar Constraint

Oct 25, 2024

Abstract:Accurate and robust initialization is essential for Visual-Inertial Odometry (VIO), as poor initialization can severely degrade pose accuracy. During initialization, it is crucial to estimate parameters such as accelerometer bias, gyroscope bias, initial velocity, and gravity. The gyroscope bias in particular must be estimated precisely because it affects rotation, velocity, and position. Most existing VIO initialization methods adopt Structure from Motion (SfM) to solve for gyroscope bias. However, SfM is not stable and efficient enough in fast motion or degenerate scenes. To overcome these limitations, we extend the rotation-translation-decoupling framework by adding new uncertainty parameters and optimization modules. First, we adopt a gyroscope bias optimizer that incorporates probabilistic normal epipolar constraints. Second, we fuse IMU and visual measurements to solve for velocity, gravity, and scale efficiently. Finally, we design an additional refinement module that effectively diminishes gravity and scale errors. Extensive initialization tests on the EuRoC dataset show that our method reduces the gyroscope bias and rotation estimation errors by an average of 16% and 4%, respectively. It also significantly reduces the gravity error, with an average reduction of 29%.

Snail-Radar: A large-scale diverse dataset for the evaluation of 4D-radar-based SLAM systems

Jul 16, 2024

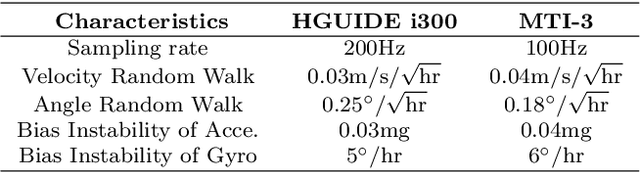

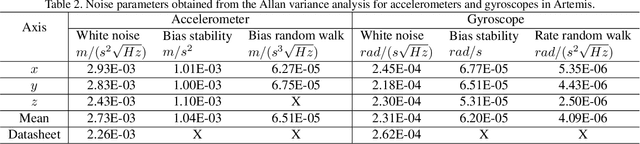

Abstract:4D radars are increasingly favored for odometry and mapping of autonomous systems due to their robustness in harsh weather and dynamic environments. Existing datasets, however, often cover limited areas and are typically captured using a single platform. To address this gap, we present a diverse large-scale dataset specifically designed for 4D radar-based localization and mapping. This dataset was gathered using three different platforms: a handheld device, an e-bike, and an SUV, under a variety of environmental conditions, including clear days, nighttime, and heavy rain. The data collection occurred from September 2023 to February 2024, encompassing diverse settings such as roads in a vegetated campus and tunnels on highways. Each route was traversed multiple times to facilitate place recognition evaluations. The sensor suite included a 3D lidar, 4D radars, stereo cameras, consumer-grade IMUs, and a GNSS/INS system. Sensor data packets were synchronized to GNSS time using a two-step process: a convex hull algorithm was applied to smooth host time jitter, and then odometry and correlation algorithms were used to correct constant time offsets. Extrinsic calibration between sensors was achieved through manual measurements and subsequent nonlinear optimization. The reference motion for the platforms was generated by registering lidar scans to a terrestrial laser scanner (TLS) point cloud map using a lidar inertial odometry (LIO) method in localization mode. Additionally, a data reversion technique was introduced to enable backward LIO processing. We believe this dataset will boost research in radar-based point cloud registration, odometry, mapping, and place recognition.
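The first synchronization step mentioned in the abstract, smoothing host time jitter with a convex hull, can be illustrated with a minimal sketch. It assumes that jitter only ever delays the host timestamp, so the lower convex hull of the (device time, host time) points approximates the jitter-free mapping. The function names `lower_convex_hull` and `smooth_host_times` are hypothetical, and this is not necessarily the exact procedure used for the dataset.

```python
import numpy as np

def lower_convex_hull(points):
    """Lower convex hull of 2D points, left to right (monotone chain)."""
    pts = sorted(set(map(tuple, points)))
    hull = []
    for p in pts:
        # Drop the last vertex while it lies on or above the line hull[-2] -> p.
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            cross = (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1)
            if cross <= 0:
                hull.pop()
            else:
                break
        hull.append(p)
    return np.array(hull)

def smooth_host_times(device_t, host_t):
    """Replace jittery host timestamps by the lower hull evaluated at device_t.

    Assumes device_t is strictly increasing and jitter is non-negative.
    """
    hull = lower_convex_hull(np.column_stack([device_t, host_t]))
    return np.interp(device_t, hull[:, 0], hull[:, 1])
```

Calling `smooth_host_times(device_t, host_t)` returns one smoothed host timestamp per packet. Any remaining constant offset would still have to be corrected separately, which the abstract attributes to odometry and correlation algorithms.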

Tightly-Coupled VLP/INS Integrated Navigation by Inclination Estimation and Blockage Handling

Apr 28, 2024

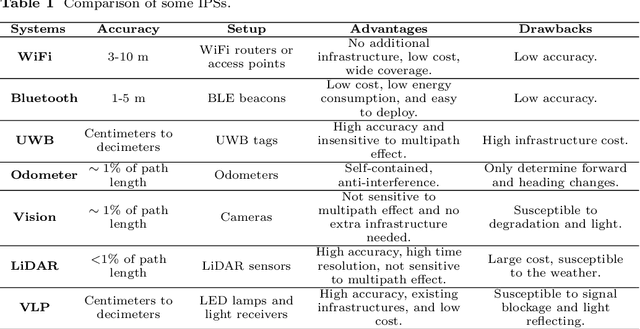

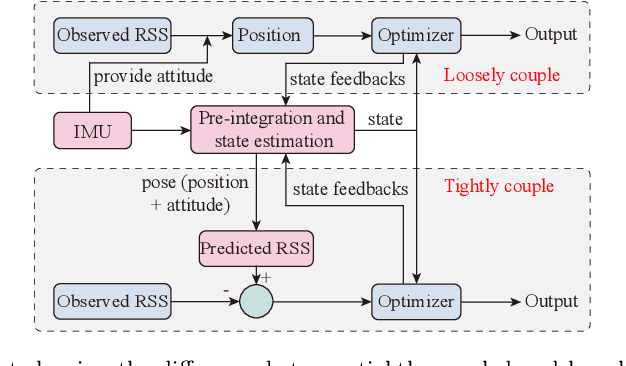

Abstract:Visible Light Positioning (VLP) has emerged as a promising technology capable of delivering indoor localization with high accuracy. In VLP systems that use Photodiodes (PDs) as light receivers, the Received Signal Strength (RSS) is affected by the incidence angle of light, making the inclination of PDs a critical parameter in the positioning model. Currently, most studies assume the inclination to be constant, which limits both the applications and the positioning accuracy. Additionally, light blockages may severely interfere with the RSS measurements, but the literature has not explored blockage detection in real-world experiments. To address these problems, we propose a tightly coupled VLP/INS (Inertial Navigation System) integrated navigation system that uses graph optimization to account for varying PD inclinations and VLP blockages. We also discuss the possibility of simultaneously estimating the robot's pose and the locations of some unknown LEDs. Simulations and two groups of real-world experiments demonstrate the efficiency of our approach, achieving an average positioning accuracy of 10 cm during movement and inclination accuracy within 1 degree despite inclination changes and blockages.
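To make the dependence of RSS on PD inclination concrete, here is a small sketch based on the standard Lambertian line-of-sight channel model, which is commonly used in the VLP literature. It is an assumption that this simplified model applies here: the function name `lambertian_rss`, its default parameters, and the omission of field-of-view limits and optical gains are illustrative choices, not the positioning model of the paper.

```python
import numpy as np

def lambertian_rss(led_pos, pd_pos, pd_normal, tx_power=1.0, pd_area=1e-4, m=1.0):
    """Received power at a photodiode from a ceiling LED facing straight down.

    m is the Lambertian order of the LED; pd_normal is the PD facing direction.
    """
    led_pos = np.asarray(led_pos, dtype=float)
    pd_pos = np.asarray(pd_pos, dtype=float)
    normal = np.asarray(pd_normal, dtype=float)
    normal = normal / np.linalg.norm(normal)

    to_led = led_pos - pd_pos               # vector from PD to LED
    dist = np.linalg.norm(to_led)
    cos_irradiance = to_led[2] / dist       # angle at the LED (LED axis is -z)
    cos_incidence = normal.dot(to_led) / dist  # angle at the PD
    if cos_irradiance <= 0 or cos_incidence <= 0:
        return 0.0                          # LED is outside the visible half-space
    gain = (m + 1) * pd_area / (2 * np.pi * dist**2)
    return tx_power * gain * cos_irradiance**m * cos_incidence
```

Under this model, tilting a PD that sits directly below the LED by 30 degrees scales the RSS by cos(30°), which shows why an unmodeled inclination change is read as a change in distance.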

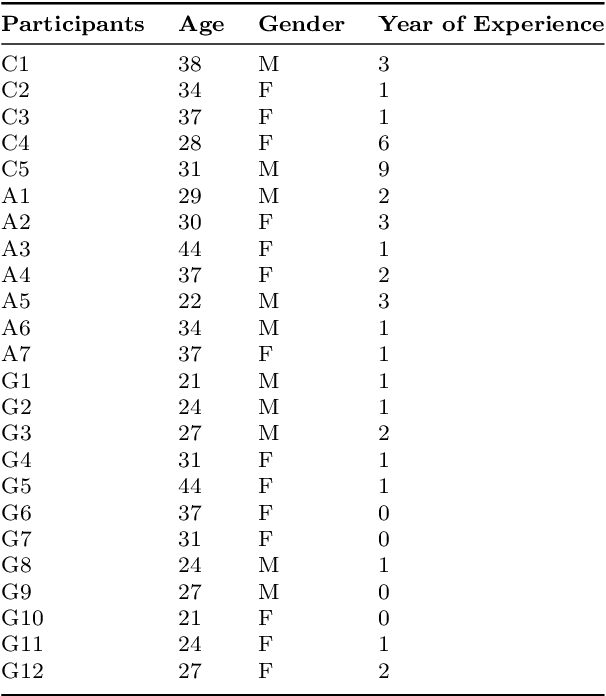

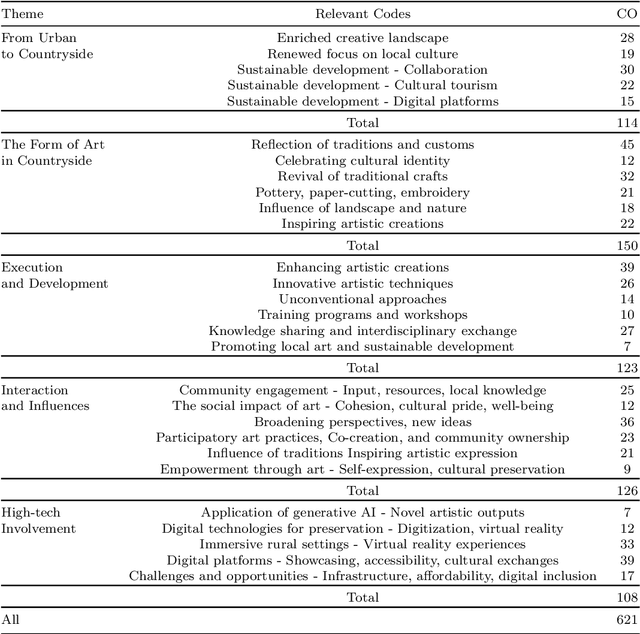

Exploring the Intersection of Complex Aesthetics and Generative AI for Promoting Cultural Creativity in Rural China after the Post-Pandemic Era

Sep 05, 2023

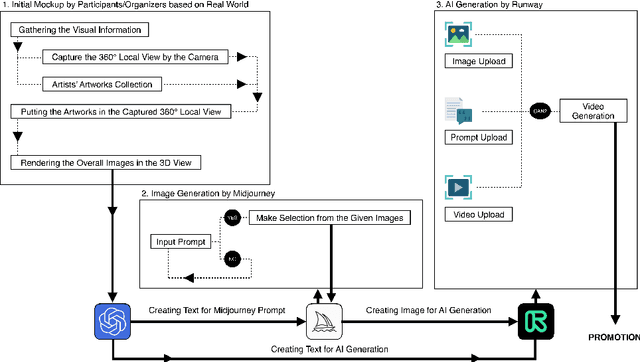

Abstract:This paper explores using generative AI and aesthetics to promote cultural creativity in rural China amidst COVID-19's impact. Through literature reviews, case studies, surveys, and text analysis, it examines art and technology applications in rural contexts and identifies key challenges. The study finds artworks often fail to resonate locally, while reliance on external artists limits sustainability. Hence, nurturing grassroots "artist villagers" through AI is proposed. Our approach involves training machine learning on subjective aesthetics to generate culturally relevant content. Interactive AI media can also boost tourism while preserving heritage. This pioneering research puts forth original perspectives on the intersection of AI and aesthetics to invigorate rural culture. It advocates holistic integration of technology and emphasizes AI's potential as a creative enabler versus replacement. Ultimately, it lays the groundwork for further exploration of leveraging AI innovations to empower rural communities. This timely study contributes to growing interest in emerging technologies to address critical issues facing rural China.

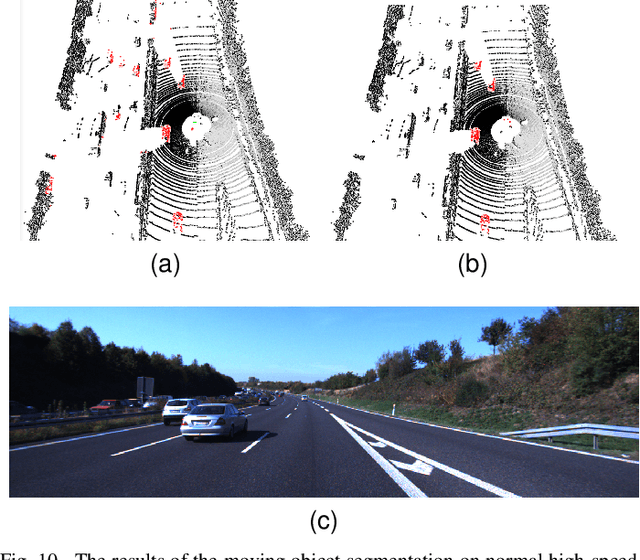

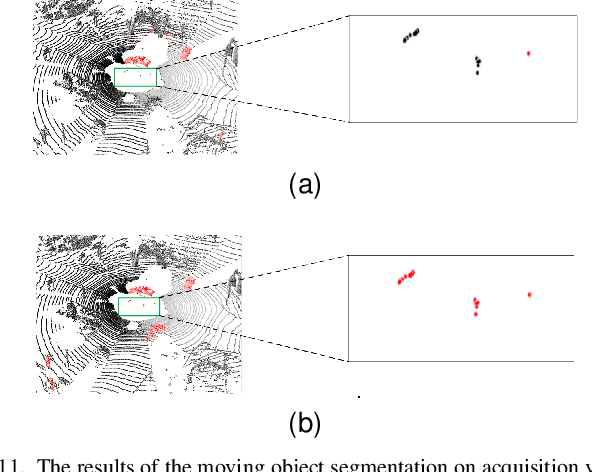

3D-SeqMOS: A Novel Sequential 3D Moving Object Segmentation in Autonomous Driving

Jul 18, 2023

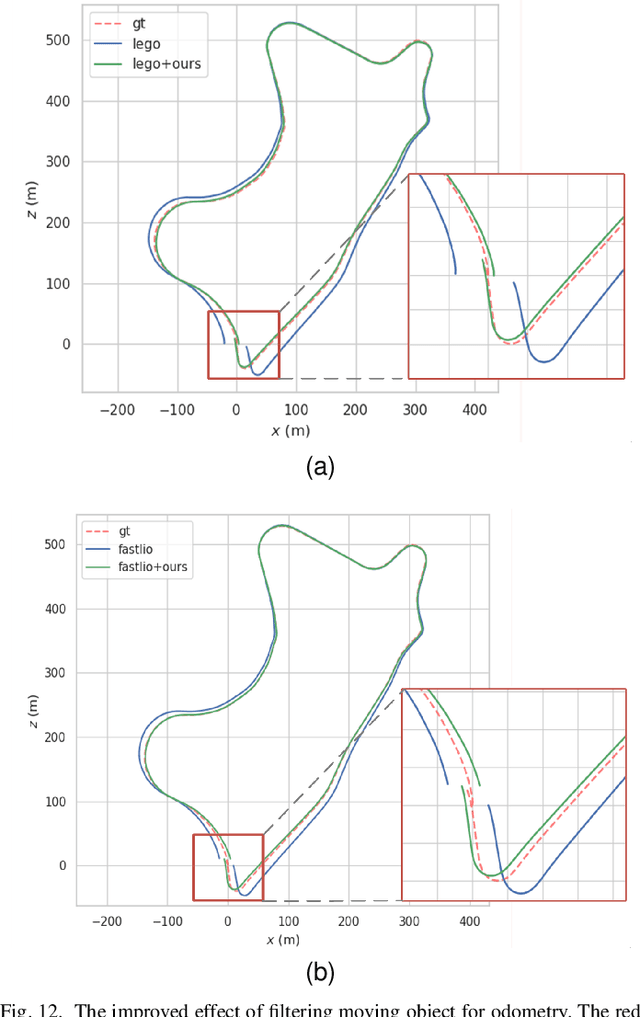

Abstract:For SLAM systems in robotics and autonomous driving, the accuracy of front-end odometry and back-end loop-closure detection determines the performance of the whole intelligent system. However, LiDAR SLAM can be disturbed by moving objects in the current scene, resulting in drift errors and even loop-closure failure. Thus, the ability to detect and segment moving objects is essential for high-precision positioning and building a consistent map. In this paper, we address the problem of moving object segmentation from 3D LiDAR scans to improve the odometry and loop-closure accuracy of SLAM. We propose a novel 3D Sequential Moving-Object-Segmentation (3D-SeqMOS) method that can accurately segment the scene into moving and static objects, such as moving and static cars. Unlike the existing projected-image methods, we process the raw 3D point cloud and build a 3D convolutional neural network for the MOS task. In addition, to make full use of the spatio-temporal information of the point cloud, we propose a point cloud residual mechanism using the spatial features of the current scan and the temporal features of previous residual scans. We also build a complete SLAM framework to verify the effectiveness and accuracy of 3D-SeqMOS. Experiments on the SemanticKITTI dataset show that our proposed 3D-SeqMOS method can effectively detect moving objects and improve the accuracy of LiDAR odometry and loop-closure detection. The test results show that 3D-SeqMOS outperforms the state-of-the-art method by 12.4%. We extend the proposed method to the SemanticKITTI: Moving Object Segmentation competition and achieve 2nd place on the leaderboard, showing its effectiveness.

A Review and Comparative Study of Close-Range Geometric Camera Calibration Tools

Jun 15, 2023

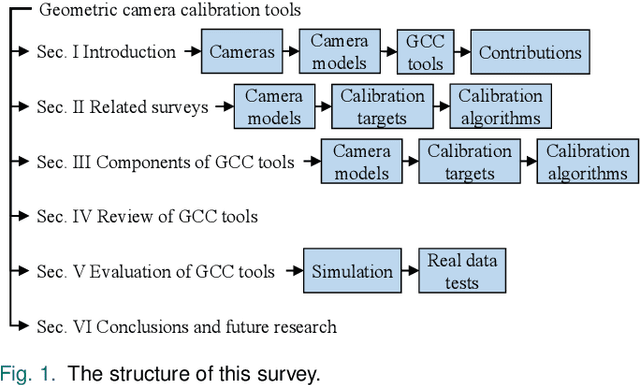

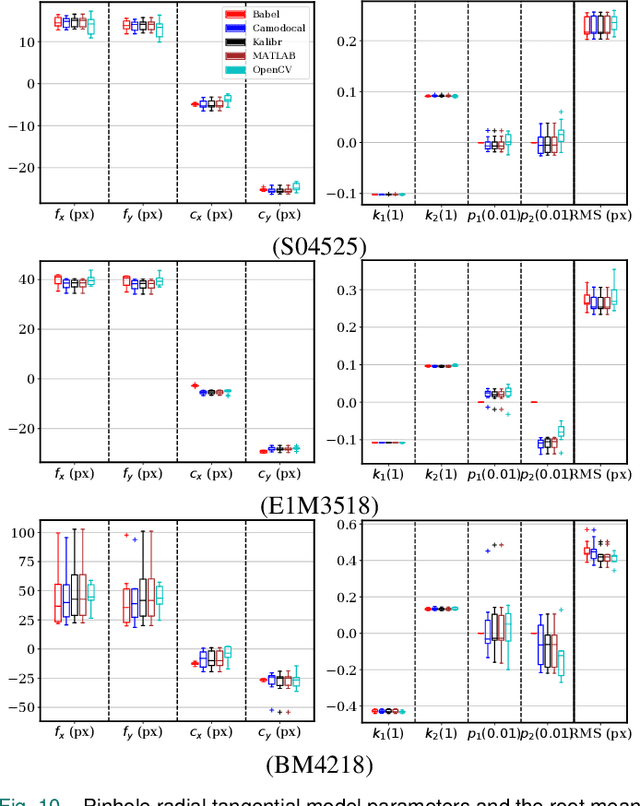

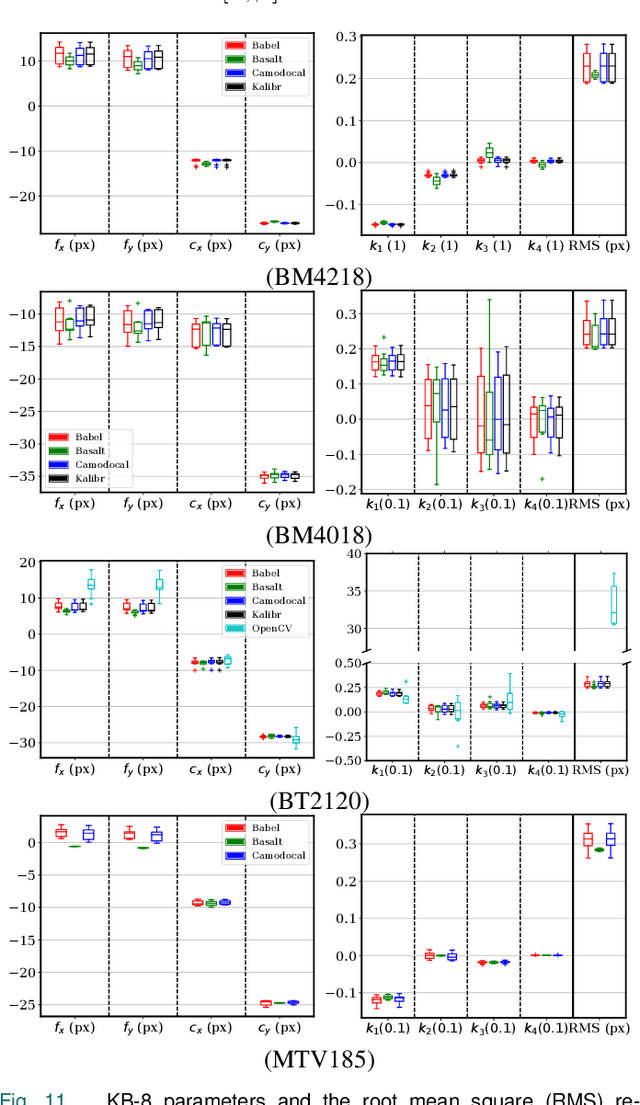

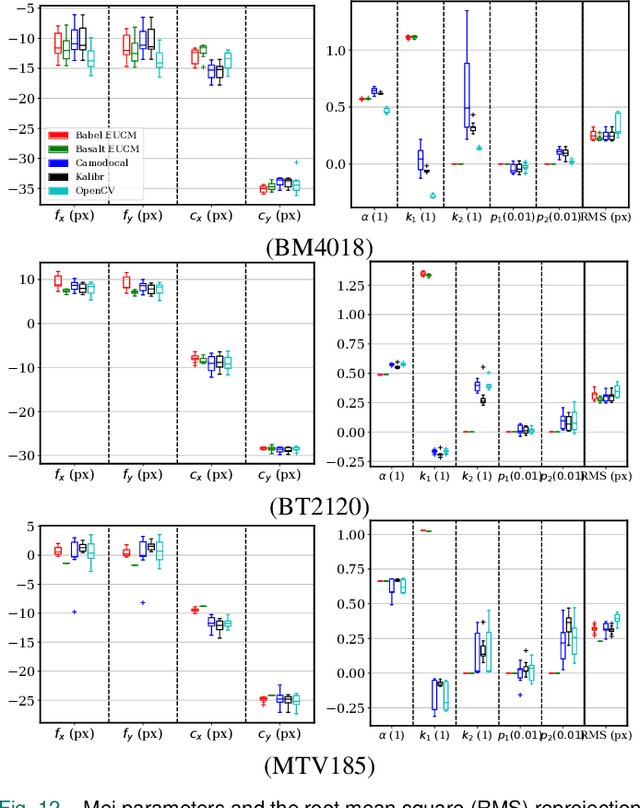

Abstract:In many camera-based applications, it is necessary to find the geometric relationship between incoming rays and image pixels, i.e., the projection model, through geometric camera calibration (GCC). Aiming to provide practical calibration guidelines, this work surveys and evaluates the existing GCC tools. The survey covers camera models, calibration targets, and algorithms used in these tools, highlighting their properties and the trends in GCC development. The evaluation compares six target-based GCC tools, namely, BabelCalib, Basalt, Camodocal, Kalibr, the MATLAB calibrator, and the OpenCV-based ROS calibrator, with simulated and real data for cameras with wide-angle and fisheye lenses described by three traditional projection models. These tests reveal the strengths and weaknesses of these camera models, as well as the repeatability of these GCC tools. In view of the survey and evaluation, future research directions of GCC are also discussed.

4D iRIOM: 4D Imaging Radar Inertial Odometry and Mapping

Apr 03, 2023

Abstract:Millimeter wave radar can measure distances, directions, and Doppler velocity for objects in harsh conditions such as fog. The 4D imaging radar with both vertical and horizontal data resembling an image can also measure objects' height. Previous studies have used 3D radars for ego-motion estimation. But few methods leveraged the rich data of imaging radars, and they usually omitted the mapping aspect, thus leading to inferior odometry accuracy. This paper presents a real-time imaging radar inertial odometry and mapping method, iRIOM, based on the submap concept. To deal with moving objects and multipath reflections, we use the graduated non-convexity method to robustly and efficiently estimate ego-velocity from a single scan. To measure the agreement between sparse non-repetitive radar scan points and submap points, the distribution-to-multi-distribution distance for matches is adopted. The ego-velocity and scan-to-submap matches are fused with the 6D inertial data by an iterative extended Kalman filter to get the platform's 3D position and orientation. A loop closure module is also developed to curb the odometry module's drift. To our knowledge, iRIOM based on the two modules is the first 4D radar inertial SLAM system. On our and third-party data, we show iRIOM's favorable odometry accuracy and mapping consistency against the FastLIO-SLAM and the EKFRIO. Also, the ablation study reveals the benefit of inertial data versus the constant velocity model, and scan-to-submap matching versus scan-to-scan matching.
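The single-scan ego-velocity step can be sketched as a robust least-squares problem. The sketch below assumes that a static point with unit direction `d` (radar frame) yields a Doppler reading of `-d · v`, and it uses graduated non-convexity with a Geman-McClure cost and the usual 1.4 annealing factor. The sign convention, the inlier threshold `c`, and the function names are assumptions made for illustration, not details taken from iRIOM.

```python
import numpy as np

def weighted_ls(D, doppler, w):
    """Solve min_v sum_i w_i * (doppler_i + d_i . v)^2."""
    sw = np.sqrt(w)
    v, *_ = np.linalg.lstsq(-D * sw[:, None], doppler * sw, rcond=None)
    return v

def gnc_ego_velocity(D, doppler, c=0.1, max_iter=100):
    """Estimate ego-velocity from unit directions D (N x 3) and Doppler values.

    c is the assumed inlier residual bound in m/s. Returns (velocity, weights).
    """
    w = np.ones(len(doppler))
    v = weighted_ls(D, doppler, w)
    r2 = (doppler + D @ v) ** 2
    # Start from a nearly convex surrogate and anneal towards the robust cost.
    mu = max(2.0 * r2.max() / c**2, 1.0)
    for _ in range(max_iter):
        w = (mu * c**2 / (r2 + mu * c**2)) ** 2   # Geman-McClure weights
        v = weighted_ls(D, doppler, w)
        r2 = (doppler + D @ v) ** 2
        if mu <= 1.0:
            break
        mu = max(mu / 1.4, 1.0)
    return v, w
```

Points on moving objects or from multipath reflections end up with weights near zero, so the returned weights can also serve as a rough static/dynamic mask for the scan.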

Observability Analysis and Keyframe-Based Filtering for Visual Inertial Odometry with Full Self-Calibration

Jan 13, 2022

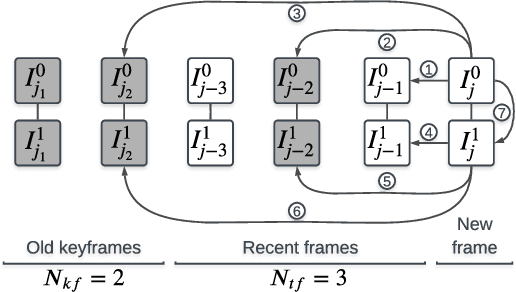

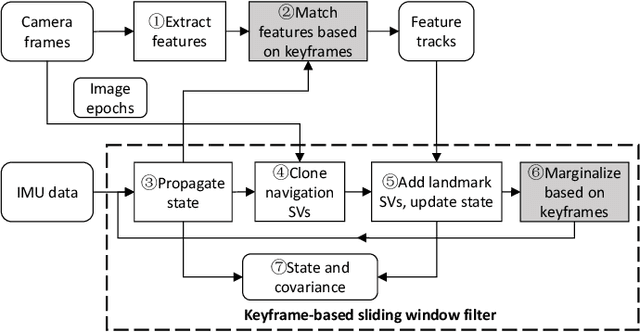

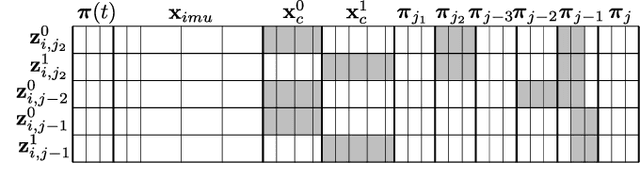

Abstract:Camera-IMU (Inertial Measurement Unit) sensor fusion has been extensively studied in recent decades. Numerous observability analyses and fusion schemes for motion estimation with self-calibration have been presented. However, it has been uncertain whether both camera and IMU intrinsic parameters are observable under general motion. To answer this question, we first prove that for a global shutter camera-IMU system, all intrinsic and extrinsic parameters are observable with an unknown landmark. Given this, time offset and readout time of a rolling shutter (RS) camera also prove to be observable. Next, to validate this analysis and to solve the drift issue of a structureless filter during standstills, we develop a Keyframe-based Sliding Window Filter (KSWF) for odometry and self-calibration, which works with a monocular RS camera or stereo RS cameras. Though the keyframe concept is widely used in vision-based sensor fusion, to our knowledge, KSWF is the first of its kind to support self-calibration. Our simulation and real data tests validated that it is possible to fully calibrate the camera-IMU system using observations of opportunistic landmarks under diverse motion. Real data tests confirmed previous allusions that keeping landmarks in the state vector can remedy the drift in standstill, and showed that the keyframe-based scheme is an alternative cure.

Continuous-Time Spatiotemporal Calibration of a Rolling Shutter Camera-IMU System

Aug 16, 2021

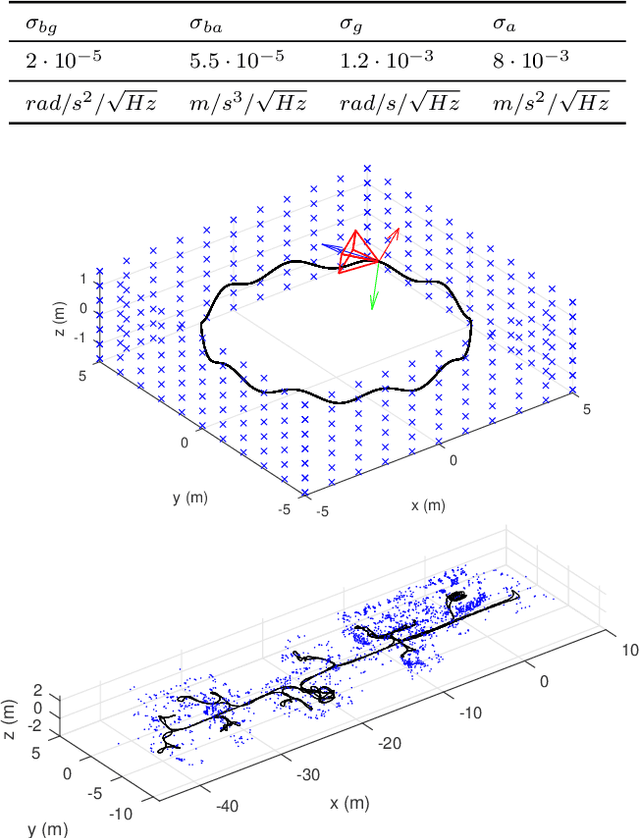

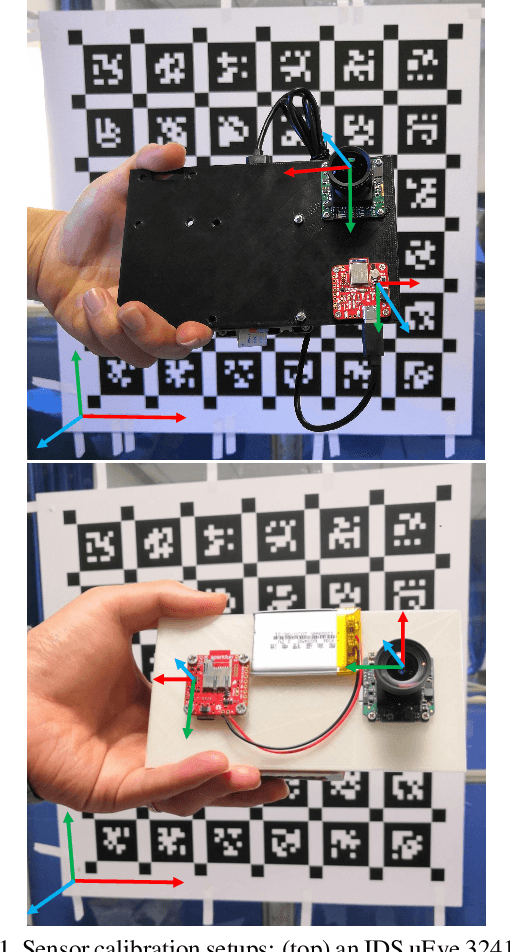

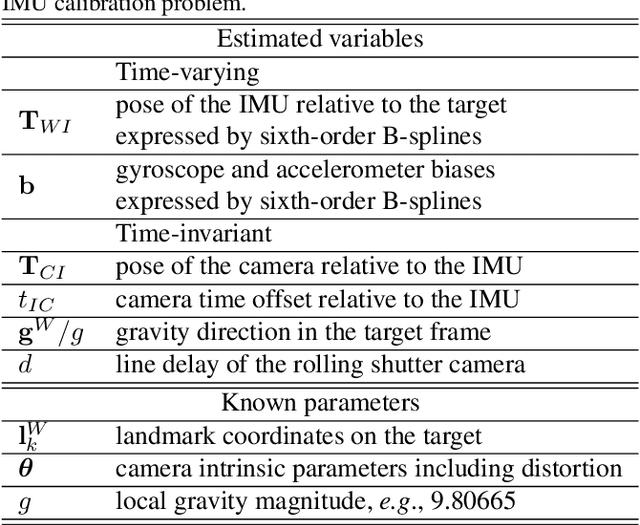

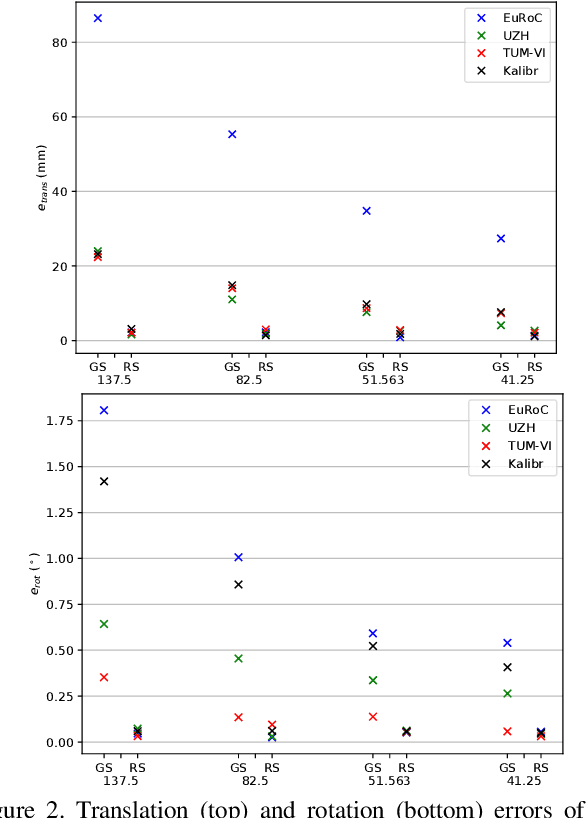

Abstract:The rolling shutter (RS) mechanism is widely used by consumer-grade cameras, which are essential parts of smartphones and autonomous vehicles. The RS effect leads to image distortion upon relative motion between a camera and the scene. This effect needs to be considered in video stabilization, structure from motion, and vision-aided odometry, for which recent studies have improved earlier global shutter (GS) methods by accounting for the RS effect. However, it is still unclear how the RS affects spatiotemporal calibration of the camera in a sensor assembly, which is crucial to good performance in the aforementioned applications. This work takes the camera-IMU system as an example and looks into the RS effect on its spatiotemporal calibration. To this end, we develop a calibration method for an RS-camera-IMU system with continuous-time B-splines by using a calibration target. Unlike in calibrating GS cameras, every observation of a landmark on the target has a unique camera pose fitted by continuous-time B-splines. With simulated data generated from four sets of public calibration data, we show that RS can noticeably affect the extrinsic parameters, causing errors of about 1° in orientation and 2 cm in translation with an RS setting as in common smartphone cameras. With real data collected by two industrial camera-IMU systems, we find that considering the RS effect gives more accurate and consistent spatiotemporal calibration. Moreover, our method also accurately calibrates the inter-line delay of the RS. The code for simulation and calibration is publicly available.
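The reason every landmark observation gets its own camera pose can be seen from a simple timing sketch. It assumes that image row 0 is exposed at the frame timestamp and that each following row is delayed by a constant inter-line delay. The linear interpolation in `position_at` is only a stand-in for the continuous-time B-spline, and both function names are hypothetical.

```python
import numpy as np

def row_timestamp(frame_time, row, line_delay, time_offset=0.0):
    """Capture time of an image row, assuming row 0 is exposed at frame_time.

    time_offset is the camera-to-IMU clock offset; line_delay is per row.
    """
    return frame_time + time_offset + row * line_delay

def position_at(t, knot_times, knot_positions):
    """Linearly interpolated 3D position at time t (stand-in for a B-spline)."""
    knot_positions = np.asarray(knot_positions, dtype=float)
    return np.array([np.interp(t, knot_times, knot_positions[:, k])
                     for k in range(3)])

# Two observations in the same frame, on rows 0 and 479, with a 30 us line delay.
t_top = row_timestamp(10.0, 0, 30e-6)
t_bottom = row_timestamp(10.0, 479, 30e-6)
knots_t = [10.0, 11.0]
knots_p = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]   # camera moving at 1 m/s along x
p_top = position_at(t_top, knots_t, knots_p)
p_bottom = position_at(t_bottom, knots_t, knots_p)
```

With these made-up numbers the camera moves about 1.4 cm between the first and last rows of a single frame, the same order of magnitude as the translation error quoted in the abstract. A global shutter model would assign both observations the same pose.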
