Yukai Lin

CyberLoc: Towards Accurate Long-term Visual Localization

Jan 06, 2023

Abstract: This technical report introduces CyberLoc, an image-based visual localization pipeline for robust and accurate long-term pose estimation under challenging conditions. The proposed method comprises four modules connected in sequence. First, a mapping module builds accurate 3D maps of the scene, one map per reference sequence when multiple reference sequences captured under different conditions exist. Second, a single-image localization pipeline (retrieval, matching, PnP) estimates 6-DoF camera poses for each query image, one for each 3D map. Third, a consensus set maximization module filters out outlier 6-DoF camera poses and outputs one 6-DoF camera pose per query. Finally, a robust pose refinement module optimizes the 6-DoF query poses, taking as input the candidate global 6-DoF camera poses and their corresponding global 2D-3D matches, sparse 2D-2D feature matches between consecutive query images, and SLAM poses of the query sequence. Experiments on the 4Seasons dataset show that our method achieves high accuracy and robustness. In particular, our approach won the localization challenge of the ECCV 2022 Workshop on Map-based Localization for Autonomous Driving (MLAD-ECCV2022).
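
The abstract does not spell out how the consensus set is formed, so the following is only a minimal sketch of one plausible reading: each candidate pose (one per 3D map) votes for the candidates that agree with it within a translation and rotation tolerance, and the candidate with the largest agreeing set is kept. The function names, the pose representation (rotation matrix plus camera position), and both thresholds are assumptions made for illustration, not values from the report.

```python
import math

def rotation_angle_deg(R_a, R_b):
    """Angle in degrees between two 3x3 rotation matrices given as nested lists."""
    # trace(R_a^T R_b) equals the sum of element-wise products of R_a and R_b.
    trace = sum(R_a[i][j] * R_b[i][j] for i in range(3) for j in range(3))
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_theta))

def select_consensus_pose(poses, max_trans_m=0.5, max_rot_deg=5.0):
    """Pick the candidate pose supported by the largest set of agreeing candidates.

    poses: list of (R, p) with R a 3x3 rotation and p the camera position (3 values).
    Thresholds are hypothetical defaults. Returns (best_index, inlier_indices);
    ties keep the earliest candidate, and an empty input returns (-1, []).
    """
    best_idx, best_inliers = -1, []
    for i, (R_i, p_i) in enumerate(poses):
        inliers = [
            j for j, (R_j, p_j) in enumerate(poses)
            if math.dist(p_i, p_j) <= max_trans_m
            and rotation_angle_deg(R_i, R_j) <= max_rot_deg
        ]
        if len(inliers) > len(best_inliers):
            best_idx, best_inliers = i, inliers
    return best_idx, best_inliers

# Usage: two candidates agree, the third is an outlier 10 m away.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
candidates = [
    (IDENTITY, (0.0, 0.0, 0.0)),
    (IDENTITY, (0.1, 0.0, 0.0)),
    (IDENTITY, (10.0, 0.0, 0.0)),
]
best, support = select_consensus_pose(candidates)
```

In this sketch the selected pose is simply one of the candidates; a real system might instead average or re-estimate the pose from the inlier set.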

Observability Analysis and Keyframe-Based Filtering for Visual Inertial Odometry with Full Self-Calibration

Jan 13, 2022

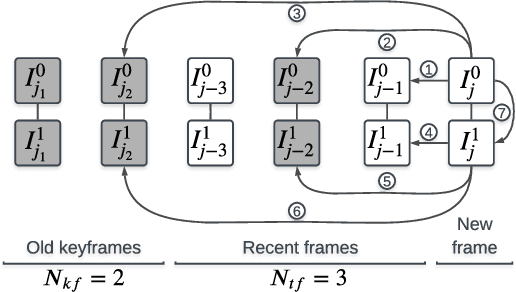

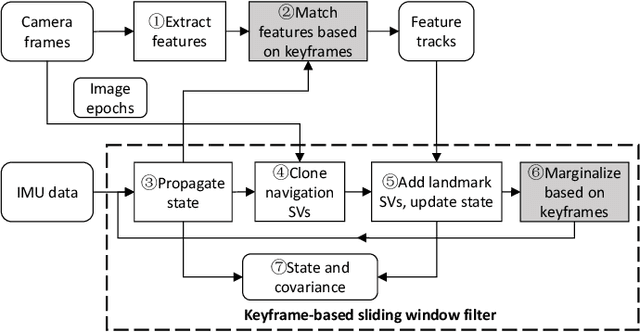

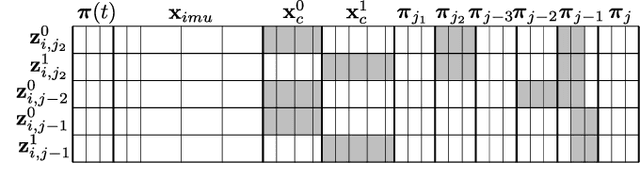

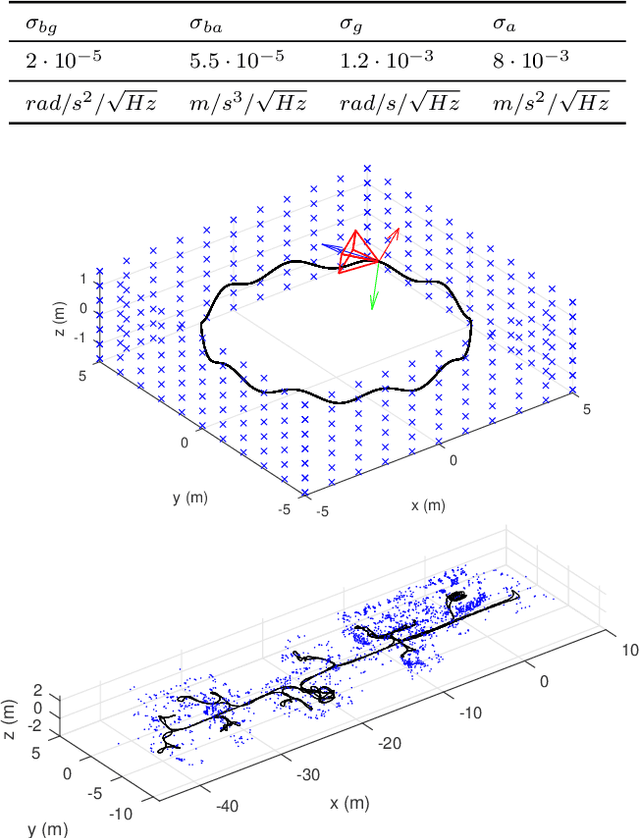

Abstract: Camera-IMU (Inertial Measurement Unit) sensor fusion has been extensively studied in recent decades. Numerous observability analyses and fusion schemes for motion estimation with self-calibration have been presented. However, it has been uncertain whether both camera and IMU intrinsic parameters are observable under general motion. To answer this question, we first prove that for a global shutter camera-IMU system, all intrinsic and extrinsic parameters are observable with an unknown landmark. Given this, the time offset and readout time of a rolling shutter (RS) camera also prove to be observable. Next, to validate this analysis and to solve the drift issue of a structureless filter during standstills, we develop a Keyframe-based Sliding Window Filter (KSWF) for odometry and self-calibration, which works with a monocular RS camera or stereo RS cameras. Though the keyframe concept is widely used in vision-based sensor fusion, to our knowledge, KSWF is the first of its kind to support self-calibration. Our simulation and real data tests validated that it is possible to fully calibrate the camera-IMU system using observations of opportunistic landmarks under diverse motion. Real data tests confirmed earlier suggestions that keeping landmarks in the state vector can remedy the drift during standstills, and showed that the keyframe-based scheme is an alternative cure.

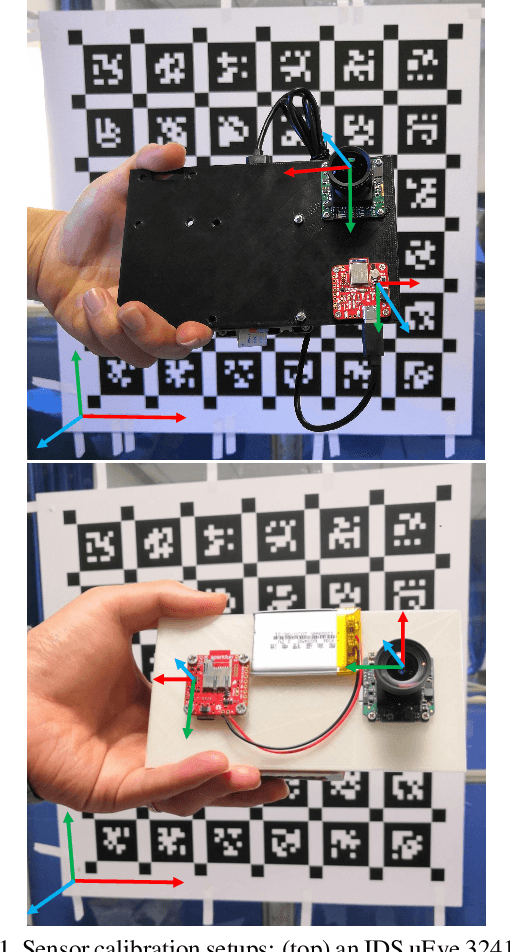

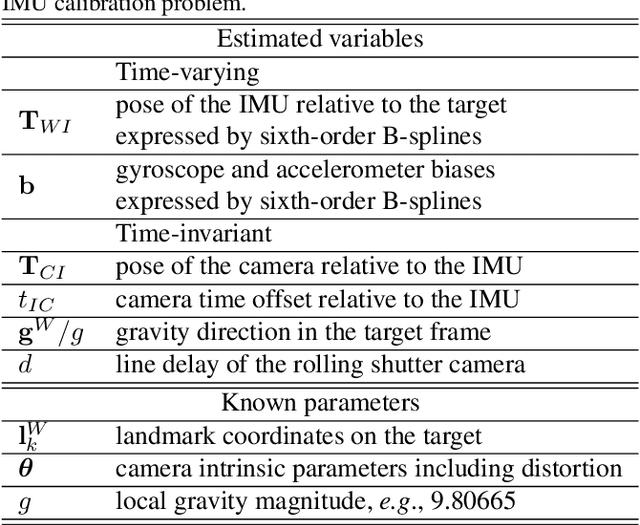

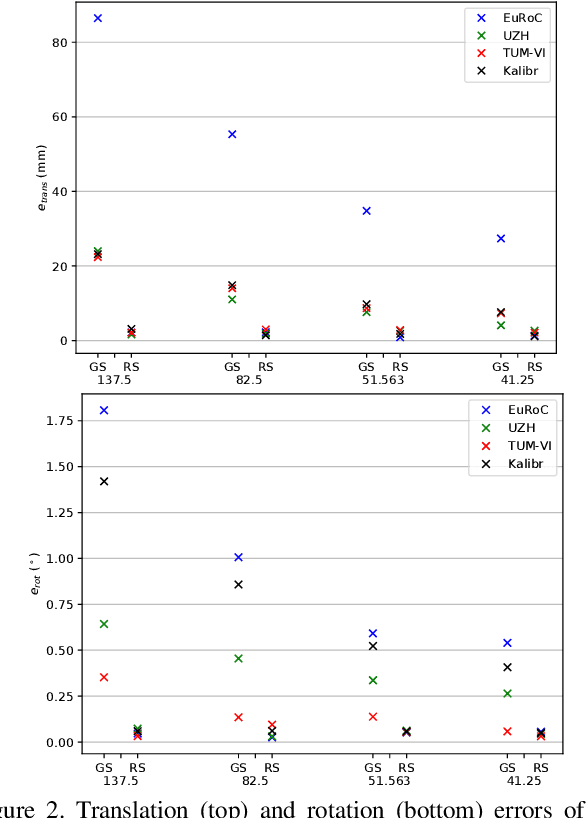

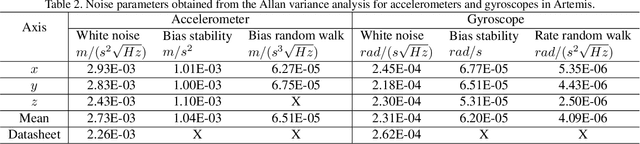

Continuous-Time Spatiotemporal Calibration of a Rolling Shutter Camera-IMU System

Aug 16, 2021

Abstract: The rolling shutter (RS) mechanism is widely used by consumer-grade cameras, which are essential parts in smartphones and autonomous vehicles. The RS effect leads to image distortion upon relative motion between a camera and the scene. This effect needs to be considered in video stabilization, structure from motion, and vision-aided odometry, for which recent studies have improved earlier global shutter (GS) methods by accounting for the RS effect. However, it is still unclear how the RS affects spatiotemporal calibration of the camera in a sensor assembly, which is crucial to good performance in the aforementioned applications. This work takes the camera-IMU system as an example and looks into the RS effect on its spatiotemporal calibration. To this end, we develop a calibration method for an RS-camera-IMU system with continuous-time B-splines by using a calibration target. Unlike in calibrating GS cameras, every observation of a landmark on the target has a unique camera pose fitted by continuous-time B-splines. With simulated data generated from four sets of public calibration data, we show that RS can noticeably affect the extrinsic parameters, causing errors of about 1$^\circ$ in orientation and 2 cm in translation with an RS setting as in common smartphone cameras. With real data collected by two industrial camera-IMU systems, we find that considering the RS effect gives more accurate and consistent spatiotemporal calibration. Moreover, our method also accurately calibrates the inter-line delay of the RS. The code for simulation and calibration is publicly available.
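
To make concrete why every landmark observation gets its own camera pose under a rolling shutter, here is a minimal sketch of a commonly used row-timing model: each image row is exposed one inter-line delay after the previous one, so an observation's capture time depends on its row. The function name, the convention that the frame timestamp refers to the first row, and the sample numbers are assumptions for illustration; the paper's own convention may differ.

```python
def row_capture_time(frame_stamp_s, row, line_delay_s, time_offset_s=0.0):
    """Capture time (in the IMU clock) of a feature observed on a given image row.

    Assumes the frame timestamp marks the first row (row 0), that rows are read
    out at a constant inter-line delay, and that time_offset_s maps the camera
    clock to the IMU clock. All of these are illustrative conventions.
    """
    return frame_stamp_s + time_offset_s + row * line_delay_s

# Usage with hypothetical numbers: 30 microseconds per line, 480-row image.
line_delay = 30e-6
t_top = row_capture_time(10.0, 0, line_delay)
t_bottom = row_capture_time(10.0, 479, line_delay)
readout_time = 480 * line_delay  # time to read out the whole frame
```

A continuous-time trajectory, such as the B-splines mentioned in the abstract, can then be evaluated at each per-row time instead of at a single per-frame time.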

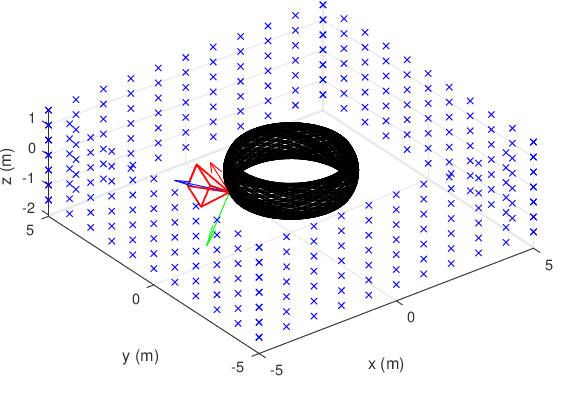

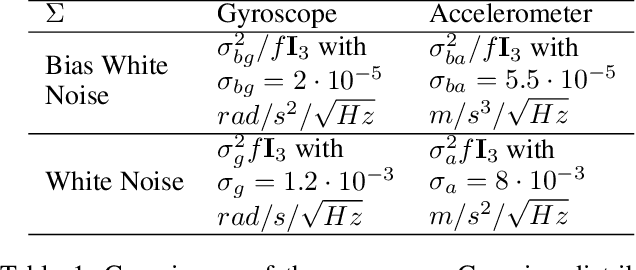

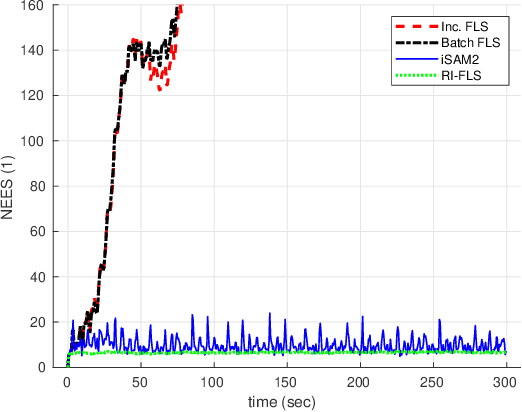

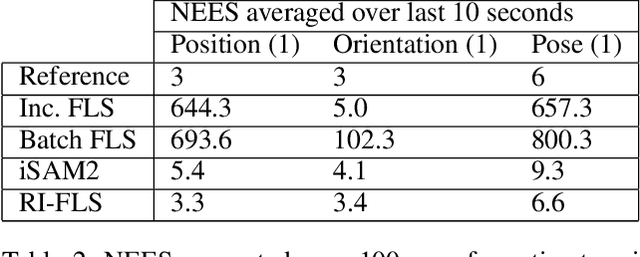

Consistent Right-Invariant Fixed-Lag Smoother with Application to Visual Inertial SLAM

Feb 17, 2021

Abstract: State estimation problems that use relative observations routinely arise in navigation of unmanned aerial vehicles, autonomous ground vehicles, etc., whose proper operation relies on accurate state estimates and reliable covariances. These problems have inherent unobservable directions. Traditional causal estimators, however, usually gain spurious information on the unobservable directions, leading to overconfident covariance inconsistent with the actual estimator errors. The consistency problem of fixed-lag smoothers (FLSs) has only been attacked by the first estimate Jacobian (FEJ) technique because of the complexity of analyzing their observability property. But the FEJ has several drawbacks hampering its wide adoption. To ensure the consistency of an FLS, this paper introduces the right invariant error formulation into the FLS framework. To our knowledge, we are the first to analyze the observability of an FLS with the right invariant error. Our main contributions are twofold. First, to bypass the complexity of analysis with the classic observability matrix, we show that observability analysis of FLSs can be done equivalently on the linearized system. Second, we prove that the inconsistency issue in the traditional FLS can be elegantly solved by the right invariant error formulation without artificially correcting Jacobians. By applying the proposed FLS to the monocular visual inertial simultaneous localization and mapping (SLAM) problem, we confirm that the method consistently estimates covariance similarly to a batch smoother in simulation and that our method achieves accuracy comparable to traditional FLSs on real data.

A Versatile Keyframe-Based Structureless Filter for Visual Inertial Odometry

Jan 02, 2021

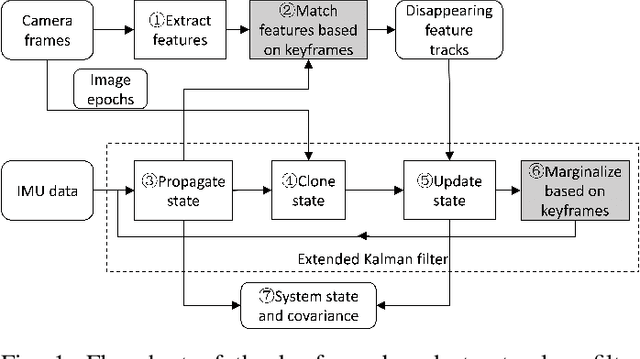

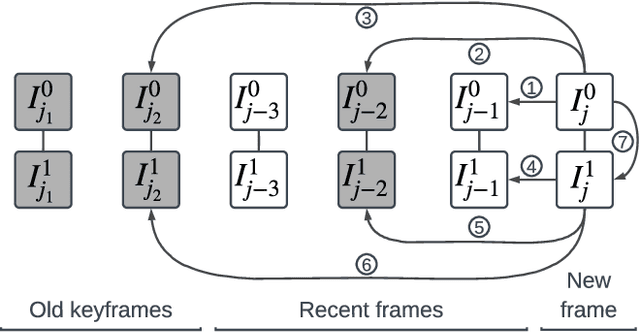

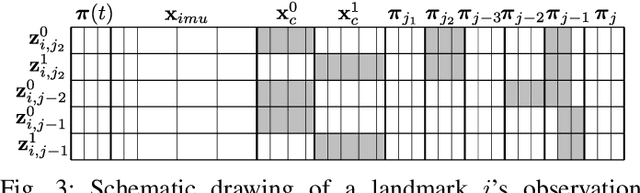

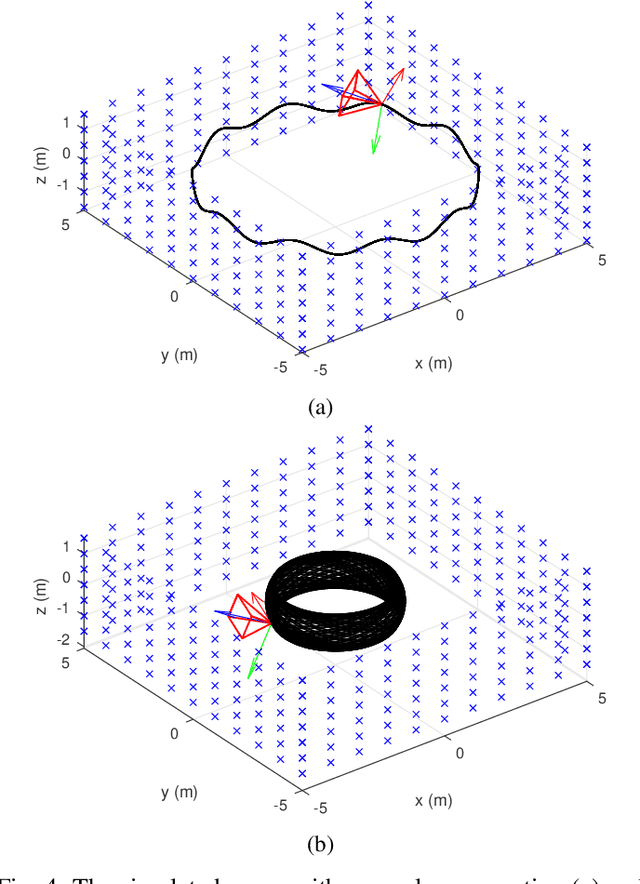

Abstract: Motion estimation by fusing data from at least a camera and an Inertial Measurement Unit (IMU) enables many applications in robotics. However, among the multitude of Visual Inertial Odometry (VIO) methods, few efficiently estimate device motion with consistent covariance, and calibrate sensor parameters online for handling data from consumer sensors. This paper addresses the gap with a Keyframe-based Structureless Filter (KSF). For efficiency, landmarks are not included in the filter's state vector. For robustness, KSF associates feature observations and manages state variables using the concept of keyframes. For flexibility, KSF supports anytime calibration of IMU systematic errors, as well as extrinsic, intrinsic, and temporal parameters of each camera. Estimator consistency and observability of sensor parameters were analyzed by simulation. Sensitivity to design options, e.g., feature matching method and camera count, was studied with the EuRoC benchmark. Sensor parameter estimation was evaluated on raw TUM VI sequences and smartphone data. Moreover, pose estimation accuracy was evaluated on EuRoC and TUM VI sequences versus recent VIO methods. These tests confirm that KSF reliably calibrates sensor parameters when the data contain adequate motion, and consistently estimates motion with accuracy rivaling recent VIO methods. Our implementation runs at 42 Hz with stereo camera images on a consumer laptop.

Infrastructure-based Multi-Camera Calibration using Radial Projections

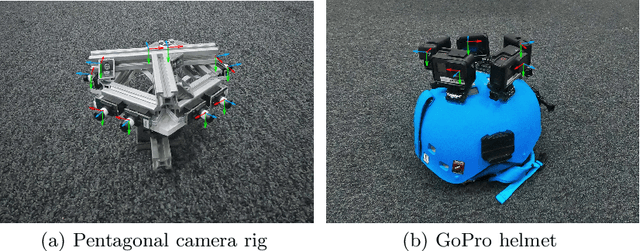

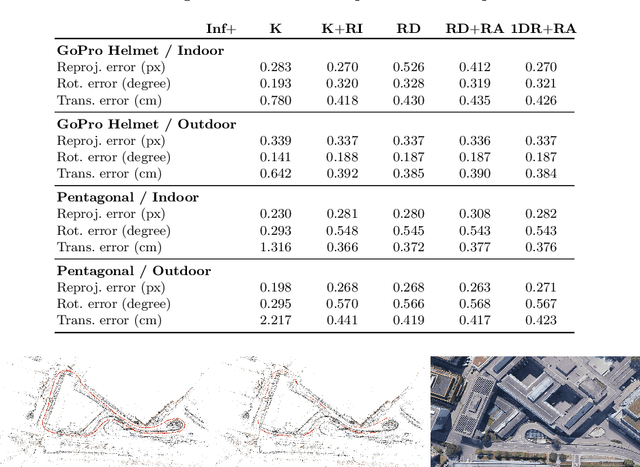

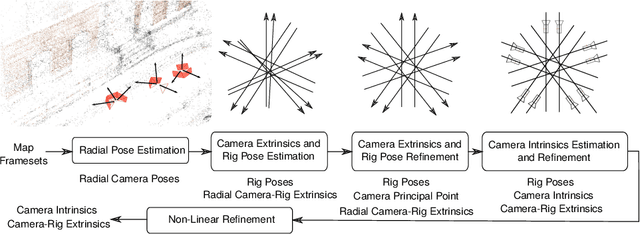

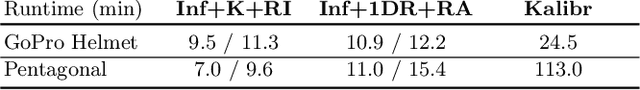

Jul 30, 2020

Abstract: Multi-camera systems are an important sensor platform for intelligent systems such as self-driving cars. Pattern-based calibration techniques can be used to calibrate the intrinsics of the cameras individually. However, extrinsic calibration of systems with little to no visual overlap between the cameras is a challenge. Given the camera intrinsics, infrastructure-based calibration techniques are able to estimate the extrinsics using 3D maps pre-built via SLAM or Structure-from-Motion. In this paper, we propose to fully calibrate a multi-camera system from scratch using an infrastructure-based approach. Assuming that the distortion is mainly radial, we introduce a two-stage approach. We first estimate the camera-rig extrinsics up to a single unknown translation component per camera. Next, we solve for both the intrinsic parameters and the missing translation components. Extensive experiments on multiple indoor and outdoor scenes with multiple multi-camera systems show that our calibration method achieves high accuracy and robustness. In particular, our approach is more robust than the naive approach of first estimating intrinsic parameters and pose per camera before refining the extrinsic parameters of the system. The implementation is available at https://github.com/youkely/InfrasCal.
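
The abstract does not give the formulation behind the first stage, so the sketch below uses the classic radial alignment constraint as an assumed stand-in to show why purely radial distortion leaves one translation component undetermined. If distortion only scales an image point along the ray from the distortion center, the observed direction from the center stays parallel to the first two coordinates of the point in the camera frame. The constraint therefore involves neither the focal length, the distortion coefficients, nor the forward translation t_z. The function name and argument layout are hypothetical.

```python
def radial_alignment_residual(R, t_xy, X, x_img, center):
    """Cross-product residual of the radial alignment constraint.

    R: 3x3 rotation (nested lists), t_xy: first two translation components,
    X: 3D map point, x_img: observed pixel, center: assumed distortion center.
    The residual is zero when the observation is consistent with the pose,
    whatever the focal length, radial distortion, or t_z may be.
    """
    # First two coordinates of the point in the camera frame (t_z is never used).
    px = sum(R[0][k] * X[k] for k in range(3)) + t_xy[0]
    py = sum(R[1][k] * X[k] for k in range(3)) + t_xy[1]
    # Observed direction from the distortion center.
    u = x_img[0] - center[0]
    v = x_img[1] - center[1]
    return u * py - v * px

# Usage: a pinhole projection (hypothetical focal length 100 px) of X = (1, 2, 5),
# then the same observation shrunk radially by 10 percent to mimic distortion.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
center = (320.0, 240.0)
undistorted = (340.0, 280.0)
distorted = (338.0, 276.0)
r_undistorted = radial_alignment_residual(IDENTITY, (0.0, 0.0), (1.0, 2.0, 5.0), undistorted, center)
r_distorted = radial_alignment_residual(IDENTITY, (0.0, 0.0), (1.0, 2.0, 5.0), distorted, center)
```

Both residuals vanish in this example, which illustrates why a second stage is needed to recover the intrinsics and the remaining translation component.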

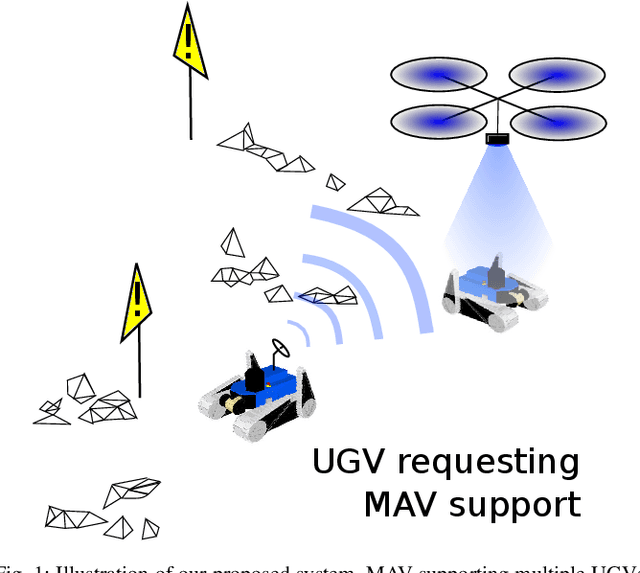

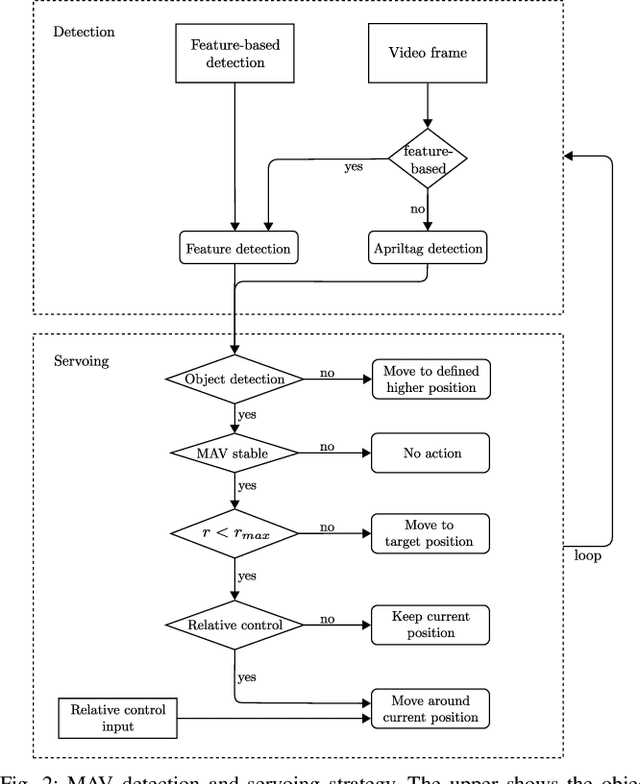

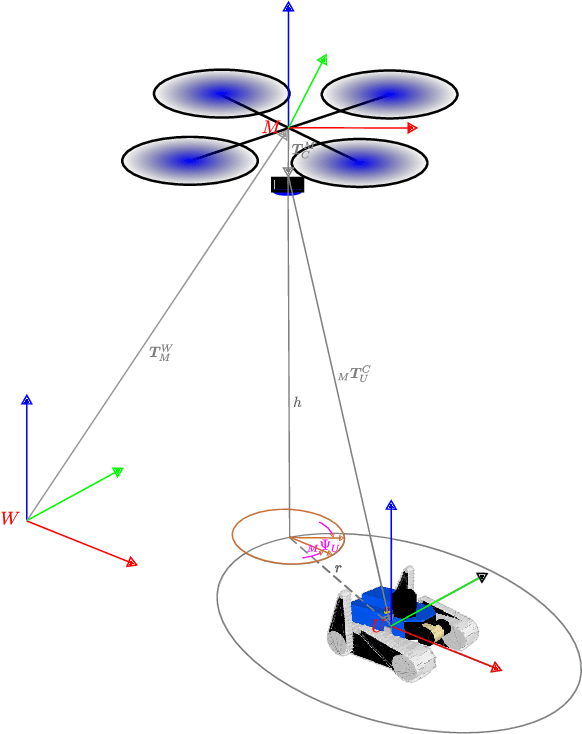

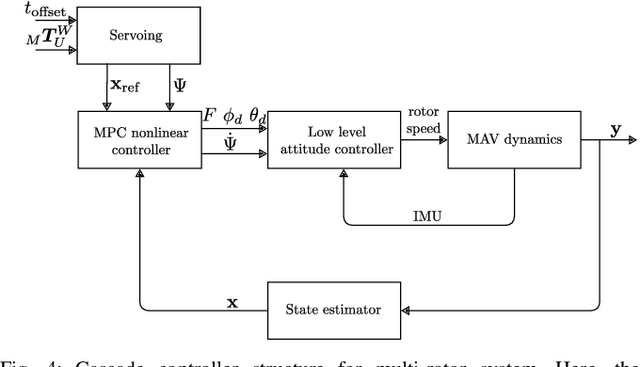

Aerial-Ground collaborative sensing: Third-Person view for teleoperation

Jul 09, 2018

Abstract: Rapid deployment and operation are key requirements in time-critical applications, such as Search and Rescue (SaR). Efficiently teleoperated ground robots can support first responders in such situations. However, first-person view teleoperation is sub-optimal in difficult terrain, while a third-person perspective can drastically increase teleoperation performance. Here, we propose a Micro Aerial Vehicle (MAV)-based system that can autonomously provide a third-person perspective to ground robots. While our approach is based on local visual servoing, it further leverages the global localization of several ground robots to seamlessly transfer between these ground robots in GPS-denied environments. Thus, one MAV can support multiple ground robots on demand. Furthermore, our system enables different visual detection regimes, enhanced operability, and return-home functionality. We evaluate our system in real-world SaR scenarios.
