Charles Toth

Lightweight Road Environment Segmentation using Vector Quantization

Apr 19, 2025

Abstract: Road environment segmentation plays a significant role in autonomous driving. Numerous works based on Fully Convolutional Networks (FCNs) and Transformer architectures have been proposed to leverage local and global contextual learning for efficient and accurate semantic segmentation. In both architectures, the encoder often relies heavily on extracting continuous representations from the image, which limits the ability to represent meaningful discrete information. To address this limitation, we propose segmentation of the autonomous driving environment using vector quantization. Vector quantization offers three primary advantages for road environment segmentation. (1) Each continuous feature from the encoder is mapped to a discrete vector from the codebook, helping the model discover distinct features more easily than with complex continuous features. (2) Since the discrete features act as compressed versions of the encoder's continuous features, they also compress noise and outliers, which benefits the segmentation task. (3) Vector quantization encourages the latent space to form coarse clusters of continuous features, forcing the model to group similar features and making the learned representations more structured for the decoding process. In this work, we combine vector quantization with the lightweight image segmentation model MobileUNETR, which also serves as the baseline for comparison, to demonstrate the efficiency of the approach. In experiments, the resulting model achieves 77.0% mIoU on Cityscapes, outperforming the baseline by 2.9% without increasing the model's initial size or complexity.
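The codebook lookup described in point (1) can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the function name `quantize`, the toy codebook, and the use of squared Euclidean distance are assumptions chosen for illustration, and the training of the codebook is left out.

```python
import numpy as np

def quantize(features, codebook):
    """Map each continuous feature vector to its nearest codebook entry.

    features: array of shape (N, D), hypothetical encoder outputs.
    codebook: array of shape (K, D), hypothetical discrete code vectors.
    Returns the quantized features (N, D) and the chosen code indices (N,).
    """
    # Pairwise squared Euclidean distances via broadcasting: shape (N, K).
    diffs = features[:, None, :] - codebook[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)
    # Pick the closest code for every feature.
    indices = np.argmin(dists, axis=1)
    return codebook[indices], indices

# Toy data (assumed values, not from the paper).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]])
features = np.array([[0.1, -0.2], [0.9, 1.2], [3.5, 0.3], [1.1, 0.8]])

quantized, indices = quantize(features, codebook)
print(indices)    # code index chosen for each feature
print(quantized)  # features replaced by their nearest code vectors
```

In this toy example the second and fourth features land on the same code, which is the grouping effect that point (3) refers to.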

UAS Visual Navigation in Large and Unseen Environments via a Meta Agent

Mar 20, 2025

Abstract: The aim of this work is to develop an approach that enables an Unmanned Aerial System (UAS) to efficiently learn to navigate in large-scale urban environments and to transfer its acquired expertise to novel environments. To achieve this, we propose a meta-curriculum training scheme. First, meta-training allows the agent to learn a master policy that generalizes across tasks. The resulting model is then fine-tuned on the downstream tasks. We organize the training curriculum hierarchically so that the agent is guided from coarse to fine towards the target task. In addition, we introduce Incremental Self-Adaptive Reinforcement learning (ISAR), an algorithm that combines the ideas of incremental learning and meta-reinforcement learning (MRL). In contrast to traditional reinforcement learning (RL), which focuses on acquiring a policy for a specific task, MRL aims to learn a policy that transfers quickly to novel tasks. However, the MRL training process is time-consuming, whereas our proposed ISAR algorithm achieves faster convergence than the conventional MRL algorithm. We evaluate the proposed methodologies in simulated environments and demonstrate that using this training philosophy in conjunction with the ISAR algorithm significantly improves the convergence speed for navigation in large-scale cities and the adaptation proficiency in novel environments.

Snail-Radar: A large-scale diverse dataset for the evaluation of 4D-radar-based SLAM systems

Jul 16, 2024

Abstract: 4D radars are increasingly favored for odometry and mapping of autonomous systems due to their robustness in harsh weather and dynamic environments. Existing datasets, however, often cover limited areas and are typically captured using a single platform. To address this gap, we present a diverse large-scale dataset specifically designed for 4D radar-based localization and mapping. This dataset was gathered using three different platforms: a handheld device, an e-bike, and an SUV, under a variety of environmental conditions, including clear days, nighttime, and heavy rain. The data collection occurred from September 2023 to February 2024, encompassing diverse settings such as roads in a vegetated campus and tunnels on highways. Each route was traversed multiple times to facilitate place recognition evaluations. The sensor suite included a 3D lidar, 4D radars, stereo cameras, consumer-grade IMUs, and a GNSS/INS system. Sensor data packets were synchronized to GNSS time using a two-step process: a convex hull algorithm was applied to smooth host time jitter, and then odometry and correlation algorithms were used to correct constant time offsets. Extrinsic calibration between sensors was achieved through manual measurements and subsequent nonlinear optimization. The reference motion for the platforms was generated by registering lidar scans to a terrestrial laser scanner (TLS) point cloud map using a lidar inertial odometry (LIO) method in localization mode. Additionally, a data reversion technique was introduced to enable backward LIO processing. We believe this dataset will boost research in radar-based point cloud registration, odometry, mapping, and place recognition.
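The abstract does not detail how the constant time offsets were found, so the following is only a generic sketch of the correlation idea: if two sensors observe the same motion signal at a common sampling rate, the lag that maximizes their cross-correlation estimates the constant delay between them. The function name `estimate_delay`, the synthetic signal, and the 7-sample shift are assumptions for illustration and are not taken from the dataset.

```python
import numpy as np

def estimate_delay(reference, delayed):
    """Estimate how many samples `delayed` lags behind `reference`.

    Both inputs are 1-D arrays sampled at the same rate (an assumption).
    A positive result means `delayed` is a later copy of `reference`.
    """
    ref = reference - np.mean(reference)
    sig = delayed - np.mean(delayed)
    # Full cross-correlation; entry k + len(ref) - 1 holds lag k.
    corr = np.correlate(sig, ref, mode="full")
    return int(np.argmax(corr)) - (len(ref) - 1)

# Synthetic motion signal (assumed data), shifted by 7 samples.
rng = np.random.default_rng(0)
reference = rng.standard_normal(200)
delayed = np.roll(reference, 7)

delay_samples = estimate_delay(reference, delayed)
print(delay_samples)
```

Dividing the estimated delay by the sampling rate gives the offset in seconds. Real sensor streams would first need resampling to a common rate and a comparable quantity from each sensor, steps that this sketch omits.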

Observability Analysis and Keyframe-Based Filtering for Visual Inertial Odometry with Full Self-Calibration

Jan 13, 2022

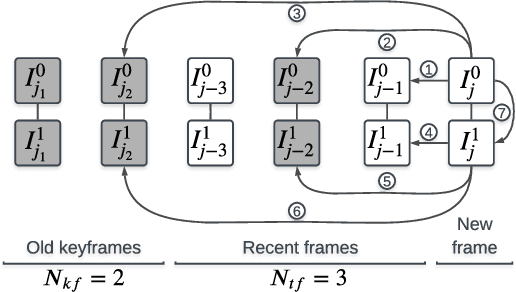

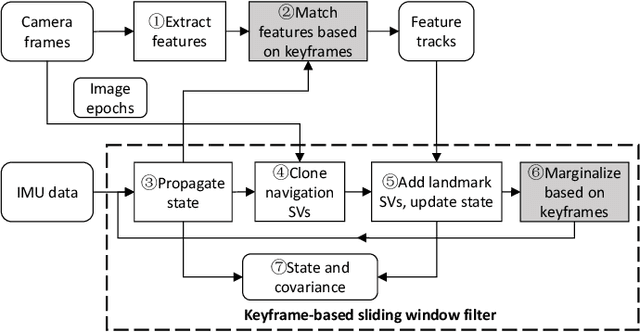

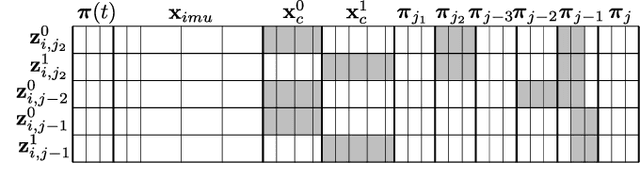

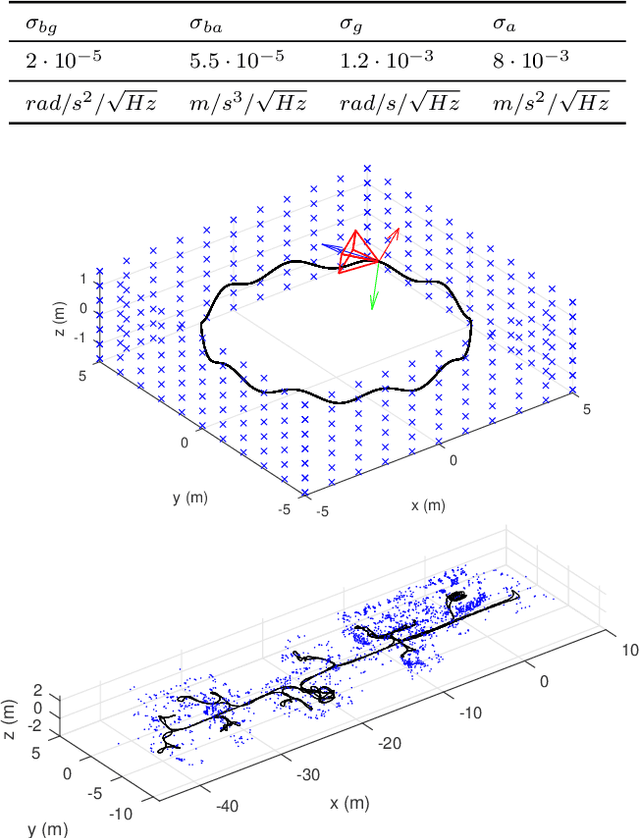

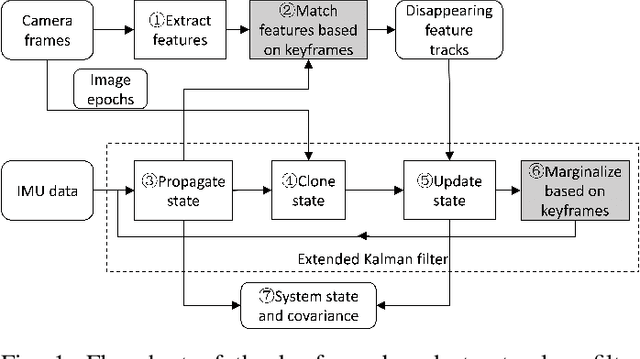

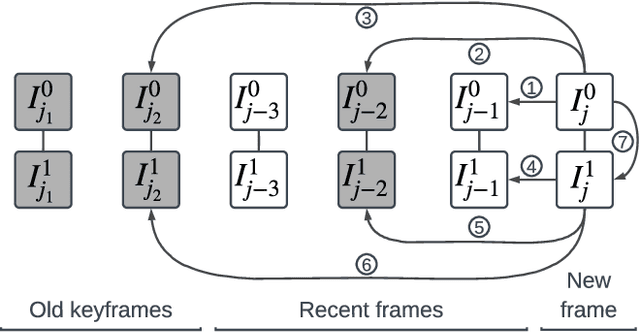

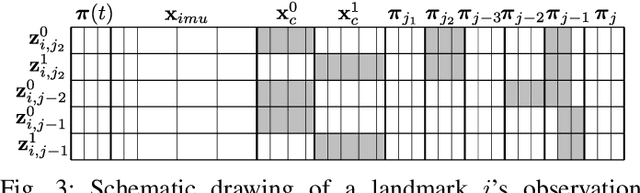

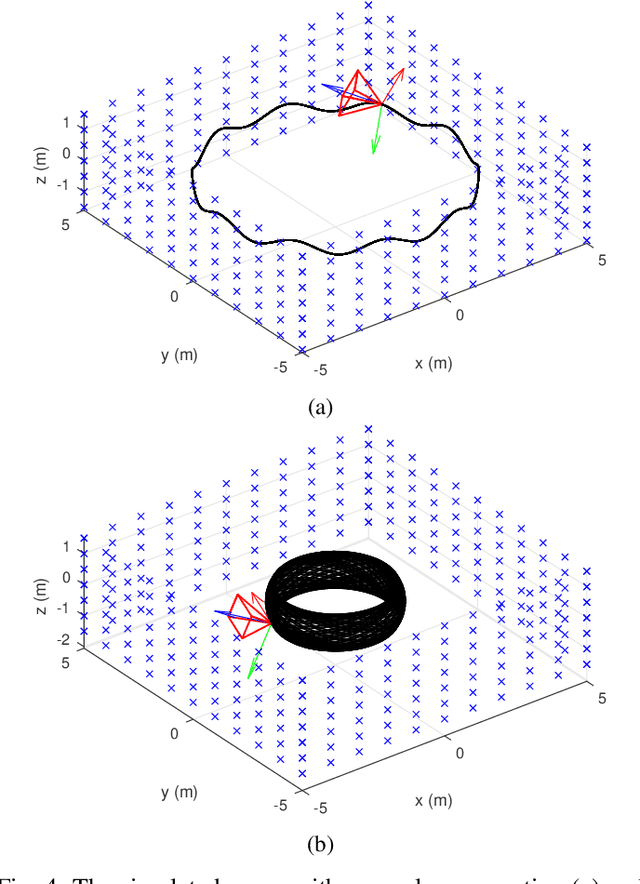

Abstract: Camera-IMU (Inertial Measurement Unit) sensor fusion has been extensively studied in recent decades. Numerous observability analyses and fusion schemes for motion estimation with self-calibration have been presented. However, it has been uncertain whether both camera and IMU intrinsic parameters are observable under general motion. To answer this question, we first prove that for a global shutter camera-IMU system, all intrinsic and extrinsic parameters are observable with an unknown landmark. Given this, the time offset and readout time of a rolling shutter (RS) camera also prove to be observable. Next, to validate this analysis and to solve the drift issue of a structureless filter during standstills, we develop a Keyframe-based Sliding Window Filter (KSWF) for odometry and self-calibration, which works with a monocular RS camera or stereo RS cameras. Though the keyframe concept is widely used in vision-based sensor fusion, to our knowledge, KSWF is the first of its kind to support self-calibration. Our simulation and real data tests validated that it is possible to fully calibrate the camera-IMU system using observations of opportunistic landmarks under diverse motion. Real data tests confirmed previous allusions that keeping landmarks in the state vector can remedy the drift in standstill, and showed that the keyframe-based scheme is an alternative cure.

A Versatile Keyframe-Based Structureless Filter for Visual Inertial Odometry

Jan 02, 2021

Abstract: Motion estimation by fusing data from at least a camera and an Inertial Measurement Unit (IMU) enables many applications in robotics. However, among the multitude of Visual Inertial Odometry (VIO) methods, few efficiently estimate device motion with consistent covariance and calibrate sensor parameters online for handling data from consumer sensors. This paper addresses the gap with a Keyframe-based Structureless Filter (KSF). For efficiency, landmarks are not included in the filter's state vector. For robustness, KSF associates feature observations and manages state variables using the concept of keyframes. For flexibility, KSF supports anytime calibration of IMU systematic errors, as well as extrinsic, intrinsic, and temporal parameters of each camera. Estimator consistency and observability of sensor parameters were analyzed by simulation. Sensitivity to design options, e.g., feature matching method and camera count, was studied with the EuRoC benchmark. Sensor parameter estimation was evaluated on raw TUM VI sequences and smartphone data. Moreover, pose estimation accuracy was evaluated on EuRoC and TUM VI sequences versus recent VIO methods. These tests confirm that KSF reliably calibrates sensor parameters when the data contain adequate motion, and consistently estimates motion with accuracy rivaling recent VIO methods. Our implementation runs at 42 Hz with stereo camera images on a consumer laptop.
